After a (very) long period of reading up, I am building a new NAS to replace my 15-year-old and quite reliable Ubuntu server. The main items have arrived and I am testing the various aspects of TrueNAS that I intend to use.

The purpose of the system is documents and media for family and friends, VMs for me to build things and experiment with, and maybe some more apps that prove to be useful (like Passbolt).

The system consists of:

- case: Jonsbo N5

- motherboard: Supermicro H12SSL-NT

- processor: AMD EPYC 7642 (48 cores)

- cooler: Arctic Freezer 4U-M

- memory: 8x Samsung M393A8G40AB2-CWE 64GB

- power supply: Seasonic Prime TX 850

- boot pool: 2x Kingston KC3000 PCIe 4.0 NVMe M.2 SSD 512GB (mirror)

- data pool: 2x Asus Hyper M.2 x16 Gen 4 with 8x WD Black SN850X 2TB (RAIDZ2)

- backup pool: 2x Seagate HDD 3.5" EXOS X16 16TB (mirror)

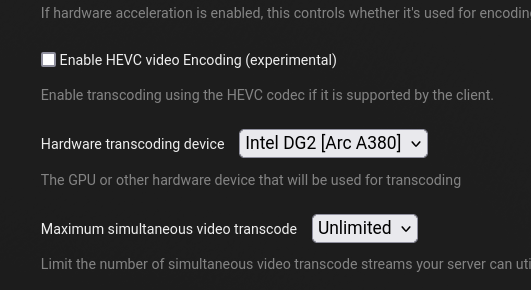

- apps GPU: Sparkle Intel Arc A380 ELF 6GB

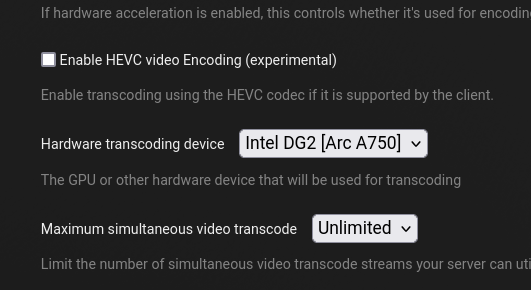

- VM GPU: Sparkle Intel Arc A750 ROC OC 8GB

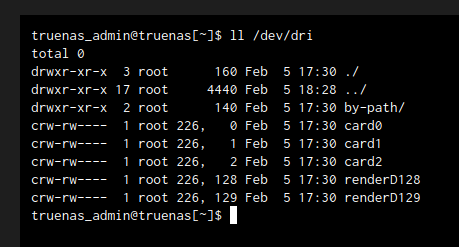

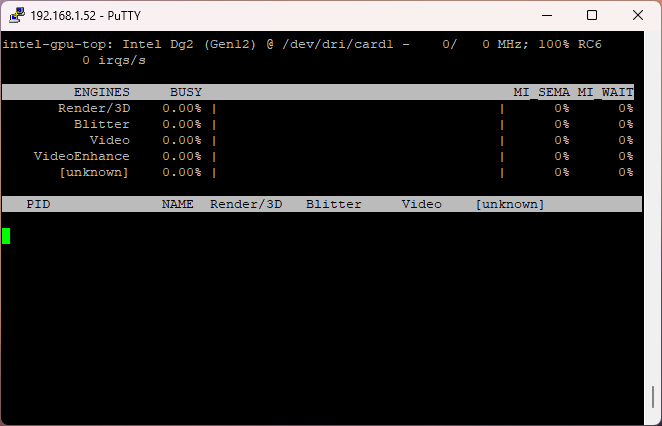

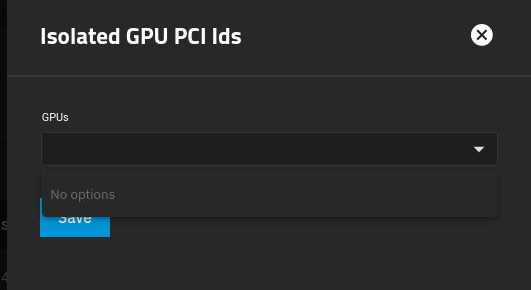

Nextcloud is running and I have yet to try Plex, but I am currently stuck on the two GPUs. Both appear in the output of lspci | grep VGA, but I cannot find them in the GUI.

43:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family (rev 41)

c3:00.0 VGA compatible controller: Intel Corporation DG2 [Arc A750] (rev 08)

c7:00.0 VGA compatible controller: Intel Corporation DG2 [Arc A380] (rev 05)
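
One way to narrow this down is to check whether the kernel has bound a driver to each card at all. The following is a minimal sketch, assuming the standard Linux sysfs layout and PCI domain 0000 for the two addresses listed above. The helper name gpu_driver and its optional second argument are hypothetical, added only for illustration and testing.

```shell
#!/bin/sh
# Print the kernel driver bound to a PCI device, or "none" if no driver claimed it.
# $1: full PCI address, e.g. 0000:c3:00.0 (domain 0000 is an assumption)
# $2: sysfs root, defaults to /sys (hypothetical parameter, only there for testing)
gpu_driver() {
    dev="${2:-/sys}/bus/pci/devices/$1"
    if [ -e "$dev/driver" ]; then
        # "driver" is a symlink to the driver directory; its last component is the name
        basename "$(readlink -f "$dev/driver")"
    else
        echo "none"
    fi
}

# The two Arc cards from the lspci output above
for addr in 0000:c3:00.0 0000:c7:00.0; do
    echo "$addr: $(gpu_driver "$addr")"
done
```

If a card reports none, no kernel driver has claimed it. If it reports a driver such as i915, xe, or vfio-pci, the kernel side is probably fine and the issue is more likely in how the GUI lists or isolates the devices.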

The board's BMC also shows nothing under GPU, but since I am a bit disappointed by this BMC's functionality, that may just be how it is.

I know that quite a few people successfully use an Intel Arc GPU, so I hope the solution is fairly trivial.

Any help is appreciated.