Hi Alejandro,

I’m going to point out a few things to make sure you end up with the best setup. For a “normal” NAS running any NAS software, the components you listed are a bit unbalanced, but your specific requirements might be an edge case. With a couple of changes this hardware can work, as long as you are sure of your long-term requirements. I put a lot of emphasis on PCIe lanes below because they are critical for expansion.

The 7950X3D is a very powerful CPU, but it doesn’t have many PCIe lanes. Those lanes are what allow NVMe drives and expansion cards to communicate with the CPU. Server-grade CPUs (Xeon or EPYC) usually have many more lanes, which gives you more PCIe slots.

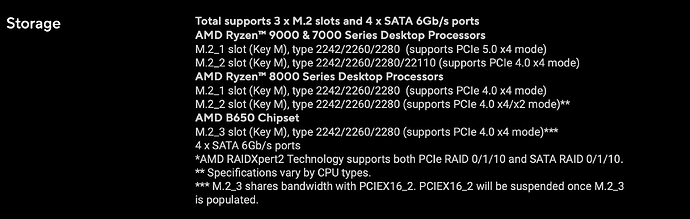

The number of slots on a motherboard can be misleading. The motherboard you listed has one x16 slot, usually used for a GPU, and a second slot that is x16 physically but only wired with 4 PCIe lanes. That means you could install a card that expects more lanes, and it could very easily be bottlenecked or not work as expected.

If you populate the third M.2 slot, it will disable the second PCIe slot (x16 size, 4 lanes). You will need both slots for cards (the HBA and the network card covered below), so only 2 NVMe drives are possible.
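To make the slot sharing a bit more concrete, here’s a small sketch of the trade-off. It’s a hypothetical model of the board as I’ve described it (slot names and the sharing table are my own labels, not from the manual, so check the real sharing rules there): once both PCIe slots hold cards, the third M.2 slot is no longer usable.

```python
# Hypothetical model of the board described above (check the manual for the
# real sharing rules): slot 2 is x16-size but wired x4, and it shares those
# lanes with the third M.2 slot.
PCIE_SLOTS = {"PCIe slot 1": 16, "PCIe slot 2": 4}  # slot name -> electrical lanes
M2_SLOTS = ["M.2 slot 1", "M.2 slot 2", "M.2 slot 3"]
SHARES_LANES_WITH = {"M.2 slot 3": "PCIe slot 2"}  # M.2 slot -> PCIe slot it disables


def plan(cards: list[str]) -> tuple[dict[str, str], list[str]]:
    """Place cards in PCIe slots in order, then list the M.2 slots still usable."""
    if len(cards) > len(PCIE_SLOTS):
        raise ValueError("more cards than PCIe slots")
    placed = dict(zip(PCIE_SLOTS, cards))
    # An M.2 slot is usable only if the PCIe slot it shares lanes with is empty.
    usable_m2 = [m2 for m2 in M2_SLOTS if SHARES_LANES_WITH.get(m2) not in placed]
    return placed, usable_m2


if __name__ == "__main__":
    for cards in (["HBA"], ["HBA", "10GbE NIC"]):
        placed, usable_m2 = plan(cards)
        for slot, card in placed.items():
            print(f"{slot} ({PCIE_SLOTS[slot]} lanes): {card}")
        print(f"-> {len(usable_m2)} NVMe drives possible\n")
```

With just the HBA you keep all three M.2 slots; add the network card and you’re down to two.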

For the cost and power usage of a 7950X3D to make sense in a NAS, it would usually need to be running some heavy workloads. I guess you chose it for a lot of CPU-intensive work. That could work, but it would lock you into a system that can’t take more PCIe cards (apart from the x1 slots, which don’t support much). You won’t be able to add a GPU, for example.

The RAM you listed is DDR4. That won’t work with the motherboard/CPU as they need DDR5.

If you need more PCIe lanes, bear in mind that server CPUs also tend to use registered memory, which is different from the unregistered DDR4 you listed. Workstation Xeon CPUs can use unregistered DDR4, but they usually have fewer PCIe lanes than server-specific CPUs. Think about any upgrade path you might want to take with the system that would require a PCIe card, so you can spec a system that can handle it. You will usually sacrifice a significant amount of single-core CPU speed with server CPUs compared with the 7950X3D, though.

The two NVMe drives don’t have much space, and if you use them as a mirrored boot drive you will realistically be limiting your upgrade options. I would look at a pair of low-capacity but reliable SATA SSDs for booting (attached to the motherboard SATA ports). The NVMe drives would then be available for a small, fast mirror (until you maybe replace them with larger ones), which would come in handy for Docker or virtualization.

The power supply has a higher rating than is usually required, but I guess it will handle the power spikes at boot, when all the drives spin up, very well.

The X540-T1 (I’m assuming that’s what it is; “BT1” might be a typo, or it might be a mezzanine card, which would certainly be an issue) is likely to be PCIe 2.0. Putting it in the PCIe 4.0 x4 (x16 physical) slot isn’t something I have tried. I’d suggest trying it: check that it negotiates a 10GbE connection and see whether you get acceptable performance in a test that saturates the link. Remember that some workloads push lots of data both ways, so you might hit a bottleneck there even if everything else works.
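Here’s a rough back-of-the-envelope check of why it might still be OK. A PCIe link trains to the lower generation and the narrower width of the card and slot, so an older card in that slot should run at PCIe 2.0 x4. The sketch assumes the card is PCIe 2.0 x8 (worth confirming on its spec sheet) and only accounts for line encoding, not packet overhead, so treat the result as an upper bound.

```python
# Raw PCIe signalling rate per lane (GT/s) and line-encoding efficiency.
# Gen 1/2 use 8b/10b encoding; Gen 3/4 use 128b/130b.
PCIE_GEN = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def negotiated_link(
    card_gen: int, card_lanes: int, slot_gen: int, slot_lanes: int
) -> tuple[int, int]:
    """A PCIe link trains to the lower generation and narrower width of the two ends."""
    return min(card_gen, slot_gen), min(card_lanes, slot_lanes)


def link_gbps(gen: int, lanes: int) -> float:
    """Bandwidth per direction in Gb/s, after encoding but before packet overhead."""
    rate, efficiency = PCIE_GEN[gen]
    return rate * efficiency * lanes


if __name__ == "__main__":
    # Assumption: the NIC is a PCIe 2.0 x8 card; the slot is PCIe 4.0 x4 electrically.
    gen, lanes = negotiated_link(card_gen=2, card_lanes=8, slot_gen=4, slot_lanes=4)
    bw = link_gbps(gen, lanes)
    print(f"PCIe {gen}.0 x{lanes}: {bw:.1f} Gb/s each way vs 10 Gb/s for one 10GbE port")
```

On paper that’s about 16 Gb/s in each direction against the 10 Gb/s a single 10GbE port needs each way. Real throughput is lower once packet overhead is included, which is why the saturation test is still worth doing.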

Moving on to the drives. I assume you are using the Server Rack 4U HSW4520. If so, the front USB 3 ports won’t interfere with the drives in the hot-swap bays. The 20 drives connect to 5 backplanes in the case, each handling 4 drives. Each backplane needs Molex power and has a data connector that must be attached to a PCIe card known as a host bus adapter (HBA). Ideally this shouldn’t be a RAID card, because TrueNAS does the job of RAID (and much more) itself and needs direct access to the drives. A RAID card would need to be in “IT mode” for TrueNAS to work. HBAs usually work with both SATA and SAS drives, but it’s best not to mix types on the same backplane. If you haven’t bought drives already, you definitely want to read up on, or watch videos about, TrueNAS storage options.

Choosing the right HBA is very important. You have the x16 slot for it, but it will need enough connections to address all 20 bays. HBAs come with internal ports, external ports or a mixture. You’ll want one with internal ports for 24 drives. It will have 6 ports, but you’ll only need 5 cables to connect to the 5 backplanes. You should check the connections in your case (I’m only assuming it has 5 based on what I found). It’s also vital to know the exact connector type: there are lots of them and they have very similar names and numbers.
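The port arithmetic is simple, but here it is spelled out. This sketch assumes the usual naming convention where a suffix like “24i” means 24 internal drive connections, grouped 4 per cable connector, and that each of your backplanes takes one cable for 4 drives. The function names are just illustrative.

```python
import math

DRIVES_PER_CONNECTOR = 4  # assumption: each internal connector carries 4 drives


def hba_ports(model_suffix: str) -> int:
    """Internal connectors implied by an 'NNi' suffix, e.g. '24i' -> 6."""
    if not model_suffix.endswith("i"):
        raise ValueError("expected an internal-port suffix such as '16i' or '24i'")
    return int(model_suffix[:-1]) // DRIVES_PER_CONNECTOR


def cables_needed(bays: int, drives_per_backplane: int = 4) -> int:
    """One cable per backplane, rounding up for a partly filled last backplane."""
    return math.ceil(bays / drives_per_backplane)


if __name__ == "__main__":
    need = cables_needed(20)
    for suffix in ("16i", "24i"):
        ports = hba_ports(suffix)
        verdict = "OK" if ports >= need else "too few"
        print(f"{suffix}: {ports} ports for {need} backplanes -> {verdict}")
```

A 16i card would leave one backplane unconnected, which is why you want a 24i card with one spare port.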

If you are connecting mechanical hard drives, there are bargains to be found on eBay for old LSI cards; the ones you want often have “24i” at the end of the model name. If you intend to hook up 20 SSDs, you shouldn’t be looking at the cheaper end of the used market, because SSDs need a newer card for best performance. That brings up cooling. Server cards need a lot of cooling, sometimes even when they aren’t being pushed hard. Make sure you have plenty of airflow through the case; the drives need it too.

It’s getting late here; hopefully there’s enough information above to point you in the right direction. I’ll check back tomorrow evening in case you have any questions.