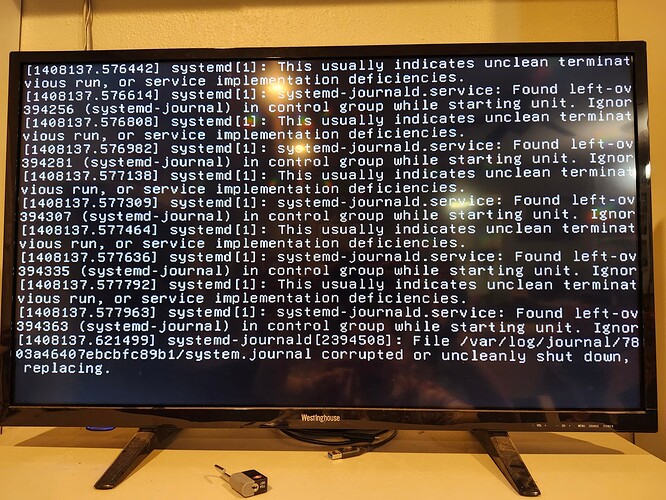

Tried to get a Virtual Machine working for the first time on my Scale machine, and the whole NAS ended up crashing with a “system.journal corrupted” error. This is after running plenty of apps and SMB shares on it for the past 6mos and never missing a beat. The only previous potential issues were one of the drives having a high temp alarm on SMART tests, and the boot pool drives being slightly different sizes. It’s possible I over-allocated the memory with the VM, but I fail to see how this would corrupt the journal… (doesn’t Debian have a swap partition / page files??) plus the VM wasn’t even booting so I don’t think it used any memory.

Any suggestions on how to recover?

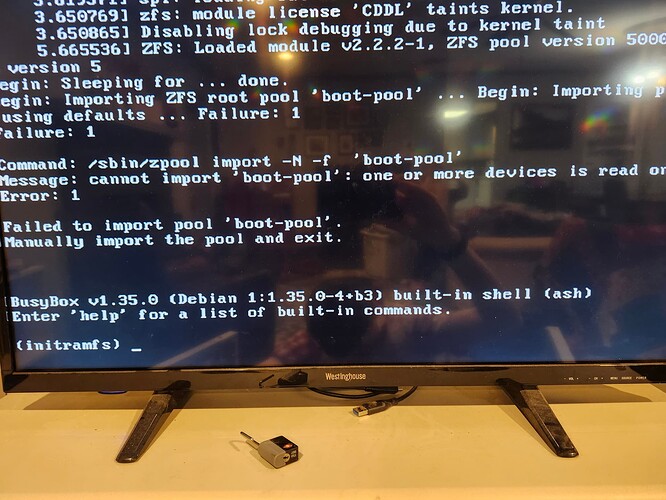

I have already tried rebooting; the machine now fails to boot from the regular boot pool. I have never gotten into using Grub… I guess I will now. BUT it’s not even giving me Grub, it’s giving me a BusyBox prompt, which seems even less helpful (see second picture).

I have mirrored drives for the system pool (and the data pool). Maybe one of those system drives is faulty. But my TrueNAS image is on a USB drive… so that is probably the first thing to replace to fix the boot issue.

Thanks in advance for any suggestions.

Nuke the boot drive and proceed with a clean installation, then upload the updated config backup you most definitely should have.

Assuming you mean the boot pool, mirroring it is kinda useless. If you require redundancy, please read “Highly Available Boot Pool Strategy” on the TrueNAS Community forum.

“Useless” is a very strong word… It does confer benefits, even if most UEFI implementations are too stupid/too hampered by the boot model/too unaware of ZFS to provide the seamless reliability one would expect from a native ZFS boot solution.

Agreed.

It prevents your user land from being wiped out by corruption.

I’m not sure if scale uses mirrored swap… but it does use swap on boot ssds… so maybe it does.

And finally, if your primary boot does fail, it’s just a matter of switching to the other.

And the failure would generally only impact you on a restart.

If you require redundancy, there is a proper way of having a redundant boot pool.

If you do not require redundancy, it’s better to just have a USB pen ready for a reinstall and the updated backup config, and to keep the second drive as a spare boot drive to use in case of failure.

Also, boot drive corruption is something I have never experienced in the few years I have been running TN. But hey, CORE doesn’t use GRUB.

It’s a bit more common with USB thumb drives.

But I’ve had a boot SSD die twice on me. Each time it was mirrored. Each time no unscheduled downtime.

One was actually in a macOS Server (not ZFS), but mirrored nonetheless.

Schedule maintenance, replace with new SSD. No unscheduled downtime.

Kinda ironic I am running one then.

I’ve burnt through 3 or 4 of them over the years. When one fails, you chuck it out, plug another in, and hit replace.

One failed on me a few weeks after a SCALE upgrade (which involves a big amount of churn).

In the end I actually rejigged things to get some SATA drives for the boot drives on that system, because SCALE is affected by boot drive performance more than CORE.

Yep, something I personally find kinda hard to digest… but we are going OT.

Exactly. It’s the difference between “d’oh, need to open the IPMI webGUI and fix this” and “f@#% the server needs to be pulled”. At home, it’s a pain, though rare enough that it’s not a big deal if using SSDs. At work, it’s the difference between fixing something in five minutes or having to coordinate remote hands, talk them through the process, hope they don’t cram in the tray upside down (true story - Supermicro, redesign your 2.5" trays to more sanely reject upside-down trays!) and then reinstall TrueNAS, upload the config, etc. Best case, a whole hour was lost. Typical case would be half a day before you’re up again.

I went through this process on purpose the other day to switch from a pair of USB thumb drives to Sata SSD Boot.

Had a tonne of issues with the X10 IPMI and getting SCALE’s networking up. Then realizing that I was missing some files in the root home dir, then getting those restored off the thumb drives.

When I was done I chucked in another SSD and mirrored it. Not going through that again if I can help it.

Scale writes more to the boot pool in various situations. That said, this case might be the near side of the bathtub curve (infant mortality). If TrueNAS is being used for important workloads, one should view the boot device(s) as a critical component of the NAS since failure modes for them can be bad and potentially take you out of production until you can get remote hands (which are sometimes all thumbs) on the server.

Will do, I appreciate the advice. I was thinking along these lines that it must be the boot drive.

I do have a fairly recent configuration backup.

This incident re-emphasizes how important it is to have that config backup, and how I should take one before changing any important parameters.

I do not have a mirrored boot pool, so I will keep that in mind for the future. I guess it’s no surprise if the USB thumb drive failed, since I’ve had it running for almost 4yrs… It is just odd that it failed while I was performing some significant operations on the system pool.

It was my understanding that the boot drive only gets written to upon updates, and all other config and operations take place on the system pool (or data pool, as the case may be)…