I think you have been given some good advice. My two cents:

How much capacity will the NAS need over the next 3 to 5 years? (I prefer to plan for 5 years, as HDDs typically last about that long; NVMe drives may last considerably longer, depending on usage.) Right now you are looking at about 38TB using the hardware you have (twelve 4TB NVMe drives in RAIDZ2). Will this capacity be enough for that long? I suspect not.

If you can get away with using eleven of the 4TB drives in a RAIDZ2 configuration, you can later double that by moving to 8TB drives over time, which brings you up to about 70TB using eleven NVMe drives.
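For anyone who wants to check the arithmetic, here is a rough sketch of how those numbers come about. It only computes the raw data capacity of a RAIDZ vdev (drives minus parity, times drive size); the function name `raidz_data_tb` is made up for this example. Real usable space lands a little lower once ZFS metadata and padding are accounted for, which is why the figures in this post are rounded down.

```shell
# Raw data capacity in TB, before ZFS overhead: (drives - parity) * drive size.
# raidz_data_tb is a hypothetical helper, not a TrueNAS or ZFS command.
raidz_data_tb() {
    drives="$1"
    parity="$2"
    size_tb="$3"
    echo $(( (drives - parity) * size_tb ))
}

raidz_data_tb 12 2 4   # twelve 4TB drives in RAIDZ2 -> 40
raidz_data_tb 11 2 4   # eleven 4TB drives in RAIDZ2 -> 36
raidz_data_tb 11 2 8   # eleven 8TB drives in RAIDZ2 -> 72
```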

I would purchase one 512GB (or similar) NVMe drive as your boot drive. Buy Gen 3 drives for all future NVMe purchases for this system. Do not waste your money on Gen 4 or Gen 5; you will never see the speed benefit, and you will heat up the system for no reason.

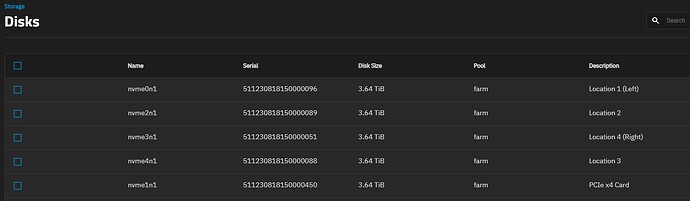

For each of your NVMe drives, take a photo or draw yourself a little map, and write down the serial number of each drive and its physical location. If the M.2 slots are numbered and you can see those numbers without removing the NVMe, that works best. When you boot up TrueNAS, you can add a “Description” for each drive in the Disk section. Enter the physical location there; see my screenshot for an example. It makes locating a module fast. On my drives the serial numbers are on the side against the board, so I needed this feature.
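If you would rather pull the serial numbers from a shell than read them off the labels, something like the following should work. This is a sketch that assumes a Linux-based system (such as TrueNAS SCALE) where `lsblk` is available; the wrapper name `list_drive_serials` is just for illustration.

```shell
# List whole disks only (-d) with the columns needed for a drive map.
# Assumes a Linux system with lsblk installed.
list_drive_serials() {
    lsblk -d -o NAME,MODEL,SERIAL,SIZE
}

# Run it on the NAS and copy the serial numbers into your map:
#   list_drive_serials
```

You still have to match each serial to a physical slot once by hand, but after that the Description field does the work.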

Replace one of the NVMe drives with the smaller boot drive (I’d use slot 1 if you can, or slot 12). The size difference is useful: the boot drive will be by far the smallest drive, so when you need to install TrueNAS, you will know which drive to install it to.

Keep the 4TB drive you removed as a spare; you never know when you will need it.

Now build your RAIDZ2 using eleven 4TB NVMe drives.

You will have about 35TB of storage at this point. When you can afford it, replace one drive with an 8TB drive, let it resilver, and repeat until all eleven have been replaced. The pool will grow to about 70TB, but only after the last of the eleven drives has been replaced.
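The important part of that loop is not pulling the next drive while the previous resilver is still running. The TrueNAS UI shows this, but as a small sketch you can also check it from a shell. The helper name `resilver_in_progress` and the pool name `tank` are placeholders I made up; the check simply looks for the "resilver in progress" text that `zpool status` prints during a resilver.

```shell
# Succeeds (exit 0) if the status text on stdin reports an active resilver.
# resilver_in_progress is a hypothetical helper, not a ZFS command.
resilver_in_progress() {
    grep -q "resilver in progress"
}

# Usage on the NAS ("tank" is a placeholder pool name):
#   zpool status tank | resilver_in_progress && echo "Wait before swapping the next drive"
```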

If your data is not critical, then RAIDZ1 is fine. However, most of us value our data, or at least would not want to rebuild the pool and copy all that data back, which is time-consuming.

I would not use a USB boot device, as you have already seen the problems. As an experiment, though, you could create a cron job to reboot the system every night. That may work as a temporary solution. The commands are simple: `reboot`, or `shutdown -r now`, or `shutdown -r +1` if you want a 1-minute delay. Any of these will do the job.
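To make the nightly reboot concrete, here is a sketch that builds a classic crontab-style line. The 03:00 time and the `/sbin/shutdown` path are my assumptions, so pick a quiet hour and check the path on your system. In TrueNAS you would normally enter the schedule and the command separately in the Cron Jobs screen rather than editing a crontab by hand.

```shell
# Assumed schedule: every night at 03:00. Adjust to a quiet time for your system.
minute=0
hour=3

# Crontab fields: minute hour day-of-month month day-of-week command
cron_line="$minute $hour * * * /sbin/shutdown -r now"
echo "$cron_line"   # prints: 0 3 * * * /sbin/shutdown -r now
```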

Hope some of this helped.