Apologies in advance for the length of this post. There is a lot to digest before the question posed at the end: why is the resilver of a single drive taking so long? It may also be a cautionary tale about purchasing equipment harvested from enterprise servers.

I purchased four 10TB HDDs from eBay, described as:

"HGST 10TB 3.5" SAS HDD 7200 RPM HUH721010AL5204, P/N: 0F27385 GRADE A"

These are labeled as Advanced Format (AF), meaning a sector size greater than 512 bytes.

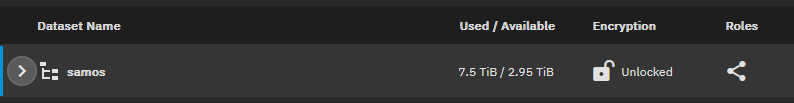

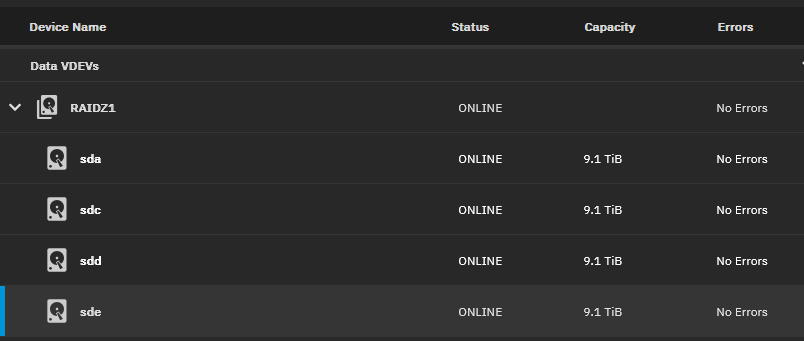

The intention for this purchase is to increase the size of a RAIDZ1 ZFS pool containing 4 x 4TB SAS HDDs (logical sector size 512). This pool has been running for a couple of years now and is reaching 75% full and needs expansion.

I had an existing Seagate 10TB 4Kn drive, and I used it to replace and resilver the first drive while waiting for the new drives to arrive. The resilver was an overnight success, completing in about 8 to 10 hours. I did not track the exact time, but it set my expectation for how long a resilver should take.

When the new drives arrived, TrueNAS Scale did not recognize them and reported zero size. dmesg reported that it could not recognize the 520-byte sector size.

Smartctl reports the drives as:

NETAPP X377_HLBRE10TA07

These drives were harvested from a proprietary NetApp NAS. The HGST label was still attached, but internally they report the NetApp part number.

This post describes a simple method for reformatting.

sudo sg_format --format --size=4096 /dev/<your device>

I reformatted the first drive to a 4K logical sector size and it resilvered in about the same time as the Seagate drive. The second reformatted drive also completed in about the same time.
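For anyone repeating the reformat, here is a minimal sketch of how the resulting sector size could be double-checked from the Linux side before adding the drive to a pool. It reads the standard sysfs entries `logical_block_size` and `physical_block_size`. The function name `show_sector_sizes` and the `SYSFS_ROOT` override are my own hypothetical additions for illustration, and `sda` in the usage note is only a placeholder device name.

```shell
# Print the logical and physical block size the Linux kernel reports for a disk.
# SYSFS_ROOT is a hypothetical override (handy for testing); it defaults to /sys.
show_sector_sizes() {
    dev=$1                          # kernel device name, e.g. sda (placeholder)
    root=${SYSFS_ROOT:-/sys}
    q="$root/block/$dev/queue"
    # Bail out if the kernel exposes no queue information for this device.
    if [ ! -r "$q/logical_block_size" ]; then
        echo "$dev: no block size information found" >&2
        return 1
    fi
    printf '%s logical=%s physical=%s\n' "$dev" \
        "$(cat "$q/logical_block_size")" "$(cat "$q/physical_block_size")"
}
```

Running `show_sector_sizes sda` on a successfully reformatted drive should show a logical size of 4096. A drive still at 520 bytes will probably not report anything useful here, since the kernel does not expose it as a usable disk.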

At this point, three of the four 4TB HDDs had been replaced with 10TB drives and resilvered. The pool reported as normal but with mixed drive sizes (expected). I then started the last replacement, and as I write this the resilver has been running for about 30 hours and is 36% complete.
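To put those numbers in perspective, here is a rough extrapolation of the total resilver time. It assumes the resilver progresses at a constant rate, which ZFS does not guarantee, so treat the result as a ballpark only. The function name `estimate_resilver_hours` is hypothetical.

```shell
# Linear extrapolation: total = elapsed * 100 / percent_done.
# Assumption: constant resilver rate, which a real resilver may not follow.
estimate_resilver_hours() {
    elapsed_hours=$1   # hours the resilver has been running
    percent_done=$2    # percent complete as reported by zpool status
    awk -v e="$elapsed_hours" -v p="$percent_done" 'BEGIN {
        if (p <= 0) { print "percent must be > 0" > "/dev/stderr"; exit 1 }
        total = e * 100 / p
        # Output: projected total hours, then projected hours remaining.
        printf "%.1f %.1f\n", total, total - e
    }'
}

estimate_resilver_hours 30 36   # prints "83.3 53.3"
```

So if the current rate holds, this resilver would take roughly 83 hours in total, against the 8 to 10 hours the earlier drives needed.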

I do not want to interrupt this process, because no errors are reported by zpool status. But it does seem unusual.

Does anyone have thoughts about why this last drive resilver is taking so long?

Description of the TrueNAS installation:

- Dragonfish-24.04.2

- Dell R720 w/ single Intel(R) Xeon(R) E5-2630

- 96G ECC RAM

- Dell Perc H310 mini controller w/ IT firmware installed

Oh, and yes, I do have a backup of my data just in case the very worst happens.