Which means you’ll have to create mounts/binds for every directory you wish to sync? (Assuming the app doesn’t get filesystem access by default, which the native service on Core obviously does.)

Well, you could just bind /mnt

I’m confused, why would rsyncd want/need “filesystem” access? What’s wrong with mounts?

In common terms: on a server/NAS you’re typically “pulling” (from your laptop), and (my assumption) you’d have datasets for the server/NAS to pull into.

Agreed. Same with NFS (which is insecure by default). I think an upcoming version of SCALE is also going to offer NFS as an “app” to harden the NAS’s security. Just mount as needed for the directories you wish to access via NFS.

No. There are no plans to make the NFS server an app, and I don’t understand the justification for doing such.

I believe the point was about removing a “built-in”, like if someone were to try to remove vi from base. That is, even though rsync isn’t really a ‘built-in’, many rely on it as if it were.

The absolute basic form of rsync is rsync <from> <to> (pulling on the ‘local thread’) but that functionality is already baked in with cp, tar etc. (I’d go with tar just for the file exclusions, and preservation of acls, xattrs, and whatnot but that could just be my ignorance).
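To make that comparison concrete, here is a minimal sketch of the same local copy done both ways, with one exclusion. The scratch directories and file names are made up for the demonstration. Note that `rsync -a` on its own does not carry ACLs or xattrs (that takes `-A` and `-X`), and GNU tar likewise needs `--acls` and `--xattrs` to keep them.

```shell
# Hypothetical scratch directories, created only for this demonstration.
src=$(mktemp -d)
dst_rsync=$(mktemp -d)
dst_tar=$(mktemp -d)
echo "keep" > "$src/notes.txt"
echo "skip" > "$src/scratch.tmp"

# Local rsync in archive mode, excluding *.tmp. The trailing slash on
# "$src/" copies the directory's contents rather than the directory itself.
rsync -a --exclude='*.tmp' "$src/" "$dst_rsync/"

# Roughly equivalent tar pipeline: pack on one side, unpack on the other.
tar -C "$src" --exclude='*.tmp' -cf - . | tar -C "$dst_tar" -xf -
```

Both destinations should end up with `notes.txt` and without `scratch.tmp`. The practical difference is that rsync only transfers what changed on later runs, while the tar pipeline recopies everything.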

[strikethrough]

Having rsync as a ‘separate app’ would allow for policy-based routing so I think the tradeoff of having to make a few mount points is little.

[/strikethrough]

There is something to say about security, but I’ll leave that up to far smarter people than I to discuss.

EDIT: I replied to your post Winnielinnie so you can fix my mistakes in the interpretation.

EDIT2: I just saw a thread about how separate IPs is not possible so, striking that comment (sorry). *sigh*

I was being tongue-in-cheek.

They cited security reasons for the removal of the Rsync Service in SCALE. Then later, it’s offered as an app, which makes it more secure.

Yet NFS gets a free pass? By default it has unhindered access to the entire filesystem. (No need to mount/bind anything in order to create an NFS share). By default it has no authentication nor encryption. (Anyone on the local network can mount the NFS share without the need to authenticate with a username/password.) The best security offered, without the convoluted Kerberos, is to employ an “Allow List” of permitted hosts or IP addresses. Guess what? The Rsync Service also has that safeguard.
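For reference, the allow list on the rsync side is a module parameter in `rsyncd.conf`. A minimal sketch, assuming a hypothetical module name, path, and subnet, and written to a scratch file rather than the live config:

```shell
# Write an example daemon config to a scratch file (not to /etc).
conf=$(mktemp)
cat > "$conf" <<'EOF'
# Hypothetical module; the path and subnet are placeholders.
[backup]
    path = /mnt/tank/backup
    read only = yes
    hosts allow = 192.168.1.0/24
EOF
cat "$conf"
```

With `hosts allow` set, a client whose address matches none of the listed patterns is rejected by the daemon. It is an address filter only, not authentication or encryption.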

So I have still yet to understand how the Rsync Service was deemed too insecure and must be relegated to an app with mountpoints/binds, yet NFS is fine to leave as a built-in service?

(Not to mention that the Rsync Service is apparently not too insecure for enterprise customers on Core 13.0-U6.2. Only for SCALE is it a security issue.)

So, I’ll attempt to not sound like a complete idiot, but I cannot promise anything because this is not my area (this is above my paygrade).

“Individual software security policies” are the issue, and I think that is what they are talking about. Think: OpenBSD and ‘pledge’.

Reference/example: capsicum(4) (freebsd.org)

Google ported that to Linux but I don’t know more than that.

This isn’t being made because of the GPL.

OpenRsync

GitHub - kristapsdz/openrsync: BSD-licensed implementation of rsync

It’s also part of the kernel.

The only way is to use the CLI and call rsync directly. I’ve opened a feature request here: “Rsync Task should allow local 2 local synchronisation (Local Destination Target)” (Feature Requests, TrueNAS Community Forums).

I periodically use external drives via USB to backup files. I don’t always need to replicate a dataset, so it would be handy to be able to set an rsync task up in the GUI instead of running a fancy script I have now.
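A script for this kind of job could look roughly like the sketch below. It is an assumption about the general shape, not the actual script mentioned above, and the paths in the example call are placeholders. The main point is the guard: refuse to copy unless the target really is a mounted filesystem.

```shell
# Sketch of a guarded backup: only run rsync when the target is mounted.
backup_to_usb() {
    src=$1
    dest=$2
    # mountpoint -q exits non-zero when "$dest" is not a mount point,
    # which avoids writing into the empty directory underneath when
    # the USB drive is absent.
    if ! mountpoint -q "$dest"; then
        echo "skip: $dest is not mounted" >&2
        return 1
    fi
    rsync -a "$src/" "$dest/"
}

# Example call (placeholder paths):
#   backup_to_usb /mnt/tank/photos /mnt/usbbackup
```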

Easy peasy on

- Debian

- Ubuntu

- FreeBSD

- Mac OS

All of these can import a current TrueNAS ZFS pool. No need for a server.
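On any of those systems the recovery would look something like the sketch below. It assumes OpenZFS is installed, that its version supports the feature flags enabled on the pool, and that the pool name and recovery path passed in are your own (the ones in the example call are placeholders).

```shell
# List pools that are visible on attached disks but not yet imported.
list_importable_pools() {
    zpool import
}

# Import a pool read-only under an alternate root so nothing on it is
# modified and its datasets do not mount over the host's own paths.
# A pool that was never exported (dead machine) may also need -f.
import_readonly() {
    pool=$1
    altroot=$2
    zpool import -o readonly=on -R "$altroot" "$pool"
}

# Example call (placeholder names):
#   import_readonly tank /mnt/recovery
#   zpool export tank      # when finished
```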

Not too long ago, I experienced a system failure and had to replace my TrueNAS machine. I didn’t have another machine on which to install my drives and recover the pools. So, I had to order a new machine, wait a while for it to arrive, and only then recover the pools (by the way, recovery was easy and straightforward. Cheers, TrueNAS!).

My backups were in order, but it was quite annoying to recover them and reconfigure some stuff. If only I had a local ready-to-use copy…

From my perspective, making a simple rsync copy of some data to a local mount makes a lot of sense. I usually do this with a removable drive so that, if I need to move it elsewhere, it’s easy to do so.

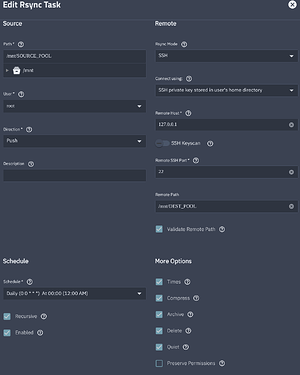

I basically set up an Rsync task using SSH mode for the localhost address. It’s working fine for now.

I created an SSH key for the root user.

ssh-keygen -t rsa -b 4096

Added the public key as an authorized key.

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Then, created the Rsync task in the TrueNAS interface.
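Before relying on the task, it may be worth checking the key from a shell. The sketch below is a rough command-line stand-in for what an SSH-mode task to localhost amounts to. It is an assumption for illustration, not the exact command TrueNAS runs, and the paths in the example call are placeholders.

```shell
# Confirm key-based login to localhost works without a password prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
check_local_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 root@localhost true
}

# Copy a local directory to another local directory through SSH.
local_rsync_over_ssh() {
    rsync -a -e ssh "$1/" "root@localhost:$2/"
}

# Example call (placeholder paths):
#   check_local_ssh && local_rsync_over_ssh /mnt/tank/projects /mnt/backup/projects
```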

I could create a cron job, but managing and backing up the general configuration with the web UI seems easier.

The biggest difference I see between a local rsync job and a ZFS snapshot is that the snapshot is a perfect mirror: if I delete a file in my working directory, the snapshot will sync and the file will also vanish from the other pool. With rsync, I can tell it not to delete anything on the backup pool until I remove it manually. When I’m editing video, I keep the current project on a fast NVMe pool; once the job is done and space is tight, I simply delete the raw footage from that pool, but I want the material to stay on the other pool indefinitely.
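The keep-everything behaviour is simply rsync without `--delete`. A small demonstration with scratch directories standing in for the two pools (all names here are made up):

```shell
work=$(mktemp -d)      # stands in for the fast working pool
archive=$(mktemp -d)   # stands in for the backup pool

echo "raw footage" > "$work/clip.mov"
rsync -a "$work/" "$archive/"      # first run copies clip.mov

rm "$work/clip.mov"                # free space on the working pool
echo "final cut" > "$work/final.mp4"
rsync -a "$work/" "$archive/"      # no --delete: clip.mov stays in the archive

ls "$archive"
```

After the second run the archive holds both `clip.mov` and `final.mp4`. Adding `--delete` to the second rsync would remove `clip.mov` from the archive as well.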

I was trying to find this also… My use case is:

- I have SSD and HDD pools

- I have 1T OneDrive that is full.

- I’ve synced that OneDrive to the SSD and want to continue doing that periodically, so that the SSD copy is 1:1 with what OneDrive has

- But I would like to rsync the SSD OneDrive copy to the HDD without deleting anything there, so that the HDD keeps all the old data that has been removed from OneDrive to free up space.

I don’t think ZFS helps anything here. Or if it does, how?

And is there something really wrong with my use case? I don’t think so. It sounds pretty reasonable and normal to me, but I can’t do it with the UI. (Not without using localhost rsync, and WHY would I need to do that?)

So I’m waiting for your smart comments on how my use case is wrong, how to make it better, and why a local target in rsync is just not a thing…

I’ve done this. There are a few extra steps, like making an ext4 filesystem on the USB disk (in my case a 24 TB Seagate), making a mount point under /mnt, and adding an entry in fstab using the label. I haven’t tested what happens after a reboot to see if it picks up the drive and mounts it. I might need a startup job somewhere. Anyway, running the rsync job is the same as above. I did set the USB drive to power down after 30 minutes.
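Those extra steps could be sketched as follows. The label, mount point, and device name are placeholders, the destructive commands are left commented out on purpose, and whether a hand-edited fstab survives a TrueNAS update is an open question, so treat this as an outline rather than a tested recipe.

```shell
# Hypothetical label and mount point.
LABEL_NAME=usbbackup
MOUNT_DIR=/mnt/usbbackup

# Build the fstab line. Mounting by label avoids depending on /dev/sdX
# ordering; "nofail" lets boot continue when the drive is unplugged.
fstab_entry() {
    printf 'LABEL=%s %s ext4 defaults,nofail 0 2\n' "$1" "$2"
}
fstab_entry "$LABEL_NAME" "$MOUNT_DIR"

# Privileged or destructive steps (/dev/sdX is a placeholder):
#   mkfs.ext4 -L "$LABEL_NAME" /dev/sdX1
#   mkdir -p "$MOUNT_DIR"
#   fstab_entry "$LABEL_NAME" "$MOUNT_DIR" >> /etc/fstab
#   mount "$MOUNT_DIR"
#   hdparm -S 241 /dev/sdX   # 241 = 30 minutes; some USB bridges ignore it
```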

This is an inexpensive solution and will give me the raw files and directories as a last-ditch recovery method. My NAS backs itself up to B2, so I do have an offsite copy, but it would be painfully slow to pull it all back down in a “break glass in case of” moment. Someday there will be another backup NAS, but I need to get past this initial sticker shock for a while.