I’ve had this issue since my main Ubuntu VM (hosting Docker containers) was migrated from bhyve to KVM during my CORE->SCALE migration:

The whole system (SCALE + VM) consistently hogs all of the host memory.

The VM has 24 GB of RAM and has never used all of it in the past.

Now I get service interruptions because its memory gets entirely used up, as well as the 4 GB of swap:

( kernel: systemd-journald[397]: Under memory pressure, flushing caches.

2024-09-20T06:03:58.263127+02:00 host kernel: message repeated 19 times: [ systemd-journald[397]: Under memory pressure, flushing caches.]

2024-09-20T06:03:59.525922+02:00 host kernel: workqueue: drm_fb_helper_damage_work hogged CPU for >10000us 512 times, consider switching to WQ_UNBOUND

2024-09-20T06:04:08.692272+02:00 host kernel: systemd-journald[397]: Under memory pressure, flushing caches.)
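
To see when these bursts happen, the messages can be tallied per hour. This is only a rough sketch, assuming the syslog-style lines shown above (ISO timestamp at the start, and `message repeated N times` for collapsed duplicates). The function name and the "unknown" bucket are my own choices, and the log file path is whatever you pass on the command line:

```python
import re
import sys
from collections import Counter

PRESSURE = "Under memory pressure"
# rsyslog collapses duplicates into "message repeated N times: [...]"
REPEAT = re.compile(r"message repeated (\d+) times")
# Keep only date and hour, e.g. "2024-09-20T06"
TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2})")


def count_pressure_events(lines):
    """Count memory pressure messages per hour bucket."""
    counts = Counter()
    for line in lines:
        if PRESSURE not in line:
            continue
        ts = TIMESTAMP.match(line.strip())
        # Lines without a leading timestamp go into a catch-all bucket
        bucket = ts.group(1) if ts else "unknown"
        rep = REPEAT.search(line)
        counts[bucket] += int(rep.group(1)) if rep else 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 pressure.py <path-to-log-file>
    with open(sys.argv[1], errors="replace") as fh:
        for hour, n in sorted(count_pressure_events(fh).items()):
            print(hour, n)
```

If the counts cluster around the same hours every day, that would point at a scheduled task rather than a steady leak.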

At the same time, the SCALE host also uses up its 64 GB of RAM, though that doesn’t seem to cause any issue AFAIK.

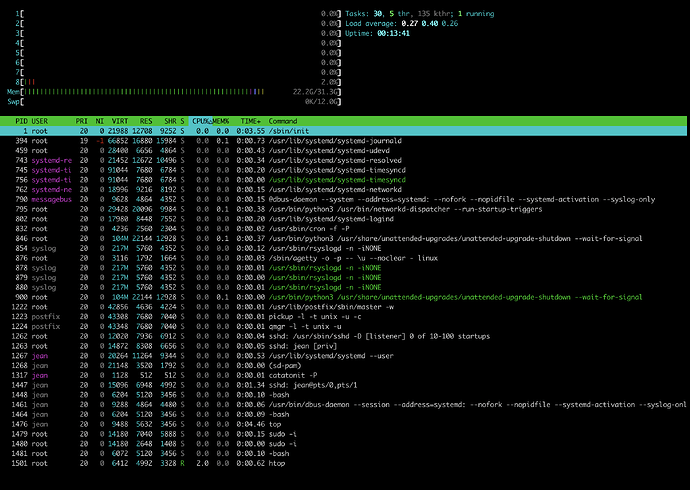

I’m having a hard time identifying where this comes from: shutting down the VM does reclaim memory, but not much (from 59 GB to 44 GB as indicated by htop), and the remaining RAM usage doesn’t seem to come from anywhere.

I can’t find a process using much RAM (K3s is the top one with 0.7% usage; middlewared comes first when sorting by VIRT, with only 4 GB).
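
From what I understand, memory that no process owns can still be held by the kernel (page cache, slab, or the ZFS ARC), and htop does not attribute that to any process. Here is a minimal sketch to get a rough breakdown on the host, assuming the standard Linux `/proc/meminfo` and the OpenZFS `/proc/spl/kstat/zfs/arcstats` files are present. The function names are mine and the categories are only a first approximation:

```python
from pathlib import Path


def parse_meminfo(text):
    """Parse /proc/meminfo content into {field: number}.

    Most fields are in kB; a few (e.g. HugePages_Total) are plain counts.
    """
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def parse_arc_size(text):
    """Return the ARC 'size' value in bytes from arcstats content, or None."""
    for line in text.splitlines():
        fields = line.split()
        # Data rows look like: "<name> <type> <value>"
        if len(fields) == 3 and fields[0] == "size" and fields[2].isdigit():
            return int(fields[2])
    return None


def summarize(meminfo, arc_bytes):
    """Rough breakdown in GiB. As far as I know, the ARC is not
    included in the 'Cached' (page cache) figure, so it is listed separately."""
    kib_per_gib = 1024 ** 2
    out = {
        "total": meminfo.get("MemTotal", 0) / kib_per_gib,
        "available": meminfo.get("MemAvailable", 0) / kib_per_gib,
        "process_anon": meminfo.get("AnonPages", 0) / kib_per_gib,
        "page_cache": meminfo.get("Cached", 0) / kib_per_gib,
        "slab": meminfo.get("Slab", 0) / kib_per_gib,
    }
    if arc_bytes is not None:
        out["zfs_arc"] = arc_bytes / 1024 ** 3
    return out


if __name__ == "__main__":
    meminfo_path = Path("/proc/meminfo")
    arc_path = Path("/proc/spl/kstat/zfs/arcstats")
    if meminfo_path.exists():
        arc = parse_arc_size(arc_path.read_text()) if arc_path.exists() else None
        for key, gib in summarize(parse_meminfo(meminfo_path.read_text()), arc).items():
            print(f"{key:>14}: {gib:6.1f} GiB")
```

If `zfs_arc` or `slab` turns out to account for most of the "missing" memory, the usage would be kernel-side caching rather than a leaking process.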

AFAIK only rebooting the SCALE host solves the memory usage issue.

Restarting the VM makes it start with very high memory usage right away (about 19 GB, where it normally starts at about 8 GB of RAM).

I upgraded TrueNAS SCALE to the latest patch less than 24h ago, and the RAM was all used up before the next morning.

I can’t really afford NOT to start the VM for 24h to see whether it is the source of the mem leak, but it seems obvious that it is, even though stopping it doesn’t reclaim the memory.

I don’t think a TrueNAS SCALE install without the VM would leak memory like this.

(Mine does Cloud Sync and snapshot syncs at night, and the VM doesn’t do any heavy data processing, or much of anything else.)