OK, so I asked about this recently and now need a plan/help.

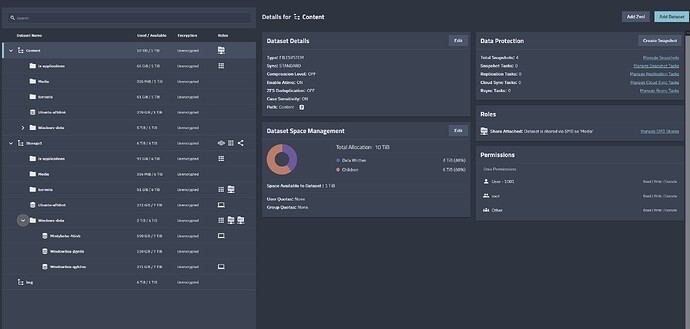

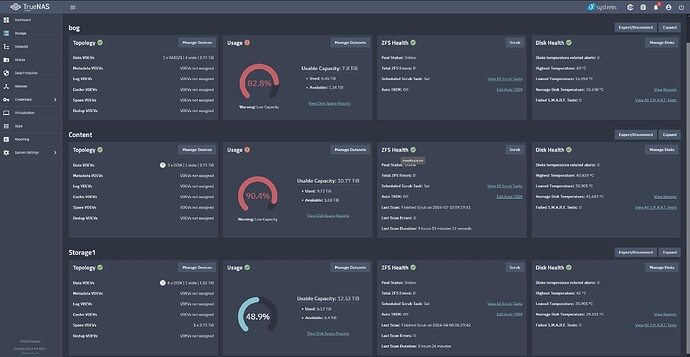

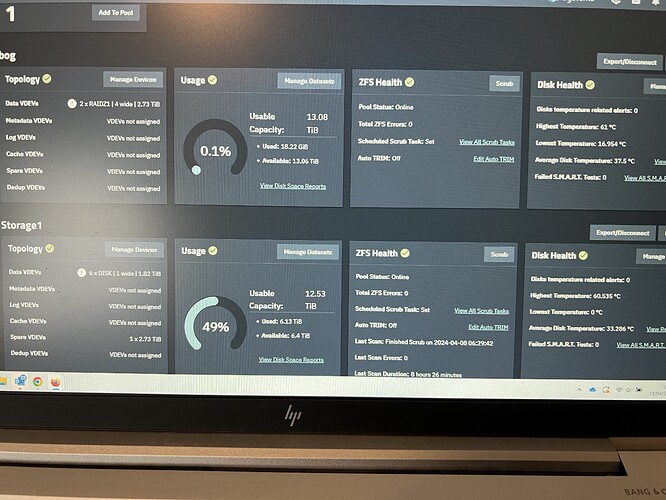

I have a 12TB or thereabouts main pool.

It’s made up of 4 drives (2TB each) in the main internal bays, with the rest spread across a 6-drive JBOD enclosure that it shares with other pools.

The main pool has 6.11TB used.

I’ve moved everything over to a new server, which gives me 4 more bays, each holding a 3TB SAS drive, set up in RAID for about 7.8TB of total capacity.
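As a rough sanity check on that 7.8TB figure, here is a minimal sketch. The post doesn’t say which RAID layout the new drives use, so it *assumes* RAIDZ1 (one drive’s worth of parity). It also converts the drive makers’ decimal TB into the binary TiB that ZFS tools report, and it ignores ZFS metadata and reserved space, so the real usable figure will come out somewhat lower.

```python
def raidz_usable_tib(drives: int, drive_tb: float, parity: int = 1) -> float:
    """Approximate usable space of a RAIDZ vdev in TiB, before ZFS overhead.

    drive_tb is the marketing size in decimal terabytes (10**12 bytes).
    parity is 1 for RAIDZ1 (assumed here), 2 for RAIDZ2, 3 for RAIDZ3.
    """
    data_bytes = (drives - parity) * drive_tb * 10**12  # drives left for data
    return data_bytes / 2**40                           # bytes -> TiB


# Four 3TB drives, assuming RAIDZ1: roughly 8.19 TiB before metadata and
# reserved space, which is in the same ballpark as the ~7.8TB reported.
print(f"{raidz_usable_tib(4, 3):.2f} TiB")
```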

All apps and files are on the old pool. I want to copy the data to the new (smaller in total) drives, export the old pool, and rename the new copy so it takes over the old pool’s role. Then I’d pull the old pool’s drives, fit more, and extend the main pool back up to full size.
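For reference, the plan above maps onto a handful of generic ZFS commands. The sketch below only *prints* them for review and runs nothing. The pool names `tank` and `newtank` are hypothetical, and TrueNAS normally expects exports and imports to go through its own UI, so treat this as an outline of the underlying ZFS steps rather than the TrueNAS workflow. One detail that matters for the space problem: `zfs send -R` replicates every dataset *and every existing snapshot*, so the new pool has to hold the old pool’s snapshot history as well as the live data.

```python
from typing import List


def migration_commands(old_pool: str, new_pool: str, snap: str = "migrate") -> List[str]:
    """Return the ZFS command sequence for copying a pool and swapping names.

    Nothing is executed; the strings are meant to be checked and run by hand.
    """
    snapshot = f"{old_pool}@{snap}"
    return [
        # 1. Recursive snapshot of every dataset in the old pool.
        f"zfs snapshot -r {snapshot}",
        # 2. Replicate all datasets (-R also sends all existing snapshots) into
        #    the root of the new pool; -F forces the receive into the existing,
        #    empty root dataset.
        f"zfs send -R {snapshot} | zfs recv -F {new_pool}",
        # 3. Take the old pool offline.
        f"zpool export {old_pool}",
        # 4. Export the new pool so it can be re-imported under another name.
        f"zpool export {new_pool}",
        # 5. Importing with a second name renames the pool to the old name.
        f"zpool import {new_pool} {old_pool}",
    ]


if __name__ == "__main__":
    # Hypothetical pool names; substitute your own.
    for cmd in migration_commands("tank", "newtank"):
        print(cmd)
```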

That would free up the old drives and bring all the main data from the JBOD onto the primary server, so I can clean down the JBOD and use it for data backups etc.

I’m struggling with this and need a numpty guide on how to do each step.

Taking a snapshot and using ZFS replication fills the new disks completely and the job fails, so either it’s the wrong tool or it’s copying data I wasn’t aware of.

Could it be snapshots? There are “quite a few” on the old main pool. Would it be safe to clear those down?

Would that fix it, or would ZFS still try to squeeze the 12TB pool into a 7.8TB hole?
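One way to check whether snapshots are the culprit before deleting anything: ZFS tracks, per dataset, how much space would be freed if all of that dataset’s snapshots were destroyed (the `usedbysnapshots` property). The sketch below reads it with `zfs list -H -p` (no header, tab-separated, exact byte values) and totals it. The pool name `tank` is hypothetical, and the helper names are illustrative only.

```python
import subprocess
from typing import Dict, Tuple

# Per-dataset columns: name, total used, space held by that dataset's snapshots.
ZFS_LIST_CMD = ["zfs", "list", "-H", "-p", "-r", "-t", "filesystem,volume",
                "-o", "name,used,usedbysnapshots"]


def parse_zfs_list(output: str) -> Dict[str, Tuple[int, int]]:
    """Parse tab-separated `zfs list -H -p` output into {name: (used, usedbysnapshots)}."""
    usage = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, used, snap_used = line.split("\t")
        usage[name] = (int(used), int(snap_used))
    return usage


def summarize(usage: Dict[str, Tuple[int, int]], pool: str) -> Tuple[int, int]:
    """Return (bytes used by the whole pool, bytes held by snapshots).

    The root dataset's `used` already includes its children, whereas each
    dataset's `usedbysnapshots` covers only its own snapshots, so the
    snapshot figures are summed across every dataset.
    """
    total_used = usage[pool][0]
    snapshot_bytes = sum(snap for _, snap in usage.values())
    return total_used, snapshot_bytes


def snapshot_report(pool: str) -> Tuple[int, int]:
    """Run `zfs list` against a live pool and summarize the result."""
    out = subprocess.run(ZFS_LIST_CMD + [pool], capture_output=True,
                         text=True, check=True).stdout
    return summarize(parse_zfs_list(out), pool)
```

On the server, something like `used, snaps = snapshot_report("tank")` followed by `print(used / 1e12, snaps / 1e12)` would show the totals in TB. If the snapshot share is large, pruning snapshots you no longer need before replicating would shrink what `zfs send -R` has to carry.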

There’s no real option to add more storage to the system, bar moving 2 of the old pool drives to the JBOD. I have spare SAS drives, but all the old drives are SATA, so I’m worried about mixing and matching…

I’m going to try rsync and see whether that copies just the files. I have the pool config (the main server config) saved to re-import once the drive shenanigans are all sorted…
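If rsync is the route, it copies only the current contents of mounted datasets. It does not bring across snapshots, dataset properties, or zvols, and each dataset needs to exist on the new pool first if you want to keep the same layout. A minimal sketch of building the invocation, with hypothetical mount paths:

```python
from typing import List


def rsync_command(src: str, dst: str) -> List[str]:
    """Build an rsync invocation that copies the live contents of src into dst."""
    return [
        "rsync",
        "-aHAX",               # archive mode, plus hard links, ACLs and extended attributes
        "--info=progress2",    # single overall progress line (rsync 3.1 or newer)
        src.rstrip("/") + "/", # trailing slash: copy the directory's contents, not the directory
        dst,
    ]


if __name__ == "__main__":
    # Hypothetical dataset mount points; substitute your own.
    print(" ".join(rsync_command("/mnt/tank/data", "/mnt/newtank/data")))
```

To actually run it, pass the list to `subprocess.run(..., check=True)`. Building the argument list rather than a shell string avoids quoting problems with paths that contain spaces.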

Cheers.

K.