I think the zpool replace command looks right. But to get a partuuid you will of course need to create a partition (whereas the UI would create this for you).

What does the following command have to say about your pool and faulted FILE?

zpool status -v

Please supply the results in CODE tags, if possible.
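If it helps when reading the output: the rows of interest are the ones in the config table whose STATE is anything other than ONLINE. Here is a minimal Python sketch that pulls those rows out of the text. It assumes the usual `NAME STATE READ WRITE CKSUM` column layout; the function name, the sample pool name `tank` and the device paths are made up for illustration and are not taken from this thread.

```python
# Sketch only: scans `zpool status` text for config rows that are not ONLINE.
# The state names below are the common ZFS vdev states; the sample is invented.
KNOWN_STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}


def unhealthy_devices(status_text):
    """Return (name, state) pairs for config rows whose state is not ONLINE."""
    found = []
    in_config = False
    for line in status_text.splitlines():
        fields = line.split()
        # The header row marks the start of the device table.
        if fields[:2] == ["NAME", "STATE"]:
            in_config = True
            continue
        if not in_config or len(fields) < 2:
            continue
        name, state = fields[0], fields[1]
        if state in KNOWN_STATES and state != "ONLINE":
            found.append((name, state))
    return found


# Hypothetical sample text, shaped like a degraded RAIDZ2 pool with a sparse file.
SAMPLE = """\
  pool: tank
 state: DEGRADED
config:

        NAME                  STATE     READ WRITE CKSUM
        tank                  DEGRADED     0     0     0
          raidz2-0            DEGRADED     0     0     0
            sda2              ONLINE       0     0     0
            /mnt/sparse.img   OFFLINE      0     0     0

errors: No known data errors
"""

if __name__ == "__main__":
    for name, state in unhealthy_devices(SAMPLE):
        print(f"{name}: {state}")
```

In practice you would feed it the real output of `zpool status -v` instead of the sample string.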

If a normal data file lost a block, it would be considered lost if there was no redundancy. The method to recover it would be to erase the faulty file and restore it from backups.

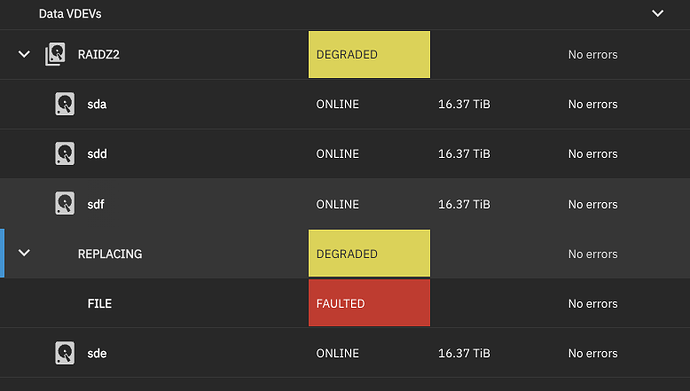

I’ve not seen that “FILE FAULTED” in the GUI before. Perhaps it is how TrueNAS displays a lost file.

Read back - it is the result of deliberately creating a degraded RAIDZ pool using a sparse file as a parity drive as part of a data migration strategy.

Thanks, creating and getting the partition’s uuid is not a problem to be honest so I am fine with that.

I experimented with a couple of older drives I had lying around to make sure it works, and it did, so I mustered some courage and stepped into the fray.

So far it seems OK: it’s resilvering and replacing the dead sparse file with the 18TB WD Gold I previously had. Once it’s done I’ll hopefully have RAIDZ2 with full redundancy.

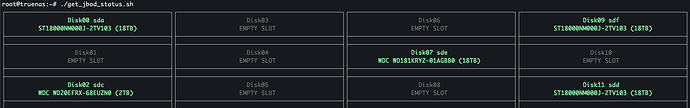

Meanwhile, I wrote a small script to display my JBOD’s configuration/status. I’ll clean it up to make it slightly more presentable and share it. I honestly would have expected this to be supported by TrueNAS.

I agree - indeed there is a Feature Request you can vote for asking for this functionality: View Enclosure Screen for non-iX hardware - #9 by Protopia

But your script looks good, though I would like to see more info than just the device name, disk model and size (i.e. as per my comment in the feature request).

P.S. You should also think about implementing @joeschmuck’s Multi-Report script (once it is fixed for EE).

Thanks! I voted it up.

My script is there mostly to help with orientation, as I had a very hard time understanding where each drive was.

It already highlights faulty drives, I emulated it here:

(And added Serial number as per your suggestion!)

Honestly, it’s a pretty (s)crappy script, and drawing this in a shell is ugly. It should be in the GUI and it should be easily configurable by anyone who is at least semi-competent.

Additionally, even if we DON’T get any visualization, I’d expect SES support to mark drives as faulty. It’s a good start and better than nothing. It’s pretty bad to pull out the wrong drive after a failure.

Joe’s Multi-Report script displays, sets and stores configuration values - you could use that as inspiration. Set a width and height in drives for your enclosure and whether the drives are horizontal or vertical and display boxes accordingly.
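To make the width/height/orientation idea a bit more concrete, here is a rough Python sketch of drawing the boxes from those three settings. Everything in it is hypothetical (the function name, the parameters, the labels), and I am assuming that "horizontal or vertical" can be handled as the order in which slots are numbered, which may not match every enclosure.

```python
def render_enclosure(drives, columns, rows, vertical=False, cell_width=14):
    """Draw a columns x rows grid of drive slots as plain text.

    drives maps a 0-based slot index to a short label; other slots show as empty.
    vertical=False numbers slots left-to-right, then top-to-bottom.
    vertical=True numbers slots top-to-bottom, then left-to-right.
    """
    border = "+" + ("-" * cell_width + "+") * columns
    lines = [border]
    for row in range(rows):
        cells = []
        for col in range(columns):
            # Translate the grid position into the slot number for this layout.
            slot = col * rows + row if vertical else row * columns + col
            label = drives.get(slot, "(empty)")
            # Truncate long labels so the boxes stay aligned.
            cells.append(label[:cell_width].center(cell_width))
        lines.append("|" + "|".join(cells) + "|")
        lines.append(border)
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical 4x2 enclosure with two populated slots.
    print(render_enclosure({0: "sda", 5: "sdf FAULTED"}, columns=4, rows=2))
```

The three settings (columns, rows, vertical) are exactly what you would keep in the stored configuration, so the drawing code never needs to know about a specific enclosure model.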

I note that empty slots still have serial numbers.

Thanks! I’ll check it out

And yes - I forgot to reset them for the empty slots.

I’ll tell you what would be a cool extra idea for your script (but quite a bit of work).

Once you have implemented the configuration store, then you could have a mode where you can run the script in a minimal docker container and it opens up a web server port and displays the same thing in HTML on an auto-refresh.
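As a rough idea of how small that web mode could be, here is a sketch using only the Python standard library. The port number, the function names and the placeholder status text are all assumptions for illustration; a real version would return the script's actual enclosure drawing from `current_status()`.

```python
import html
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_page(status_text, refresh_seconds=30):
    """Wrap plain-text status in an HTML page that reloads itself."""
    return (
        "<!DOCTYPE html><html><head>"
        f'<meta http-equiv="refresh" content="{refresh_seconds}">'
        "<title>Enclosure status</title></head>"
        f"<body><pre>{html.escape(status_text)}</pre></body></html>"
    )


def current_status():
    """Placeholder: a real script would return its enclosure drawing here."""
    return "slot 0: (status not collected in this sketch)"


class StatusHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Rebuild the page on every request so each refresh shows fresh data.
        body = render_page(current_status()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Start the server; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), StatusHandler).serve_forever()
```

Calling `serve()` starts it. The auto-refresh is just the `meta http-equiv="refresh"` tag, so no JavaScript is needed.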

Actually, rewriting it in Python would probably be easier than doing it in bash…

But in any case, as I said - this was my way of visualizing my rack and knowing which drive is where without popping it out and reading the serial number…

TLDR: what is the recommended way to reshuffle drives without shutting down the server?

The drives’ temperature is slightly too high for my taste. I assume that about 18 hours of resilvering doesn’t help the temperature, but still… I believe that I can improve it a bit by moving drives around; my drives see very little write activity most of the time.

Can I just pull a drive, push it back into a new location within a few seconds, and wait for a (hopefully quick?) resilver?

Alternatively: Is there a way to “freeze” or “shut down” the pool so I can move it around and then turn it back on? (I don’t want to shut down the server).

Thanks

Hello guys, I have tried to follow your manual, but my problem is that I got this error message in TrueNAS SCALE:

cannot create ‘poolname’: system is read-only

This should be the error message. I can’t try it again, because I am currently repairing my mirror with 1 volume.

Can anyone help me?

@linux_neuling - You might be better off creating a new thread with what you want to do. Even if you end up referencing this thread as similar to what you want to do.