Sorry, the issue that pops up randomly for me is the “last test age”. I have short tests daily and one long test once a week, so the last test age should never be greater than 1, even if for whatever reason the self-test took 24 hours.

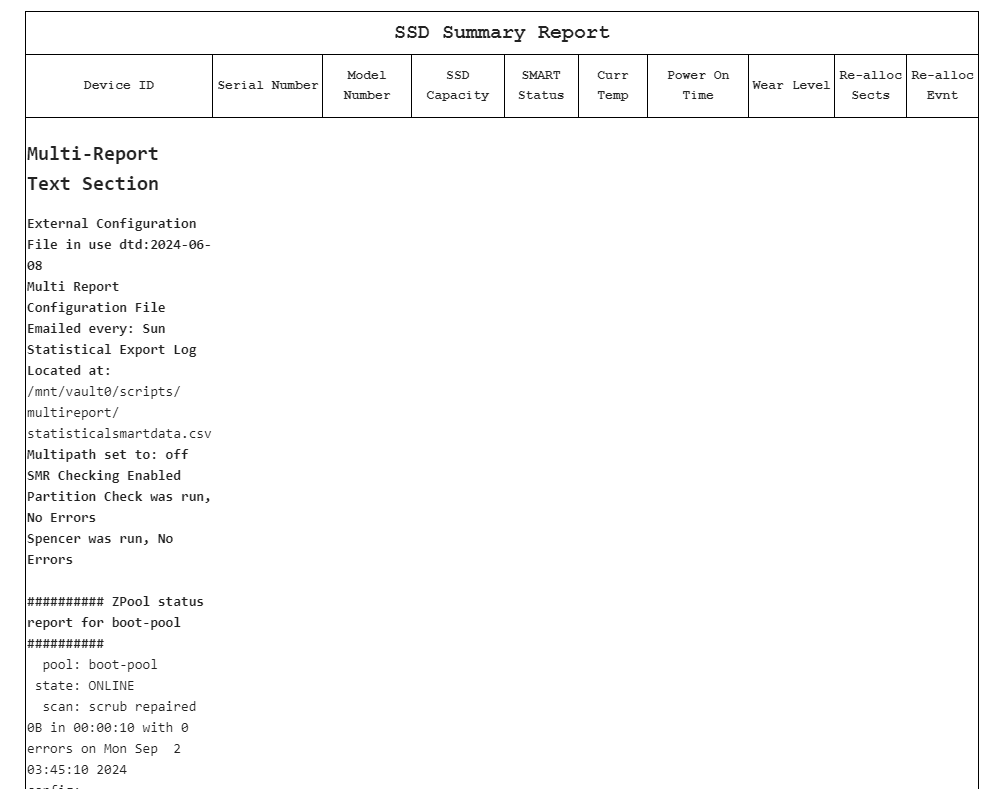

I’ve been happily using Multi-Report v3.0.1 for a while and it’s been chugging along nicely, but ever since I updated to v3.0.7 it’s been throwing the following errors:

/mnt/vault0/scripts/multireport/multi_report.sh: line 4815: [[: —: syntax error: operand expected (error token is “-”)

/mnt/vault0/scripts/multireport/multi_report.sh: line 4816: ( 33403000 - — ): syntax error: operand expected (error token is “)”)

I’ve tried a full reinstall and generating a new config file and just can’t get it to play nice. Not only that, it doesn’t give me SSD summaries and messes up the “Multi-Report Text Section”. Any clue what might be causing this?

You likely have a drive that has some odd name, missing data, or could even be as simple as a space in a pool name. I did just fix a white space issue in 3.0.8Beta. Run your script with -dump emailextra and I will get the data I need to figure out what is causing the issue.
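For anyone hitting the same "operand expected" message: it is what the shell prints when an arithmetic expression receives something that is not a number, such as a placeholder dash where a SMART value was expected. Below is a minimal sketch of one way to guard against that. The helper names `is_uint` and `safe_sub` are hypothetical and are not taken from multi_report.sh.

```shell
# Hypothetical helpers for illustration only; not part of multi_report.sh.

# Succeeds only when $1 is a non-empty string of decimal digits.
is_uint() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Prints $1 - $2 when both are plain integers, otherwise prints "n/a"
# instead of letting the shell abort with "operand expected".
# Note: values with leading zeros would be read as octal; not handled here.
safe_sub() {
  if is_uint "$1" && is_uint "$2"; then
    echo $(( $1 - $2 ))
  else
    echo "n/a"
  fi
}

safe_sub 33403000 1200   # prints 33401800
safe_sub 33403000 "-"    # prints n/a
safe_sub 33403000 ""     # prints n/a
```

The point of the sketch is only that missing data gets turned into a visible "n/a" rather than a syntax error that derails the rest of the report.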

If you ever have a problem with my script, please speak up, the sooner the better. If it is something as simple as a configuration change, I will let you know. I could only be so lucky if that were the case all the time.

@BarefootWoodworker

Same for you as well. Run the script with -dump emailextra so I can get some data and I should be able to figure out what is going on.

-Joe, or is it Mark?

I really appreciate the prompt reply and your dedication to TrueNAS. Shall get a dump sent over in a few hours after work.

Should be on its way shortly, good sir!

A new, exciting message from CRON arrived in my mailbox earlier today:

error: RPC failed; curl 18 HTTP/2 stream 5 was not closed cleanly before end of the underlying connection

error: 5246 bytes of body are still expected

fetch-pack: unexpected disconnect while reading sideband packet

fatal: early EOF

fatal: fetch-pack: invalid index-pack output

I guess the script had a problem downloading an update?

So I looked up that error message on the internet, here is the result:

The error message you’re encountering, “error: RPC failed; curl 18 transfer closed with outstanding read data remaining,” typically indicates an issue with network connectivity or bandwidth limitations during the Git operation.

Also, I just ran the script (to make sure it worked before I posted anything), downloaded just fine and no errors.

Please run the CRON Job again to see if the problem repeats itself.

-Cheers

I also understand that the message implies that curl had experienced a network error.

As expected, it works just fine if I run the cron job manually. Perhaps treating the error from curl as a transient error and either retrying or letting it slide would be a better idea than returning the error back to cron as a fatal script execution error?
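The retry idea could look something like the sketch below. This is a generic wrapper under stated assumptions, not how Multi-Report handles its update check: the function name `retry`, the attempt count, and the delay are all made up for illustration, and `flaky` is a stand-in for a network command that fails twice before succeeding.

```shell
# retry MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or MAX_TRIES attempts have been made.
retry() {
  retry_max=$1
  retry_delay=$2
  shift 2
  retry_n=1
  until "$@"; do
    if [ "$retry_n" -ge "$retry_max" ]; then
      return 1                      # give up; caller decides what to do
    fi
    sleep "$retry_delay"
    retry_n=$(( retry_n + 1 ))
  done
  return 0
}

# Stand-in for a transient failure: fails on attempts 1 and 2, succeeds on 3.
attempts=0
flaky() {
  attempts=$(( attempts + 1 ))
  [ "$attempts" -ge 3 ]
}

if retry 5 0 flaky; then
  echo "succeeded after $attempts attempts"   # prints: succeeded after 3 attempts
fi
```

In real use the command would be the download step and the delay would be longer than zero, for example `retry 3 30 git fetch --quiet`, with the script carrying on without the update if all attempts fail.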

Question: Did the script fail to run and generate a report? I ask because even a failure, as I have it scripted, runs in a subshell, so any failure should not crash the script, or I misunderstand the purpose/feature of a subshell.

I already skip the download if github.com is not available (you are not able to reach and talk to github.com) because that is something that is likely to happen.
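On the subshell question, one plausible explanation is worth sketching. A subshell does keep a failing command from terminating the parent script, but anything that command writes to stderr still lands on the script's own stderr, and cron mails whatever a job writes to stdout or stderr. The sketch below assumes that is what happened here; `check_for_update` is a hypothetical stand-in that fails the way git did.

```shell
# Hypothetical stand-in for the update check; not the real Multi-Report code.
check_for_update() {
  echo "fatal: early EOF" >&2
  exit 1            # ends only the subshell, not the calling script
}

# Run the check in a subshell and capture its stderr into a variable,
# so the message does not leak to cron as stray output.
update_log=$( ( check_for_update ) 2>&1 )
update_status=$?

if [ "$update_status" -ne 0 ]; then
  # A real script would fold this note into the report body instead.
  echo "update check skipped (exit $update_status): $update_log"
fi
echo "report continues"
```

With the stderr captured, the report still goes out and the failure shows up as a note rather than as a second email.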

Yes, a report was produced. So I got two mail messages one after the other. First, the report and next, the failure message from cron.

Sounds like it ran as expected, that is good.

I can’t plan for every internet issue so I plan on no connectivity. The script could not check if there was a newer version and continued to run. I’m glad it worked and I appreciate the feedback.

This is an amazing, comprehensive script. @joeschmuck, I don’t know why I only just discovered it. Thank you for producing it! I can’t imagine the complexity and the time it took.

I have a few minor suggestions for the user guide. My comments in bold:

Config-Backup

- Configuration Backup Enabled (true)
  - Set to ‘true’ to enable backups. **This section seems to cover both the TrueNAS config and the multi_report config. Not clear which this is.**
- Save a local copy of the config-backup file (false)
  - Set to ‘true’ will create a copy of the TrueNAS configuration in the path identified below. **I’m not sure if ‘local’ here means on the server or on the computer used to access it. Also, I can’t find the ‘path identified below’.**
- Day of the week would you like the file attached? (Mon)
  - The day of the week to attach the TrueNAS backup file. Options are: Mon, Tue, Wed, Thu, Fri, Sat, Sun, All, or Month.
- Enable sending multi_report_config.txt file (true)
  - Attach multi_report_config.txt file to email if ‘true’.
- What day of the week would you like the file attached? (Mon)
  - The day of the week to attach the TrueNAS backup file. Options are: Mon, Tue, Wed, Thu, Fri, Sat, Sun, All, or Month. **I assume ‘TrueNAS’ here should be ‘multi_report_config.txt’, since we already set it for TrueNAS above.**
- Send email of multi_report_config.txt file for any change (true)
  - When ‘true’, if the multi_report_config.txt file is changed, the original and new multi_report_config.txt files are attached to the email.

Finally, I have a minor question. Until seeing the wonderful ‘rust’ table, I had never noticed that drives in my main pool have low load cycle count (90-180), but drives in my backup pool are much higher (4000-8000). The backup pool is only accessed once a day (at least by anything I initiate). I would expect the numbers to be opposite. Any idea why the difference?

Improper spindown settings?

Labor of love I guess, and I really wanted something to cover my butt.

Thanks for the feedback, I actually do appreciate it. I know what I’m saying; however, that does not always translate into others knowing what I am saying.

@Davvo is correct. You may be sleeping the drives and then something comes along and wakes them up, then they go back to sleep again, and something wakes them up. Rinse, repeat. I honestly do not recall the proper setup to sleep the drives, there are a few things that affect it. I’m sure you can do a search to get the answers. With that said, I would recommend you see how many times per day the drives spin up. Is it 24 times, 6 times, once a day? If these are older drives, the high count could have come from previous use. So one step at a time, see how often they spin up/down in a 24 hour period. If you run my little script once a day, then you would have the values.
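A rough sketch of how the once-a-day comparison could be done by hand: pull the raw Load_Cycle_Count (SMART attribute 193, the last column of the attribute row in `smartctl -A` output) on two consecutive days and subtract. The function name and the two sample rows are made up for illustration; the values are not from anyone's drives in this thread.

```shell
# Reads `smartctl -A` text on stdin and prints the raw Load_Cycle_Count value
# (the tenth whitespace-separated field of that attribute's row).
load_cycle_count() {
  awk '$2 == "Load_Cycle_Count" { print $10 }'
}

# Illustrative rows only. In real use: smartctl -A /dev/sdX | load_cycle_count
sample_yesterday='193 Load_Cycle_Count        0x0032   199   199   000    Old_age   Always       -       4523'
sample_today='193 Load_Cycle_Count        0x0032   199   199   000    Old_age   Always       -       4547'

prev=$(printf '%s\n' "$sample_yesterday" | load_cycle_count)
curr=$(printf '%s\n' "$sample_today" | load_cycle_count)
delta=$(( curr - prev ))

echo "load cycles in the last day: $delta"   # prints: load cycles in the last day: 24
```

If the difference stays near zero from day to day, the high total most likely came from earlier in the drive's life rather than from anything the system is doing now.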

One last thing, drives are typically rated for 600,000 cycles these days. It used to be 300,000 but a few years back it doubled. So even if it were 24 times a day, it would take about 68 years to hit the rated limit. Although I (and many others) feel it is good practice to minimize this if possible. This is why many of us prefer to let them run non-stop, heads loaded. I have three drives with over 52800 hours on them, the fourth one died about a year ago. 3 out of 4 isn’t bad. And the head loading count is 323/324 for all of the old drives. Most of these are from powering up/down to clean the system, moving across the country, stuff like that.
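The arithmetic behind that estimate, as a quick sketch using only the 600,000-cycle rating and the 24-cycles-per-day case from the post (integer division, so results are rounded down):

```shell
rated_cycles=600000    # typical rating quoted in the post
cycles_per_day=24      # one load/unload cycle per hour

days=$(( rated_cycles / cycles_per_day ))
years=$(( days / 365 ))

echo "$days days, about $years years"   # prints: 25000 days, about 68 years
```

Swapping in a measured daily count for `cycles_per_day` gives the equivalent figure for a specific drive.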

I’m not trying to sleep the drives. The HDD Standby setting for all of them is “Always on”. I don’t know what other settings there might be.

I’m pretty sure the drives are all approximately the same age.

I don’t understand how to get the number of times they spin up/down in a day. Is that from the Start/Stop Count column? Those numbers are all about the same, 45-48.

Hi Joe, I’m having a strange issue: I sidegraded from Core to Scale, and it seems that both of my NVMe disks no longer report the “power on time” correctly.

I admit that at the beginning I thought the SMART tests weren’t running somehow, because the daily report always shows the same power-on time, and so the last test appears to have been performed at that same time (the same goes for the disks’ read/write operations). But looking more closely in the shell, I realized that the disks are “frozen” at that time, and the counter only occasionally increases by a small number of hours.

Self-test Log (NVMe Log 0x06)

Self-test status: No self-test in progress

| Num | Test_Description | Status | Power_on_Hours | Failing_LBA | NSID | Seg | SCT | Code |
|---|---|---|---|---|---|---|---|---|
| 0 | Short | Completed without error | 2728 | - | - | - | - | - |
| 1 | Short | Completed without error | 2728 | - | - | - | - | - |
| 2 | Short | Completed without error | 2728 | - | - | - | - | - |
| 3 | Short | Completed without error | 2727 | - | - | - | - | - |
| 4 | Short | Completed without error | 2727 | - | - | - | - | - |
| 5 | Short | Completed without error | 2727 | - | - | - | - | - |
| 6 | Short | Completed without error | 2727 | - | - | - | - | - |
| 7 | Extended | Completed without error | 2727 | - | - | - | - | - |
| 8 | Short | Completed without error | 2726 | - | - | - | - | - |
| 9 | Short | Completed without error | 2722 | - | - | - | - | - |
| 10 | Extended | Completed without error | 2721 | - | - | - | - | - |
| 11 | Short | Completed without error | 2720 | - | - | - | - | - |
| 12 | Short | Completed without error | 2709 | - | - | - | - | - |
| 13 | Short | Completed without error | 2685 | - | - | - | - | - |
| 14 | Short | Completed without error | 2661 | - | - | - | - | - |
| 15 | Short | Completed without error | 2637 | - | - | - | - | - |
| 16 | Short | Completed without error | 2614 | - | - | - | - | - |
| 17 | Extended | Completed without error | 2589 | - | - | - | - | - |
| 18 | Short | Completed without error | 2565 | - | - | - | - | - |
| 19 | Short | Completed without error | 2541 | - | - | - | - | - |

The temperature, on the other hand, seems to be reported correctly.

I have SMART tests enabled for all disks (inherited from the Core config), and I tried both your NVMe self-test script and enabling self-tests in Multi-Report… same behavior.

Those disks are used for the app pool, so they are in use practically 24/7 by different apps; it seems impossible to me that they could enter a sleep mode… but could this be related somehow to the lower power state the disks have been entering since the Scale sidegrade? (from PS-0 8.4800W on Core to PS-4 0.0050W)
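The Power_on_Hours column of the self-test log above already shows where the counter started to stall. The sketch below simply subtracts each entry from the one before it; the values are copied from the log (newest first), and nothing else is assumed.

```shell
# Power_on_Hours from the self-test log, entry 0 (newest) to entry 19 (oldest).
hours="2728 2728 2728 2727 2727 2727 2727 2727 2726 2722 2721 2720 2709 2685 2661 2637 2614 2589 2565 2541"

# Build the list of gaps (in hours) between each test and the next older one.
prev=""
gaps=""
for h in $hours; do
  if [ -n "$prev" ]; then
    gaps="$gaps${gaps:+ }$(( prev - h ))"
  fi
  prev=$h
done

echo "$gaps"
# prints: 0 0 1 0 0 0 0 1 4 1 1 11 24 24 24 23 25 24 24
```

The oldest entries are about 24 hours apart, which matches a daily test schedule, while the twelve newest entries together span only 8 counted hours. That pattern fits a counter that has mostly stopped advancing rather than tests that are not running. For what it is worth, the NVMe specification notes that the power-on hours value may exclude time spent in a non-operational power state, so the PS-4 observation is a reasonable thing to chase, though the log alone does not prove it.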

@oxyde Sorry you are having problems. Please run the script from the command line and use -dump emailextra to send me the required data I need to figure out what is going on.

It is odd that upgrading from CORE to SCALE would cause this problem and I’m scratching my head trying to figure out why, especially when you say the temperatures are being reported correctly. Very odd problem. The only issue I can think of is the testing command is not being accepted by the NVMe drive.

AFTER you send me the -dump emailextra would you then run the command nvme device-self-test /dev/nvme0 -s 1 (this command is for SCALE only) and a short test should be running. It will last no longer than 2 minutes. After that please run the script again with the dump command line to send me a second email dump.

When you send me the -dump emailextra you will receive the same email as well. With any luck, the second one will show the test was run. That only means I’m hopeful that the solution should be an easy one.

Thanks in advance for the assistance!

You should have received both dumps. Tell me if you need any other info or anything else.

Got the files, let me digest them. Expect an email reply in a few minutes, once I get my daughter off the phone.