Hello everyone,

I am doing this writeup to get some feedback on my setup, in case I missed something that some of you more experienced TrueNAS folks may spot, and in the process I hope what I have done might help others. I will be giving the full specs of what I am running, the current state, and future plans. I have been in the IT industry for 20+ years at this point, so I have quite a bit of technical knowledge, although TrueNAS Scale was very new to me up until June. My r720s needed replacing and my Synology NAS was getting close to EOL; enter my new setup / project.

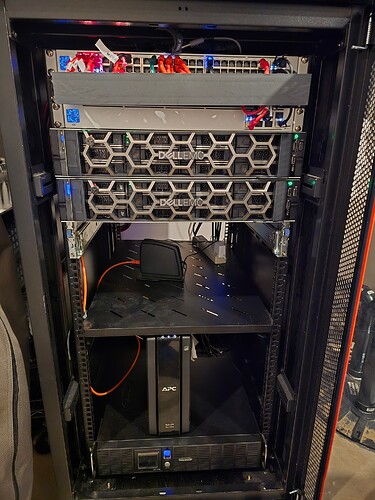

I have two systems on site that are identical and then a 3rd system for offsite backups.

2 x Dell r740xd with the following specs:

2x Intel Xeon Gold 6140

384 GB of RAM (12 x 32GB) – 128GB dedicated to TrueNAS

HBA 330

2 x 1100W PSU (Active/Passive)

Dell BOSS card (ESXi install)

Dell Mellanox ConnectX-4 25Gb SFP28 Dual Port

Dell x520-DA2 (2x10Gb SFP+) + I350 (2x1GbE)

Dell DPWC300 M.2 PCI Adapter (2 x 16GB Intel Optane Drives on this)

4 x Dell G14 0YNRXC MTFDDAK960TDS 960GB SSD (Mirror)

4 x Seagate Exos X22 16TB HDD (Mirror)

The OS on the servers is ESXi, and TrueNAS Scale is installed as a VM on the same BOSS card that ESXi is installed on (the BOSS card holds 2 x WD Blue 1TB M.2 drives as a RAID1 mirror, part WDS100T3B0B). I followed the guide https://www.truenas.com/blog/yes-you-can-virtualize-freenas/ to do this. The HBA, as you would expect, is passed through to the TrueNAS VM.

The SSDs serve as mirrored iSCSI storage served up by TrueNAS to ESXi, and the majority of my VMs run from it. One of the Optane drives acts as a SLOG for this pool (note: to set this up, you need to pass the drive through to TrueNAS). I have pfSense running on the default datastore on the ESXi install drive and set to boot before TrueNAS, along with Nakivo (backup appliance), so if for some reason TrueNAS is down, I still have basic network function and restore capability. The 4 mirrored HDDs (expanding to 6, maybe) are carved up mostly as SMB shares, plus an NFS share and one more large iSCSI target that serves as the Nakivo backup repository for all my VMs. The other Optane drive acts as a SLOG for this pool, mostly because of the backups. In the coming months, I am planning to ditch the Arlo cameras we have, put some more HDDs in the server, and host my own NVR setup once I can find a mixture of WiFi and PoE cameras that will work with BlueIris or something similar (any feedback or options you can provide on this would be welcome).
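For anyone sizing something similar, here is a rough sketch of the raw capacity math for the HDD pool at 4 drives versus 6. It assumes the drives are arranged as striped 2-way mirror vdevs, which is an assumption made for the example, and the function name and parameters are purely illustrative. Real usable space will be lower once ZFS overhead and free-space headroom are taken into account.

```python
def striped_mirror_capacity_tb(drive_count, drive_tb, mirror_width=2):
    """Raw capacity of a pool built from striped mirror vdevs.

    Assumption: every vdev is a mirror of `mirror_width` identical drives,
    so each vdev contributes the capacity of a single drive.
    """
    if drive_count % mirror_width != 0:
        raise ValueError("drive_count must be a multiple of mirror_width")
    vdev_count = drive_count // mirror_width
    return vdev_count * drive_tb


# Current layout: 4 x 16TB drives.
current_tb = striped_mirror_capacity_tb(4, 16)
# Possible expansion: 6 x 16TB drives.
expanded_tb = striped_mirror_capacity_tb(6, 16)

print(f"4 drives: {current_tb} TB raw, 6 drives: {expanded_tb} TB raw")
```

Under that assumption, each extra pair of drives adds one more mirror vdev, so capacity grows by one drive's worth per pair.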

The second server is set up exactly the same, except there is a Nakivo Transporter running from the default datastore in case I ever need to pull a backup from it locally if my main server goes down. All VMs are also replicated to the second local server. Since this is the prod setup for the entire house, I need redundancy (HomeAssistant, vCenter, Plex, some server OSes hosting various apps/services, and a few other appliances like PowerPanel Remote).

On the main TrueNAS Scale install, I have Periodic Snapshot tasks set up to run twice a day, with retention times that vary depending on the need, and Replication tasks that replicate all of these twice daily to the second on-site server and to the offsite TrueNAS server. The two on-site servers are directly connected to each other via the 25Gb Mellanox cards. One port is used for vMotion; the other lets TrueNAS and Nakivo talk to each other and bypass the switch for their backups.
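To make the retention idea a bit more concrete, here is a minimal sketch of how twice-daily snapshots and a retention window interact. This is not how TrueNAS implements it internally; the function name, the dates, and the one-week retention are all made up for illustration.

```python
from datetime import datetime, timedelta


def expired_snapshots(snapshot_times, retention, now):
    """Return the snapshot timestamps that are older than the retention window."""
    cutoff = now - retention
    return [t for t in snapshot_times if t < cutoff]


# Hypothetical schedule: one snapshot every 12 hours, 20 snapshots in total.
now = datetime(2024, 1, 11, 12, 0)
snaps = [now - timedelta(hours=12 * i) for i in range(20)]

# Hypothetical retention of one week for this dataset.
old = expired_snapshots(snaps, timedelta(weeks=1), now)
kept = len(snaps) - len(old)

print(f"{kept} kept, {len(old)} past retention")
```

With two snapshots a day, a one-week retention settles at roughly 14 to 15 snapshots per dataset, which is handy to know when estimating how much the replication targets need to hold.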

The offsite server is a Dell T440 (8-bay, 3.5-inch) set up the same as the others, except it has 256GB of RAM and a pair of Silver CPUs (I may remove one to save power as it's not doing much), and it does not have the Mellanox cards. I put an x520 in the T440 so that when I did my initial data backup to it, things would move along quickly. The T440 connects back to home via a site-to-site OpenVPN server and also acts as a backup VPN server for us when we are travelling (all traffic is forced through it as I don’t trust public Wi-Fi), in case the main server goes down for some reason when we are not home.

As some fun additional info, my switch is a Unifi Pro Max 48 PoE with 4 x 10Gb SFP+ ports. Each server takes up one port there, and a third port goes to an 8-port Unifi Aggregation Switch. Two ports on the aggregation switch go back to the servers (one each), as I have the ISP coming into the aggregation switch, and those connecting ports are on their own ISP vSwitch.

The production servers are connected to a CyberPower rack UPS (OR1500LCDRT2U) that has an RMCARD205 in it; the switches and lab server are plugged into a CyberPower CP1500PFCLCD UPS (I have some PoE APs around, so if we have a power blip, I like it all to remain up). Because of the UPSes, I am running SLOG devices that do NOT have PLP (power-loss protection). The hosts are both set up so that when the power goes out and the remaining runtime drops to a certain amount, they gracefully shut down the VMs in a specific order in case I am not home, and then on power restore, boot back up and turn the VMs on in a specific order.
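The ordered shutdown and startup logic can be sketched roughly like this. It is only an illustration of the idea, not the actual ESXi or PowerPanel configuration: the VM order numbers, the 10 minute threshold, and the choice to power on in the reverse of the shutdown order are all assumptions for the example.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    shutdown_order: int  # lower number shuts down first (hypothetical values)


def shutdown_plan(vms, runtime_minutes, threshold_minutes):
    """Return VM names in shutdown order, or [] while runtime is above the threshold."""
    if runtime_minutes > threshold_minutes:
        return []
    return [vm.name for vm in sorted(vms, key=lambda v: v.shutdown_order)]


def startup_plan(vms):
    """Assumption: power-on order is the reverse of the shutdown order."""
    ordered = sorted(vms, key=lambda v: v.shutdown_order, reverse=True)
    return [vm.name for vm in ordered]


vms = [
    VM("pfSense", 4),        # network goes down last and comes up first
    VM("TrueNAS", 3),        # storage outlives the VMs that depend on it
    VM("Plex", 1),
    VM("HomeAssistant", 2),
]

print(shutdown_plan(vms, runtime_minutes=25, threshold_minutes=10))
print(shutdown_plan(vms, runtime_minutes=8, threshold_minutes=10))
print(startup_plan(vms))
```

The point of the ordering is that anything living on the iSCSI datastore should be down before TrueNAS goes away, and TrueNAS should be back before those VMs are powered on again.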

A note on my pfSense setup: because I have a VLAN on the aggregation switch for the ISP (it's capable of a 10Gb connection) and each host has a connection to it, I can vMotion between the servers when doing updates and do not experience even a blip. This is why I did not set up HA mode (that and the replica, plus this is already overkill).

As a side note, I also have a Dell r720xd server that I will be using as a lab server; I just haven’t popped it in the rack yet. I do not want to mess with the production stuff too much.

So that is it! Feedback appreciated (I know it's overkill, so thanks lol). If you have any questions about this setup, how I did some of it, networking questions, etc., feel free to ask.


I’ve also posted this over on Reddit homelab, so if you think you are having déjà vu, you are not.