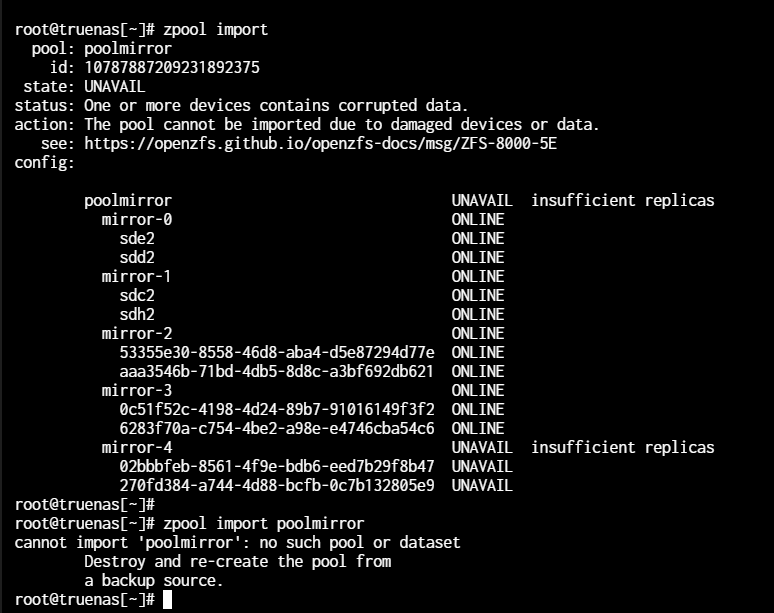

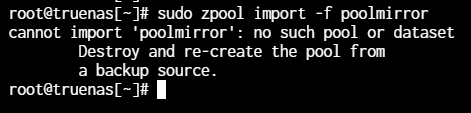

Summary as I see it:

-

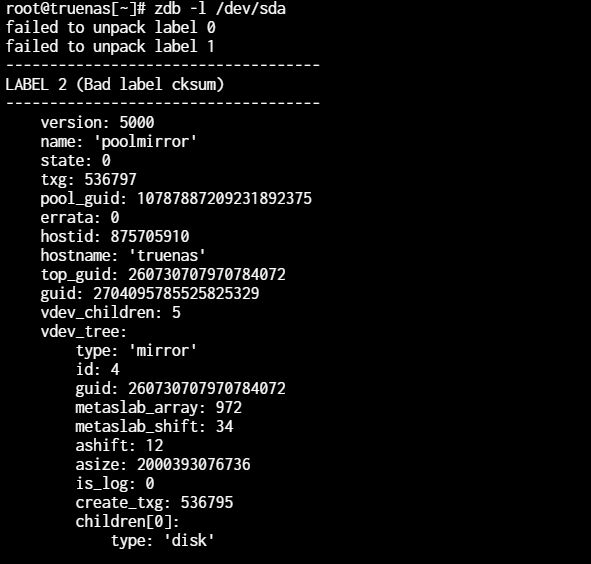

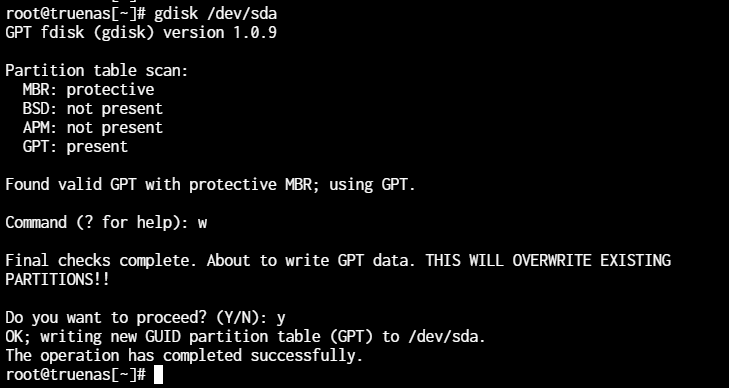

The GPT partition table seems to have entries for the ZFS partitions on /dev/sda and /dev/sdh but seems to have lost the partition types and partuuids.

- The ZFS labels in /dev/sda1 and /dev/sdh1 appear to be OK, and they give us the PARTUUIDs that should exist, but AFAICS they don't tell us which PARTUUID belongs to which partition.

- The wipefs output does show a magic string at offset 0x200. On a disk with 512-byte sectors that is LBA 1, which normally holds the primary GPT header rather than the start of the ZFS partition (my own output below reports it as `gpt`). I don't know exactly what the output should look like, but for comparison here is the output from one of my disks. Yours appears to be missing all the other magic strings you might expect, and I have no idea whether this means the partition contents are gone or not.

```
sudo wipefs --no-act -J /dev/sda
```

```json
{
   "signatures": [
      {
         "device": "sda",
         "offset": "0x3f000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x3e000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x3d000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x3c000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x3b000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x3a000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x39000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x38000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x37000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x36000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x35000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x34000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x33000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x32000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x31000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x30000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x2f000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x2e000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x2d000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x2c000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x2b000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x2a000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x29000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x28000",
         "type": "zfs_member",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x200",
         "type": "gpt",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x3a3817d5e00",
         "type": "gpt",
         "uuid": null,
         "label": null
      },{
         "device": "sda",
         "offset": "0x1fe",
         "type": "PMBR",
         "uuid": null,
         "label": null
      }
   ]
}
```
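
When comparing wipefs output like the one above, it can help to turn the hex offsets into sector numbers. Below is a minimal Python sketch, assuming 512-byte logical sectors (a 4Kn disk would need 4096). On such a disk the `PMBR` hit at 0x1fe falls in LBA 0, the `gpt` hit at 0x200 is LBA 1 (the primary GPT header), and the second `gpt` hit is the backup header at the end of the disk. `summarise_wipefs` is a hypothetical helper name, not part of any tool.

```python
import json
from collections import Counter

# ASSUMPTION: 512-byte logical sectors. A 4Kn disk would need 4096 here.
SECTOR_SIZE = 512


def summarise_wipefs(json_text, sector_size=SECTOR_SIZE):
    """Summarise `wipefs --no-act -J` output (hypothetical helper).

    Returns the number of hits per signature type, plus (type, byte offset, LBA)
    for every non-ZFS signature so it is easy to see where each one sits.
    """
    signatures = json.loads(json_text)["signatures"]
    counts = Counter(sig["type"] for sig in signatures)
    placements = []
    for sig in signatures:
        if sig["type"] == "zfs_member":
            continue  # wipefs may list many zfs_member hits per device; just count them
        offset = int(sig["offset"], 16)  # offsets are hex strings such as "0x200"
        placements.append((sig["type"], offset, offset // sector_size))
    return {"counts": dict(counts), "placements": placements}


if __name__ == "__main__":
    sample = """{"signatures": [
      {"device": "sda", "offset": "0x3f000", "type": "zfs_member", "uuid": null, "label": null},
      {"device": "sda", "offset": "0x200", "type": "gpt", "uuid": null, "label": null},
      {"device": "sda", "offset": "0x3a3817d5e00", "type": "gpt", "uuid": null, "label": null},
      {"device": "sda", "offset": "0x1fe", "type": "PMBR", "uuid": null, "label": null}
    ]}"""
    summary = summarise_wipefs(sample)
    print(summary["counts"])
    for sig_type, offset, lba in summary["placements"]:
        print(f"{sig_type:5} offset {offset:#x} -> LBA {lba}")
```

Fed the full output of `wipefs --no-act -J` for a disk, this shows at a glance whether any `zfs_member` hits exist at all and where the partition-table structures sit.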

- We know what the partition type should be and we know what the PARTUUIDs are, so if we can work out which way round they go, we can presumably use a partitioning utility to put them back in place, then try to import the pool and see whether it works.

Perhaps @HoneyBadger will be able to give better, more expert insight. (But it is the weekend, and as a TrueNAS employee he may only respond during working hours.)