Hey All,

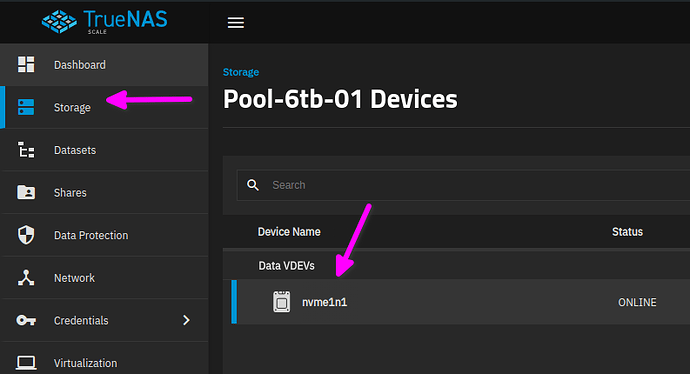

I'm building out my new TrueNAS Scale box. I'm sure it's something I'm missing, but if not… annoying?

I set up a single NVMe to run some speed tests, but now I can't delete that single vdev. (No shares, nothing at all associated with it.) I also rebooted, same thing…

I just get errors when I try to Offline or Remove it…

If I try to Remove:

Remove device

Error: [EZFS_NOSPC] cannot remove /dev/disk/by-partuuid/32898099-8e54-48f2-8dc9-a9aa0673ecfc: out of space

If I try to Offline:

CallError

[EZFS_NOREPLICAS] cannot offline /dev/disk/by-partuuid/32898099-8e54-48f2-8dc9-a9aa0673ecfc: no valid replicas

More info:

    Error: concurrent.futures.process._RemoteTraceback:
    """
    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 69, in __zfs_vdev_operation
        with libzfs.ZFS() as zfs:
      File "libzfs.pyx", line 529, in libzfs.ZFS.__exit__
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 74, in __zfs_vdev_operation
        op(target, *args)
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 107, in <lambda>
        self.__zfs_vdev_operation(name, label, lambda target: target.offline())
                                                              ^^^^^^^^^^^^^^^^
      File "libzfs.pyx", line 2398, in libzfs.ZFSVdev.offline
    libzfs.ZFSException: cannot offline /dev/disk/by-partuuid/32898099-8e54-48f2-8dc9-a9aa0673ecfc: no valid replicas

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/usr/lib/python3.11/concurrent/futures/process.py", line 256, in _process_worker
        r = call_item.fn(*call_item.args, **call_item.kwargs)
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 112, in main_worker
        res = MIDDLEWARE._run(*call_args)
              ^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 46, in _run
        return self._call(name, serviceobj, methodobj, args, job=job)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 34, in _call
        with Client(f'ws+unix://{MIDDLEWARE_RUN_DIR}/middlewared-internal.sock', py_exceptions=True) as c:
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call
        return methodobj(*params)
               ^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 191, in nf
        return func(*args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 107, in offline
        self.__zfs_vdev_operation(name, label, lambda target: target.offline())
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 76, in __zfs_vdev_operation
        raise CallError(str(e), e.code)
    middlewared.service_exception.CallError: [EZFS_NOREPLICAS] cannot offline /dev/disk/by-partuuid/32898099-8e54-48f2-8dc9-a9aa0673ecfc: no valid replicas
    """

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 198, in call_method
        result = await self.middleware.call_with_audit(message['method'], serviceobj, methodobj, params, self)
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1466, in call_with_audit
        result = await self._call(method, serviceobj, methodobj, params, app=app,
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1417, in _call
        return await methodobj(*prepared_call.args)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 187, in nf
        return await func(*args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 47, in nf
        res = await f(*args, **kwargs)
              ^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/pool_disk_operations.py", line 101, in offline
        await self.middleware.call('zfs.pool.offline', pool['name'], found[1]['guid'])
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1564, in call
        return await self._call(
               ^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1425, in _call
        return await self._call_worker(name, *prepared_call.args)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1431, in _call_worker
        return await self.run_in_proc(main_worker, name, args, job)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1337, in run_in_proc
        return await self.run_in_executor(self.__procpool, method, *args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1321, in run_in_executor
        return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    middlewared.service_exception.CallError: [EZFS_NOREPLICAS] cannot offline /dev/disk/by-partuuid/32898099-8e54-48f2-8dc9-a9aa0673ecfc: no valid replicas

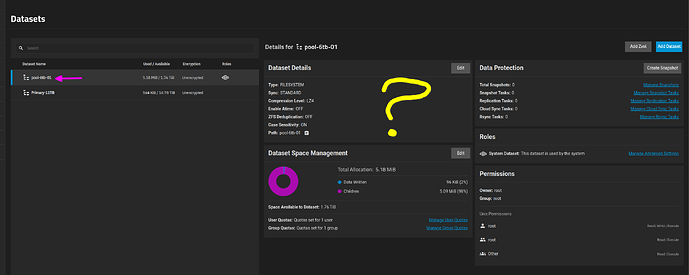

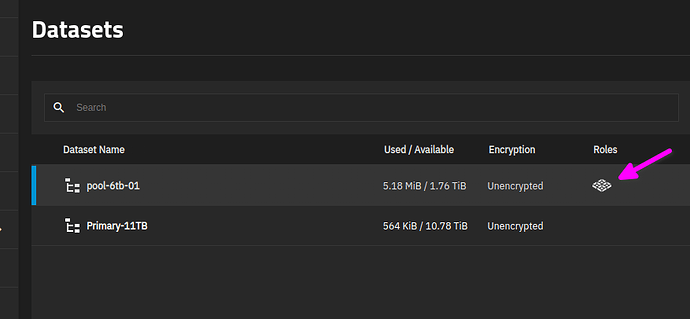

So I figure it's because it still has a dataset on it, but I see no option there to remove the dataset. Edit doesn't show me any delete or remove options either.