I think I am having the same issue. I already noticed it on Cobia 23.10.2 and hoped it would go away after upgrading to Dragonfish 24.04.1.1.

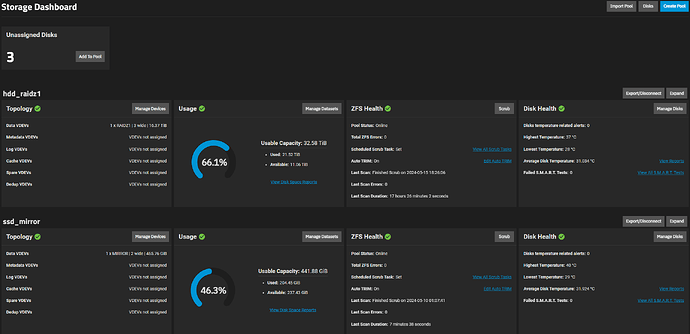

My NAS has two data pools (3x18TB HDDs in RAIDZ1 + 2x500GB SSDs in a mirror), plus the boot pool.

The pools are healthy, as shown by `zpool status`:

    # zpool status
      pool: boot-pool
     state: ONLINE
    status: Some supported and requested features are not enabled on the pool.
            The pool can still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(7) for details.
      scan: scrub repaired 0B in 00:00:39 with 0 errors on Sat Jun 1 03:45:41 2024
    config:

            NAME         STATE     READ WRITE CKSUM
            boot-pool    ONLINE       0     0     0
              nvme0n1p3  ONLINE       0     0     0

    errors: No known data errors

      pool: hdd_raidz1
     state: ONLINE
      scan: scrub repaired 0B in 17:26:02 with 0 errors on Wed May 15 18:26:06 2024
    config:

            NAME                                      STATE     READ WRITE CKSUM
            hdd_raidz1                                ONLINE       0     0     0
              raidz1-0                                ONLINE       0     0     0
                ata-ST18000NM003D-3DL103_ZVTB4R5Q     ONLINE       0     0     0
                ata-TOSHIBA_MG09ACA18TE_6260A3SCFG0H  ONLINE       0     0     0
                ata-TOSHIBA_MG09ACA18TE_6260A1VKFG0H  ONLINE       0     0     0

    errors: No known data errors

      pool: ssd_mirror
     state: ONLINE
      scan: scrub repaired 0B in 00:07:38 with 0 errors on Fri May 10 01:07:41 2024
    config:

            NAME                                      STATE     READ WRITE CKSUM
            ssd_mirror                                ONLINE       0     0     0
              mirror-0                                ONLINE       0     0     0
                fb3269db-3bcc-431c-9714-a6ad79ea3cf1  ONLINE       0     0     0
                a7b3ea94-9044-42ea-bc18-97caa4390d1b  ONLINE       0     0     0

    errors: No known data errors

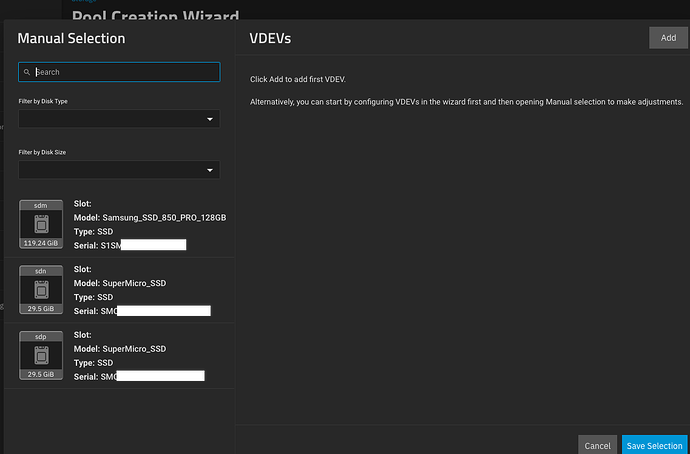

The output of `lsblk -o name,model,serial,partuuid,fstype,size` also looks normal to me:

    # lsblk -o name,model,serial,partuuid,fstype,size
    NAME          MODEL                  SERIAL             PARTUUID                             FSTYPE       SIZE
    sda           CT500MX500SSD1         2104E4EC9620                                                       465.8G
    |-sda1                                                  1b25b5b6-61c6-443a-82e8-7cc99f10e0f4                6G
    `-sda2                                                  a7b3ea94-9044-42ea-bc18-97caa4390d1b zfs_member 459.8G
    sdb           ST18000NM003D-3DL103   ZVTB4R5Q                                                zfs_member  16.4T
    sdc           CT500MX500SSD1         1843E1D3333B                                                       465.8G
    |-sdc1                                                  856debac-b14c-4999-8468-d3fdfcc1b5a9                6G
    `-sdc2                                                  fb3269db-3bcc-431c-9714-a6ad79ea3cf1 zfs_member 459.8G
    sdd           TOSHIBA MG09ACA18TE    6260A1VKFG0H                                            zfs_member  16.4T
    sde           TOSHIBA MG09ACA18TE    6260A3SCFG0H                                            zfs_member  16.4T
    zd0                                                                                                        60G
    zd16                                                                                                       60G
    zd32                                                                                                      100G
    zd48                                                                                                      100G
    nvme0n1       Patriot M.2 P300 128GB P300ADBB2303082206                                                 119.2G
    |-nvme0n1p1                                             6fa3c15e-82d0-4157-ac41-b23f3aee4ea6                1M
    |-nvme0n1p2                                             c50ddbbf-59e5-483d-9cc4-e85e9ce80f2d vfat         512M
    |-nvme0n1p3                                             d761a7ec-041b-4ad3-b105-71aa31df6ead zfs_member 102.7G
    `-nvme0n1p4                                             86edab8d-f33d-4ba6-bf24-dc5887eae886               16G
      `-nvme0n1p4                                                                                swap          16G
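
For anyone who wants to cross-check the pool members against the block devices, here is a minimal Python sketch that maps each PARTUUID to its device name. It assumes the JSON produced by `lsblk -J -o name,partuuid` (a top-level `blockdevices` list with nested `children`). The function name `partuuid_map` and the trimmed `SAMPLE` data are only illustrations built from the output above, not anything TrueNAS itself provides.

```python
import json


def partuuid_map(lsblk_json: str) -> dict:
    """Return {PARTUUID: device name} from `lsblk -J -o name,partuuid` JSON text."""
    mapping = {}

    def walk(devices):
        for dev in devices:
            uuid = dev.get("partuuid")
            if uuid:  # whole disks and zvols have no PARTUUID
                mapping[uuid] = dev["name"]
            # Partitions and stacked devices are listed under "children"
            walk(dev.get("children") or [])

    walk(json.loads(lsblk_json).get("blockdevices", []))
    return mapping


# Hypothetical sample, trimmed from the lsblk output in this post
SAMPLE = json.dumps({
    "blockdevices": [
        {"name": "sda", "partuuid": None, "children": [
            {"name": "sda1", "partuuid": "1b25b5b6-61c6-443a-82e8-7cc99f10e0f4"},
            {"name": "sda2", "partuuid": "a7b3ea94-9044-42ea-bc18-97caa4390d1b"},
        ]},
        {"name": "sdb", "partuuid": None},
        {"name": "sdc", "partuuid": None, "children": [
            {"name": "sdc1", "partuuid": "856debac-b14c-4999-8468-d3fdfcc1b5a9"},
            {"name": "sdc2", "partuuid": "fb3269db-3bcc-431c-9714-a6ad79ea3cf1"},
        ]},
    ]
})

# The two members that zpool status lists under ssd_mirror
SSD_MIRROR_MEMBERS = [
    "fb3269db-3bcc-431c-9714-a6ad79ea3cf1",
    "a7b3ea94-9044-42ea-bc18-97caa4390d1b",
]

if __name__ == "__main__":
    devices = partuuid_map(SAMPLE)
    for member in SSD_MIRROR_MEMBERS:
        print(member, "->", devices.get(member, "not found"))
```

With the values from my system, both `ssd_mirror` members resolve to the second partition of each SSD (`sdc2` and `sda2`), which is why I consider the layout consistent.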

Any idea why the GUI would show the drives that way?