I know… there’s no such thing, but I need to create it because I need the best write speed I can get.

I have 11 x 12TB disks. I want to put 5 disks in a stripe, mirror that, and keep 1 spare. I can’t figure it out.

I am running SCALE and hoping that someone can give me step-by-step instructions.

I have tried to create 2 stripes, but then I can’t find the option to mirror them.

I have tried to create 2 sets of mirrors (2 drives each), but there is no option to then stripe them.

I must be missing something, but I can’t find it. Please help.

Multiple data vdevs in a pool are striped automatically.

You can create five 2-way mirror vdevs, and they will already be striped. You can then use the remaining disk as a spare.
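To picture what "striped automatically" means, here is a toy model in Python. It assumes strict round-robin placement purely for illustration (real ZFS allocation weighs free space and other factors per vdev), and the disk names are hypothetical: each block goes to one mirror vdev (the stripe), and both disks in that vdev get a copy (the mirror).

```python
from typing import Dict, List

def mirror_vdevs(disks: List[str], width: int = 2) -> List[List[str]]:
    """Group disks into mirror vdevs of the given width."""
    if len(disks) % width:
        raise ValueError("disk count must be a multiple of the mirror width")
    return [disks[i:i + width] for i in range(0, len(disks), width)]

def place_blocks(num_blocks: int, vdevs: List[List[str]]) -> Dict[str, List[int]]:
    """Return which block numbers land on each disk (toy round-robin model)."""
    placement: Dict[str, List[int]] = {d: [] for v in vdevs for d in v}
    for block in range(num_blocks):
        vdev = vdevs[block % len(vdevs)]  # stripe: choose the next vdev
        for disk in vdev:                 # mirror: every disk in that vdev gets a copy
            placement[disk].append(block)
    return placement

disks = [f"disk{i}" for i in range(1, 11)]  # 10 hypothetical data disks
vdevs = mirror_vdevs(disks, width=2)         # five 2-way mirrors
layout = place_blocks(10, vdevs)
print(vdevs[0], layout["disk1"], layout["disk2"])  # ['disk1', 'disk2'] [0, 5] [0, 5]
```

Every block exists on exactly two disks, and consecutive blocks land on different vdevs, which is the "stripe of mirrors" the pool builds for you.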

Thanks… but I don’t know the steps.

- Create pool.

- Choose Layout - STRIPE

- Press Add on top right.

- Drag 5 disks into the window on the right.

- Press Add on top right again

- Drag the next 5 disks.

- Return to previous screen

- Add the spare disk

- Create the pool

The issue is that it shows me a 120TB array. But that means I have one giant stripe. I wanted 2 stripes of 60TB, mirrored.
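The 120TB figure is a good clue that the layout is wrong: it is simply 10 disks × 12TB with no redundancy. A rough sketch of the arithmetic (ignoring ZFS overhead and TB vs TiB; the spare adds no capacity):

```python
DISK_TB = 12  # disk size from the thread

def stripe_tb(n_disks: int) -> int:
    # Plain stripe: every disk holds unique data, no redundancy at all.
    return n_disks * DISK_TB

def mirrors_tb(n_disks: int, width: int) -> int:
    # Each mirror vdev stores one disk's worth of unique data.
    if n_disks % width:
        raise ValueError("disk count must be a multiple of the mirror width")
    return (n_disks // width) * DISK_TB

print(stripe_tb(10))      # 120
print(mirrors_tb(10, 2))  # 60
```

So a correctly built pool of five 2-way mirrors should report roughly 60TB, the same usable space as the "two 60TB stripes, mirrored" that was originally wanted.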

Sorry for being a pain but totally lost on this interface.

You’re using stripe layout, so you’re getting a stripe.

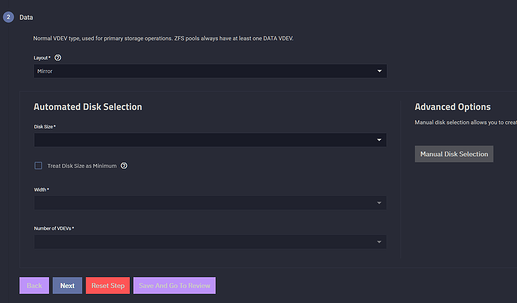

Layout: Mirror

Width: 2

Number of VDEVs: 5
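For reference, those three settings (plus one spare) describe the same topology as a `zpool create` line with five `mirror` groups and a `spare` group. The sketch below only builds that command string to show the resulting structure; the pool name `tank` and disk names `sda`…`sdk` are hypothetical, and on SCALE you should create the pool in the web UI rather than running this by hand.

```python
from typing import List

def zpool_create_cmd(pool: str, disks: List[str], width: int,
                     n_vdevs: int, n_spares: int = 0) -> List[str]:
    """Build (but do not run) a zpool create command for N mirror vdevs + spares."""
    needed = width * n_vdevs + n_spares
    if len(disks) < needed:
        raise ValueError(f"need {needed} disks, got {len(disks)}")
    cmd = ["zpool", "create", pool]
    for i in range(n_vdevs):
        # Each "mirror" keyword starts a new vdev; the pool stripes across them.
        cmd += ["mirror", *disks[i * width:(i + 1) * width]]
    start = width * n_vdevs
    if n_spares:
        cmd += ["spare", *disks[start:start + n_spares]]
    return cmd

disks = [f"sd{c}" for c in "abcdefghijk"]  # 11 hypothetical disks
print(" ".join(zpool_create_cmd("tank", disks, width=2, n_vdevs=5, n_spares=1)))
# zpool create tank mirror sda sdb mirror sdc sdd mirror sde sdf mirror sdg sdh mirror sdi sdj spare sdk
```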

Unfortunately, I don’t have spare disks to put together a proper walkthrough; let me know if you need any further clarification.

I see now…just a different way for me.

- Create a new pool.

- Choose Mirror.

- Choose manual disk layout.

- Press Add (top right) to create a vdev.

- Drag 2 disks into it.

- Press Add (top right) again.

- Drag 2 more disks into it.

- Repeat to create as many vdevs as you need.

So in my case I did this 5 times (pressing the Add button on the manual disk selection screen).
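When building the vdevs by hand, it is easy to drop a disk into the wrong vdev. A small sketch of the checks worth doing mentally before pressing Create (disk names are hypothetical): every vdev has exactly 2 disks, no disk is used twice, and whatever is left over becomes the spare.

```python
from typing import List, Set

def check_manual_layout(all_disks: Set[str], vdevs: List[List[str]],
                        width: int = 2) -> Set[str]:
    """Validate a hand-built mirror layout and return the unused disks."""
    used: Set[str] = set()
    for n, vdev in enumerate(vdevs, start=1):
        if len(vdev) != width:
            raise ValueError(f"vdev {n} has {len(vdev)} disks, expected {width}")
        for disk in vdev:
            if disk in used or disk not in all_disks:
                raise ValueError(f"vdev {n}: {disk} is reused or unknown")
            used.add(disk)
    return all_disks - used  # candidates for the spare

disks = {f"disk{i}" for i in range(1, 12)}                        # 11 disks
manual = [[f"disk{2*i+1}", f"disk{2*i+2}"] for i in range(5)]     # Add pressed 5 times
print(check_manual_layout(disks, manual))  # {'disk11'}
```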

Thanks so much!!!

Let’s start from first principles…

You say that you want the best write speed possible. But this depends on:

- Whether you want the best write speed for relatively small amounts of data at a time (say, a few GB) or for large quantities of data; and

- Whether this is transactional data or not, i.e. whether the app sending the data needs to know when it is safely stored on disk (because something else depends on it), or whether, in the event of a crash or power failure, you can afford to lose the last data sent because you can resend it.

The reasons that this is important:

- For a few GB of data at a time, the fastest writes as seen by, e.g., a client PC will be asynchronous, because the writes are held in memory and transferred to disk later. Writes are then effectively limited by network speed.

- For bulk writes (more than can be held in memory), writes start to be limited by how fast they can be written to disk. Asynchronous writes are still faster, because synchronous writes are written twice: once to the ZIL and a second time to the main data. Either way, these will be limited to HDD speeds.

- If you need transactional security, i.e. synchronous writes, then you should definitely be thinking about a mirrored NVMe or SSD SLOG, so that the ZIL writes go there and only the main data writes go to the HDDs.

- I know that the traditional thinking is that mirrors are faster than RAIDZ2 for writes, but I am actually not sure how true that is for a fixed number of drives.

In essence, for large sequential writes, the maximum write throughput is roughly the number of drives holding unique data × the write speed per drive.

With mirrors (5 pairs), you will actually have the net write throughput of 5 drives, because every block is written to both disks in a pair.

With an 11-wide RAIDZ3, you will have the net write throughput of 8 drives (11 minus 3 for parity). Yes, there is some small CPU overhead for calculating the parity blocks, but this should be more than offset by having 3 extra drives of write throughput. (And of course you get 60% more usable disk space: about 96TB instead of 60TB.)
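The argument above as a back-of-the-envelope model. This is a sketch under stated assumptions: every drive streams at the same hypothetical 200 MB/s, and it ignores parity CPU cost, RAIDZ padding, fragmentation, and small-block/random I/O (where a RAIDZ vdev behaves much more like a single drive).

```python
DISK_TB = 12          # disk size from the thread
PER_DRIVE_MB_S = 200  # hypothetical sustained write speed per HDD

def layout(data_drives: int) -> dict:
    # Net sequential throughput and space both scale with drives holding unique data.
    return {"data_drives": data_drives,
            "usable_tb": data_drives * DISK_TB,
            "net_write_mb_s": data_drives * PER_DRIVE_MB_S}

def mirrors(n_pairs: int) -> dict:
    # Each 2-way mirror writes every block to both disks, so it nets one drive.
    return layout(n_pairs)

def raidz(width: int, parity: int) -> dict:
    # Each RAIDZ stripe spends `parity` drives' worth of space and bandwidth on parity.
    return layout(width - parity)

m = mirrors(5)      # 5 x 2-way mirrors + 1 spare
z3 = raidz(11, 3)   # one 11-wide RAIDZ3, no spare
print(m["net_write_mb_s"], z3["net_write_mb_s"], z3["usable_tb"] / m["usable_tb"])  # 1000 1600 1.6
```

The real numbers will differ, which is exactly why testing both layouts is worthwhile.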

So, personally, I would create a mirror set (5 mirror pairs, one spare) and test the write performance. Then I would reconfigure it as an 11-wide RAIDZ3 (no spare, because triple parity gives us enough redundancy to wait for a physical replacement) and test the write performance again. (And by testing, I mean writing at least a TB of data.)
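A minimal sequential-write test could look like the sketch below (dedicated tools such as fio are the more usual choice). It writes random data, so ZFS compression cannot shrink it, and calls `fsync` at the end so the timing includes flushing to disk rather than just filling RAM. The path in the usage comment is hypothetical; point it at a dataset on the pool under test.

```python
import os
import time

def write_benchmark(path: str, total_bytes: int, chunk_bytes: int = 1 << 20) -> float:
    """Write total_bytes of incompressible data to path; return throughput in MB/s."""
    chunk = os.urandom(chunk_bytes)  # one random chunk, reused for every write
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_bytes, total_bytes - written)
            f.write(chunk[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # make sure the data has actually reached the pool
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6

# e.g. print(write_benchmark("/mnt/tank/bench.bin", 1_000_000_000_000))  # ~1 TB
```

Delete the test file afterwards, and run the same size on both layouts so the results are comparable.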

If you need synchronous writes, then you definitely need a mirrored NVMe SLOG. If not, make sure your writes are actually asynchronous (check the dataset’s `sync` setting).
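One way to check what a dataset is doing, assuming the `zfs` CLI is available and you have permission to run it: `zfs get -H -o value sync <dataset>` prints just the value (`standard`, `always` or `disabled`). The dataset name in the usage comment is hypothetical.

```python
import subprocess

VALID = {"standard", "always", "disabled"}

def parse_sync(output: str) -> str:
    """Validate the single value printed by `zfs get -H -o value sync`."""
    value = output.strip()
    if value not in VALID:
        raise ValueError(f"unexpected sync value: {value!r}")
    return value

def get_sync(dataset: str) -> str:
    out = subprocess.run(["zfs", "get", "-H", "-o", "value", "sync", dataset],
                         capture_output=True, text=True, check=True).stdout
    return parse_sync(out)

def explain(value: str) -> str:
    return {
        "standard": "writes are synchronous only when the client/app requests it",
        "always": "every write is treated as synchronous (goes through the ZIL)",
        "disabled": "sync requests are ignored; fastest, but recent writes can be lost on a crash",
    }[value]

# e.g. print(explain(get_sync("tank/data")))
```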

I think you should have been able to do it in one step by specifying a width of 2 and 5 vdevs.

The key is you want a stripe of mirrors, not a mirror of stripes.

In ZFS, redundancy comes from the vdev layer, not the pool layer. Striping happens at the pool layer: writes are spread across all available vdevs (the mirrors), and reads then come from wherever the data was written.
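One concrete reason to prefer a stripe of mirrors: enumerate every possible pair of failed disks among 10 and count how often each layout survives. ZFS cannot actually build a mirror of stripes; it is modelled here only for comparison, like a traditional RAID 0+1.

```python
from itertools import combinations

DISKS = range(10)

def stripe_of_mirrors_survives(failed) -> bool:
    # Disks (0,1), (2,3), ... are mirror pairs; the pool dies only if a whole pair dies.
    return all(not ({2 * i, 2 * i + 1} <= set(failed)) for i in range(5))

def mirror_of_stripes_survives(failed) -> bool:
    # Disks 0-4 form stripe A, 5-9 stripe B; any failure kills its whole stripe.
    a_dead = any(d < 5 for d in failed)
    b_dead = any(d >= 5 for d in failed)
    return not (a_dead and b_dead)

pairs = list(combinations(DISKS, 2))
som = sum(stripe_of_mirrors_survives(p) for p in pairs)
mos = sum(mirror_of_stripes_survives(p) for p in pairs)
print(len(pairs), som, mos)  # 45 40 20
```

With two random disk failures, the stripe of mirrors survives 40 of 45 cases, while the mirror of stripes survives only 20.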