–revised post—

It seems I was mistaken about TrueNAS not having a kernel that supports this scenario. Apologies for not thinking to test Dragonfish first.

I tried installing 24.04.0 (24.10 was my first time installing TrueNAS) and found that this scenario works perfectly in the first Dragonfish release.

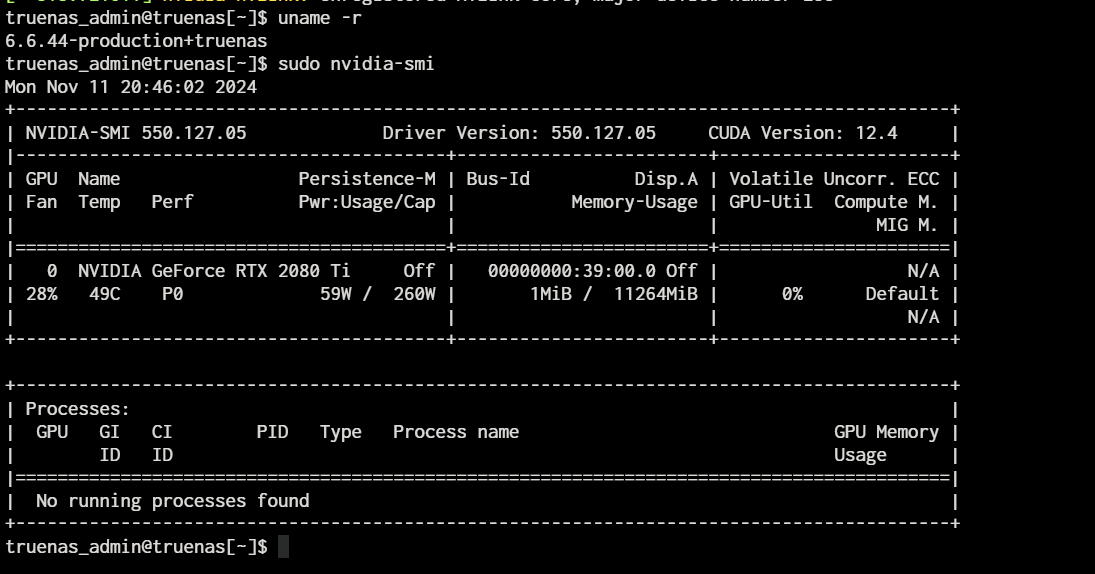

- 6.6.20-production+truenas (Dragonfish 24.04.0): works

- 6.6.44-production+truenas (Electric Eel): doesn’t work
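For anyone adding more data points, here is a minimal Python sketch that tags the running kernel against the two results above. The `KNOWN` table holds only what was observed in this post. The function names are made up for illustration, and any other kernel version is reported as untested rather than guessed.

```python
import platform
import re
from typing import Optional, Tuple

# Only the two kernels tested in this post; everything else is unknown.
KNOWN = {
    (6, 6, 20): "works",
    (6, 6, 44): "fails",
}


def parse_release(release: str) -> Optional[Tuple[int, int, int]]:
    """Extract (major, minor, patch) from e.g. '6.6.20-production+truenas'."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", release)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def classify(release: str) -> str:
    """Return 'works', 'fails', or 'untested' for a kernel release string."""
    version = parse_release(release)
    if version is None:
        return "untested"
    return KNOWN.get(version, "untested")


if __name__ == "__main__":
    running = platform.release()  # same string that `uname -r` prints
    print(running, "->", classify(running))
```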

tl;dr: on Dragonfish my USB4-connected GPU works 100% fine (I can query it with nvidia-smi); on Electric Eel it does not.

On 24.10.1 the NVIDIA driver fails to install because the kernel module does not load:

[ 294.025596] VFIO - User Level meta-driver version: 0.3

[ 294.280088] nvidia-nvlink: Nvlink Core is being initialized, major device number 235

[ 294.285531] nvidia 0000:0f:00.0: Unable to change power state from D3cold to D0, device inaccessible

[ 294.285628] nvidia 0000:0f:00.0: vgaarb: VGA decodes changed: olddecodes=io+mem,decodes=none:owns=none

[ 294.285652] NVRM: The NVIDIA GPU 0000:0f:00.0

NVRM: (PCI ID: 10de:1e07) installed in this system has

NVRM: fallen off the bus and is not responding to commands.

[ 294.285706] nvidia: probe of 0000:0f:00.0 failed with error -1

[ 294.285719] NVRM: The NVIDIA probe routine failed for 1 device(s).

[ 294.285720] NVRM: None of the NVIDIA devices were initialized.

[ 294.285928] nvidia-nvlink: Unregistered Nvlink Core, major device number 235
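If this became a test scenario, the failure above is easy to detect mechanically. The following is a rough Python sketch that scans kernel log lines for the two messages in this log that carry a PCI address. The patterns are copied from the output above, so other driver versions may word them differently. The labels and function name are my own.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# PCI address in domain:bus:device.function form, e.g. 0000:0f:00.0
PCI_ADDR = r"[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]"

# Message patterns taken from the log in this post (assumed stable wording).
PATTERNS = {
    "d3cold": re.compile(
        rf"nvidia ({PCI_ADDR}): Unable to change power state from D3cold to D0"
    ),
    "probe_failed": re.compile(
        rf"nvidia: probe of ({PCI_ADDR}) failed with error (-?\d+)"
    ),
}

SAMPLE = [
    "[ 294.280088] nvidia-nvlink: Nvlink Core is being initialized, major device number 235",
    "[ 294.285531] nvidia 0000:0f:00.0: Unable to change power state from D3cold to D0, device inaccessible",
    "[ 294.285628] nvidia 0000:0f:00.0: vgaarb: VGA decodes changed: olddecodes=io+mem,decodes=none:owns=none",
    "[ 294.285706] nvidia: probe of 0000:0f:00.0 failed with error -1",
]


def scan(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Map each PCI address to the failure labels seen for it, in log order."""
    found: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        for label, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match:
                found[match.group(1)].append(label)
    return dict(found)


if __name__ == "__main__":
    for address, labels in scan(SAMPLE).items():
        print(address, ", ".join(labels))
```

On a real system the lines would come from the kernel log (for example the output of `dmesg`) instead of `SAMPLE`. An empty result only means these two messages were absent, not that the GPU is healthy.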

I moved this post to Feature Requests because it is still a feature request: making this a supported test scenario would have caught the regression.

—original post—

Adding full USB4 support would be a useful inclusion, but it is highly dependent on the kernel version. I am willing to help figure out which LTS kernel versions, if any, this works on, and to compile my own kernels if that would help.

Scenarios where this is useful:

- Connecting USB4-attached GPUs to assist VMs running AI tasks

- Connecting additional high-speed network interfaces (PCIe tunneling)

- Using the software connection manager for 40Gb interconnects between servers (USB4 P2P connections)
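To check whether the kernel sees anything on the USB4/Thunderbolt bus in any of these scenarios, a small Python sketch like the one below can list what the thunderbolt driver exposes in sysfs. It assumes the standard `/sys/bus/thunderbolt/devices` layout and the `vendor_name`, `device_name`, `authorized` and `generation` attributes from the kernel's sysfs ABI documentation. Which of these exist depends on the kernel, so missing attributes are returned as empty strings.

```python
from pathlib import Path
from typing import Dict, List

# Assumed location of the thunderbolt bus in sysfs.
SYSFS_ROOT = Path("/sys/bus/thunderbolt/devices")
ATTRS = ("vendor_name", "device_name", "authorized", "generation")


def read_attr(path: Path) -> str:
    """Read one sysfs attribute, returning '' if it is missing or unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return ""


def list_devices(root: Path = SYSFS_ROOT) -> List[Dict[str, str]]:
    """Return one dict per router/device that exposes a device_name."""
    devices: List[Dict[str, str]] = []
    if not root.is_dir():
        return devices  # driver not loaded or no USB4/Thunderbolt controller
    for entry in sorted(root.iterdir()):
        if not (entry / "device_name").exists():
            continue  # skip entries such as domains that have no device_name
        info = {"id": entry.name}
        for attr in ATTRS:
            info[attr] = read_attr(entry / attr)
        devices.append(info)
    return devices


if __name__ == "__main__":
    for dev in list_devices():
        print(dev)
```

An empty list on a machine with a device plugged in would suggest the problem sits below the GPU driver, in the USB4 or PCIe tunneling layer.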

I understand this is niche. I am not asking anyone to break a leg getting this in, just to include support for these scenarios as upstream kernel features and fixes reach LTS kernels.

A sub-feature request would be to include the intel-tbtools package.