TrueNAS 25.10 now uses the NVIDIA open GPU kernel modules with the 570.172.08 driver. This enables TrueNAS to make use of NVIDIA Blackwell GPUs - the RTX 50-series and RTX PRO Blackwell cards - which many users have requested support for.

The NVIDIA 50-series Blackwell cards require the new open GPU kernel module. Several of NVIDIA’s older GPU generations, including Maxwell, Pascal, and Volta, lack the GPU System Processor (GSP) on their silicon that the open kernel module depends on, so they will no longer function. This includes the GTX 700-series, GTX 900-series, GTX 10-series, the Quadro M-series and P-series, and Tesla M-series and P-series cards.
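To check whether a given card falls on the wrong side of this cutoff, something like the following Python sketch may help. It assumes that Turing (CUDA compute capability 7.5) is the oldest architecture the open kernel modules support, and that the installed `nvidia-smi` is recent enough to offer the `compute_cap` query field. The function names here are hypothetical helpers, not part of TrueNAS.

```python
import subprocess

# Assumption: Turing (compute capability 7.5) is the oldest GSP-equipped
# architecture, so anything below it needs the proprietary module.
MIN_OPEN_MODULE_CC = (7, 5)


def parse_compute_cap(text: str) -> tuple[int, int]:
    """Turn a string such as '6.1' into a comparable (major, minor) tuple."""
    major, minor = text.strip().split(".")
    return int(major), int(minor)


def supports_open_module(compute_cap: str) -> bool:
    """True if the compute capability is at or above the assumed cutoff."""
    return parse_compute_cap(compute_cap) >= MIN_OPEN_MODULE_CC


def parse_smi_line(line: str) -> tuple[str, str]:
    """Split one 'name, compute_cap' CSV row from nvidia-smi."""
    name, cap = line.rsplit(",", 1)
    return name.strip(), cap.strip()


def report() -> None:
    """Print each detected GPU and whether the open module should drive it."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,compute_cap", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    for line in out.splitlines():
        if line.strip():
            name, cap = parse_smi_line(line)
            verdict = "open module OK" if supports_open_module(cap) else "needs proprietary module"
            print(f"{name} (compute capability {cap}): {verdict}")
```

Calling `report()` needs a working driver, so it is best run on the current TrueNAS release before upgrading. A Pascal card such as the Tesla P4 reports 6.1 and would be flagged as needing the proprietary module.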

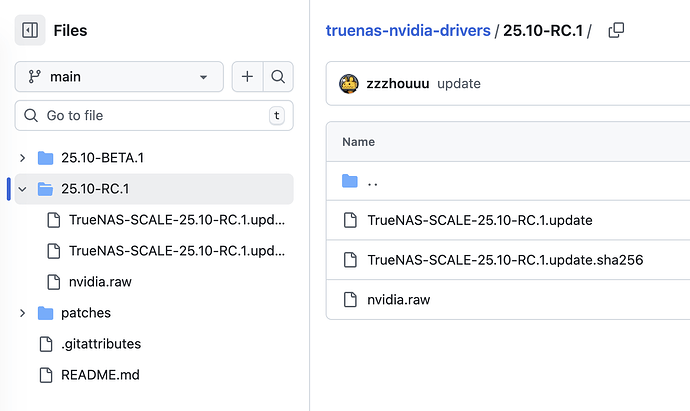

I modified scale-build from the official repository to switch back to the proprietary driver so that these soon-to-be-deprecated cards keep working, and uploaded the compiled driver to truenas-nvidia-drivers.

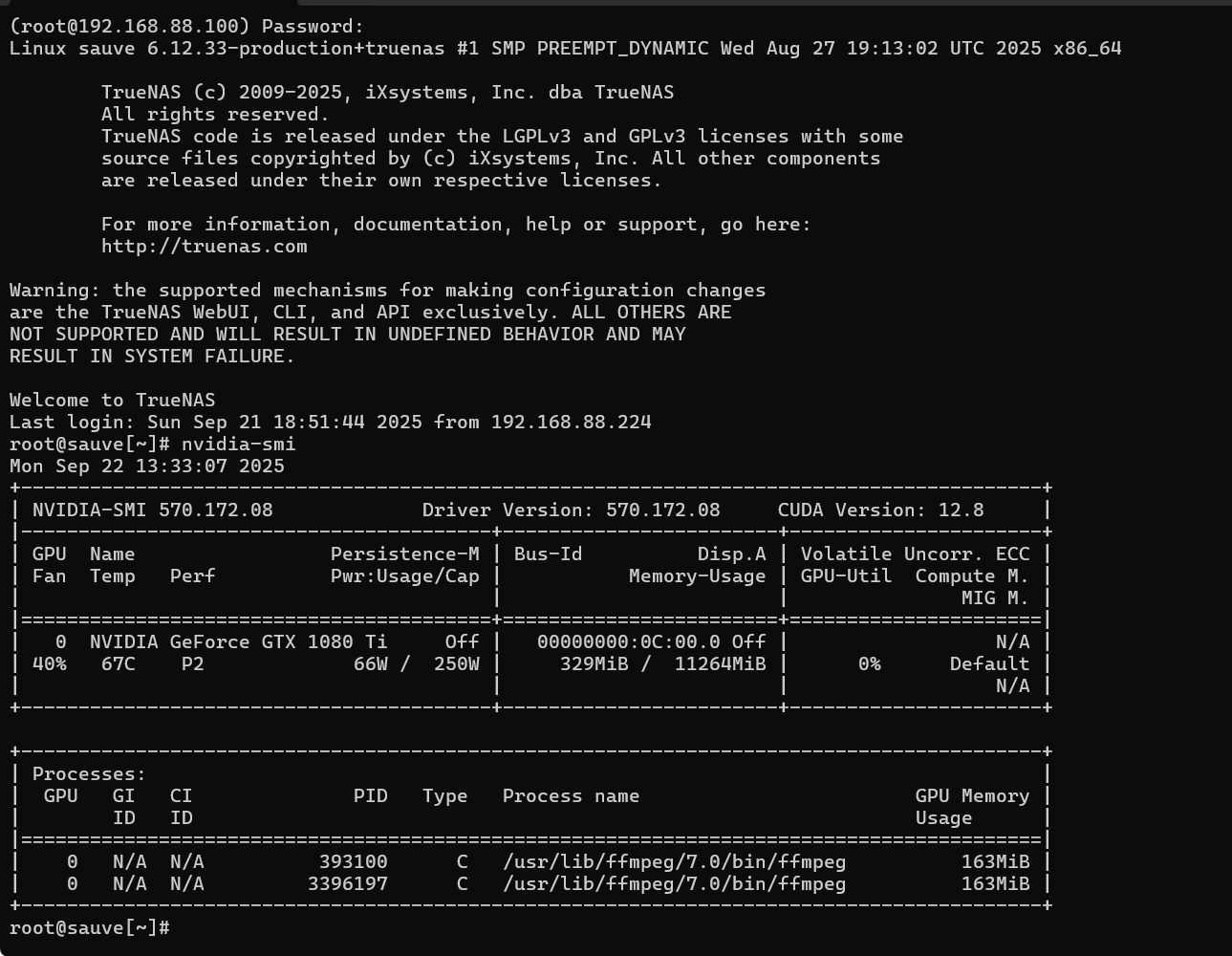

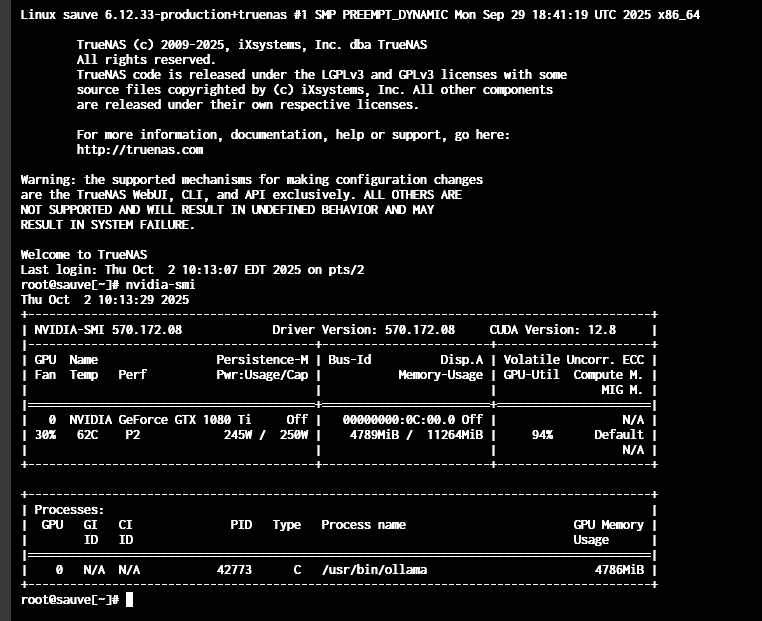

Anyone running the TrueNAS 25.10 beta with an NVIDIA GPU can test this driver and share feedback on how it performs.
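When reporting feedback, it helps to confirm which flavor of the kernel module is actually loaded. The Python sketch below reads `/proc/driver/nvidia/version` and assumes that the open build identifies itself with the phrase "Open Kernel Module" on the `NVRM version:` line while the proprietary build does not. That wording is an assumption worth checking against your own system, and the helper names are hypothetical.

```python
from pathlib import Path

# Version file exposed by the NVIDIA kernel module once it is loaded.
VERSION_FILE = Path("/proc/driver/nvidia/version")


def classify_nvrm_line(text: str) -> str:
    """Return 'open', 'proprietary', or 'unknown' for the version file contents."""
    for line in text.splitlines():
        if line.startswith("NVRM version:"):
            # Assumption: only the open build mentions "Open Kernel Module".
            return "open" if "Open Kernel Module" in line else "proprietary"
    return "unknown"


def loaded_driver_flavor(path: Path = VERSION_FILE) -> str:
    """Classify the loaded module, or 'unknown' if the file cannot be read."""
    try:
        return classify_nvrm_line(path.read_text())
    except OSError:
        return "unknown"
```

Running `print(loaded_driver_flavor())` in a TrueNAS shell would be expected to print `proprietary` with the rebuilt driver, and `unknown` if no NVIDIA module is loaded at all.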

My machine has a Tesla P4 installed. It handles real-time transcoding in Plex and Jellyfin, runs Immich’s machine-learning features (transcoding, smart search, face recognition), and supports Beszel’s monitoring (power draw, utilization, VRAM), all at once, and everything runs smoothly.
