Hello,

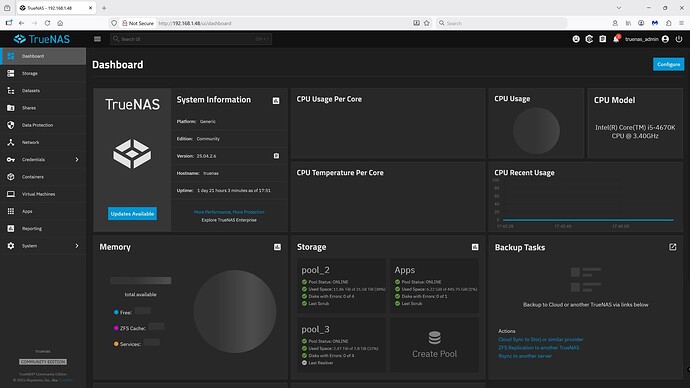

I started getting glitches when trying to stream movies from my pool_1, so I logged into my TrueNAS to see what's going on. pool_1 has disappeared from the Storage tab (pools 2 and 3 are still present). If I go to Storage > Disks, all my disks are listed, but those in pool_1 have "(exported)" next to them. If I click Import Pool, pool_1 is listed, but the import always fails.

In the shell, if I list the importable pools with `sudo zpool import`, it says pool_1 is ONLINE and all 4 disks are ONLINE, with no obvious indication of errors. But if I try `sudo zpool import pool_1 -f`, it says `cannot import 'pool_1': I/O error`.
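To check the "everything is ONLINE" reading without eyeballing it, here is a minimal Python sketch that scans the text printed by a bare `sudo zpool import` and lists any pool or device not reported as ONLINE. It assumes the usual layout of that listing (a `config:` line followed by indented rows whose first two columns are the name and the state); the function names `device_states` and `not_online` are my own and are not part of TrueNAS or OpenZFS.

```python
# Pool/device states as they appear in the STATE column of zpool output.
VDEV_STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}


def device_states(listing: str) -> list[tuple[str, str]]:
    """Return (name, state) pairs from the config: section(s) of `zpool import` output.

    Assumes the usual layout: a 'config:' line followed by indented rows whose
    first two whitespace-separated columns are NAME and STATE.
    """
    pairs: list[tuple[str, str]] = []
    in_config = False
    for line in listing.splitlines():
        stripped = line.strip()
        if stripped.startswith("pool:"):
            # A new pool block starts; ignore rows until its own config: line.
            in_config = False
        elif stripped.startswith("config:"):
            in_config = True
        elif in_config:
            parts = stripped.split()
            # Skip blank lines and anything whose second column is not a known state.
            if len(parts) >= 2 and parts[1] in VDEV_STATES:
                pairs.append((parts[0], parts[1]))
    return pairs


def not_online(listing: str) -> list[tuple[str, str]]:
    """Pools or devices that the listing reports in any state other than ONLINE."""
    return [(name, state) for name, state in device_states(listing) if state != "ONLINE"]
```

For example, after saving the listing with `sudo zpool import > import.txt`, calling `not_online(open("import.txt").read())` should return an empty list if everything really is ONLINE. As this post shows, though, an all-ONLINE listing does not by itself mean the import will succeed.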

I think this means my data is still safe and my exported disks are not faulty, but how can I get pool_1 back?

All 4 disks in pool_1 are attached directly to the motherboard via SATA cables, and there don't appear to be any obvious hardware faults.

When I attempt to import via the GUI, I get the following error:

```
concurrent.futures.process._RemoteTraceback:
"""
Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs/pool_actions.py", line 233, in import_pool
    zfs.import_pool(found, pool_name, properties, missing_log=missing_log, any_host=any_host)
  File "libzfs.pyx", line 1374, in libzfs.ZFS.import_pool
  File "libzfs.pyx", line 1402, in libzfs.ZFS.__import_pool
libzfs.ZFSException: cannot import 'pool_1' as 'pool_1': I/O error

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3.11/concurrent/futures/process.py", line 261, in _process_worker
    r = call_item.fn(*call_item.args, **call_item.kwargs)
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 116, in main_worker
    res = MIDDLEWARE._run(*call_args)
          ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 47, in _run
    return self.call(name, serviceobj, methodobj, args, job=job)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 41, in call
    return methodobj(*params)
           ^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 178, in nf
    return func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs/pool_actions.py", line 213, in import_pool
    with libzfs.ZFS() as zfs:
  File "libzfs.pyx", line 534, in libzfs.ZFS.__exit__
  File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs/pool_actions.py", line 237, in import_pool
    raise CallError(f'Failed to import {pool_name!r} pool: {e}', e.code)
middlewared.service_exception.CallError: [EZFS_IO] Failed to import 'pool_1' pool: cannot import 'pool_1' as 'pool_1': I/O error
"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/job.py", line 515, in run
    await self.future
  File "/usr/lib/python3/dist-packages/middlewared/job.py", line 560, in _run_body
    rv = await self.method(*args)
         ^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 174, in nf
    return await func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 48, in nf
    res = await f(*args, **kwargs)
          ^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/plugins/pool/import_pool.py", line 118, in import_pool
    await self.middleware.call('zfs.pool.import_pool', guid, opts, any_host, use_cachefile, new_name)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1005, in call
    return await self._call(
           ^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 728, in _call
    return await self._call_worker(name, *prepared_call.args)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 734, in _call_worker
    return await self.run_in_proc(main_worker, name, args, job)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 640, in run_in_proc
    return await self.run_in_executor(self.__procpool, method, *args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 624, in run_in_executor
    return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
middlewared.service_exception.CallError: [EZFS_IO] Failed to import 'pool_1' pool: cannot import 'pool_1' as 'pool_1': I/O error
```
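Reading the traceback, the only underlying error seems to be the first one: libzfs reporting `cannot import 'pool_1' as 'pool_1': I/O error`. Everything after it looks like ordinary Python exception chaining. The middleware raises a `CallError` while handling the libzfs exception (hence "During handling of the above exception..."), and `concurrent.futures` re-raises that error in the parent process with the worker's traceback attached as its cause (hence "The above exception was the direct cause..."). The minimal sketch below reproduces the first link of that chain in a single process; `ZFSException` and `CallError` here are hypothetical stand-ins, not the real libzfs or middlewared classes.

```python
class ZFSException(Exception):
    """Hypothetical stand-in for libzfs.ZFSException (not the real class)."""

    def __init__(self, code: str, msg: str) -> None:
        super().__init__(msg)
        self.code = code


class CallError(Exception):
    """Hypothetical stand-in for middlewared's CallError (not the real class)."""

    def __init__(self, errmsg: str, errno: str) -> None:
        super().__init__(f"[{errno}] {errmsg}")
        self.errno = errno


def import_pool(pool_name: str) -> None:
    try:
        # Simulate the low-level libzfs import failing as in the post.
        raise ZFSException("EZFS_IO", f"cannot import {pool_name!r} as {pool_name!r}: I/O error")
    except ZFSException as e:
        # Raising inside 'except' without 'from' chains implicitly (via __context__),
        # which prints as "During handling of the above exception, ...".
        raise CallError(f"Failed to import {pool_name!r} pool: {e}", e.code)


def root_cause(exc: BaseException) -> BaseException:
    """Follow __cause__ / __context__ links back to the earliest exception."""
    while True:
        nxt = exc.__cause__ or exc.__context__
        if nxt is None:
            return exc
        exc = nxt


if __name__ == "__main__":
    try:
        import_pool("pool_1")
    except CallError as err:
        print(err)              # the wrapped [EZFS_IO] message the GUI shows
        print(root_cause(err))  # the original libzfs-level I/O error
```

Walking back to the first exception like this gives the libzfs I/O error, which is the part of the message worth searching on; the `[EZFS_IO]` code is simply carried over from it.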

Help!!!