Hi there,

I feel like this is going to be like finding a needle in a haystack, but I’m going to give it a go anyways.

I’ve spent weeks troubleshooting this, from capturing PCAPs to trying to get smbd to give me some actually useful logging.

So we’re running TrueNAS SCALE (latest version) on a purpose-built system for a small company. It’s not an IX system, since at the time the company was very much a startup and wanted to save money by going custom.

This system has worked fine for the past 1.5 years. It’s a small system with 3x4TB NVMe drives totalling around 5TB of usable storage.

We’re using SMB with Azure AD DS as the authentication mechanism, and we connect to AD DS through a VPN. For a while I suspected this VPN and even replaced it with something I deemed more reliable than what we had, but that didn’t make any difference.

Clients authenticate to all shares with their AD credentials.

All shares have the same configuration, aside from some shares being only made accessible to certain security groups.

We have one share that’s accessible to all authenticated users. And somehow, ever since the latest SCALE update it magically ‘dies’ once a day. People just stop being able to connect to it and get timeouts, while the other shares continue to work fine. The problem can be resolved by restarting the SMB service or TrueNAS itself, after which it functions for 1 or 2 days until it starts misbehaving again. This doesn’t happen at a set time; it’s a random time during the day.

When it ‘errors’ there is no error message coming from TrueNAS itself, no unhealthy AD connection, nothing.
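Since nothing on the TrueNAS side reports an error, one way to pin down exactly when the share stops responding is to probe every share from a client on a schedule and log the result, so the first failure can be matched against the PCAPs and smbd logs. The following is only a rough sketch: it assumes `smbclient` is installed on the probing machine, and the host name and credentials-file path in the usage note are hypothetical placeholders.

```python
import subprocess
from datetime import datetime, timezone


def probe_share(host, share, authfile, run=subprocess.run, timeout=15):
    """Return (ok, detail) for one attempt to list the root of //host/share."""
    # -A reads username/password/domain from a credentials file,
    # -c runs a single command and exits.
    cmd = ["smbclient", f"//{host}/{share}", "-A", authfile, "-c", "ls"]
    try:
        result = run(cmd, capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout}s"
    except FileNotFoundError:
        return False, "smbclient not found"
    if result.returncode != 0:
        # Keep only the last line of output, which usually carries the NT_STATUS code.
        lines = (result.stderr or result.stdout or "").strip().splitlines()
        return False, (lines[-1] if lines else f"exit code {result.returncode}")
    return True, "ok"


def probe_all(host, shares, authfile, run=subprocess.run):
    """Probe every share once and return one timestamped log line per share."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    lines = []
    for share in shares:
        ok, detail = probe_share(host, share, authfile, run=run)
        lines.append(f"{stamp} {share} {'OK' if ok else 'FAIL'} {detail}")
    return lines
```

Run from cron every minute with something like `probe_all("nas.example.lan", ["GeneralShare", "Engineering"], "/root/smb-probe.auth")` and append the lines to a file. The first `FAIL` for one share while the others still report `OK` gives a timestamp to search for in the logs.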

I’ve been trying to set the SMB service logging to be more verbose, but the only thing that’s yielding me is audit logging and frankly I’m just trying to figure out what is causing this behavior. I’m hoping someone here might be able to give me some guidance in gaining insights into this.
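For what it’s worth, Samba also lets you raise the debug level of the running smbd processes with `smbcontrol smbd debug <level>`, without touching the configuration. Below is a minimal sketch of wrapping that call. It assumes a root shell on the NAS, it bypasses the TrueNAS UI, and the level is lost as soon as smbd restarts, so it is a temporary diagnostic aid rather than a supported setting. The helper names are made up for illustration.

```python
import subprocess


def smbd_debug_command(level):
    """Build the smbcontrol call that sets the runtime debug level of all smbd processes."""
    level = int(level)
    if not 0 <= level <= 10:
        raise ValueError("Samba debug levels run from 0 to 10")
    return ["smbcontrol", "smbd", "debug", str(level)]


def set_smbd_debug(level, run=subprocess.run):
    """Apply the level; raises CalledProcessError if smbcontrol reports a failure."""
    return run(smbd_debug_command(level), check=True)
```

Raising the level to around 3 to 5 shortly before the share usually fails, and lowering it again afterwards with `set_smbd_debug(1)`, keeps the log volume manageable.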

All help is appreciated!

Best regards,

JustSem

Hi and welcome to the forums.

Sorry to hear about your issue.

Could you post screenshots of your dataset ACL and also your share ACL?

So it’s just this one share, the one that provides access to domain users, that’s the issue. Is that correct?

PS: What version are you running?

Hey, thanks for your reply!

And I’m sorry for the confusion, so let me clarify a few things:

- I’m running the latest version (ElectricEel-24.10.2)

- We have 5 shares, all basic SMB shares which are tied to the Azure ADDS. There’s just one of these shares that is constantly being problematic.

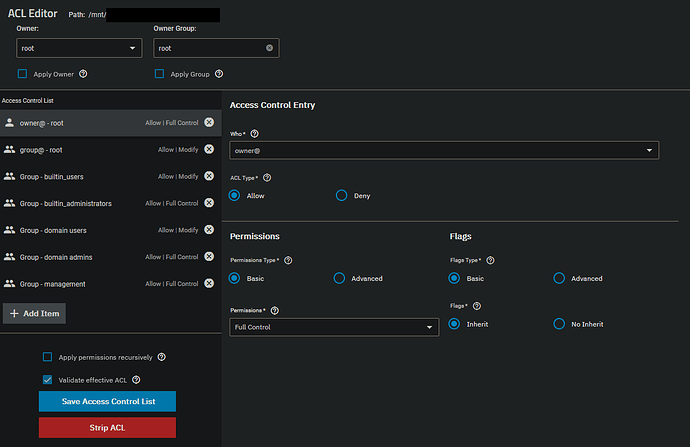

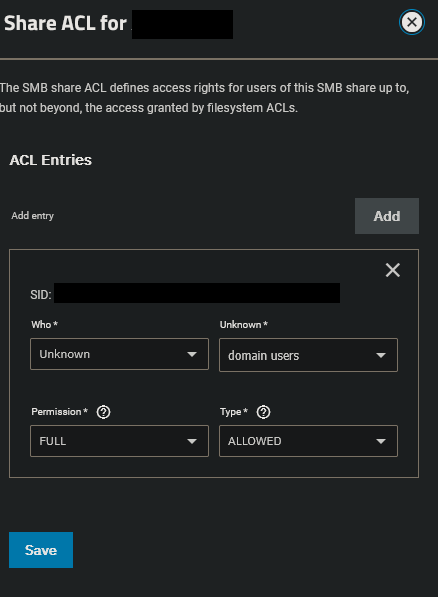

The two screenshots below are for the problematic share

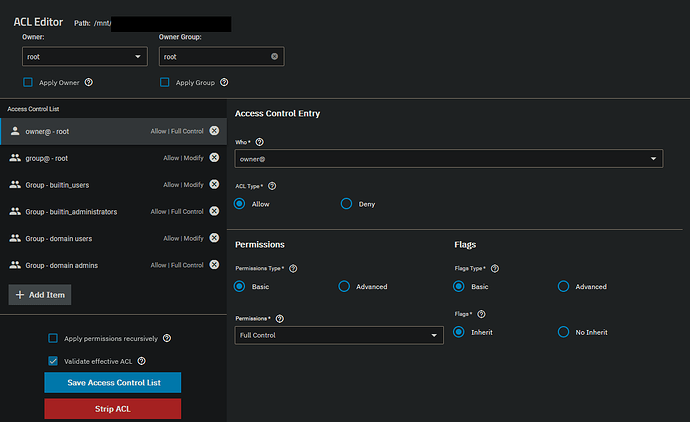

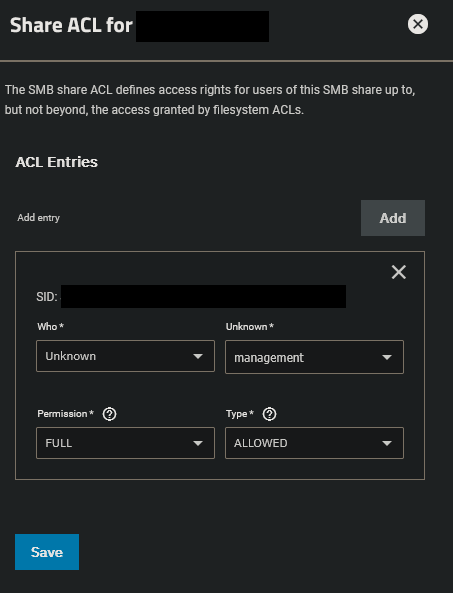

The two screenshots below are for a normal functioning share

Yeah nothing obvious there to me but it’s defo an odd one.

The only thing I can offer is to say I’ve never experienced this issue. However, I generally don’t mess around with share ACLs and just manage access via dataset permissions. Would you be willing to change the share ACL back to default to see if that improves things, in an attempt to narrow down the issue?

How much RAM does this server have?

This server is running on 64GB of DDR5, which should be plenty.

There is a VM running on it, but that has only got 8GB allocated to it.

I can change the ACLs back to default, I’ll give that a go. Though I’m not really sure that a change like that will fix the issue.

I presume CPU is equally capable and never runs that high?

Can you post the output of testparm -s?

Exactly, CPU load is never really high. Maybe briefly at peak moments, but those don’t seem to coincide with the times the share stops working.

For reference, GeneralShare is the malfunctioning one.

```
Load smb config files from /etc/smb4.conf
Unknown parameter encountered: "ads dns update"
Ignoring unknown parameter "ads dns update"
lpcfg_do_global_parameter: WARNING: The "lanman auth" option is deprecated
lpcfg_do_global_parameter: WARNING: The "client lanman auth" option is deprecated
Loaded services file OK.
Weak crypto is allowed by GnuTLS (e.g. NTLM as a compatibility fallback)

Server role: ROLE_DOMAIN_MEMBER

# Global parameters
[global]
    allow trusted domains = No
    bind interfaces only = Yes
    disable spoolss = Yes
    dns proxy = No
    domain master = No
    interfaces = 127.0.0.1 <redacted> <redacted-ipv6>
    kerberos method = secrets and keytab
    load printers = No
    local master = No
    logging = file
    map to guest = Bad User
    max log size = 5120
    ntlm auth = ntlmv1-permitted
    passdb backend = tdbsam:/var/run/samba-cache/private/passdb.tdb
    preferred master = No
    printcap name = /dev/null
    realm = <redacted>
    registry shares = Yes
    security = ADS
    server multi channel support = No
    server role = member server
    server string = TrueNAS Server
    template homedir = /var/empty
    template shell = /bin/sh
    winbind cache time = 7200
    winbind enum groups = Yes
    winbind enum users = Yes
    winbind max domain connections = 10
    winbind use default domain = Yes
    workgroup = AAD
    idmap config * : range = 90000001 - 100000000
    idmap config aad : range = 100000001 - 200000000
    idmap config aad : backend = rid
    rpc_server:mdssvc = disabled
    rpc_daemon:mdssd = disabled
    fruit:zero_file_id = False
    fruit:nfs_aces = False
    idmap config * : backend = tdb
    create mask = 0664
    directory mask = 0775

[GeneralShare]
    ea support = No
    path = /mnt/<redacted>/general
    posix locking = No
    read only = No
    smbd max xattr size = 2097152
    vfs objects = fruit streams_xattr shadow_copy_zfs ixnas zfs_core io_uring
    tn:vuid =
    fruit:time machine max size = 0
    fruit:time machine = False
    fruit:resource = stream
    fruit:metadata = stream
    nfs4:chown = True
    tn:home = False
    tn:path_suffix =
    tn:purpose = DEFAULT_SHARE

[Engineering]
    ea support = No
    path = /mnt/<redacted>/engineering
    posix locking = No
    read only = No
    smbd max xattr size = 2097152
    vfs objects = fruit streams_xattr shadow_copy_zfs ixnas zfs_core io_uring
    tn:vuid =
    fruit:time machine max size = 0
    fruit:time machine = False
    fruit:resource = stream
    fruit:metadata = stream
    nfs4:chown = True
    tn:home = False
    tn:path_suffix =
    tn:purpose = DEFAULT_SHARE

[Management]
    ea support = No
    path = /mnt/<redacted>/management
    posix locking = No
    read only = No
    smbd max xattr size = 2097152
    vfs objects = fruit streams_xattr shadow_copy_zfs ixnas zfs_core io_uring
    tn:vuid =
    fruit:time machine max size = 0
    fruit:time machine = False
    fruit:resource = stream
    fruit:metadata = stream
    nfs4:chown = True
    tn:home = False
    tn:path_suffix =
    tn:purpose = DEFAULT_SHARE
```

Was this server updated from previous versions of TrueNAS SCALE and even perhaps CORE?

Did you ever use specific auxiliary parameters?

Yeah, it’s been running SCALE since sometime in 2022, I believe.

It has never run CORE. I just regularly update the server when a new version of SCALE comes out.

As for the auxiliary parameters: in the past I had an issue with a share where users connecting from remote sites hit a specific connectivity problem. In the end this seemed to be more of a Windows-specific issue when going through IPsec, after which I removed the aux parameters again.

OK, I just compared your output with mine and they are somewhat different. It might be worth recreating the share and sticking with the defaults to remove any potential variables.
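Comparing `testparm -s` dumps by eye is easy to get wrong, so here is a small sketch that parses a dump into sections and lists only the parameters that differ between two of them. It works for two shares in one dump or for the same section in two dumps. The function names and the `tank` pool name in the sample are made up for illustration. For the three share sections in the output posted earlier in the thread, the only differing parameter is `path`.

```python
def parse_testparm(text):
    """Parse `testparm -s` style text into {section: {parameter: value}}."""
    sections = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("[") and line.endswith("]"):
            current = sections.setdefault(line[1:-1], {})
        elif current is not None and "=" in line:
            # Lines before the first [section] (warnings, server role) are skipped.
            key, _, value = line.partition("=")
            current[key.strip()] = value.strip()
    return sections


def diff_sections(a, b):
    """Return {parameter: (value_in_a, value_in_b)} for parameters that differ."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}


sample = """
[GeneralShare]
    path = /mnt/tank/general
    read only = No
[Engineering]
    path = /mnt/tank/engineering
    read only = No
"""
shares = parse_testparm(sample)
print(diff_sections(shares["GeneralShare"], shares["Engineering"]))
# → {'path': ('/mnt/tank/general', '/mnt/tank/engineering')}
```

A parameter that exists on only one side shows up with `None` for the other, which makes leftover auxiliary parameters easy to spot.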