Correct.

Scale is not virtualized. The main network adapter is bridged.

netstat -ar on Core:

Internet:

Destination Gateway Flags Netif Expire

default minrouter.home UGS em0

10.8.0.1 link#4 UH tun0

10.8.0.20 link#4 UHS lo0

localhost lo0 UHS lo0

192.168.0.0/24 link#1 U em0

192.168.0.20 link#1 UHS lo0

and ifconfig on Core:

em0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500

options=81249b<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,VLAN_HWCSUM,LRO,WOL_MAGIC,VLAN_HWFILTER>

ether dc:4a:3e:73:bd:d6

inet 192.168.0.20 netmask 0xffffff00 broadcast 192.168.0.255

media: Ethernet autoselect (1000baseT <full-duplex>)

status: active

nd6 options=1<PERFORMNUD>

lo0: flags=8049<UP,LOOPBACK,RUNNING,MULTICAST> metric 0 mtu 16384

options=680003<RXCSUM,TXCSUM,LINKSTATE,RXCSUM_IPV6,TXCSUM_IPV6>

inet6 ::1 prefixlen 128

inet6 fe80::1%lo0 prefixlen 64 scopeid 0x2

inet 127.0.0.1 netmask 0xff000000

groups: lo

nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

pflog0: flags=0<> metric 0 mtu 33160

groups: pflog

tun0: flags=8051<UP,POINTOPOINT,RUNNING,MULTICAST> metric 0 mtu 1500

options=80000<LINKSTATE>

inet 10.8.0.20 --> 10.8.0.1 netmask 0xffffff00

groups: tun

nd6 options=1<PERFORMNUD>

Opened by PID 1518

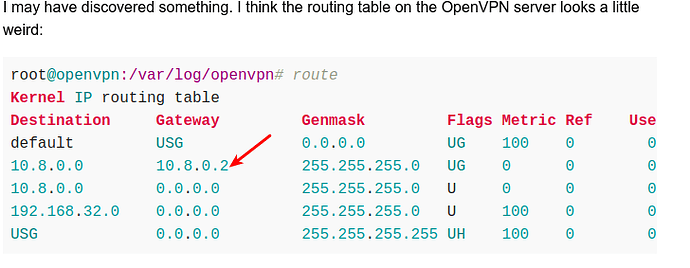

Routing table from the OpenVPN server:

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default USG 0.0.0.0 UG 100 0 0 ens3

10.8.0.0 10.8.0.2 255.255.255.0 UG 0 0 0 tun0

10.8.0.0 0.0.0.0 255.255.255.0 U 0 0 0 tun0

192.168.32.0 0.0.0.0 255.255.255.0 U 100 0 0 ens3

USG 0.0.0.0 255.255.255.255 UH 100 0 0 ens3

ip a on ovpn server:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:a0:98:0b:79:0a brd ff:ff:ff:ff:ff:ff

altname enp0s3

inet 192.168.32.42/24 metric 100 brd 192.168.32.255 scope global dynamic ens3

valid_lft 53767sec preferred_lft 53767sec

inet6 fe80::2a0:98ff:fe0b:790a/64 scope link

valid_lft forever preferred_lft forever

3: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 500

link/none

inet 10.8.0.1/24 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::ef7d:6372:39b3:3945/64 scope link stable-privacy

valid_lft forever preferred_lft forever

If 10.8.0.2 is used, it must be something that OpenVPN does and/or needs on the server. There is no client with that IP. Is the route incorrect, and should it be removed? That was my feeling.
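To make the question concrete, here is a small Python sketch (only an illustration, not anything OpenVPN itself runs) that checks which of the server's routes cover the Core's tunnel address 10.8.0.20. The rows are copied from the routing table above. The `ROUTES` list and the `matching_routes` helper are hypothetical names made up for this example, and the two entries that point at the hostname "USG" are left out because they are not numeric.

```python
import ipaddress

# Rows copied from the "Kernel IP routing table" output on the OpenVPN server:
# (destination, gateway, genmask, iface).
# The default route and the host route to "USG" are omitted on purpose.
ROUTES = [
    ("10.8.0.0", "10.8.0.2", "255.255.255.0", "tun0"),
    ("10.8.0.0", "0.0.0.0", "255.255.255.0", "tun0"),
    ("192.168.32.0", "0.0.0.0", "255.255.255.0", "ens3"),
]


def matching_routes(dest, routes=ROUTES):
    """Return (network, gateway, iface) for every route whose network contains dest."""
    addr = ipaddress.ip_address(dest)
    hits = []
    for destination, gateway, genmask, iface in routes:
        # ip_network accepts the "address/netmask" form and normalises it to a prefix.
        net = ipaddress.ip_network(f"{destination}/{genmask}")
        if addr in net:
            hits.append((str(net), gateway, iface))
    return hits


if __name__ == "__main__":
    for network, gateway, iface in matching_routes("10.8.0.20"):
        print(network, "via", gateway, "dev", iface)
```

Both tun0 entries match 10.8.0.20: the directly connected one (gateway 0.0.0.0) and the one via 10.8.0.2. So the second route at least overlaps with the first, which is part of why I suspect it may not be needed.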

I don't think I should have to change my OpenVPN server config, as it worked until I did the pool upgrade on the Scale. The Core has a certificate installed, so there are no “additional machines”. Am I understanding this correctly?