TrueNAS Scale has recently started losing access, at random intervals, to the mirrored SSDs that host the OS.

I have swapped SATA cables and SATA controllers (onboard and an LSI 9305-16i), made sure the two drives run on separate SATA power cables, and checked SMART data on the boot pool drives (all good, as are the drives in the other pools). At this point I can only assume it has to do with the Dragonfish update, as Cobia and the earlier versions (all the way back to Angelfish) ran without issues for a very long time.

The entire system fails, and the only way to get it back up and running is a full power off and power on; not even a reboot will work.

AFAICT, the logs seem to indicate a failing drive, but I have doubts as to how accurate the logs are.

Mainly, the drive reported in the logs and the one shown on the console (the PiKVM still held the last displayed frame, which is how I checked) are two different drives: the one in the logs is part of the data pool, while the one on the console belongs to the boot pool, and only one of the two boot drives shows up there.

Additionally, all of the disks in the system have recently run and passed an extended offline test.

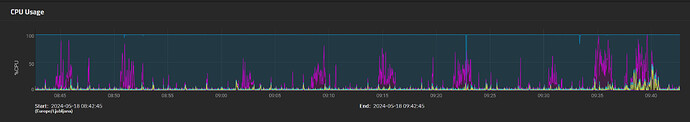

Interestingly, after correlating the crashes with the reporting data, I noticed that they also seem to coincide with a sudden spike in IOwait in the CPU reporting graph.

As seen in the image, the first crash happened while the drives were connected to the onboard SATA controller, the second while they were on the LSI HBA card, and the third back on the onboard controller.

My (admittedly amateur) guess is that the IOwait spike doesn’t show up for the second crash because the HBA, rather than the CPU, is handling the reads and writes. This is just guesswork, though, pieced together from what I recall of reading this link from the archived forums regarding why I needed an HBA.
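For anyone who wants to cross-check the IOwait figure independently of the reporting graph, here is a minimal sketch that samples the aggregate `cpu` line of `/proc/stat` and prints the share of CPU time spent in iowait over each interval. The function names (`read_cpu_line`, `iowait_percent`, `watch`) and the 5 second interval are my own assumptions, not anything TrueNAS ships, and I am assuming the standard Linux field order (user, nice, system, idle, iowait, irq, softirq, steal, ...).

```python
import time


def read_cpu_line(path="/proc/stat"):
    """Return the aggregate 'cpu' line from /proc/stat (Linux only)."""
    with open(path) as f:
        for line in f:
            if line.startswith("cpu "):
                return line
    raise RuntimeError("no aggregate cpu line found in " + path)


def parse_cpu_line(line):
    """Turn the aggregate cpu line into a list of integer tick counters."""
    return [int(field) for field in line.split()[1:]]


def iowait_percent(before, after):
    """Percentage of CPU time spent in iowait between two samples."""
    a, b = parse_cpu_line(before), parse_cpu_line(after)
    deltas = [y - x for x, y in zip(a, b)]
    # Only the first 8 counters are summed; guest and guest_nice are
    # already accounted for in user and nice.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    # Index 4 is iowait (assumed standard /proc/stat field order).
    return 100.0 * deltas[4] / total


def watch(interval=5.0, count=None):
    """Print one timestamped iowait reading per interval (hypothetical helper)."""
    prev = read_cpu_line()
    n = 0
    while count is None or n < count:
        time.sleep(interval)
        cur = read_cpu_line()
        stamp = time.strftime("%Y-%m-%d %H:%M:%S")
        print(f"{stamp} iowait {iowait_percent(prev, cur):.1f}%", flush=True)
        prev = cur
        n += 1
```

Calling `watch()` from an SSH session on another machine, with the output captured on that machine, should leave a record that survives the boot pool dropping out. I have not verified that this catches the spike before the system locks up.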

So, I return to the only logical answer I can come up with: with everything else in the system config having run unchanged for as long as it has (2 years or so), the only new variable is the OS update, which is probably the cause of the issue.