I’ve got two 118 GB Intel Optane P1600X drives and two pools that need a SLOG device (VM hosting + DB traffic). I couldn’t bear to dedicate a whole drive to each SLOG when I could use what I have more efficiently and get redundancy as well.

I also couldn’t find a good guide on how to do this in SCALE, so I pieced together a procedure that seems to work fine from the many guides on partitioning the boot-pool in SCALE, adapting the scripts from my earlier CORE setup.

Given that the pools sit behind a pair of 10 Gb/s NICs, I judged 16 GB per SLOG to be more than enough (roughly one 5 s transaction group at 1.2 GB/s: 5 s × 1.2 GB/s = 6 GB), and I thought I’d use the extra space to prepare for a future jump to 40 Gb/s (it is highly unlikely that synchronous writes will hit my storage at line speed for that long).
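The sizing rule above is just link rate multiplied by the window the SLOG has to absorb. A minimal sketch of that arithmetic, assuming the raw line rate (Gb/s ÷ 8, so 1.25 GB/s rather than the rounded 1.2 GB/s above) and the same 5 s window; `slog_gb` is a hypothetical name used only for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: SLOG size in GB = (link rate in Gb/s / 8) * seconds.
# Assumes the raw line rate with no protocol overhead subtracted.
slog_gb() {
  awk -v rate="$1" -v secs="$2" 'BEGIN { printf "%.2f\n", rate / 8 * secs }'
}

# Compare the current 10 Gb/s setup with possible upgrades, 5 s window each
for rate in 10 20 40; do
  echo "$rate Gb/s: $(slog_gb "$rate" 5) GB"
done
# 10 Gb/s: 6.25 GB
# 20 Gb/s: 12.50 GB
# 40 Gb/s: 25.00 GB
```

At 40 Gb/s the same window comes to 25 GB, more than a 16 GB partition, which is why the headroom argument rests on sustained synchronous writes at line rate being unlikely.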

The two P1600Xs are the nvme0n1 and nvme1n1 devices respectively. I want to carve out partitions for two SLOG mirrors, one per pool. Here is the procedure I used against a couple of test pools:

```shell
# Delete all previous partitions and initialise both disks with a fresh GPT
sudo sgdisk -og /dev/nvme0n1
sudo sgdisk -og /dev/nvme1n1

# Create a 16G ZFS partition (type BF01) on each disk for the first SLOG mirror
sudo sgdisk -n 0:0:+16G -t 0:BF01 /dev/nvme0n1
sudo sgdisk -n 0:0:+16G -t 0:BF01 /dev/nvme1n1

# Make the kernel aware of the new partitions
sudo partprobe

# Look up the PARTUUID of each new partition
sudo blkid /dev/nvme0n1p1
sudo blkid /dev/nvme1n1p1

# Plug the two PARTUUIDs printed above into the command that adds the new SLOG mirror, e.g.:
sudo zpool add vm-nand log mirror /dev/disk/by-partuuid/ce3e51ea-cf36-4f3e-9ae0-f3d7a19a48b1 /dev/disk/by-partuuid/d36a5367-91e4-40f1-b1d5-dfe2539c9ce5
```
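Copying PARTUUIDs by hand is error-prone. A hedged sketch that looks them up with `blkid -s PARTUUID -o value` (which prints only the UUID) and prints the resulting `zpool add` command for review; `log_mirror_cmd` is a hypothetical name, and it should be run as root (e.g. from `sudo -i`) so blkid can probe the new partitions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the `zpool add ... log mirror` command
# for the given partitions, resolving each one to its by-partuuid path.
log_mirror_cmd() {
  local pool=$1; shift
  local cmd="zpool add $pool log mirror" part uuid
  for part in "$@"; do
    # -s PARTUUID -o value prints just the UUID, without the NAME="..." wrapping
    uuid=$(blkid -s PARTUUID -o value "$part")
    if [ -z "$uuid" ]; then
      echo "no PARTUUID found for $part" >&2
      return 1
    fi
    cmd+=" /dev/disk/by-partuuid/$uuid"
  done
  printf '%s\n' "$cmd"
}
```

For example, `log_mirror_cmd vm-nand /dev/nvme0n1p1 /dev/nvme1n1p1` should print a `zpool add` line of the same shape as the one above; check it before running it with sudo.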

Now repeat for the second SLOG mirror:

```shell
sudo sgdisk -n 0:0:+16G -t 0:BF01 /dev/nvme0n1
sudo sgdisk -n 0:0:+16G -t 0:BF01 /dev/nvme1n1

sudo partprobe

sudo blkid /dev/nvme0n1p2
sudo blkid /dev/nvme1n1p2

sudo zpool add vm-optane log mirror /dev/disk/by-partuuid/5e201121-3b9d-483a-a49d-61920d153bc2 /dev/disk/by-partuuid/ec2dcb95-6a75-4f83-99be-0d45eb99d949
```
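The two rounds differ only in the target pool and the resulting partition number. A dry-run sketch that pins the numbers explicitly (`1:` for the first pool, `2:` for the second) instead of relying on `0` (next free partition) might look like this; the function name and the hard-coded drive list are assumptions, and it only prints the sgdisk commands:

```shell
#!/usr/bin/env bash
# Hypothetical dry-run planner: prints (never executes) one sgdisk command per
# pool per drive, giving the Nth pool partition number N on every drive.
plan_slog_partitions() {
  local size=$1; shift                     # e.g. 16G, as in the procedure above
  local disks=(/dev/nvme0n1 /dev/nvme1n1)  # assumption: the same two P1600X drives
  local n=1 pool disk
  for pool in "$@"; do
    for disk in "${disks[@]}"; do
      printf 'sgdisk -n %d:0:+%s -t %d:BF01 %s  # %s\n' "$n" "$size" "$n" "$disk" "$pool"
    done
    n=$((n + 1))
  done
}

plan_slog_partitions 16G vm-nand vm-optane
```

This assumes the drives were already wiped with `sgdisk -og` as in the first step. Partition 1 then backs `vm-nand` and partition 2 backs `vm-optane` on both drives, matching the `p1`/`p2` devices passed to `blkid`.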

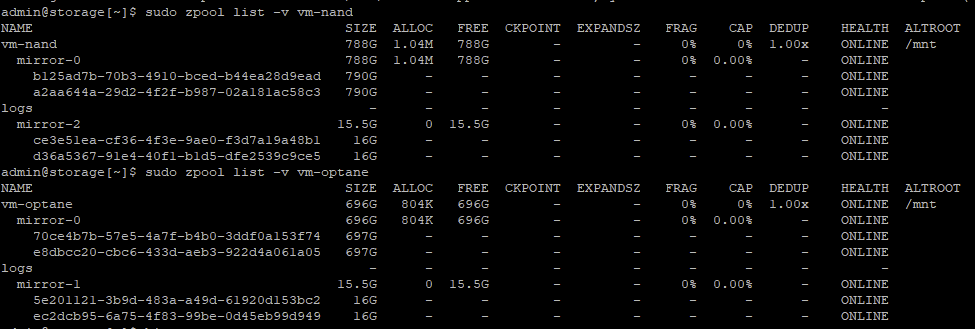

The end result should be something like this:

Aside from the usual caution of doing this against an appliance OS (you’re basically on your own in case anything goes awry), the end result looks promising.

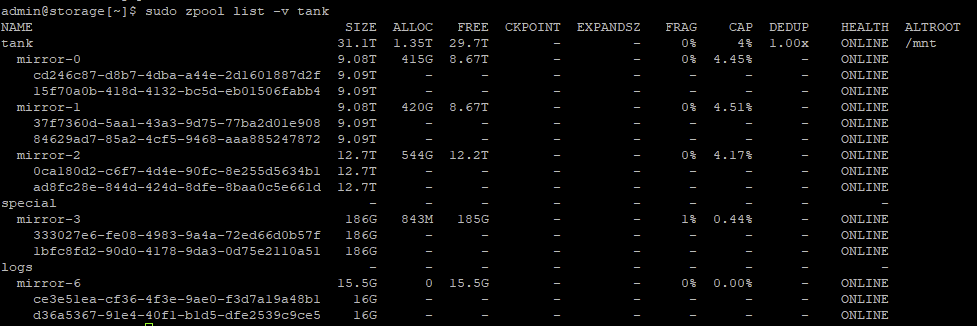

The UI, however, is rightfully confused:

Any suggestions on how to improve this procedure or how to fix the UI?

EDIT: Should you need to remove the log mirror vdev from a pool, it’s best to do this through the CLI:

```shell
sudo zpool remove vm-nand mirror-2
sudo zpool remove vm-optane mirror-1
```

Removing the log vdev through the UI throws an error dialog, but the vdev does ultimately seem to get removed.
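The `mirror-2` and `mirror-1` names depend on each pool’s existing layout, so it is worth confirming them with `zpool status` first. A small sketch that pulls the log mirror names out of `zpool status` output; `log_vdevs` is a hypothetical name, and it only recognises mirrored log vdevs, not single-device ones:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `zpool status` output on stdin and print the names
# of mirror vdevs listed under the "logs" section (e.g. mirror-2).
log_vdevs() {
  awk '
    $1 == "logs"                                  { in_logs = 1; next }  # log section starts
    $1 ~ /^(cache|spares|special|dedup|errors:)$/ { in_logs = 0 }        # next section ends it
    in_logs && $1 ~ /^mirror-[0-9]+$/             { print $1 }
  '
}

# Usage:
#   sudo zpool status vm-nand | log_vdevs
```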