Hi everyone, as the title says, I am trying to pass the iGPU of an Intel i5-13400 through to a VM, but when I try to isolate it in the advanced settings I can’t find it. I can only see my NVIDIA GPU, which is used by Docker containers.

Any idea?

Thanks

Mind providing the following output?

lspci | grep -i vga

If you don’t see your iGPU there, you might have to go into your motherboard’s BIOS settings & enable the iGPU.

Usually when you connect a dedicated graphics card the motherboard disables the iGPU & somewhere there’ll be something like ‘igpu’ or ‘multi monitor’ or whatever naming convention your flavour of mobo vendor decided to obfuscate the setting under.

*Edit: corrected a typo in the command, it is ‘-i’ not ‘-t’.
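A slightly wider version of that check, as a rough sketch: an iGPU that is not the primary display adapter may be listed by `lspci` as a "Display controller" rather than a "VGA compatible controller", so grepping for `vga` alone could miss it. The function name `filter_gpus` is just a placeholder for illustration, not something TrueNAS provides.

```shell
# Keep only graphics-class lines from lspci-style output.
# Reads from stdin, so it also works on saved or pasted output.
# "filter_gpus" is a hypothetical helper name used for this example.
filter_gpus() {
    grep -Ei 'vga compatible controller|display controller|3d controller'
}

# Usage on a live system (needs pciutils installed):
#   lspci -nn | filter_gpus
```

If the iGPU is missing from this wider list too, that points back at the BIOS setting rather than at the VM configuration.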

…huh - any chance your igpu successfully passes through to your vm without isolation? Because I’ve tried nothing & I’m all out of ideas. It doesn’t always actually need to be isolated to work.

edit, maybe dummy HDMI dongle?

1 last idea - I remember on my Proxmox system when I was slicing up my iGPU for VMs I would HAVE to have at least a dummy HDMI dongle connected on boot, else things would break. Sounds stupid, but are you willing to see if there are any changes after connecting it to a monitor? (You may have to do so before boot & leave it connected if you don’t have a dummy plug.)

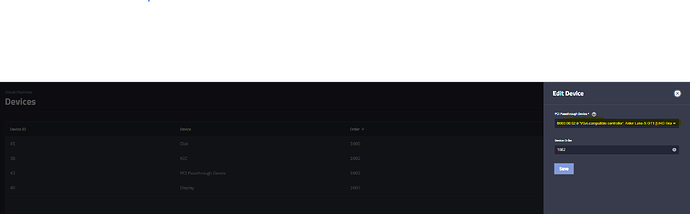

The problem is that I don’t see it in the devices to pass through.

100% try plugging in a monitor or dummy dongle & see if it shows. (May have to boot with it plugged in & leave it that way).

You’re right! maybe I can try with a dummy plug

@Fleshmauler I’ve tried plugging a dummy HDMI dongle into the HDMI port that is connected to the iGPU.

I can now see the iGPU if I try to add a PCIe device under Devices in the VM settings.

Though if I try to select it as a GPU under the settings of the VM, I can’t find any iGPU:

So I added the GPU as a PCIe passthrough device instead, but when I start the VM the screen is black/blank.

Any idea?

Any chance installing the drivers & then passing it through makes a difference? I remember struggling with driver error 34 or some such nonsense when I was passing through to a Windows VM, but I can’t remember what I did to make it just work.

Also, any chance the igpu now shows up for gpu isolation?

I mean, we got progress now, at least it is showing up at all.

@amigo3271 What you’ll need/want to do here is see if you can specify the GPU initialization order in your BIOS/UEFI. You want the TrueNAS console to show up and claim the NVIDIA card as the primary GPU - it can share between Docker containers and the host OS without any conflicts. This would hopefully leave the iGPU available for isolation and passthrough to your VM.
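One way to see which side currently holds each GPU is to look at the kernel driver bound to it. The sketch below parses `lspci -nnk` style output and prints the PCI address and bound driver for each graphics device. The name `gpu_drivers` is made up for this example, and the expectation that an isolated device ends up on `vfio-pci` comes from typical Linux VFIO setups; that TrueNAS does exactly this is an assumption here.

```shell
# Print "<pci-address> <driver>" for every graphics-class device found
# in lspci -nnk style output read from stdin.
# "gpu_drivers" is a hypothetical helper name used for this example.
gpu_drivers() {
    awk '
        # Device header lines start in column 0; detail lines are indented.
        /^[0-9a-f]/ {
            gpu = ($0 ~ /VGA compatible controller|Display controller|3D controller/)
            addr = $1
        }
        # The bound driver name is the last field of this detail line.
        gpu && /Kernel driver in use:/ { print addr, $NF }
    '
}

# Usage on a live system (needs pciutils installed):
#   lspci -nnk | gpu_drivers
```

A GPU with no driver bound prints nothing. If the iGPU shows `i915`, the host has probably claimed it for its own console, which would fit it not being offered for isolation.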

Do you mean installing the drivers inside the VM?

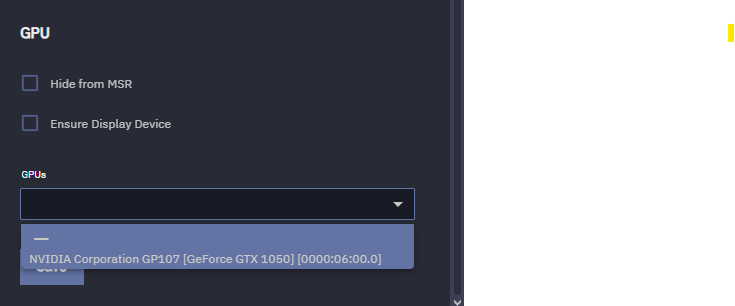

Nope, in the GPU isolation section I can only find the NVIDIA GPU.

Yes, I think I can somehow specify that in the BIOS… I will check and let you know, thanks.

thanks

Yes - though I remember it being a nightmare of chicken vs egg where drivers wouldn’t install until gpu was detected, and gpu wouldn’t be detected until drivers installed. I just can’t remember what change I made to make it all click together.