Yes, starting with the B550, and then B650 and X670 (= 2* B650).

There are x8x4x4 adapters which hold two M.2 and a half-height x8 card in a x16 slot. Perfect here.

Thanks for your input, it is much appreciated. You are absolutely not wrong, but the issue with the LSI cards is often that they consume a considerable amount of energy. Also, I’ve had quirks (on desktop platforms) where I had to tape off the SMBus pins (5 and 6) on the PCIe connector of the LSI card to make the system boot. I’d also have to mount the riser and LSI card somewhere in the case, plus I’m a bit afraid of introducing signal integrity issues due to cheap risers, tbh. That’s why I’m not a big fan of this solution, although I have to admit that I haven’t thought about that path for this particular system yet.

solnet-array-test-v3.sh gives me solid results for reads and I can clearly see from the results which drives are connected to the onboard controller (sda, sdb, sdc, sde) and which to the M.2 cards (sdd, sdf, sdg, sdh, sdi, sdj, sdk, sdl):

| Disk | Disk Size | Serial MB/sec | Parallel MB/sec | % of Serial | |
|------|-----------|---------------|-----------------|-------------|---|
| sda | 480103MB | 496 | 471 | 95 | |
| sdb | 480103MB | 500 | 544 | 109 | ++FAST++ |
| sdc | 480103MB | 507 | 534 | 105 | |
| sdd | 480103MB | 493 | 414 | 84 | --SLOW-- |
| sde | 480103MB | 516 | 543 | 105 | |
| sdf | 480103MB | 502 | 411 | 82 | --SLOW-- |
| sdg | 480103MB | 500 | 406 | 81 | --SLOW-- |
| sdh | 480103MB | 519 | 450 | 87 | --SLOW-- |
| sdi | 480103MB | 499 | 400 | 80 | --SLOW-- |
| sdj | 480103MB | 505 | 433 | 86 | --SLOW-- |
| sdk | 480103MB | 507 | 414 | 82 | --SLOW-- |
| sdl | 480103MB | 499 | 401 | 80 | --SLOW-- |
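To make the split between the two controller types easier to see, the results above can be summarised per controller. This is only a quick sketch: the numbers are copied from the output above, the grouping follows the drive-to-controller mapping I described, and the names (`RESULTS`, `ONBOARD`, `mean_parallel`) are made up for illustration.

```python
# Serial and parallel read speeds (MB/s), copied from the solnet-array-test output above.
RESULTS = {
    "sda": (496, 471), "sdb": (500, 544), "sdc": (507, 534), "sdd": (493, 414),
    "sde": (516, 543), "sdf": (502, 411), "sdg": (500, 406), "sdh": (519, 450),
    "sdi": (499, 400), "sdj": (505, 433), "sdk": (507, 414), "sdl": (499, 401),
}

# Drive-to-controller mapping as described in the post (hypothetical constant name).
ONBOARD = {"sda", "sdb", "sdc", "sde"}


def percent_of_serial(disk):
    """Parallel speed as a rounded percentage of the serial speed for one disk."""
    serial, parallel = RESULTS[disk]
    return round(100 * parallel / serial)


def mean_parallel(disks):
    """Average parallel read speed (MB/s) over a group of disks."""
    return sum(RESULTS[d][1] for d in disks) / len(disks)


if __name__ == "__main__":
    m2 = set(RESULTS) - ONBOARD
    print(f"onboard SATA: {mean_parallel(ONBOARD):.0f} MB/s per drive in parallel")
    print(f"ASM1166 M.2 : {mean_parallel(m2):.0f} MB/s per drive in parallel")
```

That works out to roughly 523 MB/s per drive on the onboard ports versus about 416 MB/s per drive on the M.2 cards.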

Next I’ll entirely remove 1 drive from each M.2 card to see whether they can catch up with the onboard SATA.

So, six drives on onboard SATA make things slow, and 4 drives on each of the ASM1166 cards isn’t too great either (but still better than six drives on onboard SATA).
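A rough back-of-the-envelope check of why four drives per ASM1166 might already be too many. Big assumptions here: that the ASM1166 sits on a PCIe 3.0 x2 uplink (that is my understanding of its spec, please verify for your card), that a Gen3 lane carries about 985 MB/s before protocol overhead, and that each SSD tops out around 500 MB/s. Function and constant names are made up.

```python
# Approximate raw payload rate of one PCIe 3.0 lane: 8 GT/s with 128b/130b encoding.
GEN3_LANE_MBPS = 8000 * 128 / 130 / 8  # ~985 MB/s

# ASSUMPTION: the ASM1166 uses a PCIe 3.0 x2 uplink; verify with lspci -vv.
ASM1166_LANES = 2


def per_drive_ceiling(drives, lanes=ASM1166_LANES, ssd_mbps=500.0):
    """Upper bound on MB/s per drive when all drives read at the same time."""
    link = lanes * GEN3_LANE_MBPS
    return min(ssd_mbps, link / drives)


if __name__ == "__main__":
    for n in (4, 3):
        print(f"{n} drives: at most {per_drive_ceiling(n):.0f} MB/s each")
```

Under these assumptions, four drives are already capped slightly below what a single SATA SSD can deliver, and real protocol overhead pushes the ceiling lower still, while three drives would no longer be limited by the uplink.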

A tool like solnet-array-test-v3.sh for write testing would be great. During write benchmarks with CrystalDiskMark, I only see around 100 MB/s of throughput on the SSDs, so bandwidth can’t be the bottleneck here. Maybe in that case it really is just the weak ASMedia SATA controllers. Maybe this is the point where an LSI card would perform better. ALTHOUGH, in the i5-8400T system from the initial post, with an ASM1164 (which seems to be almost the same as the ASM1166), I get DOUBLE the write performance. So something leaves me scratching my head…

I found only one and that’s apparently not sold anymore, unfortunately. Were you more lucky than me?

Well, Gigabyte lists it under Enterprise/Workstation.

It comes with server features like no sound and an Aspeed® AST2500 management controller with GIGABYTE Management Console (AMI MegaRAC SP-X) web interface.

FWIW I’m using an old Gigabyte X570 board in one of my NAS boxes at home, plus a PLX-based x16 card with four M.2 x4 slots, so I am not casting shade.

I’m just saying a workstation board based on the desktop-grade platform does not have the PCIe connectivity of a real server platform.

Yeah well, it was more or less an impulse buy. I got mine just before prices skyrocketed, for around 50€, and since then I’ve played around with it and thought it would make a wonderful successor to my current TrueNAS SAN, which is based on said Fujitsu Esprimo P758. The main advantage for me was the ability to put in two SLOG devices and more SATA drives for higher capacity and more performance (so I thought), as well as the more powerful CPU for maybe higher compression and/or dedup (already ditched that idea). Plus, a BMC is very nice to have.

Also, one of my main goals is always low power. At 55-60W idle it is not the lowest power system, but given the grunt it has, the number of disks and the total bang/buck ratio for the investment, it seemed good enough for me overall. More modern platforms, or even some server platforms, may be lower power but are usually also way more expensive to buy.

I was naive enough to think that the 6 onboard SATA ports would get 100% of the promised bandwidth concurrently, that bifurcation overhead doesn’t exist and that the ASM1166 and onboard SATA would perform as well as any other SATA controller.

If I can find a (kind of) low power, low profile LSI card + an x16 to x8/M.2/M.2 riser card, I might follow that route. Low profile, because apparently one can fit the LP LSI card in line with the riser into a normal full profile PCIe slot.

Actually, it is free. That doesn’t mean that attaching extra stuff is going to magically scale linearly, but bifurcation is just a fancy word for supporting multiple root port configurations on a single, large physical slot.
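To illustrate what "multiple root port configurations on a single slot" means in practice, here is a small sketch that checks whether a set of devices gets full-width ports under a given split of an x16 slot. The layout list is an assumption for illustration; which splits a given board actually exposes depends on CPU and BIOS. The function name `fits` is made up, and a device in a narrower port would usually still work, just at reduced width.

```python
# Lane splits commonly offered for an x16 slot. ASSUMPTION: which of these a
# given board exposes depends on CPU and BIOS; this list is illustrative only.
X16_LAYOUTS = [(16,), (8, 8), (8, 4, 4), (4, 4, 4, 4)]


def fits(layout, devices):
    """True if every device (lanes wanted) gets its own port of at least that width."""
    ports = sorted(layout, reverse=True)
    wanted = sorted(devices, reverse=True)
    if len(wanted) > len(ports):
        return False
    # Widest device goes to the widest port, and so on down the list.
    return all(port >= dev for port, dev in zip(ports, wanted))


if __name__ == "__main__":
    devices = [8, 4, 4]  # one x8 HBA plus two x4 M.2 devices
    for layout in X16_LAYOUTS:
        assert sum(layout) == 16  # every split uses the same 16 physical lanes
        label = "x".join(str(width) for width in layout)
        print(label, "->", "ok" if fits(layout, devices) else "no")
```

The point is that the lanes are only partitioned, not shared: each port gets its own fixed slice of the 16 lanes, which is why the x8x4x4 adapter mentioned earlier matches an x8 card plus two M.2 devices.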

Ehh fair enough. I’d say that in my experience (in practice) it’s not been free. But this isn’t a hill I’d like to die on lol

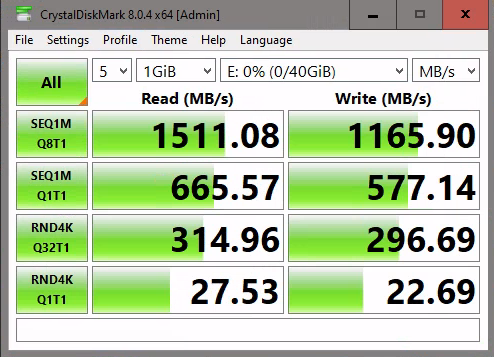

welp, i threw in a LSI SAS3008 based PCIe 3.0 x8 controller with IT firmware (some Fujitsu branded doodad which I crossflashed at some point in time) and all I get with 8 disks is this:

My wimpy 8400T with its 5 onboard intel AHCI SATA ports + 3 on a cheap aliexpress ASM1164 card is still way faster in writes.

The write benchmark isn’t saturating the SSD or link bandwidth at all; it only does around 220 MB/s per disk for the SEQ1MQ8T1 test, so I’d suspect at this point that it isn’t PCIe bandwidth that is limiting. The LSI card should have enough grunt as well, so I’m once again stranded.

Is the LSI card running in full PCIe 3.0 x8 mode? You can check by running lspci -vv

Look under “LnkSta”

In this example, I have an x8 card running at x4 in my X570 system. Just because it’s x8 physically doesn’t mean it’s x8 electrically. Speed 8GT/s corresponds to PCIe Gen3.

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM not supported

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x4 (downgraded)
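If you want to compare the two lines without eyeballing them, something like the following sketch pulls speed and width out of lspci -vv text and turns them into an approximate bandwidth. The per-lane figures are approximations after line encoding only (no protocol overhead), and `parse_links`, `link_mbps` and `SAMPLE` are names made up for this example.

```python
import re

# Approximate MB/s per lane after line encoding
# (8b/10b for 2.5 and 5 GT/s, 128b/130b for 8 and 16 GT/s).
LANE_MBPS = {"2.5GT/s": 250.0, "5GT/s": 500.0, "8GT/s": 984.6, "16GT/s": 1969.2}

# Matches the LnkCap and LnkSta lines, but not LnkCap2/LnkSta2.
LINK_RE = re.compile(r"(LnkCap|LnkSta):.*?Speed ([\d.]+GT/s).*?Width x(\d+)")


def parse_links(lspci_text):
    """Return {'LnkCap': (speed, width), 'LnkSta': (speed, width)} from lspci -vv text."""
    return {m.group(1): (m.group(2), int(m.group(3))) for m in LINK_RE.finditer(lspci_text)}


def link_mbps(speed, width):
    """Approximate one-direction link bandwidth in MB/s."""
    return LANE_MBPS[speed] * width


# Two lines taken from the example output above.
SAMPLE = """\
LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM not supported
LnkSta: Speed 8GT/s, Width x4 (downgraded)
"""

if __name__ == "__main__":
    links = parse_links(SAMPLE)
    cap, sta = links["LnkCap"], links["LnkSta"]
    print("capable of", cap, "->", round(link_mbps(*cap)), "MB/s")
    print("running at", sta, "->", round(link_mbps(*sta)), "MB/s")
    if sta != cap:
        print("link is running below its capability")
```

In practice you would feed it the output of lspci -vv for the one device you care about instead of the hard-coded sample.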

If it’s not the above, the next logical place to look would be the network card; it pre-dates even the X520. You have a PCIe 2.0 card which was launched in 2009.

https://www.intel.com/content/www/us/en/products/sku/41282/intel-82599es-10-gigabit-ethernet-controller/specifications.html

You mentioned being concerned about power draw. I’m sure that thing is pretty inefficient anyway.

I actually got two of those, one literally just before the price tripled.

One replaced an ASRock AM1B-ITX with an AMD 5350 processor.

The other replaced an Asus consumer board with a Xeon processor, from a time when that combination was still possible. I had another one of those, and as that one had failed recently, I replaced it with the MC12.

I liked the features of the MC12 and ECC, of course. It was a cheap upgrade to play around with, but I suspected there had to be a catch somewhere, so maybe it is the B550 chipset and its PCIe 3.0 x4 connection to the CPU.

Edit: Maybe try Core on the new build, if that is not too much hassle to set up?

It is a genuine server board… built on a platform intended for desktops, so it may not have the best implementation of each and every server trick that a Xeon Scalable or EPYC platform could pull, but it is not really meant to.

Key point here is that you cannot argue against a socketed server motherboard that comes under 100 € NEW (as in “new old stock”).

Yeah it says

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L0s, Exit Latency L0s <2us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x8

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

NIC says

LnkCap: Port #1, Speed 5GT/s, Width x8, ASPM L0s, Exit Latency L0s <1us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp-

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 5GT/s, Width x4 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

BUT the server I’m running the benchmark from has the same card (on an x8 link though). Seeing the read results, it is clearly capable of putting through 1.5 GByte/s, and I don’t see how writes could be affected differently by the NIC than reads. But I’ll try a different card anyway, just to be sure.

Regarding power draw: it is not that bad. I don’t remember exactly, but I think it was a little less than 10W including SFP+ transceivers.

especially including BMC. that’s actually wild.

BROTHER, with CORE it was even weirder. I actually started with that, because I had been using Core all the time before and everything worked well for me. I’d get horrible performance, way slower, like around 200 MB/s for the read test which now does 1.5 GByte/s out of the box on SCALE.

UNLESS I did a benchmark within a VM on that TrueNAS Core installation at the very same time with the remote benchmark over iSCSI. In that case, I’d get “decent” results. Absolutely wild, just as if something was sleeping and needed to be put into some different performance state/level by some trigger.

btw, I got 3 of them as well LMAO

one for around 50€ and the other two for around 70€

YO

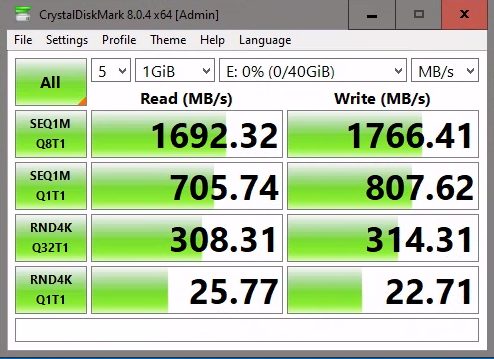

swapped the 82599ES for a dual-port PCIe 2.0 x8 BCM57810 which is JUST as ancient and boom.

funnily enough, the server the benchmark is running on (VM on top of ESXi) still has an 82599ES installed lmao

I hate drivers and firmware.

Currently I still have the LSI card and the Intel SSDs in it. I’ll now revert the system to its original state with the ASM1166 modules, Optanes and Samsung PM863 SSDs, but with the BCM57810 NIC and see how things go.

Little bit of a different topic, but what affordable, low power, more modern 10G (maybe 10/25G) card could I buy? I heard the ConnectX-3 are good, but I’ve also read about issues with energy efficiency due to missing or buggy(?) ASPM support (iirc?).

Glad we got that sorted. Cheers.

None, really. Something odd must be going on, because the X520/82599 works well and has been around forever. Fun fact, the last update to the datasheet is dated from November. Yeah, last month.

Sure, the X710 is a thing, but I’m not sure those are lower power - they do have more features that few people use. From there, it’s 25 GbE and up.

I’m a big fan of ConnectX4-lx cards, even for 10 gigabit.

NVIDIA Mellanox ConnectX-4 Lx Ethernet Adapter | NVIDIA (pb-connectx-4-lx-en-card.pdf)

NVIDIA doesn’t say what the power draw of the card is, but the X520/82599 is a decade (2009 vs 2019) behind in process nodes in comparison, so “it’ll be less”. The spec sheet in STH’s review says 7.4 watts.

Mellanox ConnectX-4 Lx Mini-Review Ubiquitous 25GbE - ServeTheHome

I have ConnectX4 or ConnectX4-LX cards in 3 SCALE boxes and they have worked flawlessly. They sell for less than $50 on eBay pretty regularly, and I’ve seen them as low as the $20s. Can’t speak for this seller, just an example.

MRT0D DELL MELLANOX CX4121C CONNECTX-4LX 25GB DUAL PORT ADAPTER 0MRT0D | eBay

idk maybe weird combination of things, maybe old firmware on the NIC (haven’t even checked). opting for more modern cards is probably the way to go anyway.