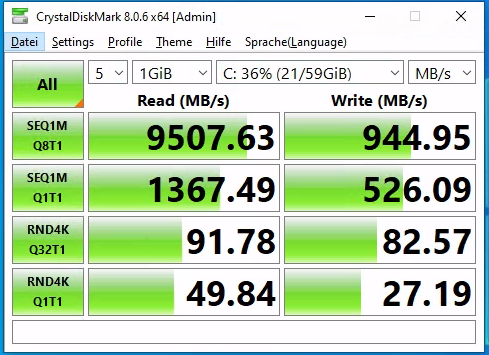

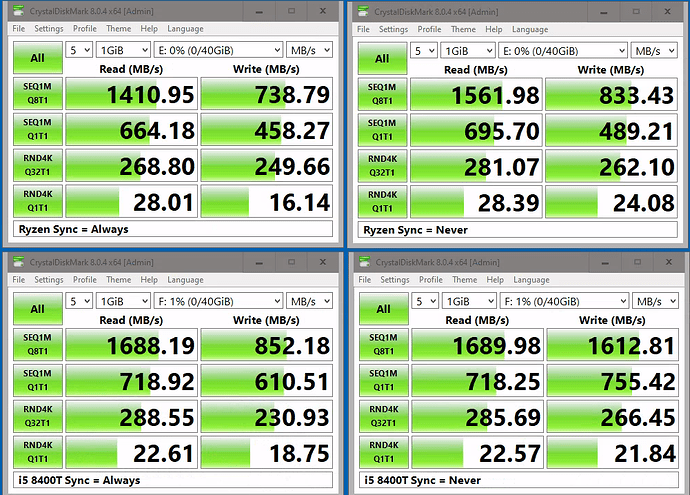

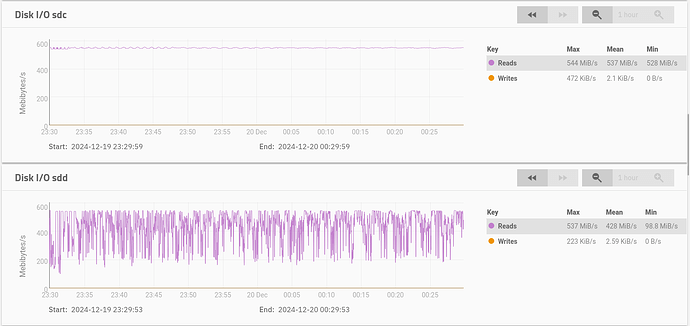

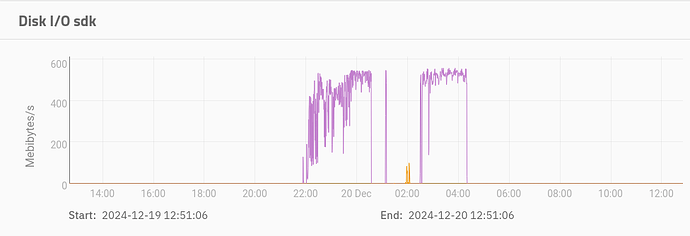

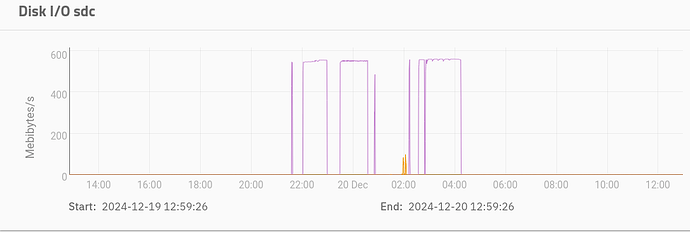

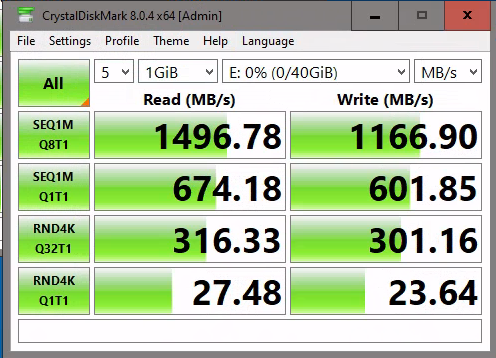

Thanks, the script has been running for two hours now and is still going. What I have found so far is that 5 of the 12 drives are giving me slow and inconsistent read speeds. Here is an example of a disk that behaves OK, followed by one of the 5 that behave weirdly:

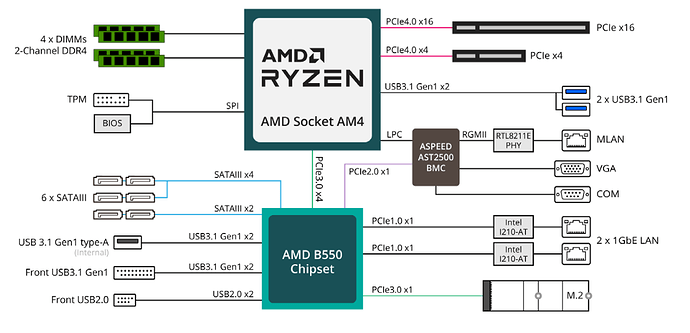

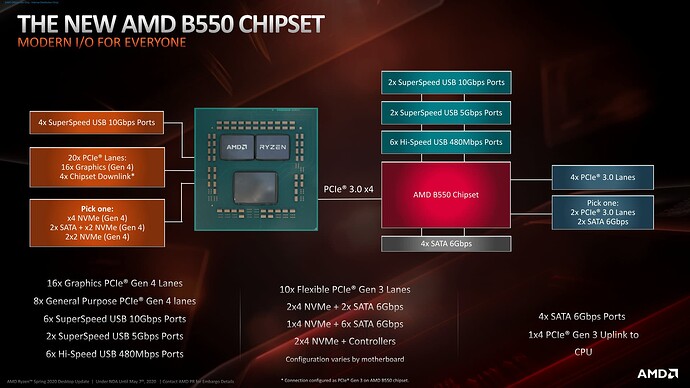

Oddly enough, 3 of them are on the ASM1166 M.2 cards, but the other two are on the onboard B550-backed SATA ports.

Looking at the block diagram of the motherboard, I can see that the chipset hangs off a PCIe 3.0 x4 link, which imho should be enough for 6 SATA3 drives, assuming that I’m not using the onboard LAN for anything and the onboard M.2 only for booting. I’m assuming that the chipset acts as a PCIe switch and can therefore give all of that bandwidth to the SATA drives if needed.
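As a rough back-of-the-envelope check of that assumption, here is a small Python sketch comparing the theoretical payload rate of a PCIe 3.0 x4 link with six SATA3 ports running flat out. It only accounts for line encoding (128b/130b for PCIe 3.0, 8b/10b for SATA) and ignores protocol overhead and anything else sharing the link, so the numbers are upper bounds, not measurements.

```python
# Theoretical ceilings only: line rate after encoding, no protocol overhead.
PCIE3_TRANSFERS_PER_LANE = 8e9   # PCIe 3.0: 8 GT/s per lane
PCIE3_ENCODING = 128 / 130       # 128b/130b line encoding
SATA3_LINE_RATE = 6e9            # SATA3: 6 Gb/s per port
SATA3_ENCODING = 8 / 10          # 8b/10b line encoding


def pcie3_bytes_per_sec(lanes: int) -> float:
    """Payload bytes per second of a PCIe 3.0 link with the given lane count."""
    return PCIE3_TRANSFERS_PER_LANE * PCIE3_ENCODING * lanes / 8


def sata3_bytes_per_sec(drives: int) -> float:
    """Combined payload bytes per second of `drives` saturated SATA3 ports."""
    return SATA3_LINE_RATE * SATA3_ENCODING * drives / 8


uplink = pcie3_bytes_per_sec(4)
sata = sata3_bytes_per_sec(6)
print(f"PCIe 3.0 x4 link: {uplink / 1e9:.2f} GB/s")
print(f"6 x SATA3 ports:  {sata / 1e9:.2f} GB/s")
print(f"headroom:         {(uplink - sata) / 1e9:.2f} GB/s")
```

On paper that leaves roughly 0.34 GB/s of headroom, so the link should be enough, but not by a wide margin.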

| Disk | Bytes Transferred | Seconds | %ofAvg | |
|---|---:|---:|---:|---|
| sda | 1920383410176 | 3411 | 98 | |
| sdb | 1920383410176 | 3410 | 98 | |
| sdc | 1920383410176 | 3409 | 98 | |
| sdd | 1920383410176 | 5122 | 147 | --SLOW-- |
| sde | 1920383410176 | 3409 | 98 | |
| sdf | 1920383410176 | 4880 | 140 | --SLOW-- |
| sdg | 1920383410176 | 3406 | 98 | |
| sdh | 1920383410176 | 4855 | 139 | --SLOW-- |
| sdi | 1920383410176 | 3411 | 98 | |
| sdj | 1920383410176 | 3406 | 98 | |
| sdk | 1920383410176 | 5246 | 150 | --SLOW-- |
| sdl | 1920383410176 | 4860 | 139 | --SLOW-- |
| nvme2n1 | 58977157120 | 22 | 1 | ++FAST++ |
| nvme0n1 | 58977157120 | 6 | 0 | ++FAST++ |
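To make those timings easier to read, here is a small Python sketch that turns bytes and seconds into average throughput for the 12 SATA disks. The numbers are copied from the results above; the 450 MB/s cut-off is just a threshold I picked to separate the two groups, not something the script uses.

```python
# (disk, bytes transferred, seconds) for the SATA disks, copied from the results.
SIZE = 1920383410176
RESULTS = [
    ("sda", SIZE, 3411), ("sdb", SIZE, 3410), ("sdc", SIZE, 3409),
    ("sdd", SIZE, 5122), ("sde", SIZE, 3409), ("sdf", SIZE, 4880),
    ("sdg", SIZE, 3406), ("sdh", SIZE, 4855), ("sdi", SIZE, 3411),
    ("sdj", SIZE, 3406), ("sdk", SIZE, 5246), ("sdl", SIZE, 4860),
]

SLOW_BELOW_MBPS = 450  # arbitrary cut-off chosen for this illustration


def mb_per_sec(nbytes: int, seconds: int) -> float:
    """Average throughput in MB/s (1 MB = 10**6 bytes)."""
    return nbytes / seconds / 1e6


slow = [disk for disk, nbytes, secs in RESULTS
        if mb_per_sec(nbytes, secs) < SLOW_BELOW_MBPS]

for disk, nbytes, secs in RESULTS:
    flag = "  <-- slow" if disk in slow else ""
    print(f"{disk}: {mb_per_sec(nbytes, secs):6.1f} MB/s{flag}")
```

The healthy disks average around 563 MB/s over the full read, which is close to what SATA3 can deliver, while the five slow ones land somewhere between about 366 and 396 MB/s.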

The NVMe disks are only used when I’m doing sync writes. Since this is about reads and async writes (sync=disabled), imo they don’t matter here.

The SMART details for sdd, sdf, sdh, sdk and sdl look clean to me. They all look like this (sdf), and they’re all about the same age and wear level:

smartctl 7.4 2023-08-01 r5530 [x86_64-linux-6.6.44-production+truenas] (local build)

Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Samsung based SSDs

Device Model: SAMSUNG MZ7LM1T9HCJM-00003

Serial Number: deleted

LU WWN Device Id: deleted

Firmware Version: GXT3003Q

User Capacity: 1,920,383,410,176 bytes [1.92 TB]

Sector Size: 512 bytes logical/physical

Rotation Rate: Solid State Device

TRIM Command: Available, deterministic, zeroed

Device is: In smartctl database 7.3/5528

ATA Version is: ACS-2, ATA8-ACS T13/1699-D revision 4c

SATA Version is: SATA 3.1, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Fri Dec 20 00:23:52 2024 CET

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

AAM feature is: Unavailable

APM feature is: Unavailable

Rd look-ahead is: Enabled

Write cache is: Enabled

DSN feature is: Unavailable

ATA Security is: Disabled, frozen [SEC2]

Wt Cache Reorder: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x02) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Disabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 6000) seconds.

Offline data collection

capabilities: (0x53) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

No Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 100) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 1

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAGS VALUE WORST THRESH FAIL RAW_VALUE

5 Reallocated_Sector_Ct PO--CK 100 100 010 - 0

9 Power_On_Hours -O--CK 091 091 000 - 45111

12 Power_Cycle_Count -O--CK 099 099 000 - 33

177 Wear_Leveling_Count PO--C- 097 097 005 - 220

179 Used_Rsvd_Blk_Cnt_Tot PO--C- 100 100 010 - 0

180 Unused_Rsvd_Blk_Cnt_Tot PO--C- 100 100 010 - 9682

181 Program_Fail_Cnt_Total -O--CK 100 100 010 - 0

182 Erase_Fail_Count_Total -O--CK 100 100 010 - 0

183 Runtime_Bad_Block PO--C- 100 100 010 - 0

184 End-to-End_Error PO--CK 100 100 097 - 0

187 Uncorrectable_Error_Cnt -O--CK 100 100 000 - 0

190 Airflow_Temperature_Cel -O--CK 045 044 000 - 55

195 ECC_Error_Rate -O-RC- 200 200 000 - 0

197 Current_Pending_Sector -O--CK 100 100 000 - 0

199 CRC_Error_Count -OSRCK 100 100 000 - 0

202 Exception_Mode_Status PO--CK 100 100 010 - 0

235 POR_Recovery_Count -O--C- 099 099 000 - 16

241 Total_LBAs_Written -O--CK 099 099 000 - 352246306774

242 Total_LBAs_Read -O--CK 099 099 000 - 2145768260254

243 SATA_Downshift_Ct -O--CK 100 100 000 - 0

244 Thermal_Throttle_St -O--CK 100 100 000 - 0

245 Timed_Workld_Media_Wear -O--CK 100 100 000 - 65535

246 Timed_Workld_RdWr_Ratio -O--CK 100 100 000 - 65535

247 Timed_Workld_Timer -O--CK 100 100 000 - 65535

251 NAND_Writes -O--CK 100 100 000 - 924262626312

||||||_ K auto-keep

|||||__ C event count

||||___ R error rate

|||____ S speed/performance

||_____ O updated online

|______ P prefailure warning

General Purpose Log Directory Version 1

SMART Log Directory Version 1 [multi-sector log support]

Address Access R/W Size Description

0x00 GPL,SL R/O 1 Log Directory

0x01 SL R/O 1 Summary SMART error log

0x02 SL R/O 1 Comprehensive SMART error log

0x03 GPL R/O 1 Ext. Comprehensive SMART error log

0x06 SL R/O 1 SMART self-test log

0x07 GPL R/O 1 Extended self-test log

0x09 SL R/W 1 Selective self-test log

0x10 GPL R/O 1 NCQ Command Error log

0x11 GPL R/O 1 SATA Phy Event Counters log

0x13 GPL R/O 1 SATA NCQ Send and Receive log

0x30 GPL,SL R/O 9 IDENTIFY DEVICE data log

0x80-0x9f GPL,SL R/W 16 Host vendor specific log

0xe0 GPL,SL R/W 1 SCT Command/Status

0xe1 GPL,SL R/W 1 SCT Data Transfer

SMART Extended Comprehensive Error Log Version: 1 (1 sectors)

No Errors Logged

SMART Error Log Version: 1

No Errors Logged

SMART Extended Self-test Log Version: 1 (1 sectors)

No self-tests have been logged. [To run self-tests, use: smartctl -t]

SMART Self-test log structure revision number 1

No self-tests have been logged. [To run self-tests, use: smartctl -t]

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

255 0 65535 Read_scanning was completed without error

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

SCT Status Version: 3

SCT Version (vendor specific): 256 (0x0100)

Device State: SCT command executing in background (5)

Current Temperature: 55 Celsius

Power Cycle Min/Max Temperature: 26/56 Celsius

Lifetime Min/Max Temperature: 0/70 Celsius

Under/Over Temperature Limit Count: 4294967295/4294967295

SCT Temperature History Version: 3 (Unknown, should be 2)

Temperature Sampling Period: 1 minute

Temperature Logging Interval: 10 minutes

Min/Max recommended Temperature: 0/70 Celsius

Min/Max Temperature Limit: 0/70 Celsius

Temperature History Size (Index): 128 (17)

Index Estimated Time Temperature Celsius

18 2024-12-19 03:10 ? -

... ..(108 skipped). .. -

127 2024-12-19 21:20 ? -

0 2024-12-19 21:30 26 *******

1 2024-12-19 21:40 35 ****************

2 2024-12-19 21:50 43 ************************

3 2024-12-19 22:00 38 *******************

4 2024-12-19 22:10 43 ************************

5 2024-12-19 22:20 50 *******************************

6 2024-12-19 22:30 53 **********************************

7 2024-12-19 22:40 53 **********************************

8 2024-12-19 22:50 52 *********************************

9 2024-12-19 23:00 50 *******************************

10 2024-12-19 23:10 48 *****************************

11 2024-12-19 23:20 46 ***************************

12 2024-12-19 23:30 45 **************************

13 2024-12-19 23:40 50 *******************************

14 2024-12-19 23:50 54 ***********************************

15 2024-12-20 00:00 56 *************************************

16 2024-12-20 00:10 56 *************************************

17 2024-12-20 00:20 55 ************************************

SCT Error Recovery Control:

Read: Disabled

Write: Disabled

Device Statistics (GP/SMART Log 0x04) not supported

Pending Defects log (GP Log 0x0c) not supported

SATA Phy Event Counters (GP Log 0x11)

ID Size Value Description

0x0001 2 0 Command failed due to ICRC error

0x0002 2 0 R_ERR response for data FIS

0x0003 2 0 R_ERR response for device-to-host data FIS

0x0004 2 0 R_ERR response for host-to-device data FIS

0x0005 2 0 R_ERR response for non-data FIS

0x0006 2 0 R_ERR response for device-to-host non-data FIS

0x0007 2 0 R_ERR response for host-to-device non-data FIS

0x0008 2 0 Device-to-host non-data FIS retries

0x0009 2 2 Transition from drive PhyRdy to drive PhyNRdy

0x000a 2 2 Device-to-host register FISes sent due to a COMRESET

0x000b 2 0 CRC errors within host-to-device FIS

0x000d 2 0 Non-CRC errors within host-to-device FIS

0x000f 2 0 R_ERR response for host-to-device data FIS, CRC

0x0010 2 0 R_ERR response for host-to-device data FIS, non-CRC

0x0012 2 0 R_ERR response for host-to-device non-data FIS, CRC

0x0013 2 0 R_ERR response for host-to-device non-data FIS, non-CRC
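Rather than eyeballing twelve of these dumps, the attribute lines can be checked with a few lines of Python. This is only a sketch: it assumes the whitespace-separated attribute format shown above with a plain integer as the raw value, and the list of attribute IDs to watch is my own pick of error-type counters, not an official one.

```python
# A few attribute lines copied from the sdf output above.
SAMPLE = """\
  5 Reallocated_Sector_Ct PO--CK 100 100 010 - 0
187 Uncorrectable_Error_Cnt -O--CK 100 100 000 - 0
190 Airflow_Temperature_Cel -O--CK 045 044 000 - 55
199 CRC_Error_Count -OSRCK 100 100 000 - 0
243 SATA_Downshift_Ct -O--CK 100 100 000 - 0
244 Thermal_Throttle_St -O--CK 100 100 000 - 0
"""

# Counters that should stay at zero on a healthy drive (my own selection).
WATCH = {5, 181, 182, 183, 184, 187, 197, 199, 243, 244}


def parse_attributes(text: str) -> dict:
    """Map attribute ID -> (name, raw value) for lines in the format above."""
    attrs = {}
    for line in text.splitlines():
        parts = line.split()
        # Expect: ID NAME FLAGS VALUE WORST THRESH FAIL RAW_VALUE
        if len(parts) >= 8 and parts[0].isdigit() and parts[7].isdigit():
            attrs[int(parts[0])] = (parts[1], int(parts[7]))
    return attrs


def nonzero_watched(attrs: dict) -> dict:
    """Return only the watched counters whose raw value is not zero."""
    return {i: v for i, v in attrs.items() if i in WATCH and v[1] != 0}


attrs = parse_attributes(SAMPLE)
print("suspicious counters:", nonzero_watched(attrs) or "none")
```

For sdf this reports nothing suspicious, which matches my reading of the output: no CRC errors, no SATA downshifts and no thermal throttling logged.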

The remaining question is why these 5 disks are slower.

I’ll probably move them around between ports and see whether the slowness moves with them or not.
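To keep track of which disk sits behind which controller before and after swapping, something like the following Python sketch could help. It assumes a Linux system where `/sys/block/<disk>` resolves to a path containing the PCI addresses of the bridges and the controller; the example path in the comment is made up for illustration, not taken from my box.

```python
import os
import re
from typing import List, Optional

# A full PCI address looks like 0000:03:00.1 (domain:bus:device.function).
PCI_ADDRESS = re.compile(r"[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]")


def pci_chain(sysfs_path: str) -> List[str]:
    """PCI addresses found in a resolved sysfs path, from root side to controller.

    Hypothetical example path:
    /sys/devices/pci0000:00/0000:00:02.1/0000:03:00.1/ata5/host4/.../block/sdd
    """
    return [part for part in sysfs_path.split("/") if PCI_ADDRESS.fullmatch(part)]


def controller_of(disk: str) -> Optional[str]:
    """PCI address of the controller a disk such as 'sdd' is attached to."""
    chain = pci_chain(os.path.realpath(f"/sys/block/{disk}"))
    return chain[-1] if chain else None


if __name__ == "__main__" and os.path.isdir("/sys/block"):
    for disk in sorted(os.listdir("/sys/block")):
        if disk.startswith("sd"):
            print(disk, controller_of(disk))
```

Since the sdX names can change between boots, it makes sense to note the serial numbers alongside this output, so a slow drive can still be identified after it has moved to another port.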

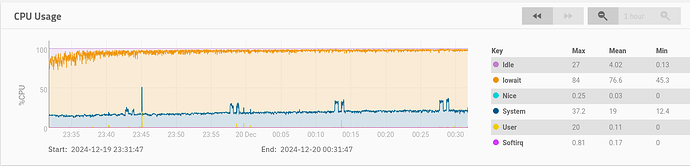

CPU is mostly iowait-ing, btw: