Hello! It has been so long since I last posted that the forums have moved and FreeNAS is now called TrueNAS. I forgot my username on the old forum, but I didn’t post enough to be remembered anyway. Back in 2014 or so I did write a couple of How To posts that hopefully helped some folks. lol

I am still running a FreeNAS release from 2 or 3 years ago and wanted to do a much-needed upgrade. I am about to travel around a lot, and I am taking my NAS with me in my truck/camper. Living my dream! Currently I have low-capacity spinning rust in a full tower case that I was able to cram drive caddies into (a Raven V2, I think). I want to move to a smaller case and hopefully lower power consumption by running more SATA SSDs than spinning rust. I will also need to move this thing around frequently, and SSDs are much lighter. I will be sacrificing storage space, but I am not using as much as I thought I would when I built my current NAS in 2015 or so.

The purpose of the server is to serve up media on a stable TrueNAS SCALE release. Other uses: home automation, an NVR as a VM, iSCSI block storage for my Steam library, and home lab stuff with VMs (running Linux systems to test them out, running Docker tools, etc.). My new case will have 4 drive bays that can take full-size 3.5-inch spinning rust or the smaller SSDs (with adapters). The mobo I chose has a slot for an NVMe drive to use as the TrueNAS SCALE boot drive. No redundancy on the boot drive; I don’t need 99% uptime. In fact, I will probably power the NAS off frequently when it is not in use. This NAS will be on the move.

After reading this forum for several days and looking up many options, here is my build. Comments and advice are welcome!! Thank you in advance! I will try my best not to ramble and just give relevant facts. This will be my 3rd FreeNAS/TrueNAS build.

Case:

45Drives HL4 with power supply and backplane. For data cables I chose SATA: SFF-8643 to 4x 7-pin SATA. (Paid 100% full price.)

Motherboard

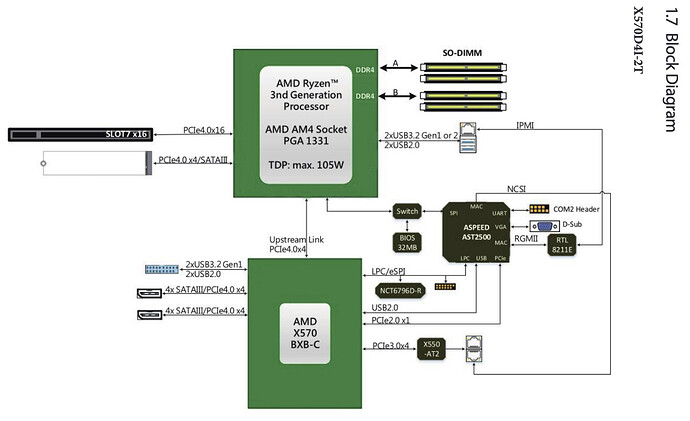

ASRock Rack X570D4I-2T (open box special!)

CPU

Ryzen 5 PRO 5650GE (I WILL report back on whether ECC works or not, as the forums were not terribly clear. Found one used!)

CPU cooler

Noctua NH-L9i low-profile 37 mm PWM CPU cooler (Intel LGA1151/1150 mount)

(Someone was selling a used one!! Nice!)

RAM

NEMIX RAM 32GB (1x32GB) DDR4 2666MHz PC4-21300 2Rx8 1.2V CL19 260-pin ECC Unbuffered SODIMM, compatible with Samsung M474A4G43MB1-CTD (so it’s not Samsung; hopefully a decent clone… good price)

Network

The mobo comes with two 10 gig copper NICs (RJ-45), and my cable runs will be short, very short, since I am moving into a tiny trailer (I want to be Chris Farley and live in a VAN down by the RIVER!)

MikroTik 10 gig 4-port SFP+ switch, plus 3x SFP+ to RJ-45 adapters

Main PC (gaming and work… lots of data transfers) has a 2-port 10 gig SFP+ Chelsio card

I have some UniFi switches that are only 1 gig, but they are not doing the bulk of the data transfers. I will be getting rid of those soon.

Lastly, I have a Blackhawk router that is maybe a bit outdated. It has an SFP or SFP+ port (only 2.5 gig?), so it might still connect to the NAS quickly. Another thing to test. For the road I will probably find a much more energy-efficient option for Wi-Fi.

Storage (on current NAS, approximately)

5x 3TB WD Red drives, 5600 RPM

3x 4TB WD Red drives, 5600 RPM

2x 128GB SATA SSDs for cache. I hope they are the right kind; I may not put them into the new build, since I have not noticed a difference from using them. I have watched 4-hour lectures on ZFS and still don’t completely understand when those caches become helpful. Perhaps the way I use my NAS just doesn’t benefit??

3x 4TB HGST enterprise drives that spin faster (7200 RPM, maybe?)

1x SATA DOM for boot.

Please note this is all being downsized and migrated. I also have a ton of 1TB SATA SSDs that I may start with, or I might buy 4x 2TB SATA SSDs. I would love help deciding! I have not quite looked into price per TB yet, because I can migrate my pools in that direction later. I have some time before I hit the road, nomad-style.
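To compare the two SSD options for the 4 bays, here is a rough capacity sketch. It assumes all four bays hold same-size drives and counts only mirror/parity overhead; it ignores ZFS metadata, padding, slop space, and the TB vs TiB difference, so real usable space will be somewhat lower. The `usable_tb` helper is just a made-up name for illustration.

```python
# Rough usable-capacity sketch for a 4-bay pool (raw arithmetic only).
# Assumption: ignores ZFS metadata, padding, and slop space, and uses
# vendor "decimal" TB, so real usable space will be somewhat lower.

def usable_tb(layout: str, drives: int, size_tb: float) -> float:
    """Return approximate usable TB for one simple pool layout."""
    if layout == "stripe":      # no redundancy at all
        return drives * size_tb
    if layout == "mirror":      # striped pairs of 2-way mirrors
        return (drives // 2) * size_tb
    if layout == "raidz1":      # one drive's worth of parity
        return (drives - 1) * size_tb
    if layout == "raidz2":      # two drives' worth of parity
        return (drives - 2) * size_tb
    raise ValueError(f"unknown layout: {layout}")

# The 1 TB SSDs on hand vs. buying 2 TB SSDs, 4 bays each.
for size in (1.0, 2.0):
    for layout in ("mirror", "raidz1", "raidz2"):
        print(f"4x {size:.0f} TB {layout:>6}: ~{usable_tb(layout, 4, size):.0f} TB usable")
```

Under these assumptions, 4x 2TB in RAIDZ1 lands around 6 TB usable, while striped mirrors give up more space (about 4 TB) in exchange for simpler resilvering and better random I/O.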

I am considering filling that PCIe Gen 4 x16 slot with a 4x NVMe card. I am fairly certain the ASRock supports PCIe bifurcation; I read the mainboard manual and it looks to support x4/x4/x4/x4. I hope I don’t have to buy a card with a PLX chip for that? Perhaps one of you knows? I know this board wasn’t entirely recommended, but for a home lab I think it might be the solution.
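Whether or not bifurcation works out, it helps to know where the bottleneck will be for iSCSI Steam storage. Below is a back-of-the-envelope comparison of theoretical link rates, before any protocol overhead. It computes both PCIe 4.0 and 3.0, on the assumption that the slot may train at Gen 3 with this CPU (the Ryzen 5000G-series APUs are generally listed as PCIe 3.0 parts, so that is worth checking). The helper names are hypothetical.

```python
# Back-of-the-envelope link bandwidths: theoretical maxima before
# protocol overhead (NVMe, SATA, TCP/IP, iSCSI), so real numbers are lower.

def pcie_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    # PCIe 3.0 (8 GT/s) and 4.0 (16 GT/s) both use 128b/130b encoding.
    return gt_per_s * 1e9 * lanes * (128 / 130) / 8 / 1e9

def sata3_gbytes_per_s() -> float:
    # SATA III: 6 Gb/s line rate with 8b/10b encoding.
    return 6e9 * (8 / 10) / 8 / 1e9

def ethernet_gbytes_per_s(gbit: float) -> float:
    # Nominal Ethernet data rate converted from Gb/s to GB/s.
    return gbit / 8

links = {
    "PCIe 4.0 x4 (one NVMe after x4/x4/x4/x4)": pcie_gbytes_per_s(16, 4),
    "PCIe 3.0 x4 (same, if the slot runs at Gen 3)": pcie_gbytes_per_s(8, 4),
    "SATA III (one SATA SSD)": sata3_gbytes_per_s(),
    "10 GbE (onboard NICs / SFP+ switch)": ethernet_gbytes_per_s(10),
    "2.5 GbE": ethernet_gbytes_per_s(2.5),
}

for name, rate in links.items():
    print(f"{name:<48} ~{rate:5.2f} GB/s")
```

Under these assumptions, a single 10 GbE link (about 1.25 GB/s) is slower than even one PCIe 3.0 x4 NVMe drive (about 3.9 GB/s), so for Steam over iSCSI the network, not the NVMe card, is the likely limit. It is roughly double one SATA SSD, though, so a striped or mirrored SATA SSD pool can get close to filling it.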

I will start with some old spinning rust and SATA SSDs, because that is what I have lying around (enough of the same sizes). I will also try out some NVMe drives I have lying around with a dummy card, to see if bifurcation is supported without the UEFI/BIOS doing some odd RAID config thing. That pool will mainly be iSCSI block storage for Steam. The manual is a little confusing regarding NVMe.

My current server is an old Supermicro board (X10 series, I think?) with an E3 Xeon and 32 gig of ECC DDR3. It is running fine; I just have to get the weight, size, and power consumption down. The new one should be easy to load on a dolly, if it even ends up weighing that much after getting rid of most of the WD Reds. My new mainboard needs DDR4, so I had to buy a single stick, again hoping to keep power consumption down.

I have liked what 45Drives is doing for a long time, but their stuff was way too large for my needs. Sooo I splurged.

The smaller form factor really appealed to me, and some bling too (custom front panel logo)! I am still using PC cases from the 90s, folks! I rebuild and rebuild, even in cases that cut my hands up, so a case like this will be well worth it. It will be a welcome change! My servers have always gone in better cases than my PC builds; those are all “sleeper” PCs. lol Thank goodness ATX has been ATX forever!

Well, be kind. I would love suggestions! Cost is not a huge concern for the system, but 1200 to 1400 USD for a Supermicro board was a bit much. I could find what I already have for a decent price, but it is micro-ATX.

I have always wanted to do a Mini-ITX build, so this is a chance to give that a go. Dare I say it? The case will be sexy AND functional. It looks to have great airflow if I manage my cables well. I hope the cooler has enough room? It is not very tall…

Thank you for coming to my Ted Talk!

Now… how do you set up TrueNAS permissions again??? lol I am already starting to lose sleep over them.