Hello all

(I have two questions, listed at the very end for anyone who wants to skip my lengthy background explanation.)

I am successfully running an official TrueNAS “app”, namely Frigate. It’s an NVR assisted by AI in the form of a Coral Edge TPU.

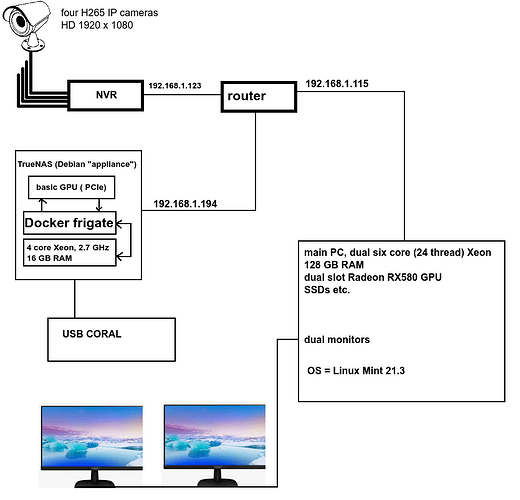

The block diagram above illustrates my setup, and the info in my signature gives additional details about my NAS hardware.

It’s all working; however …

On my main PC I want to watch the video clips produced by Frigate. However, I think there has been some confusion: the people who have tried to help me, and who know a lot about Frigate, might believe that I want to do decoding/transcoding on my TrueNAS box using its basic GPU.

I don’t want to do that. I want to use the significant resources of my main PC to convert/process and ultimately watch the video clips.

It turns out that Frigate stores its video recordings on a mount, and I can watch those MP4 files using, e.g., VLC or ffplay.

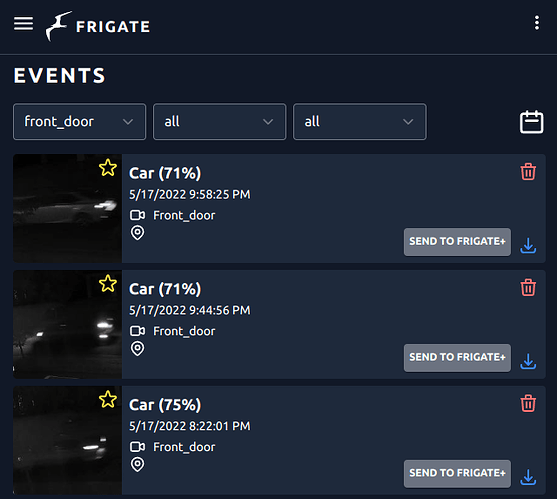
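For reference, here is a minimal Python sketch of doing the H265 to H264 conversion on the main PC rather than on the NAS. It only builds an ffmpeg command line. It assumes ffmpeg is installed on the main PC and that the build includes AMD's AMF encoder (`h264_amf`), which is typically only present in Windows builds compiled with AMF support; `libx264` is the software fallback. The file names are hypothetical.

```python
from __future__ import annotations

import shlex


def build_transcode_cmd(src: str, dst: str, use_amf: bool = True) -> list[str]:
    """Build an ffmpeg command that re-encodes an H.265 clip to H.264.

    Assumption: ffmpeg runs on the main PC. h264_amf is AMD's AMF hardware
    encoder (only in builds with AMF support); otherwise use libx264.
    """
    encoder = "h264_amf" if use_amf else "libx264"
    return [
        "ffmpeg",
        "-hwaccel", "auto",  # let ffmpeg pick a hardware decoder if one is available
        "-i", src,           # H.265 recording read from the Frigate mount
        "-c:v", encoder,     # re-encode the video stream to H.264
        "-c:a", "copy",      # pass any audio stream through unchanged
        dst,
    ]


if __name__ == "__main__":
    # Hypothetical file names; substitute a real clip from the recordings mount.
    cmd = build_transcode_cmd("front_door.mp4", "front_door_h264.mp4")
    print(shlex.join(cmd))
    # To actually transcode: subprocess.run(cmd, check=True)
```

Passing the returned list to `subprocess.run(cmd, check=True)` would perform the transcode entirely on the PC; the NAS only serves the file from the mount.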

It also turns out that the video clip “events” (short clips with thumbnails, I suppose) live in a different part of the UI. They summarise motion triggers and object recognition, and are supposed to be played back in the browser via HLS (HTTP Live Streaming), which is an entirely different mechanism.
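As far as the HLS spec goes, an HLS "stream" is just a text playlist (`.m3u8`) pointing at ordinary video segments that the client downloads and decodes itself. The sketch below parses a media playlist to show that structure; the sample playlist is illustrative and was not captured from Frigate.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Segment:
    duration: float  # seconds, taken from the #EXTINF tag
    uri: str         # segment URI, usually relative to the playlist URL


def parse_media_playlist(text: str) -> list[Segment]:
    """Return the segments listed in an HLS media playlist (RFC 8216)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an HLS playlist: missing #EXTM3U header")
    segments: list[Segment] = []
    pending: float | None = None  # duration waiting for its URI line
    for line in lines[1:]:
        if line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,[<title>]"
            pending = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif not line.startswith("#") and pending is not None:
            segments.append(Segment(pending, line))
            pending = None
        # Other tags (#EXT-X-MAP, #EXT-X-ENDLIST, ...) are ignored here.
    return segments


# Illustrative playlist with fragmented-MP4 segments (not from Frigate).
SAMPLE = """#EXTM3U
#EXT-X-VERSION:7
#EXT-X-TARGETDURATION:10
#EXT-X-MAP:URI="init.mp4"
#EXTINF:10.0,
seg0.m4s
#EXTINF:4.5,
seg1.m4s
#EXT-X-ENDLIST
"""

if __name__ == "__main__":
    for seg in parse_media_playlist(SAMPLE):
        print(f"{seg.uri}: {seg.duration:.1f} s")
```

Nothing in the playlist itself forces transcoding: whatever codec the segments use (H265 or H264) is what the browser on the receiving machine has to decode.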

The idea is that you get a timeline populated with stills from the video recording as well as these event thumbnails, and you click on either to see more detail.

In the web UI served by Frigate, the JPEG thumbnails show up when I click on them, but I can’t play any of the event clips in the browser.

I am running several up-to-date browsers, and I have tested plenty of H265 videos and HLS streams from/inside test web pages. They all play back easily, so I don’t see why I cannot play back these event clips.
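One way to confirm which codec an event clip actually uses is to ask ffprobe on the main PC, since browser support for H265 (HEVC) depends on the browser, OS and GPU driver. This sketch assumes ffprobe is on the PATH; the source can be a local file or a URL, but the actual URL Frigate serves event clips from is not shown here.

```python
from __future__ import annotations

import subprocess


def probe_cmd(source: str) -> list[str]:
    """ffprobe arguments that print only the first video stream's codec name."""
    return [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",              # first video stream only
        "-show_entries", "stream=codec_name",  # e.g. "hevc" or "h264"
        "-of", "default=noprint_wrappers=1:nokey=1",
        source,                                # local file path or URL
    ]


def is_h265(codec_name: str) -> bool:
    """ffprobe names H.265 'hevc'; accept 'h265' too for convenience."""
    return codec_name.strip().lower() in {"hevc", "h265"}


def video_codec(source: str) -> str:
    """Run ffprobe on the main PC and return the reported codec name."""
    result = subprocess.run(
        probe_cmd(source), capture_output=True, text=True, check=True
    )
    return result.stdout.strip()
```

For example, `is_h265(video_codec("front_door_event.mp4"))` (hypothetical file name) tells you whether the clip is H265 before blaming the browser or the HLS delivery.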

(1) When Frigate serves its NVR video to me, the streams are delivered via HLS. The rendering I want to do (decoding, or transcoding from H265 to H264) can be done on my main machine using the Radeon RX580, can’t it?

(2) I don’t have to do any of the H265->H264 transcoding “heavy lifting” inside my TrueNAS box at all, do I?

There are many settings in the config, and I am struggling to get past this hurdle while making slow progress elsewhere. If you can help answer my two questions, I will be very pleased.

Thanks!

EB