Hello community. I joined the TrueNAS community about a year ago. My server configuration is:

TrueNAS SCALE: Dragonfish-24.04.2

Processor: Xeon E5-2690 v4

Memory: 128 GB ECC

Motherboard: X99-QD4

1 Pool Mirror: 2x 1 TB (NVMe KingSpec),

1 Pool Mirror: 2x 3 TB (WD Red),

1 Pool Stripe: 2x 7 TB (IronWolf),

1x 6 TB (USB Seagate EDD),

1x 3 TB (WD Red),

1x 1 TB (Samsung),

1x 1 TB (USB WD)

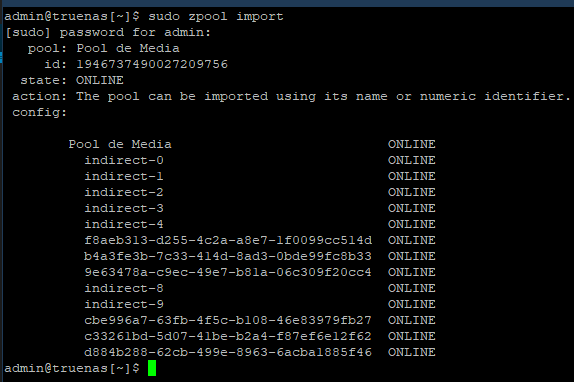

I am aware that a stripe is not a recommended configuration, but I do not store anything critical in the stripe pool, only movies and series that I can download again. Unfortunately, I added a 1 TB disk to it, and I believe that is what caused this problem. After I restarted the server, the stripe pool appears as if some of its disks had been exported. I tried some of the procedures suggested in the forum, and I only receive this message.

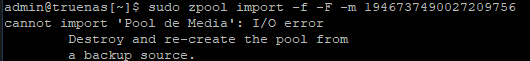

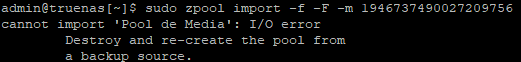

So I then exported the pool through the TrueNAS UI and ran the same procedures again, and I get the same error message.

I ran smartctl on all disks in the pool; only the 1 TB disk showed a pending bad sector.
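
In case it helps anyone reproduce the check, this is roughly how the pending-sector count can be pulled out of the smartctl output. It is only a sketch: the helper names `pending_sectors` and `check_devices` are made up for illustration, the device names in the usage note are placeholders rather than my actual disks, and it assumes the ATA attribute table layout printed by `smartctl -A` (NVMe drives report health differently and will print nothing here).

```shell
#!/bin/sh
# Read `smartctl -A` output on stdin and print the raw value (10th column)
# of the Current_Pending_Sector attribute. Prints nothing if it is absent.
pending_sectors() {
    awk '$2 == "Current_Pending_Sector" { print $10 }'
}

# Hypothetical helper: run the check for each device passed as an argument.
# Needs root privileges and smartmontools installed.
check_devices() {
    for dev in "$@"; do
        printf '%s: %s\n' "$dev" "$(smartctl -A "$dev" | pending_sectors)"
    done
}
```

After sourcing the file, something like `check_devices /dev/sda /dev/sdb` (placeholder device names) prints one line per disk; any value other than 0 means the drive has sectors waiting to be reallocated.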

Can anyone tell me if I have any chance of recovery? I can live with losing the data; I would just prefer not to download everything again.

Sample of the commands executed:

zpool import

zpool import -f -F -m

zpool import -fFX

It takes a long time to execute. I left it running for 3 days and it did not finish.

zpool status -v

I left it running for about 6 hours and it did not finish.

zpool import -f -F -n

It returns no output.

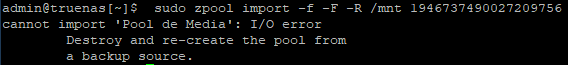

zpool import -f -F -R /mnt

I also tried to perform the import through the TrueNAS UI, and the result is: