Background:

After finishing the setup of NAS-initiated rsync “pull scripts” from my main Linux server to my Primary Supermicro NAS,

I am now trying a manual “Data Protection” → Replication task.

I’m “PUSH’ing” my Backup/Linux-server dataset to my Secondary (Backup) EPYC NAS (no HT enabled), with a gzip-9 compression override on the destination dataset.

I started out with the TN (TrueNAS) documentation page, but found it a bit “cryptic”.

Then I tried to follow a “Lawrence PUSH backup” YouTube video, but failed miserably…

The example video(s) use root as the SSH and replication user, and that fails on a clean, non-upgraded Dragonfish system. My guess is that they were using systems upgraded from CORE.

I ended up following this iX YouTube video:

With the following changes:

Don't use https:// for the remote NAS URL; it gives “self-signed” certificate issues. Use http:// instead.

And remember to tick “use sudo for ZFS commands” in BOTH SSH connection windows and in the replication task.

Replication PUSH from Primary to Secondary is now working.

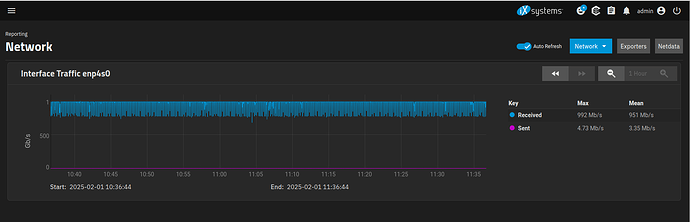

I am now running a manual replication task with SSH (encrypted) as the transport method.

I only have 1 Gb interfaces on my Secondary EPYC NAS, and I’m getting (IMHO) a decent transfer speed.

I tried an SSH+NETCAT transfer but got an “enable tty for password” error.

At that point I just dropped netcat and used plain SSH.

Question:

Given the speed I’m getting (~900 Mb/s+) and CPU utilization of around 12% on the Primary and 26% on the Secondary:

Would it be worth spending time on SSH+NETCAT?
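One way to frame the netcat question is to compare the observed rate with what 1 Gb Ethernet can carry at all. This is a rough sketch that assumes a standard 1500-byte MTU, IPv4, TCP timestamps enabled and no VLAN tag; none of these are stated above, so adjust them to the actual setup:

```python
# Rough ceiling for TCP payload throughput on 1 Gb Ethernet.
# Assumptions (not from the post): MTU 1500, IPv4, TCP timestamps on, no VLAN tag.
LINK_MBPS = 1000
MTU = 1500
IP_HDR = 20
TCP_HDR = 20 + 12               # base TCP header + timestamp option
ETH_OVERHEAD = 14 + 4 + 8 + 12  # Ethernet header + FCS + preamble/SFD + interframe gap


def tcp_goodput_ceiling(link_mbps=LINK_MBPS, mtu=MTU):
    """Best-case TCP payload rate in Mb/s for full-size frames."""
    payload = mtu - IP_HDR - TCP_HDR   # application bytes per frame
    on_wire = mtu + ETH_OVERHEAD       # bytes of line time per frame
    return link_mbps * payload / on_wire


ceiling = tcp_goodput_ceiling()
observed = 900  # Mb/s, as reported above
print(f"ceiling ~{ceiling:.0f} Mb/s, observed {observed} Mb/s = {observed / ceiling:.1%} of ceiling")
```

Under these assumptions the ceiling is roughly 941 Mb/s, so ~900 Mb/s leaves only a few percent of headroom on this link for any transport, netcat included.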

Well, besides the “I won’t let the system win” feeling that might make me do it anyway…

At some point the Backup NAS will be moved to the summerhouse.

The Home ↔ Summerhouse connection is a pfSense OpenVPN LAN-to-LAN (L2L) connection, and I have around 250 Mb/s of bandwidth…
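To get a feel for what 250 Mb/s means for the initial full replication, here is a back-of-the-envelope estimate. The 2 TB dataset size is a hypothetical placeholder, and the efficiency factor is an unmeasured knob for VPN/SSH overhead or throttling:

```python
def transfer_hours(size_bytes: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Hours to move size_bytes over a link of link_mbps megabits/s,
    scaled by an efficiency factor (0-1) for protocol overhead or throttling."""
    bits = size_bytes * 8
    return bits / (link_mbps * 1_000_000 * efficiency) / 3600


DATASET_BYTES = 2e12  # hypothetical 2 TB dataset, not a figure from the post

for label, mbps in [("LAN, 1 GbE", 900), ("pfSense OpenVPN L2L", 250)]:
    print(f"{label}: {transfer_hours(DATASET_BYTES, mbps):.1f} h")
```

This prints about 4.9 h for the LAN case and 17.8 h over the VPN. Only the first full send should need to move the whole dataset; later incremental replications send only the blocks changed since the previous snapshot.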

I have not yet tested whether the ISP throttles “hours of sustained” transfer…
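A minimal sketch for spotting sustained throttling during a long test run: sample an interface's cumulative byte counter at fixed intervals (on Linux, for example, the counters in /proc/net/dev), convert each interval to Mb/s, and look for a run of intervals below a floor. The sample data, the 200 Mb/s floor and the three-interval run length are all hypothetical:

```python
def interval_mbps(samples):
    """samples: list of (seconds, cumulative_bytes) pairs from an interface counter.
    Returns the average Mb/s for each interval between consecutive samples."""
    return [
        (b1 - b0) * 8 / (t1 - t0) / 1e6
        for (t0, b0), (t1, b1) in zip(samples, samples[1:])
    ]


def first_sustained_drop(rates, floor_mbps, min_intervals):
    """Index of the first interval that starts a run of at least min_intervals
    intervals below floor_mbps, or None if no such run exists."""
    run = 0
    for i, rate in enumerate(rates):
        run = run + 1 if rate < floor_mbps else 0
        if run >= min_intervals:
            return i - min_intervals + 1
    return None


# Hypothetical test: 5 minutes at 250 Mb/s, then 5 minutes at 100 Mb/s, sampled every 60 s.
samples, t, total = [(0, 0)], 0, 0
for mbps in [250] * 5 + [100] * 5:
    t += 60
    total += mbps * 1e6 / 8 * 60
    samples.append((t, total))

rates = interval_mbps(samples)
print(first_sustained_drop(rates, floor_mbps=200, min_intervals=3))
```

With this made-up data the drop is flagged at interval 5, the first minute at 100 Mb/s. Requiring a run of several low intervals, rather than a single one, keeps short dips from being reported as throttling.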