OK, so I edited the jailmaker docker config script and placed it in the docker dataset location.

I edited the script to use a bridge instead of macvlan.

I then followed the steps in this video guide to set up the bridge, but I used br1 instead of his br0, because the script uses br1.
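If you want to double-check which networking mode the edited config actually ended up with, here's a minimal sketch. It assumes (check this against your own file) that the jailmaker docker config passes systemd-nspawn's `--network-macvlan=<iface>` or `--network-bridge=<iface>` option on a non-comment line, and that comments start with `#`. `net_mode` is a hypothetical helper, not part of jailmaker.

```shell
#!/bin/sh
# net_mode: hypothetical helper (not part of jailmaker) that reports which
# systemd-nspawn networking option a jailmaker config file uses.
# Assumes the option appears as --network-bridge=<iface> or
# --network-macvlan=<iface> on a line that is not a comment.
net_mode() {
    config="$1"
    # Drop comment lines, then pull out the first networking option found
    opt=$(grep -v '^[[:space:]]*#' "$config" \
        | grep -oE -e '--network-(bridge|macvlan)=[^[:space:]]+' \
        | head -n 1)
    if [ -z "$opt" ]; then
        echo "none"
        return 1
    fi
    opt=${opt#--network-}          # e.g. "bridge=br1"
    echo "${opt%%=*}:${opt#*=}"    # e.g. "bridge:br1"
}
```

Running `net_mode /mnt/xxxxxx/jailmaker/docker/config` should print `bridge:br1` if the edit took, and `macvlan:<iface>` if the old setting is still active.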

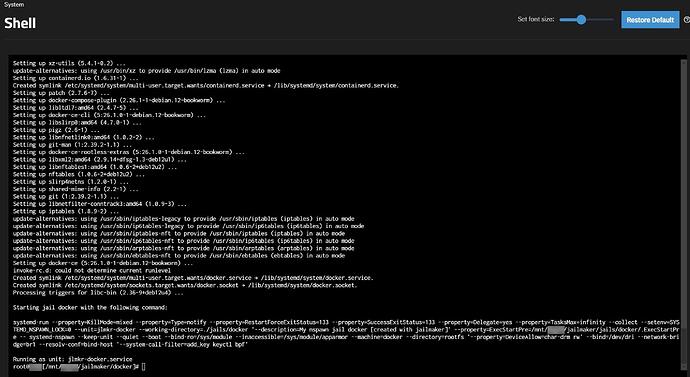

After I had done that, I went to the TrueNAS shell to run the command:

`jlmkr create --start --config /mnt/xxxxxx/jailmaker/docker/config docker`

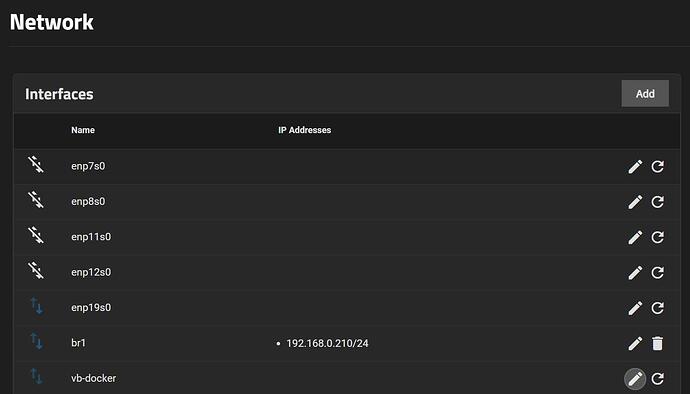

Then I went to the Network page to check on it and noticed a vb-docker interface. What the heck is that? I checked the script and it makes no mention of it.
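For what it's worth, systemd-nspawn (which jailmaker uses under the hood) names the host side of a jail's virtual Ethernet (veth) link `vb-<machine name>` when `--network-bridge=` is in use. So `vb-docker` is most likely the host end of the link for the jail named `docker`, created at start-up rather than written in the config. A minimal sketch to confirm which bridge it's plugged into, relying on the `/sys/class/net/<iface>/master` symlink that Linux creates for enslaved interfaces; `bridge_of` and the `SYSFS_NET` override are hypothetical names for illustration.

```shell
#!/bin/sh
# SYSFS_NET is a hypothetical override knob; defaults to the real sysfs path.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# bridge_of: hypothetical helper that prints the master device of an
# interface (for a bridge port, that's the bridge it belongs to).
# Interfaces without a "master" symlink aren't attached to anything.
bridge_of() {
    iface="$1"
    if [ -L "$SYSFS_NET/$iface/master" ]; then
        basename "$(readlink "$SYSFS_NET/$iface/master")"
    else
        echo "none"
        return 1
    fi
}
```

With the jail running, `bridge_of vb-docker` should print `br1` if the bridge setup is doing what it should.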

Anyway, br1 is showing as working. Basically, it's an active bridge set up on the physical NIC, which is the SFP+ fiber connection between the TrueNAS box and my switch.

Supposedly this means the bridge is working for jailmaker and docker at this point?
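One way to sanity-check that: in a working setup, br1 should list (at least) two ports, the physical SFP+ NIC (whatever it's named on your box) and `vb-docker`. The sketch below reads `/sys/class/net/<bridge>/brif/`, where the Linux bridge driver keeps one entry per attached port; `bridge_ports` and `SYSFS_NET` are hypothetical names. `ip link show master br1` from iproute2 gives the same view. Note this only shows the link layer is wired up; it doesn't prove the jail got an IP address or that containers can reach the network.

```shell
#!/bin/sh
# SYSFS_NET is a hypothetical override knob; defaults to the real sysfs path.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# bridge_ports: hypothetical helper that lists the ports of a Linux bridge,
# one per line, from the bridge's brif/ directory in sysfs.
bridge_ports() {
    br="$1"
    if [ ! -d "$SYSFS_NET/$br/brif" ]; then
        echo "$br is not a bridge (no brif directory)" >&2
        return 1
    fi
    ls -1 "$SYSFS_NET/$br/brif"
}
```

Running `bridge_ports br1` should show both the SFP+ interface and `vb-docker`; if `vb-docker` is missing, the jail isn't attached to the bridge.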

And no, I did not have to connect the TrueNAS box to a monitor via HDMI and attach a keyboard, since I ran everything from the shell in the TrueNAS web UI.

Note: I did notice that when I was following the YouTube step-by-step to switch over to the bridge, it kept resetting back to the default. No idea why. The troubleshooting section said that can happen if you have a VM, but I didn't. I kept repeating the steps, but faster, and managed to save the setting permanently, after which it stopped trying to roll back. Just thought I would mention this quirk.

Overall, the jailmaker setup for this was less risky than I thought it would be, as long as you are super careful. Even so, I had already saved my TrueNAS settings before I attempted it, just in case.