Good morning! First-time (future) TrueNAS user here. Sorry for the long post, but as we learned from Reacher, “details matter”.

I am planning on repurposing my current work-from-home workstation into a TrueNAS box, since I’ve just ordered its replacement parts. I’m well aware that this is going to be a complete and total waste of resources, but since I already own the parts and will be preventing e-waste, it’s both cheaper and a better option than buying new, lower-spec components.

I will also be using this to take over some of the roles that a couple of my SBCs (Rock 5B and RPi 5) are running now via Docker, though those won’t consume much in the way of resources. It will also replace my current NAS, a Netgear ReadyNAS Pro 4 that has reached the “I’m not dead yet! - You will be in a moment” stage. I rescued it from a client’s site a decade ago when it was replaced for going EoL/EoS.

The components will be as follows:

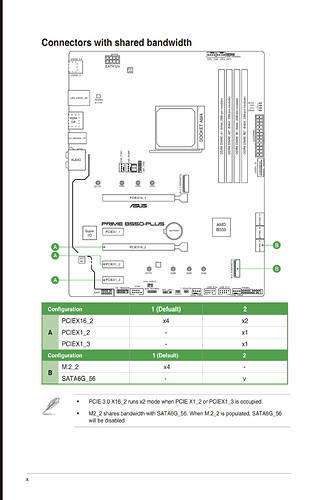

- Motherboard - Asus Prime B550-Plus - 2x M.2 NVMe, 6x SATA

- RAM - 32GB DDR4 Unbuffered 3600 MHz

- CPU - Ryzen 9 5900X

- NIC - Dual-port Intel X540-T2 10 Gbps RJ45

- Storage Pool - 4x Seagate Exos X14 12 TB drives (7200 RPM Spinning)

- Boot - 2x Samsung Evo 250 GB 2.5" SATA SSD

- SLOG - 2x Samsung M.2 NVMe 250GB

A note about the hardware: I work at a mid-sized MSP, and we have hundreds of NVMe and 2.5" SSDs in our reclamation bone yard that were removed from OEM systems and replaced with larger SSDs for client deployments. For all intents and purposes, they’re both free and nearly unused. I get that the capacity is WAY overkill for their purposes, but free is free.

My thought process, which is the part I’m hoping to be challenged and educated on, is as follows. Since I have all of the hardware except for the Exos drives, I thought I’d use 2 of the MB’s 6x SATA ports for a striped OS pool on the 2.5" SSDs, use the 2x M.2 NVMe drives as a SLOG to reduce latency on the spinning pool, then use the last 4x SATA ports for the Exos drives.

This will obviously prevent future expansion, but I’m going from a 4x 4TB RAID 5 NAS to a 4x 12TB NAS, and my current NAS is only 60% full, so I think I’ll have a long time to consider future expansion. I do plan to re-rip my BD collection from H.265 (HEVC) to lossless since I’ll have the capacity, but I should still have lots of breathing room to rethink my life choices.

Where I’m still on the fence is whether to use all of the on-board M.2/SATA connections or to offload the SATA drives to an inexpensive LSI HBA like the SAS3008 for ~$100 USD. Will using all of the on-board I/O ports end up saturating the pipes on the MB and become a performance issue that spending $100 more would prevent? If so, is this likely to be an issue outside of the initial data transfer, in day-to-day multimedia/backup use? Realistically, the hardest I see this getting hit is when my desktop is running a backup while 2 devices are streaming via Jellyfin and 2 devices may be syncing to Immich, which is a relatively minor load.
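To put rough numbers on the "saturating the pipes" question, here is a back-of-the-envelope sketch. The per-drive sequential rate (250 MB/s) is a placeholder I have not checked against the Exos datasheet, and the chipset uplink figure assumes a PCIe 3.0 x4 link between the B550 chipset and the CPU. It also ignores whatever else shares that link on this particular board, so treat it as an upper-bound sanity check rather than a measurement.

```python
# Rough throughput ceilings in MB/s (decimal). Values marked "assumed"
# are placeholders, not datasheet or benchmark figures.
HDD_SEQ_MBPS = 250        # assumed best-case sequential rate per spinning drive
N_HDDS = 4

SATA3_PORT_MBPS = 600     # SATA III: 6 Gb/s line rate with 8b/10b encoding
TEN_GBE_MBPS = 10_000 / 8 # 10 Gb/s line rate, before protocol overhead

# PCIe 3.0: 8 GT/s per lane with 128b/130b encoding.
PCIE3_LANE_MBPS = 8000 * 128 / 130 / 8
CHIPSET_UPLINK_MBPS = 4 * PCIE3_LANE_MBPS  # assumed x4 chipset-to-CPU link

ceilings = {
    "hdds": N_HDDS * HDD_SEQ_MBPS,      # all four drives streaming at once
    "network": TEN_GBE_MBPS,            # one 10 Gbps port
    "chipset_uplink": CHIPSET_UPLINK_MBPS,
}

# The smallest ceiling is the first thing that can limit a sequential transfer.
bottleneck = min(ceilings, key=ceilings.get)

for name, mbps in ceilings.items():
    print(f"{name:>15}: {mbps:7.0f} MB/s")
print(f"first limit under these assumptions: {bottleneck}")
print(f"single HDD fits within one SATA III port: {HDD_SEQ_MBPS < SATA3_PORT_MBPS}")
```

Under these assumptions the four spinning drives themselves are the first limit, below both a single 10 Gbps port and the assumed chipset uplink. If that holds on the real hardware, the on-board SATA ports would not be the constraint for this workload, but I would welcome corrections to the assumed figures.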

If I do end up determining that the HBA is the way to go, I’ll have to lose either the 10 Gbps NIC or the GPU. I’d probably remove the GPU first since I don’t currently have plans for HW acceleration, but I am unsure whether the system will boot/POST properly without it, since the CPU does NOT have an integrated GPU (more testing required).

The last thing I’m on the fence about is ECC. The CPU and mobo do support ECC memory, and I know the recommendation is roughly 1GB of RAM per 1TB of storage for ARC. I’ll be sitting right on the cusp of that with 32GB of RAM and ~32-34TB usable in the storage pool (assuming RAID 5 type overhead). Is ECC RAM critically important enough to spend the money to find compatible RAM and swap out to ECC DDR4? It has to be ECC Unbuffered according to Asus’s Tech Specs page (PRIME B550-PLUS - Tech Specs|Motherboards|ASUS USA)
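For reference, here is the capacity and RAM arithmetic behind those numbers as a small sketch. It only models single-parity overhead (one drive's worth of capacity lost, as with RAID 5) and the TB-to-TiB conversion. It does not model ZFS metadata, padding, or reserved space, so real usable capacity will come out somewhat lower. The function name is just for illustration.

```python
TB = 10**12   # drive vendors' decimal terabyte
TIB = 2**40   # binary tebibyte, which many tools report as "TB"

def single_parity_usable_tb(drives: int, size_tb: float) -> float:
    """Usable capacity with one drive's worth of parity (RAID 5 style)."""
    return (drives - 1) * size_tb

# Planned pool: 4x 12 TB
new_usable_tb = single_parity_usable_tb(4, 12)
new_usable_tib = new_usable_tb * TB / TIB

# Current NAS: 4x 4 TB RAID 5, about 60% full
old_usable_tb = single_parity_usable_tb(4, 4)
old_used_tb = old_usable_tb * 0.60

# The "1 GB of RAM per 1 TB of storage" rule of thumb
ram_gb = 32
ram_gb_per_tb = ram_gb / new_usable_tb

print(f"new pool: {new_usable_tb} TB = {new_usable_tib:.1f} TiB before ZFS overhead")
print(f"current data: about {old_used_tb:.1f} TB of {old_usable_tb} TB")
print(f"RAM ratio: {ram_gb_per_tb:.2f} GB per TB")
```

So the pool is 36 TB in decimal units, which is about 32.7 TiB, and 32GB of RAM works out to a little under 1 GB per TB. The existing data would fill roughly a fifth of the new pool.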