My hardware is:

- TrueNAS SCALE 25.04.2.1-2,

- Ryzen 7 5700X,

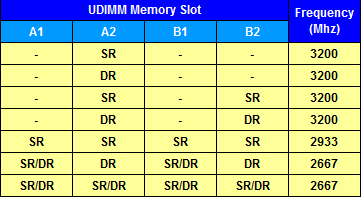

- RAM X-Star Spark Shark DDR4 16GB 3200Mhz X4,

- ASRock B450 PRO4 R2.0,

- PSU Endorfy Supremo FM5 750W 80+ Gold.

- For HDDs it’s a mix of segate stuff and ironwolf. There’s one 128GB ssd for boot and one Kingston KC3000. Both the boot ssd and the kingston nvme were added AFTER the crashes started to see if the previous hardware was the real issue. In total, 5 HDDs, 1 nvme and 1 sata ssd.

- GPUs: NVIDIA rtx 3060ti

- HBAs/Storage: LSI 9211-8i flashed to IT, also added AFTER the issues started.

- NICs: Intel IGC 2.5G (enp5s0) + Realtek r8169 (enp9s0), 2.5G is only used. It’s not getting hit hard at all.

I’ve had my system crash on and off for the past 2-3 months now. It all started happening more constantly after I upgraded to 25.04 and installed 3 LCX containers. One for my website hosting, another for game servers and one more to test stuff inside it. The crashes aren’t normal, they ramp the fans to 100% and the machine reboots after a few seconds. It’s almost if I’d hit the reset switch on the machine. It also doesn’t happen all the time, it can run weeks without any issues, and then start crashing every 2-3 hours for a few days, then it can run stable for some more time and so on. A notable thing I can notice, when running the LCX container for the game servers, I have that wired up to MinIO, whenever I’d hit MinIO with a very large job, a 200gb backup and another for 70gb, or even just one backup job, the whole machine would crash within 2-30 minutes of the tasks starting.

The logs, they don’t surface anything that would say what’s the real cause of the crash. I’ve found this in my logs: pci 0000:01:00.0: VF BAR ... can't assign; no space then later No. 2 try to assign unassigned res and assigned gpio_generic: module verification failed: signature and/or required key missing - tainting kernel. Shutdowns cause systemd-journald: ... system.journal corrupted or uncleanly shut down, renaming and replacing.. I’ve tried extending the oops period to see if that would catch any errors, and those above logs are the only thing that would surface. Temps are not an issue, they are stable, with the hottest disk being at about 48-54C.

As for what I tried to fix this:

- Got a new HBA to test if it was the SATA links on the motherboard or the controller, didn’t change anything.

- Updated BIOS to latest version, didn’t change anything.

- Slowed down SATA links to 3gb to see if it’s stability related, didn’t do anything.

- Disabled the LCX containers and over half of my containers to see if that would stop it, it didn’t.

- Removed one drive from my raidz1 pool to see if that is a power spike related issue, didn’t do anything. Still crashes.

- Limited ZFS arc to 10gb so my RAM doesn’t fill up, didn’t change anything.

I’ve done these commands too to see if anything would improve, nothing changed: - sudo sysctl -w vm.compaction_proactiveness=0

- sudo sysctl -w vm.watermark_boost_factor=0

- sudo sysctl -w vm.min_free_kbytes=524288

- echo 10737418240 | sudo tee /sys/module/zfs/parameters/zfs_arc_max

- echo never | sudo tee /sys/kernel/mm/transparent_hugepage/enabled

None of these commands did anything. I’m puzzled by what’s causing this. The very next logical step would be to check RAM with memtest, though I had never experienced RAM failing in this way if it really is RAM. I’d need some advice on how to proceed with this and what to test. I found some posts from some time ago that had crashes coming from RAM, but the weirdest thing is, the system can be stable for weeks, then randomly one day it will crash and from there it will crash every 1-2 hours, slowly extending that window. Currently the crash happens every 8-16 hours.