Hi,

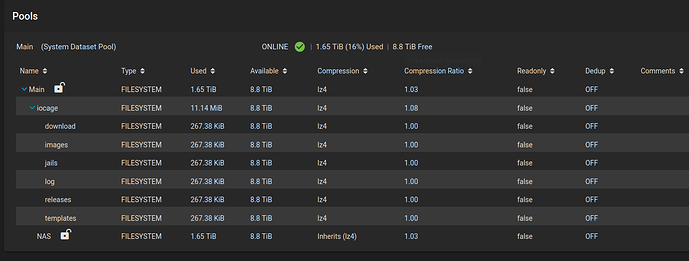

TrueNAS Core: TrueNAS-13.0-U6.1

Recently I have been getting the following error when backing up. It tends to happen at the same time early in the morning.

cannot destroy ‘backupcloud/backup’: dataset is busy.

[2024/05/02 06:28:42] INFO [Thread-11] [zettarepl.paramiko.replication_task__task_1] Connected (version 2.0, client OpenSSH_8.2p1)

[2024/05/02 06:28:42] INFO [Thread-11] [zettarepl.paramiko.replication_task__task_1] Authentication (publickey) successful!

[2024/05/02 06:28:43] INFO [replication_task__task_1] [zettarepl.replication.pre_retention] Pre-retention destroying snapshots:

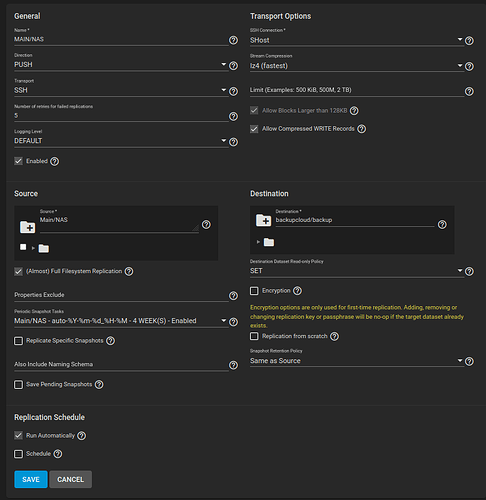

[2024/05/02 06:28:43] INFO [replication_task__task_1] [zettarepl.replication.run] For replication task ‘task_1’: doing push from ‘Main/NAS’ to ‘backupcloud/backup’ of snapshot=‘auto-2024-05-02_06-27’ incremental_base=None receive_resume_token=None encryption=False

[2024/05/02 10:03:40] ERROR [replication_task__task_1.dataset_size_observer] [zettarepl.replication.dataset_size_observer] Unhandled exception in dataset size observer

Traceback (most recent call last):

File “/usr/local/lib/python3.9/site-packages/zettarepl/replication/dataset_size_observer.py”, line 41, in _run

self._run_once()

File “/usr/local/lib/python3.9/site-packages/zettarepl/replication/dataset_size_observer.py”, line 51, in _run_once

dst_used = get_property(self.dst_shell, self.dst_dataset, “used”, int)

… 136 more lines …

lambda: run_replication_task_part(replication_task, source_dataset, src_context, dst_context,

File “/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py”, line 274, in run_replication_task_part

resumed = resume_replications(step_templates, observer)

File “/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py”, line 481, in resume_replications

step_template.dst_context.shell.exec([“zfs”, “recv”, “-A”, dst_dataset])

File “/usr/local/lib/python3.9/site-packages/zettarepl/transport/interface.py”, line 92, in exec

return self.exec_async(args, encoding, stdout).wait(timeout)

File “/usr/local/lib/python3.9/site-packages/zettarepl/transport/base_ssh.py”, line 63, in wait

raise ExecException(exitcode, stdout)

zettarepl.transport.interface.ExecException: cannot destroy ‘backupcloud/backup’: dataset is busy

The destination is an Ubuntu server with ‘zfsutils-linux’ installed, and it had been working fine at my old house. It was set up using these notes:

I recently had Starlink installed and have just linked this issue to its early-morning reboots, which I guess are Starlink updates. The time frames match, and the replication does not seem to be able to resume after the connection drops. While I wait for fiber to arrive, is there anything I can adjust on the task to resolve this error? I have also tried replicating from scratch, to no avail.
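
The step that fails in the traceback is `zfs recv -A` on the destination, which discards a partially received stream. Below is a minimal sketch of how that state could be inspected by hand on the Ubuntu side. It assumes the dataset name from the log above. The `run` helper and the `DRY_RUN` variable are made up for illustration and are not part of zettarepl. The idea that a leftover `zfs recv` process is what keeps the dataset busy is only a guess, not something the log confirms.

```shell
#!/bin/sh
# Run on the destination (Ubuntu) host. Dataset name taken from the log above.
DST="backupcloud/backup"

# DRY_RUN=1 (the default here) only prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# 1. Look for a receive process left over from the dropped connection
#    (assumption: this is one possible cause of "dataset is busy").
run pgrep -af "zfs rec"

# 2. Show the saved resume token; the value is "-" when there is no partial receive.
run zfs get -H -o value receive_resume_token "$DST"

# 3. The command from the traceback: abort the partial receive and discard its state.
run zfs recv -A "$DST"
```

With `DRY_RUN=0` the commands are actually executed, so step 3 throws away whatever was received so far and should only be run once no receive process is still active.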