When I create a replication task with a source dataset optimized for SMB (Case Sensitivity and Atime off), the resulting destination dataset always ends up with Case Sensitivity and Atime turned on.

My goal is to backup datasets to another pool and, should the initial pool fail, move the dataset back when the initial pool is restored. Unfortunately the replicated datasets have different properties, so restoring a dataset would require a replication task to a dummy dataset and a mv operation to an existing dataset with the correct case sensitivity.

Am I doing something wrong? Is this a bug? It’s entirely possible that I have a fundamental misunderstanding of replication tasks.

Hi and welcome to the forums.

When configuring replication are you creating a new dataset on the receive side?

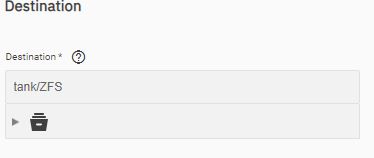

For example if you wanted to send the dataset ‘Stuff’ you’d send it to pool/Stuff. The /Stuff needs to be entered manually in the replication setup to create the dataset on the receive side and honour all the attributes of the sending dataset.
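The naming rule above (append the source dataset's own name to the receive-side parent so a new dataset is created) can be sketched in a few lines. This is only an illustration: `destination_for` is a hypothetical helper, not part of TrueNAS, and the pool names come from the examples in this thread.

```python
def destination_for(source: str, target_parent: str) -> str:
    """Hypothetical helper: build the receive-side path by appending the
    source dataset's last name component to the target parent."""
    leaf = source.rstrip("/").rsplit("/", 1)[-1]
    return f"{target_parent.rstrip('/')}/{leaf}"


print(destination_for("pool/Stuff", "tank/ZFS"))  # tank/ZFS/Stuff
```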

Hard to explain but hope that makes sense.

PS: What options are you selecting in the replication config? There are options for whether or not dataset properties are preserved.

Thanks for the quick reply.

I had been setting the Destination to a location which didn’t exist (this created the dataset with properties which differed from Source).

I just tried creating the Destination dataset manually and it does allow me to specify whatever properties I want (they aren’t overridden when a dataset is replicated there).
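One way to check whether source and destination really match is to compare the output of `zfs get -H -o property,value casesensitivity,atime <dataset>` on both sides. The sketch below assumes that tab-separated scripted output; the helper names are hypothetical, and the sample strings are illustrative, not captured from a real system.

```python
import subprocess

# Properties this thread cares about for SMB shares.
WATCHED = ("casesensitivity", "atime")


def parse_zfs_get(output: str) -> dict[str, str]:
    """Parse `zfs get -H -o property,value` output (one tab-separated pair per line)."""
    props = {}
    for line in output.strip().splitlines():
        prop, value = line.split("\t", 1)
        props[prop] = value
    return props


def zfs_get(dataset: str) -> dict[str, str]:
    """Run `zfs get` in scripted mode on a real system (requires the zfs CLI)."""
    out = subprocess.run(
        ["zfs", "get", "-H", "-o", "property,value", ",".join(WATCHED), dataset],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_zfs_get(out)


def mismatches(src: dict[str, str], dst: dict[str, str]) -> dict[str, tuple]:
    """Return {property: (source_value, destination_value)} for differing properties."""
    return {p: (src.get(p), dst.get(p)) for p in WATCHED if src.get(p) != dst.get(p)}


# Illustrative output only, mirroring the symptom described in the first post.
src = parse_zfs_get("casesensitivity\tinsensitive\natime\toff\n")
dst = parse_zfs_get("casesensitivity\tsensitive\natime\ton\n")
print(mismatches(src, dst))
```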

My issue now is figuring out how to do this when the Source dataset is encrypted. The replication task keeps failing, even when I create the destination dataset with encryption turned on, and give it the same key that unlocks the source.

As for what options I was selecting, I was just sticking to the simplified wizard. I suspect that Full Filesystem Replication would get me what I want, but I'm concerned (perhaps incorrectly) that there would be some performance penalty, such as having to transfer the entire dataset rather than just the changes since the last replication task.

In the replication setup, have you tried manually entering a dataset that does not already exist on the backup system (in my example, tank/ZFS/Stuff) and letting it do the rest?

After a lot more testing, this appears to be an issue with what’s being displayed in Dataset Details for locked datasets.

All of my initial testing had been done with encrypted datasets, and I never bothered unlocking them. Unlocking the destination dataset changes what’s displayed in Dataset Details to be what was expected.

This is what I was doing initially, and it allowed me to transfer encrypted datasets without issue.

Per my other reply, this whole issue seems to be related to Dataset Details displaying incorrect properties for locked datasets. Unlocking the destination dataset reveals the correct properties.
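Based on what was observed here, a script that compares properties might first check the native ZFS `keystatus` property and skip the comparison while the dataset is locked. Treating a locked dataset's properties as unreliable is an assumption drawn from this thread, not documented ZFS behaviour; the helper names below are hypothetical.

```python
import subprocess

# "keystatus" is a native ZFS property on encrypted datasets.
PROPS = ("keystatus", "casesensitivity", "atime")


def read_props(dataset: str) -> dict[str, str]:
    """Run `zfs get` in scripted mode and return {property: value} (requires the zfs CLI)."""
    out = subprocess.run(
        ["zfs", "get", "-H", "-o", "property,value", ",".join(PROPS), dataset],
        capture_output=True, text=True, check=True,
    ).stdout
    return dict(line.split("\t", 1) for line in out.splitlines() if line)


def properties_reliable(props: dict[str, str]) -> bool:
    """Assumption from this thread: don't trust displayed properties of a locked dataset."""
    # keystatus is "unavailable" when the key is not loaded (locked),
    # "available" when unlocked, and "-" for unencrypted datasets.
    return props.get("keystatus") != "unavailable"
```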

Thanks again for your help; I’d spent hours googling this, and you got me on the right path in a matter of minutes.
