Following up on this here…

My main server is on the current Dragonfish stable, while the cold backup is still on Cobia 23.10.1.

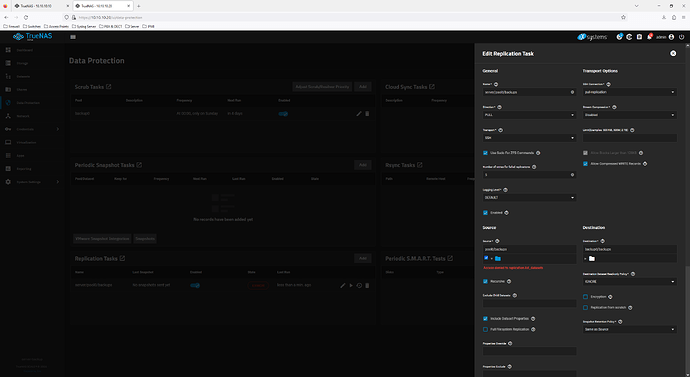

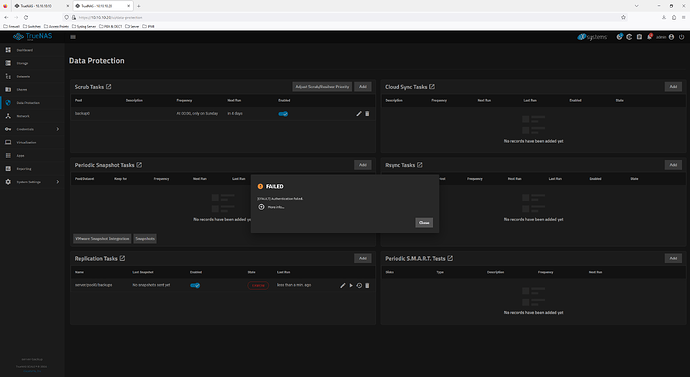

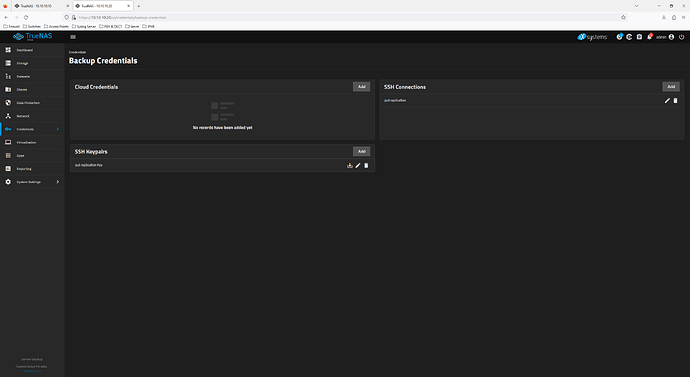

Both running an existing pull replication (backup pulling from main) and setting up a new one fail because of the self-signed certificate.

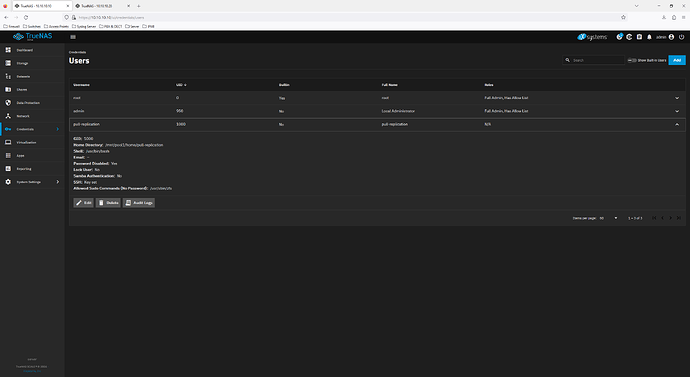

Both systems were migrated from CORE to Bluefin a while ago and have been upgraded since then. So they started out with root, and the admin user was added later, following the documentation hub. At some point replications suddenly started failing, and I had to roll the backup server back to 23.10.1 so that the main server could stay on newer releases and at least push replication from main to backup would work.

However, I’d like to have replication working both ways without keeping both systems on 23.10.1 or earlier… Is there an option to make the system accept a self-signed certificate? It used to just do it…

Thanks!

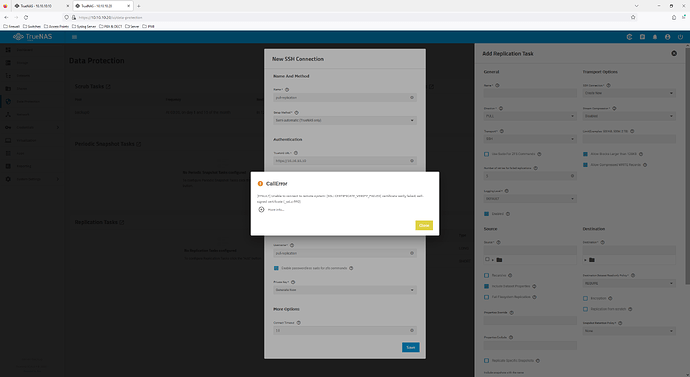

Error details:

CallError

[EFAULT] Unable to connect to remote system: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self-signed certificate (_ssl.c:992)
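For context on that message: the bottom frames of the traceback are in Python's standard `ssl` module, whose default client context requires a certificate that chains to a trusted CA and also checks the hostname. The sketch below only illustrates that standard-library behaviour. It is not a TrueNAS setting, and `context_trusting` and its `pem_path` argument are hypothetical names used for illustration.

```python
import ssl


def describe_default_tls_policy() -> dict:
    """Report how a default client-side SSL context treats server certificates."""
    ctx = ssl.create_default_context()
    return {
        "verify_mode": ctx.verify_mode.name,   # CERT_REQUIRED by default
        "check_hostname": ctx.check_hostname,  # True by default
    }


def context_trusting(pem_path: str) -> ssl.SSLContext:
    """Build a context that additionally trusts the certificate(s) in pem_path.

    Hypothetical helper: pem_path would point at a PEM export of the remote
    system's self-signed certificate. Hostname checking still applies.
    """
    ctx = ssl.create_default_context()
    ctx.load_verify_locations(cafile=pem_path)
    return ctx


if __name__ == "__main__":
    print(describe_default_tls_policy())
```

With these defaults, a self-signed certificate that is not in the trust store is rejected during the handshake, which matches the `CERTIFICATE_VERIFY_FAILED` error shown here.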

Error: Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/plugins/keychain.py", line 602, in remote_ssh_semiautomatic_setup

client = Client(os.path.join(re.sub("^http", "ws", data["url"]), "websocket"))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/client/client.py", line 289, in __init__

self._ws.connect()

File "/usr/lib/python3/dist-packages/middlewared/client/client.py", line 72, in connect

self.socket = connect(self.url, sockopt, proxy_info(), None)[0]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/websocket/_http.py", line 136, in connect

sock = _ssl_socket(sock, options.sslopt, hostname)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/websocket/_http.py", line 271, in _ssl_socket

sock = _wrap_sni_socket(sock, sslopt, hostname, check_hostname)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/websocket/_http.py", line 247, in _wrap_sni_socket

return context.wrap_socket(

^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/ssl.py", line 517, in wrap_socket

return self.sslsocket_class._create(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/ssl.py", line 1075, in _create

self.do_handshake()

File "/usr/lib/python3.11/ssl.py", line 1346, in do_handshake

self._sslobj.do_handshake()

ssl.SSLCertVerificationError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self-signed certificate (_ssl.c:992)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 201, in call_method

result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1342, in _call

return await methodobj(*prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 177, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 44, in nf

res = await f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/keychain_/ssh_connections.py", line 97, in setup_ssh_connection

resp = await self.middleware.call(

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1399, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1353, in _call

return await self.run_in_executor(prepared_call.executor, methodobj, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1251, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/concurrent/futures/thread.py", line 58, in run

result = self.fn(*self.args, **self.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 181, in nf

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 50, in nf

res = f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/keychain.py", line 604, in remote_ssh_semiautomatic_setup

raise CallError(f"Unable to connect to remote system: {e}")

middlewared.service_exception.CallError: [EFAULT] Unable to connect to remote system: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self-signed certificate (_ssl.c:992)
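To make the first frame of the traceback easier to read: `remote_ssh_semiautomatic_setup` turns the remote URL into a WebSocket URL before connecting, so an `https://` URL becomes `wss://` and goes through a TLS handshake. Below is a minimal sketch of just that transformation, copied from the expression in the traceback. The function name `to_websocket_url` and the host `backup.example` are made up for illustration.

```python
import os
import re


def to_websocket_url(url: str) -> str:
    """Mirror the expression shown in keychain.py line 602 of the traceback.

    "http" at the start becomes "ws", so "https" becomes "wss", and the
    "websocket" path segment is appended. os.path.join yields "/" on
    POSIX systems, which is what this sketch assumes.
    """
    return os.path.join(re.sub("^http", "ws", url), "websocket")


if __name__ == "__main__":
    # Hypothetical hostname, for illustration only.
    print(to_websocket_url("https://backup.example"))
    print(to_websocket_url("http://backup.example"))
```

Only the `wss://` form involves certificate verification, which is presumably why the self-signed certificate matters in this semi-automatic setup step.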