Hey all,

I’m currently in the process of setting up my TrueNAS system and testing every nook and cranny while waiting on drives, but I can’t seem to figure out this weird issue:

If a folder is copied between datasets server-side via SMB, the transfer is ridiculously slow (6-11 times slower!) compared to an initial SMB copy from a client, or to a Midnight Commander or CLI “cp” copy, whether cached or uncached. If the initial SMB copy from the client has been cached, copying again via SMB also writes extremely slowly.

I’ve got a bunch of disk usage graphs from transferring the exact same 7GB folder below:

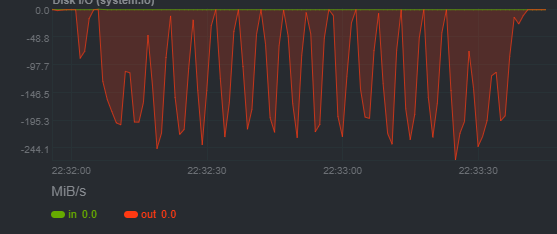

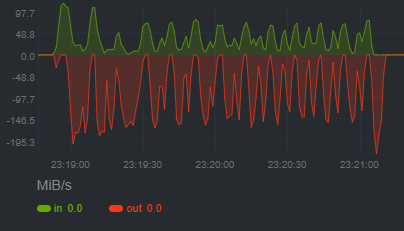

SMB copy from client to server (first time, so the folder is uncached), 1min 40

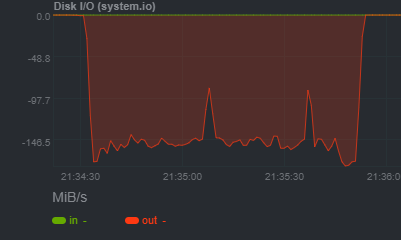

SMB copy from client to server (second time, so the folder is cached. Confirmed by no network activity), 15mins

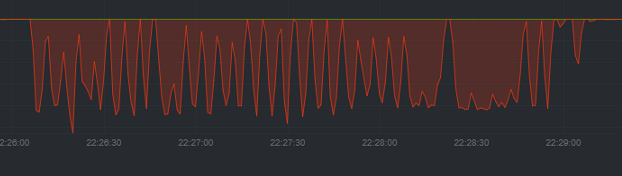

Midnight Commander server-side copy uncached, 2mins 40

Midnight Commander server-side copy cached, 1min 20

–

The timings below are from copying a different, 15.2GB collection of folders:

SMB copy from client to server (first time, so the folder is uncached), 3mins 5

SMB copy from client to server (second time, so the folder is cached, confirmed by no network activity), ran for 8 mins before I cancelled it

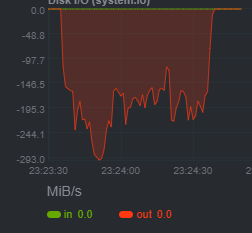

SMB server-side copy uncached, 26mins

SMB server-side copy cached, 24 mins
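
To put the timings above on a common scale, here is a rough throughput conversion. It is only a sketch: it assumes 1GB = 1000MB, treats the folder sizes as exact, and the labels are just my shorthand for the runs listed above (the cancelled 8 min run is left out).

```python
# Rough average throughput for each run above.
# Assumption: 1 GB = 1000 MB and the folder sizes (7 GB, 15.2 GB) are exact.

def mb_per_s(size_gb: float, minutes: int, seconds: int = 0) -> float:
    """Average throughput in MB/s for copying size_gb in minutes:seconds."""
    return size_gb * 1000 / (minutes * 60 + seconds)

# (label, size in GB, minutes, seconds) taken from the timings in this post
runs = [
    ("7GB    SMB from client, uncached", 7.0, 1, 40),
    ("7GB    SMB from client, cached", 7.0, 15, 0),
    ("7GB    MC server-side, uncached", 7.0, 2, 40),
    ("7GB    MC server-side, cached", 7.0, 1, 20),
    ("15.2GB SMB from client, uncached", 15.2, 3, 5),
    ("15.2GB SMB server-side, uncached", 15.2, 26, 0),
    ("15.2GB SMB server-side, cached", 15.2, 24, 0),
]

for label, size_gb, m, s in runs:
    print(f"{label:<36} {mb_per_s(size_gb, m, s):6.1f} MB/s")
```

That works out to roughly 70MB/s for the first SMB copy of the 7GB folder versus about 8MB/s for the cached one, and about 82MB/s versus about 10MB/s for the 15.2GB set.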

NAS Specs:

- ElectricEel-24.10.1

- i7 7700 w/ iGPU

- 32GB DDR4 2400MHz non-ECC

- 160GB SSD boot pool - Motherboard SATA

- Mirrored 1TB pool (system dataset pool)

- 12TB Exos X16 (CMR) - Motherboard SATA

- 1TB WD Red WD10EFRX (CMR) - Motherboard SATA

- Corsair RM650x

The SMB client is a Windows 10 gaming PC with an AMD CPU

Misc:

- It seems it slows down mainly when hitting tiny files (5-800KB), dropping to 5-15MB/s

- Pool datasets are key encrypted so no block cloning available

- 128KB record size (Tried 1MB but same story)

- SMB is set up with NFSv4 permissions in restricted mode

- Dedupe off

- LZ4 compression

- SMART data on all drives looks great, and scrubs come back fine too

- 1 Gigabit motherboard NIC; the SMB client runs at full speed in iperf3, on first-time (uncached) SMB writes, and on any reads
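
In case anyone wants to reproduce the small-file case, here is a minimal sketch that builds a test folder of files in the 5-800KB range and times a plain local copy as a baseline. Everything in it is an assumption for illustration: the function names, the file count, and the temporary paths are hypothetical, and the files are filled with random bytes so that LZ4 compression does not skew the result. It does not go through SMB itself; the generated folder is meant to be copied afterwards by whichever method is being compared.

```python
import os
import random
import shutil
import tempfile
import time
from pathlib import Path


def make_small_files(root: Path, count: int = 50, min_kb: int = 5,
                     max_kb: int = 800, seed: int = 0) -> int:
    """Create `count` files of random size in [min_kb, max_kb] KiB under root.

    Returns the total number of bytes written.
    """
    rng = random.Random(seed)  # fixed seed so the size mix is repeatable
    root.mkdir(parents=True, exist_ok=True)
    total = 0
    for i in range(count):
        size = rng.randint(min_kb, max_kb) * 1024
        # Random bytes are effectively incompressible, so compression
        # on the dataset should not make the copy look faster than it is.
        (root / f"file_{i:05d}.bin").write_bytes(os.urandom(size))
        total += size
    return total


def timed_copy(src: Path, dst: Path) -> float:
    """Copy the whole tree from src to dst and return elapsed seconds."""
    start = time.perf_counter()
    shutil.copytree(src, dst)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical locations: a temporary directory is used here; point
    # src/dst at real dataset paths to test the actual pool.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "src"
        dst = Path(tmp) / "dst"
        total = make_small_files(src)
        elapsed = timed_copy(src, dst)
        print(f"copied {total / 1e6:.1f} MB in {elapsed:.2f} s")
```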

I am currently running badblocks on another 12TB Exos (hence the crazy pool layout), so I can replace the 1TB drive soon and see whether the odd mismatch is causing issues, but seeing as it works fine through MC and on initial SMB writes, I am not hopeful.

Any help would be greatly appreciated, and I’m more than happy to try out any suggestions! This is my first foray into Linux, so please be verbose.