Looking for how to revert ZFS cache usage back to only half of RAM, or how to force it to flush without a reboot. I'm running 64 GB of RAM, but I want a lot of it for VMs. However, I'm being told I only have 2.6 GB available to create a VM; it's all being hogged by the ZFS cache. I preferred the old way on my system, or I would like it to leave much more on the table for my usage.

Would setting zfs_arc_max to 34359738368 be what I should do, and would it still be stable?

Yes, yes, yes, I know it takes away from some cache functions. It's a little system, used as a backup system; I don't care if it means the local backups are sent 0.3% slower over a 2.5G network.

When you do create the VM the cache will release.

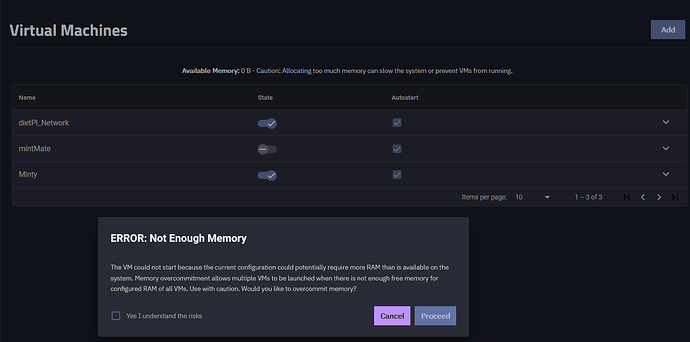

This time it gave me an option to force a VM startup. Last time it just told me to get stuffed, and I know I heard the drives cackle from across the room.

Still, I know it's wasteful, but I do like having the overhead of more available RAM like in the old days (puts teeth back in).

I believe that’s what you should do if you want to limit the ARC to 32 GiB.
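For anyone checking the number: zfs_arc_max is given in bytes, so the value is just the GiB figure multiplied out. A small sketch of the arithmetic (`gib_to_bytes` is a made-up helper name, not a ZFS or TrueNAS tool):

```shell
# Hypothetical helper: convert a size in GiB to bytes.
# 1 GiB = 1024 * 1024 * 1024 bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 32   # prints 34359738368
```

The same helper can be used to pick a different cap, for example `gib_to_bytes 16`, if 32 GiB still leaves too little for VMs.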

Edit: I think I found a better solution. Yeah, I don't know when to just stop and breathe. I'll add to or edit this post once I've tested more, but the direction I've headed for a full flush comes down to these two lines, run as root in a shell. I'll be working on testing and on a script for my uses, which I will share in this thread if it's not locked.

echo 0 > /sys/module/zfs/parameters/zfs_arc_shrinker_limit

echo 3 > /proc/sys/vm/drop_caches

I don't think it's recommended to flush this frequently; the plan is to find a way to do it every few days when everything is idle, or whenever I must have a VM spin up (I'm working on testing with ARK cluster servers). I'll look into that. For the time being, this has worked for me.

My original post:

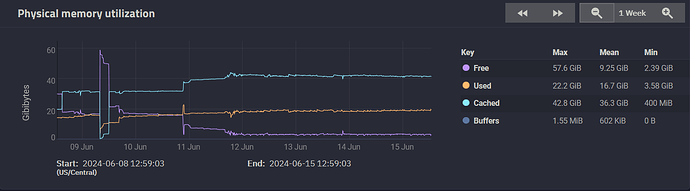

I want to post a follow-up on the same topic and issue. I have the above settings enabled, and they did work for a couple of days; then it decided to ignore my setting and go back up to max. I'm currently in the process of reinstalling my main system OS, because it really, really needed it, so this is on a back burner. OK, to the point: I'm going to post some screenshots of what it decided to do against my wishes, and of the issue that results, to see if anyone has an idea for a simple command, in cron or whatever, that tells the cache to dump and stick to its lane. I don't need it holding data; it should just use what cache it can while transferring files. After that task is done, I want it to release what I'm 96% sure it will never need to touch again. Yes, I can reboot, but that's not how always-on appliances should behave, donchathink?

Would this be more of a bug that I should report?

When trying to start mintMate above, I need VMs to have priority. (Please don't bother telling me to go to Proxmox for hypervisor stuff; I'm on TrueNAS, and that's where I want the help, please.)

I got the following on post init command for similar reasons:

echo 34359738368 >> /sys/module/zfs/parameters/zfs_arc_max

haven’t had it randomly roll back to default in the last 20 days or so of uptime. Give it a try?

Only thing I could think of would be to shrink arc size to something very small & then back to the value that you want it to stay at as a cron. If not mistaken this’d flush:

echo 32 > /sys/module/zfs/parameters/zfs_arc_max

echo 34359738368 > /sys/module/zfs/parameters/zfs_arc_max
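The shrink-then-restore idea above could be wrapped in a small function for a cron job or post init command. This is only a sketch under assumptions: `arc_bounce`, `ARC_MAX_PARAM`, and `ARC_BOUNCE_WAIT` are made-up names, the pause between the two writes is a guess at giving the ARC time to evict, and nothing here checks whether the module actually accepts a low value as small as 32.

```shell
# Sketch only: arc_bounce and both environment variables are hypothetical names.
# Usage: arc_bounce TARGET_BYTES LOW_BYTES
arc_bounce() {
    target="$1"
    low="$2"
    # Default to the real tunable; point ARC_MAX_PARAM at a scratch file to dry-run.
    param="${ARC_MAX_PARAM:-/sys/module/zfs/parameters/zfs_arc_max}"

    echo "$low" > "$param" || return 1      # step 1: shrink the limit
    sleep "${ARC_BOUNCE_WAIT:-10}"          # assumed pause so the ARC can evict
    echo "$target" > "$param" || return 1   # step 2: restore the wanted limit
    cat "$param"                            # print what the parameter now reads
}
```

With the values from this thread it would be called as root as `arc_bounce 34359738368 32`. Setting `ARC_MAX_PARAM` to a temporary file first is a safe way to see what it does without touching the live system.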

Yep, that's the exact line I have in my “post init” commands (I've just now added it to a nightly cron job as well, to hopefully remind it that I'm the one who determines what it gets). But as you see, it ignored it. I made a follow-up to do a full wipe of the cache, but I still want to keep a little more active than flushing it to zero, especially if an rsync is running; I just want it to frequently flush stale data.

I'm going to mark your reply as the solution, because it is the main line that does the limiting and I forgot to post it.

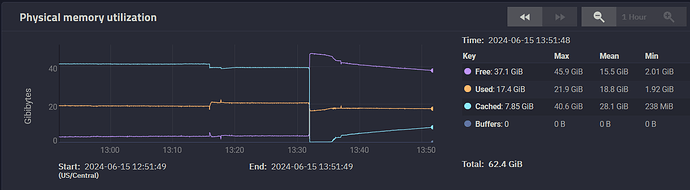

This is the result after issuing the above additional echo commands and then forcing a sync from my QNAP. Exactly what I needed.

Really confused on why it would ignore it though; sanity check that it is being set correctly?

cat /sys/module/zfs/parameters/zfs_arc_max

I’m curious on what it’d return when it goes wonky & what it is returning when it is working properly… This could be a valid bug report if zfs_arc_max is set indeed correctly but isn’t being followed.
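To go with that sanity check, it may also help to compare the tunable against what the ARC itself reports. On Linux, OpenZFS exposes runtime counters in `/proc/spl/kstat/zfs/arcstats` as name/type/value rows, including `c_max` (the limit in effect) and `size` (current ARC size). A minimal sketch for pulling one value out (`arc_stat` is a made-up helper name):

```shell
# Sketch only: arc_stat is a hypothetical helper name.
# Prints the value column for one named row of an arcstats-style file.
# $1 = stat name (e.g. c_max, size); $2 = optional file path for testing.
arc_stat() {
    awk -v key="$1" '$1 == key { print $3 }' "${2:-/proc/spl/kstat/zfs/arcstats}"
}
```

Running `arc_stat c_max` and `arc_stat size` next to the `cat` of zfs_arc_max, both when things are fine and when they go wonky, would show whether the parameter itself changed or whether the ARC simply grew past it.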

I honestly have no idea. It worked flawlessly for about 2 days, then it said, “yeah, nah, don't care what you want” in an almost Windows Sam type of voice. I just hope some of my investigating helps anyone else with these same issues who wants control per their needs.

On a comedic level, I kinda now want to rig up a Pi to send samples of errors to me, like “OZZY MAN” … “yeah nah, your pool is now in destination …!”

42949672960 was the result of that cat (that's 40 GiB). It's just flat-out ignoring the command; guess it's bug report time.

There it goes. I followed your follow-up to my follow-up to your reply to my reply, and issued the commands to force it way down and then back up to the max limit. It now shows 34359738368.

I'll have to look in a bit and see if something else is at play, and whether I'll need to report it and deal with the Jira stuff. I really hate Jira.

welp, time to bookmark this page in my breakfix folder.

Edit: removed the needless parts since you already got it back to what it should be.

…Uhhh what else… make sure you've got the right path in your post init script? Maybe you have a typo?

LOL

…did you ever end up submitting a bug report? If so, mind linking it? I just had my ARC size randomly change beyond the 32 GB I set. The only thing I don’t recognize, looking back through the logs, was the following:

Jun 19 00:00:00 truenas syslog-ng[5583]: Reliable disk-buffer state loaded; filename='/audit/syslog-ng-00000.rqf', number_of_messages='0'

Jun 19 00:00:00 truenas syslog-ng[5583]: Reliable disk-buffer state loaded; filename='/audit/syslog-ng-00001.rqf', number_of_messages='0'

Jun 19 00:00:00 truenas syslog-ng[5583]: Configuration reload request received, reloading configuration;