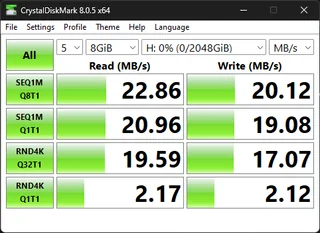

I’ve hit a wall with this issue and cannot seem to get any read/write speeds above 25mbps.

I have tried removing compression and disabling sync. I’ve also tried striping (RAID 0) and mirroring (RAID 1). No matter the RAID configuration, the speed never changes. Currently I’m running raidz1.

Specs:

Processor: Intel(R) Xeon(R) CPU E3-1230 v6 @ 3.50GHz

Memory: 32 GB 2400 MHz (2x 16GB)

NIC: Intel X520-DA2 10GbE SFP+

Hard Drives: 4x HGST 12Gbps SAS HDD - HUS726040ALS211 - raidz1

HBA: Dell HBA330+

Tests:

SMB: (Transfer speeds via SCP and SFTP are about the same)

Fio:

testfile: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=psync, iodepth=1

fio-3.33

Starting 1 process

testfile: Laying out IO file (1 file / 102400MiB)

Jobs: 1 (f=1): [W(1)][100.0%][w=513MiB/s][w=513 IOPS][eta 00m:00s]

testfile: (groupid=0, jobs=1): err= 0: pid=9925: Wed Oct 9 12:12:33 2024

write: IOPS=473, BW=474MiB/s (497MB/s)(27.8GiB/60055msec); 0 zone resets

bw ( KiB/s): min=333824, max=550912, per=100.00%, avg=485324.01, stdev=47828.97, samples=120

iops : min= 326, max= 538, avg=473.86, stdev=46.75, samples=120

cpu : usr=1.41%, sys=10.00%, ctx=29630, majf=0, minf=37

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,28458,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
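As a rough sanity check on the fio summary, the reported bandwidth can be recomputed from the issued write count, the 1024KiB block size, and the 60055 msec runtime. This is only a sketch of the arithmetic; the variable names are my own and the figures are copied from the output above.

```python
# Figures copied from the fio output above; variable names are illustrative.
issued_writes = 28458          # from "issued rwts: total=0,28458,0,0"
block_size_mib = 1             # bs=1024KiB, i.e. 1 MiB per write
runtime_s = 60055 / 1000       # from "60055msec"

total_mib = issued_writes * block_size_mib
total_gib = total_mib / 1024
bw_mib_s = total_mib / runtime_s
bw_mb_s = bw_mib_s * 1024**2 / 1e6   # MiB/s (binary) to MB/s (decimal)

print(f"written: {total_gib:.1f} GiB")
print(f"bandwidth: {bw_mib_s:.0f} MiB/s = {bw_mb_s:.0f} MB/s")
```

The recomputed values agree with what fio printed (27.8GiB, 474MiB/s, 497MB/s), so the local sequential write figure is internally consistent.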

DD Write:

4194304000 bytes (4.2 GB, 3.9 GiB) copied, 0.645907 s, 6.5 GB/s

DD Read:

4194304000 bytes (4.2 GB, 3.9 GiB) copied, 0.389345 s, 10.8 GB/s
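The dd figures look too fast to be raw disk throughput, and a quick calculation supports that. The sketch below recomputes both rates and compares them with a generous ceiling: four drives each running at the full 12 Gbit/s SAS link rate, ignoring encoding overhead. That ceiling is my own assumption for illustration, not something measured here.

```python
# Figures copied from the dd output above.
bytes_copied = 4_194_304_000
write_seconds = 0.645907
read_seconds = 0.389345

write_gb_s = bytes_copied / write_seconds / 1e9
read_gb_s = bytes_copied / read_seconds / 1e9

# Assumption: 4 drives, each at the full 12 Gbit/s SAS link rate,
# ignoring encoding overhead. Real HDDs sustain far less than this.
drives = 4
sas_link_gbit_s = 12
ceiling_gb_s = drives * sas_link_gbit_s / 8

print(f"dd write: {write_gb_s:.1f} GB/s")
print(f"dd read:  {read_gb_s:.1f} GB/s")
print(f"combined SAS link ceiling: {ceiling_gb_s:.1f} GB/s")
print("write exceeds ceiling:", write_gb_s > ceiling_gb_s)
print("read exceeds ceiling:", read_gb_s > ceiling_gb_s)
```

Both rates come out above even that optimistic ceiling, so these dd runs most likely measured caching in RAM rather than the disks. The fio result is probably the more realistic local number.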

Iperf3 running 4 parallel streams:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec 129 sender

[ 5] 0.00-10.00 sec 2.73 GBytes 2.35 Gbits/sec receiver

[ 7] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec 135 sender

[ 7] 0.00-10.00 sec 2.73 GBytes 2.35 Gbits/sec receiver

[ 9] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec 124 sender

[ 9] 0.00-10.00 sec 2.73 GBytes 2.35 Gbits/sec receiver

[ 11] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec 142 sender

[ 11] 0.00-10.00 sec 2.73 GBytes 2.35 Gbits/sec receiver

[SUM] 0.00-10.00 sec 10.9 GBytes 9.40 Gbits/sec 530 sender

[SUM] 0.00-10.00 sec 10.9 GBytes 9.38 Gbits/sec receiver
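To put the numbers side by side, it helps to convert everything to the same unit. The sketch below converts the iperf3 sender total to MB/s and compares the 25 "mbps" transfer speed against both the network and the fio result. Since "mbps" is ambiguous, it is evaluated under both readings (megabits and megabytes per second); the helper name is my own.

```python
def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    """Convert Gbit/s to MB/s using decimal units (1 byte = 8 bits)."""
    return gbit_per_s * 1e9 / 8 / 1e6

iperf_mb_s = gbit_to_mb_per_s(9.40)   # iperf3 [SUM] sender bitrate
fio_mb_s = 497.0                      # fio sequential write, from the output above

# "25mbps" could mean megabits or megabytes per second; check both readings.
observed_mb_s = {
    "25 Mbit/s": 25 / 8,
    "25 MB/s": 25.0,
}

print(f"iperf3: {iperf_mb_s:.0f} MB/s, fio: {fio_mb_s:.0f} MB/s")
for label, mb_s in observed_mb_s.items():
    share_net = mb_s / iperf_mb_s
    share_disk = mb_s / fio_mb_s
    print(f"{label}: {share_net:.1%} of network, {share_disk:.1%} of local write")
```

Under either reading, the file transfer speed is a small fraction of what both the network and the pool deliver on their own, which suggests the bottleneck sits somewhere between the two rather than in the disks or the link.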