Hi there,

I have a site-to-site connection between my main SCALE system and my remote one. Syncing via replication tasks works. The problem is terrible performance: I have a 50 Mbit/s upload but only get 10 Mbit/s at best.

The connection speed of both systems is NOT the issue: using Cloud Sync with Dropbox, I can transfer at 50 Mbit/s on the sending side and at 350 Mbit/s on the receiving side.

So it must be a problem with how the two systems work together. They talk to each other via Tailscale, though as I understand it, the traffic does not flow through Tailscale's servers, so that shouldn't be a bottleneck, should it?

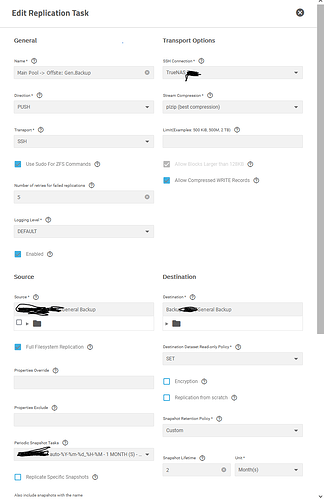

The screenshot above shows the settings I use for the replication tasks. I have already tried different settings, such as disabling compression or SSH encryption, without any difference.

Any input would be greatly appreciated.

Best regards

BillyElliot

I don’t use ZFS replication, but I have a lot of general communications experience.

- You appear to be using two layers of encryption: SSH (which is encrypted) and the VPN (which is also encrypted). Consider whether you need the VPN for this, given that the transfer is already encrypted by SSH.
- Try a faster compression algorithm, or no compression, since you are not maxing out your bandwidth and compression therefore gives you less benefit.
- I have read in a community post that you should use SSH+NETCAT, which is faster.
- I read in the Oracle documentation that with ZFS replication “I/O is limited to a maximum of 200 MB/s per project level replication”.
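The Oracle figure is in megabytes per second, while the link speeds in this thread are in megabits per second. A quick conversion, assuming decimal units (1 MB = 1,000,000 bytes) and 8 bits per byte, shows how far apart they are:

```python
# Convert a rate in megabytes per second (MB/s) to megabits per second (Mbit/s).
# Assumes decimal units: 1 MB = 1,000,000 bytes and 1 Mbit = 1,000,000 bits.
BITS_PER_BYTE = 8


def mbytes_to_mbits(mb_per_s: float) -> float:
    """Return the Mbit/s equivalent of a rate given in MB/s."""
    return mb_per_s * BITS_PER_BYTE


replication_cap_mbits = mbytes_to_mbits(200)  # Oracle's quoted per-project cap
uplink_mbits = 50  # the sender's upload speed from the original post

print(f"200 MB/s = {replication_cap_mbits:.0f} Mbit/s")
print(f"Cap is {replication_cap_mbits / uplink_mbits:.0f}x the 50 Mbit/s uplink")
```

Even if that limit applied to TrueNAS (the quote comes from Oracle's documentation, so this is an assumption), 1600 Mbit/s is far above a 50 Mbit/s uplink and would not be the bottleneck here.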

Other than that, I am sorry I cannot help further.

Thank you for taking your time to help me!

Well, I have now opened the SSH port of my TrueNAS machine to the internet and ran an SSH transfer without the VPN, and still nothing much. It starts, then stops after a few seconds, but the task keeps running on the pulling TrueNAS machine with no connection timeout or anything.

I ran an iperf3 test between my system and the remote one and got a peak of 30 Mbit/s, at least three times faster.

Via Tailscale I am at 10 Mbit/s. The reason might be latency, since I see roughly 1,500 to 14,000 ms. I learned that there is a difference between a direct connection and one relayed through DERP servers, but I have not yet figured out how to change this behavior.
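With a window-based protocol such as TCP, the sender has to keep roughly bandwidth × round-trip time worth of unacknowledged data in flight to fill the link (the bandwidth-delay product). A minimal sketch using the 50 Mbit/s uplink and the 1.5 s and 14 s latencies above, assuming those figures are round-trip times:

```python
def bandwidth_delay_product_bytes(link_mbits: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link_mbits link busy at rtt_s RTT."""
    bits_in_flight = link_mbits * 1_000_000 * rtt_s
    return bits_in_flight / 8


# Latencies taken from the post; treating them as round-trip times is an assumption.
for rtt in (1.5, 14.0):
    bdp = bandwidth_delay_product_bytes(50, rtt)
    print(f"RTT {rtt:>4} s -> {bdp / 1_000_000:.2f} MB must be in flight")
```

That works out to 9.375 MB at 1.5 s and 87.5 MB at 14 s, which is likely far more than the TCP window the connection actually uses, so most of the link sits idle while waiting for acknowledgements.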

Issue still persists though…

Latency is mainly an issue with a poorly designed protocol that requires an acknowledgement for every packet.

Latency of 1.5 to 14 seconds is crazy, though. With latency like that you certainly cannot expect good throughput, regardless of the speed of the link and regardless of the protocol.
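To put numbers on both points: a protocol that waits for an acknowledgement after every packet can send at most one packet per round trip, and even a windowed protocol can send at most one window per round trip. The sketch below assumes a 1,400-byte packet and a hypothetical 4 MiB window; neither value comes from this thread.

```python
def max_throughput_mbits(bytes_per_rtt: float, rtt_s: float) -> float:
    """Upper bound on throughput (Mbit/s) when at most bytes_per_rtt is sent per round trip."""
    return bytes_per_rtt * 8 / rtt_s / 1_000_000


PACKET_BYTES = 1_400              # assumed payload per packet (stop-and-wait case)
WINDOW_BYTES = 4 * 1024 * 1024    # hypothetical 4 MiB window (windowed case)

for rtt in (1.5, 14.0):
    stop_and_wait = max_throughput_mbits(PACKET_BYTES, rtt)
    windowed = max_throughput_mbits(WINDOW_BYTES, rtt)
    print(f"RTT {rtt:>4} s: stop-and-wait <= {stop_and_wait:.4f} Mbit/s, "
          f"4 MiB window <= {windowed:.1f} Mbit/s")
```

Even with the generous window, 14 s of latency caps the transfer at about 2.4 Mbit/s, no matter how fast the underlying link is.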

Alright.

I had quite a lot of bad luck. Yesterday, when I tested the transfer without the VPN (SSH encryption only, connecting directly to my sending NAS), my ISP was having trouble too. Today I tried again, and with a direct SSH connection and no Tailscale I can easily saturate my 50 Mbit/s upload with maximum compression.
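Compression helps once the link is the bottleneck because the wire carries compressed bytes: at a compression ratio r, a saturated 50 Mbit/s link delivers up to 50 × r Mbit/s of logical data, provided the CPU can compress that fast. A rough model of this, where the ratios and compressor speeds are made-up values for illustration and decompression on the receiver is ignored:

```python
def effective_logical_rate_mbits(link_mbits: float, ratio: float,
                                 compressor_mbits: float) -> float:
    """Logical (uncompressed) data rate through a compressing sender.

    link_mbits:       wire bandwidth in Mbit/s
    ratio:            uncompressed size / compressed size (1.0 = no gain)
    compressor_mbits: how fast the CPU consumes uncompressed input, in Mbit/s
    """
    # The wire limits logical throughput to link * ratio;
    # the compressor limits it to its own input speed.
    return min(link_mbits * ratio, compressor_mbits)


LINK_MBITS = 50  # the sender's upload speed from this thread

# Hypothetical (name, ratio, compressor speed) cases; not measured on any real system.
scenarios = [
    ("no compression", 1.0, float("inf")),
    ("fast compressor, 1.5:1", 1.5, 400.0),
    ("strong compressor, 3:1", 3.0, 60.0),
]
for name, ratio, cpu in scenarios:
    rate = effective_logical_rate_mbits(LINK_MBITS, ratio, cpu)
    print(f"{name:<24} -> {rate:.0f} Mbit/s of logical data")
```

The last case shows how a stronger but slower algorithm can lose to a faster one: it ends up limited by the CPU rather than by the link, which is also why compression buys little when the link is not saturated.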

So it was only an issue with Tailscale not connecting directly but relaying through a DERP server, which added an insane amount of latency.

Now I just want to figure out how to get Tailscale to connect directly instead of via a DERP server, so I don't have to leave my NAS exposed with its SSH port open.

Anyway, thank you for your time and effort!

Best regards from Germany

BillyElliot

Hrmph!!!

I would say that my answer about latency was the helpful one that should be marked as the solution. LOL.


SSH + Netcat is the way to go. Also, do not underestimate your ISP's ability to detect “server” traffic (which may be against the ToS) and throttle it accordingly. My connection speeds within the Comcast network are well below the upload limits suggested by DSL Reports and similar speed tests, despite running inside a WireGuard tunnel.

My speeds are so slow that the double encryption (SSH and WireGuard) matters only because it adds extra overhead that has to be transferred.
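To see roughly how much the tunnel itself costs per packet, here is a small calculation. It assumes IPv4 on both layers, a 1500-byte path MTU, TCP without options, and WireGuard's data-message overhead of 32 bytes (a 16-byte header plus a 16-byte authentication tag) carried in UDP. It ignores Ethernet framing and SSH's own framing, so it is only a sketch of the encapsulation cost, not a full accounting.

```python
# Per-packet header sizes in bytes (IPv4, TCP without options; Ethernet framing ignored).
IPV4_HEADER = 20
TCP_HEADER = 20
UDP_HEADER = 8
WIREGUARD_DATA_OVERHEAD = 32  # 16-byte message header + 16-byte Poly1305 tag

# Total bytes a WireGuard tunnel adds per packet when the outer packet is IPv4/UDP.
WG_OVER_IPV4 = IPV4_HEADER + UDP_HEADER + WIREGUARD_DATA_OVERHEAD  # 60 bytes


def tcp_payload_fraction(path_mtu: int, tunnel_overhead: int = 0) -> float:
    """Fraction of each full-size packet on the wire that is TCP payload."""
    inner_mtu = path_mtu - tunnel_overhead          # room left for the inner IP packet
    payload = inner_mtu - IPV4_HEADER - TCP_HEADER  # inner IPv4 + TCP headers removed
    return payload / path_mtu


plain = tcp_payload_fraction(1500)
tunneled = tcp_payload_fraction(1500, WG_OVER_IPV4)
print(f"plain TCP: {plain:.1%}, inside WireGuard: {tunneled:.1%}")
```

Under these assumptions the tunnel costs about four percentage points of goodput (97.3% versus 93.3%), small next to the throttling and latency effects discussed above.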