Hi all.

I would like to share some benchmarks with the community, to get your opinions or perhaps to encourage improvements.

The objective of the benchmark is to compare SMB and NFS performance on Dragonfish-24.04.2, with macOS clients (Sonoma 14.5, Intel).

The TrueNAS system (non-virtualized) consists of:

- Supermicro X10SLM+-F

- 32 GB RAM (ECC)

- Intel Xeon Processor E3-1275L v3

- Intel Ethernet Adapter X540-T1 (with jumbo frames on the server, and also on the Mac 10GbE card)

- LSI SAS 9210-8i

For storage:

- 7x WUH721818AL, striped: empty, no data.

- 2 datasets, `nfs` (Unix permissions) and `smb_acl` (ACL permissions)

- With the following configuration (all default except the following):

# zfs get -r -s local all pool

NAME PROPERTY VALUE SOURCE

pool compression lz4 local

pool atime off local

pool aclmode discard local

pool aclinherit discard local

pool primarycache none local

pool acltype posix local

pool/nfs compression off local

pool/nfs snapdir hidden local

pool/nfs aclmode discard local

pool/nfs aclinherit discard local

pool/nfs xattr sa local

pool/nfs copies 1 local

pool/nfs acltype posix local

pool/nfs org.freenas:description local

pool/nfs org.truenas:managedby 192.168.1.10 local

pool/smb_acl compression off local

pool/smb_acl aclmode restricted local

pool/smb_acl aclinherit passthrough local

pool/smb_acl xattr sa local

pool/smb_acl copies 1 local

pool/smb_acl acltype nfsv4 local

pool/smb_acl org.freenas:description local

pool/smb_acl org.truenas:managedby 192.168.1.10 local

Before the benchmarks, I disabled ARC using:

zfs set primarycache=none pool

zpool export pool

zpool import pool
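Since `primarycache=none` is set only on `pool` and the two datasets inherit it, it may be worth confirming the effective value on every dataset after the re-import. A minimal sketch, assuming the scripted output of `zfs get -H -r -o name,value primarycache pool` (tab-separated name and value); the helper name `check_primarycache` is hypothetical and not part of ZFS:

```shell
# Hypothetical helper: reads "name<TAB>value" lines on stdin and prints
# every dataset whose primarycache value is not "none".
# No output means ARC caching is disabled for every dataset listed.
check_primarycache() {
  awk -F'\t' '$2 != "none" { print $1, $2 }'
}

# Intended usage on the TrueNAS host (not run here):
#   zfs get -H -r -o name,value primarycache pool | check_primarycache
```

If the function prints nothing, every dataset under `pool` reports `primarycache=none`.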

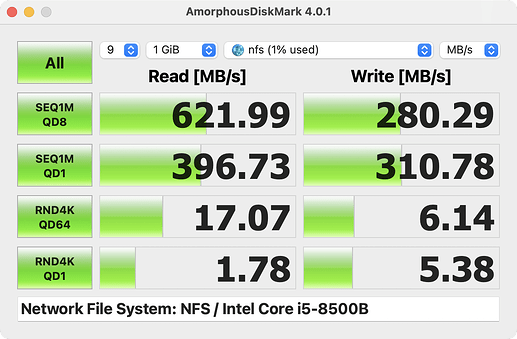

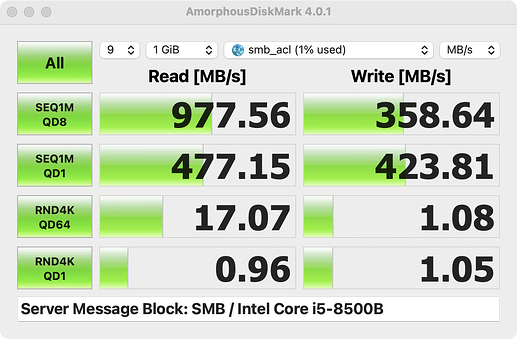

The benchmark software is AmorphousDiskMark, set to 1 GiB and 9 passes.

The results:

NFS:

SMB:

Basically, SMB is slightly better than NFS in the sequential (SEQ) tests, but much worse in the random (RND) tests.

For completeness, the server configuration is all default except for the following:

- SMB: [√] Enable Apple SMB2/3 Protocol Extensions. In the share, Purpose is set to "Default share parameters".

- NFS: [√] Allow non-root mount.

And these are the mount options on macOS:

% smbutil statshares -a

==================================================================================================

SHARE ATTRIBUTE TYPE VALUE

==================================================================================================

smb_acl

SERVER_NAME truenas._smb._tcp.local

USER_ID 501

SMB_NEGOTIATE SMBV_NEG_SMB1_ENABLED

SMB_NEGOTIATE SMBV_NEG_SMB2_ENABLED

SMB_NEGOTIATE SMBV_NEG_SMB3_ENABLED

SMB_VERSION SMB_3.1.1

SMB_ENCRYPT_ALGORITHMS AES_128_CCM_ENABLED

SMB_ENCRYPT_ALGORITHMS AES_128_GCM_ENABLED

SMB_ENCRYPT_ALGORITHMS AES_256_CCM_ENABLED

SMB_ENCRYPT_ALGORITHMS AES_256_GCM_ENABLED

SMB_CURR_ENCRYPT_ALGORITHM OFF

SMB_SIGN_ALGORITHMS AES_128_CMAC_ENABLED

SMB_SIGN_ALGORITHMS AES_128_GMAC_ENABLED

SMB_CURR_SIGN_ALGORITHM AES_128_GMAC

SMB_SHARE_TYPE DISK

SIGNING_SUPPORTED TRUE

EXTENDED_SECURITY_SUPPORTED TRUE

UNIX_SUPPORT TRUE

LARGE_FILE_SUPPORTED TRUE

OS_X_SERVER TRUE

FILE_IDS_SUPPORTED TRUE

DFS_SUPPORTED TRUE

FILE_LEASING_SUPPORTED TRUE

MULTI_CREDIT_SUPPORTED TRUE

SESSION_RECONNECT_TIME 0:0

SESSION_RECONNECT_COUNT 0
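For anyone comparing their own session against the listing above, single fields such as the negotiated dialect or the active signing algorithm can be pulled out of the `smbutil statshares -a` output. A minimal sketch, assuming the two-column "ATTRIBUTE VALUE" layout shown above; the helper name `smb_attr` is hypothetical:

```shell
# Hypothetical helper: reads smbutil statshares output on stdin and prints
# the value column for every line whose attribute name matches $1.
smb_attr() {
  awk -v key="$1" '$1 == key { print $2 }'
}

# Intended usage on the macOS client (not run here):
#   smbutil statshares -a | smb_attr SMB_VERSION
#   smbutil statshares -a | smb_attr SMB_CURR_SIGN_ALGORITHM
```

Attributes that appear more than once, such as `SMB_ENCRYPT_ALGORITHMS`, produce one output line per occurrence.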

% nfsstat -m

/Users/vicmarto/nfs from 10.0.1.150:/mnt/pool/nfs

-- Original mount options:

General mount flags: 0x0

NFS parameters: vers=3,rsize=131072,wsize=131072,readahead=128,dsize=131072

File system locations:

/mnt/pool/nfs @ 10.0.1.150 (10.0.1.150)

-- Current mount parameters:

General mount flags: 0x4000000 multilabel

NFS parameters: vers=3,tcp,port=2049,nomntudp,hard,nointr,noresvport,negnamecache,callumnt,locks,quota,rsize=131072,wsize=131072,readahead=128,dsize=131072,rdirplus,nodumbtimer,timeo=10,maxgroups=16,acregmin=5,acregmax=60,acdirmin=5,acdirmax=60,acrootdirmin=5,acrootdirmax=60,nomutejukebox,nonfc,sec=sys

File system locations:

/mnt/pool/nfs @ 10.0.1.150 (10.0.1.150)

Status flags: 0x0
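To reproduce the NFS mount, the "Original mount options" reported by `nfsstat -m` can be passed back to `mount`. A minimal sketch that only prints the command instead of running it; the function name `nfs_mount_cmd` is hypothetical, and the option string is copied from the output above rather than from the exact command I typed:

```shell
# Hypothetical helper: prints (does not execute) a macOS mount command
# using the options listed under "Original mount options".
# Arguments: server address, exported path, local mount point.
nfs_mount_cmd() {
  opts="vers=3,rsize=131072,wsize=131072,readahead=128,dsize=131072"
  printf 'mount -t nfs -o %s %s:%s %s\n' "$opts" "$1" "$2" "$3"
}

# Values taken from the nfsstat output above.
nfs_mount_cmd 10.0.1.150 /mnt/pool/nfs /Users/vicmarto/nfs
```

The printed line can be reviewed and then run manually on the Mac; the remaining parameters under "Current mount parameters" are the values the client reported without being set explicitly.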

The ZFS file system is quite difficult to benchmark, so I’ve probably made some procedural error. If so, I am open to learning!