If you’re wondering “why do the writes to my TrueNAS system start off really fast, but slow down after a few seconds?” then this post may help explain the OpenZFS “Write Throttle” - the mechanism by which TrueNAS adjusts the incoming write rate so that your system can still respond to remote requests in a timely manner without overwhelming your pool disks.

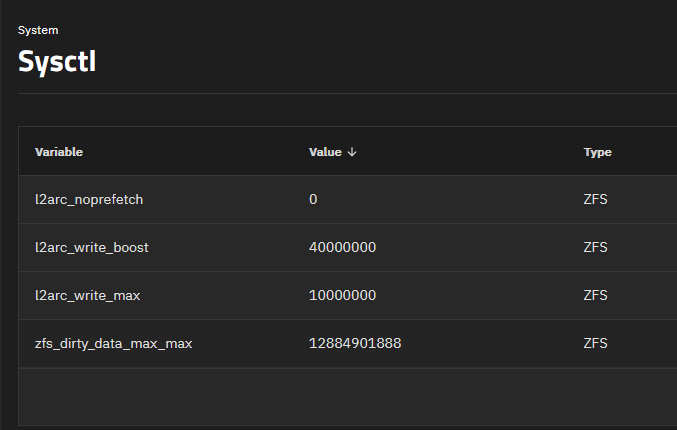

The comments below apply to users who have made no changes to their tunables. I’ll talk about those later, possibly in a separate resource - we’re sticking with the defaults for this exercise.

(Be Ye Warned; Here Thar Be Generalizations.)

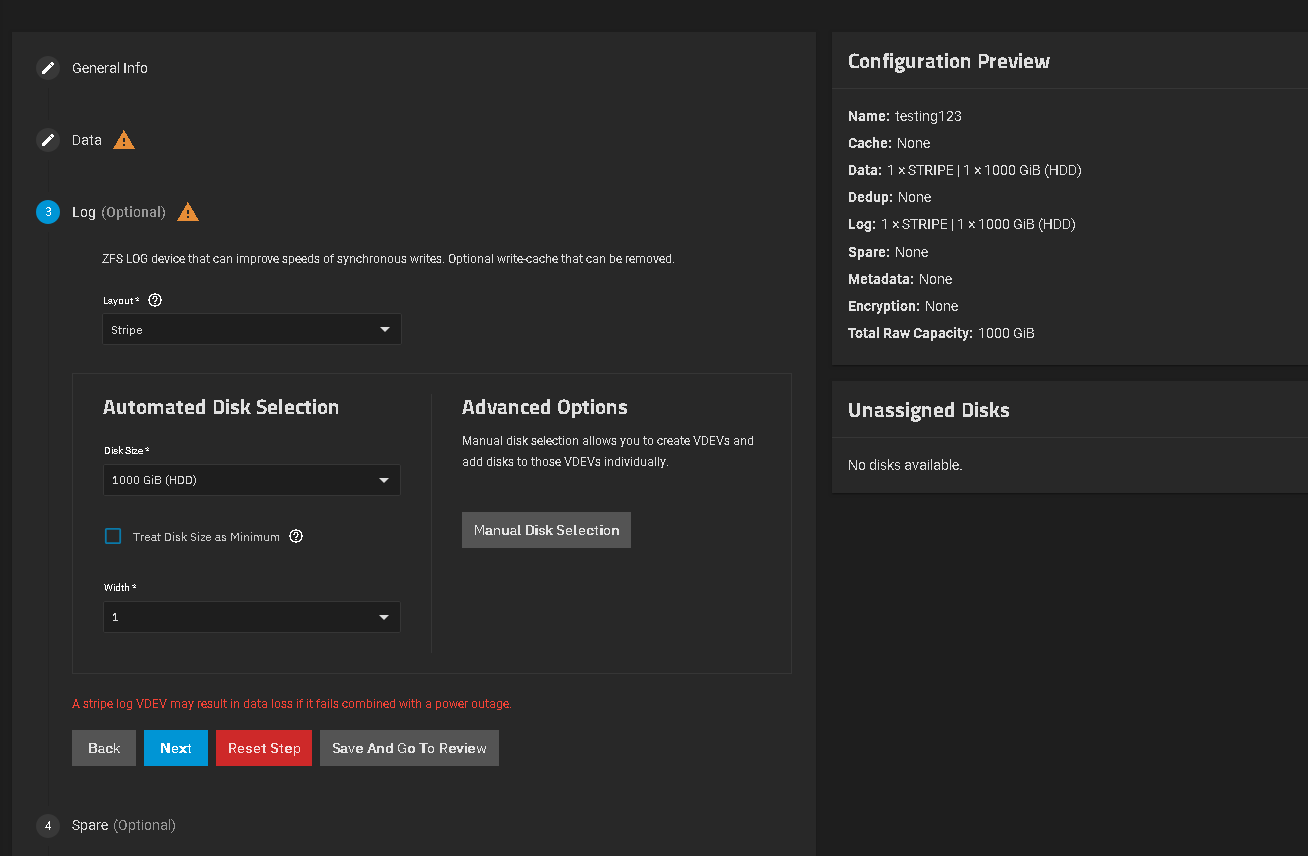

The maximum amount of “dirty data” - data stored in RAM or SLOG, but not committed to the pool - is 10% of your system RAM or 4GB, whichever is smaller. So even a system with, say, 192GB of RAM, will still by default have a 4GB cap on how much SLOG it can effectively use.
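To make the cap concrete, here's a minimal sketch of that "10% or 4GB, whichever is smaller" rule. The function name is made up for illustration and this isn't ZFS code, just the arithmetic from the paragraph above (using binary GiB).

```python
GIB = 1024 ** 3

def default_dirty_data_max(ram_bytes: int) -> int:
    """Default dirty data ceiling: 10% of RAM, capped at 4 GiB."""
    return min(ram_bytes // 10, 4 * GIB)

# Small systems are limited by the 10% rule, big ones by the 4 GiB cap.
for ram_gib in (16, 32, 64, 192):
    cap = default_dirty_data_max(ram_gib * GIB)
    print(f"{ram_gib:>4} GiB RAM -> {cap / GIB:.1f} GiB max dirty data")
```

Anything at or above 40GB of RAM lands on the same 4GB ceiling.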

ZFS will start quiescing and closing a transaction group when you have either 20% of your maximum dirty data pending (820MB with the 4GB default max) or after 5 seconds have passed.

The write throttle starts to kick in at 60% of your maximum dirty data, or 2.4GB. The curve is non-linear (it climbs gently at first, then very steeply), and the midpoint is defaulted to 500µs or 2000 IOPS - the early stages of the throttle are applying nanoseconds of delay, whereas getting yourself close to 100% full will add literally tens of milliseconds of artificial slowness. But because it’s a curve and not a static On/Off, you’ll equalize your latency-vs-throughput numbers at around the natural capabilities of your vdevs.
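For a feel of how steep that curve gets, here's a rough sketch. The OpenZFS module parameter documentation describes the delay as `zfs_delay_scale * (dirty - min) / (max - dirty)`; the snippet below evaluates that expression with dirty data as a fraction of the maximum. The constant and function names are my own, and the 100ms ceiling on a single delay is an assumption about the default, so treat the numbers as illustrative rather than gospel.

```python
DELAY_SCALE_NS = 500_000      # 500 µs midpoint, as described above
THROTTLE_START = 0.60         # throttle begins at 60% of max dirty data
MAX_DELAY_NS = 100_000_000    # assumed 100 ms ceiling on any single delay

def throttle_delay_ns(dirty_fraction: float) -> float:
    """Approximate per-write delay for a given dirty data fill level (0.0 to 1.0)."""
    if dirty_fraction <= THROTTLE_START:
        return 0.0                      # below the threshold: no throttle at all
    if dirty_fraction >= 1.0:
        return float(MAX_DELAY_NS)      # completely full: pinned at the ceiling
    delay = DELAY_SCALE_NS * (dirty_fraction - THROTTLE_START) / (1.0 - dirty_fraction)
    return min(delay, float(MAX_DELAY_NS))

for pct in (60, 61, 70, 80, 90, 99):
    delay_us = throttle_delay_ns(pct / 100) / 1000
    print(f"{pct:>3}% dirty -> {delay_us:>10.1f} µs per write")
```

The midpoint between 60% and 100% is 80%, which is where the delay works out to the 500µs default; by 99% it is up in the tens of milliseconds.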

Let’s say you have a theoretical 8Gbps network link (shout out to my FC users) simply because that divides quite nicely into 1GB/s of writes that can come flying into the array. There are a dozen spinning disks set up in two 6-disk RAIDZ2 vdevs, and it’s a nice pristine array. Each drive has lots of free space and ZFS will happily serialize all the writes, letting your disks write at a nice steady 100MB/s each. Four data disks in each vdev (the other two are parity), two vdevs - 800MB/s total vdev speed.

The first second of writes comes flying in - 1GB of dirty data is now on the system. ZFS has already forced a transaction group due to the 20% tripwire; let’s say it’s started writing immediately. But your drives can only drain 800MB of that 1GB in one second. There’s 200MB left.

Another second of writes shows up - 1.2GB in the queue. ZFS writes another 800MB to the disks - 400MB left.

See where I’m going here?

After twelve seconds of sustained writes, the amount of outstanding dirty data hits the 60% limit to start throttling, and your network speed drops. Maybe it’s 990MB/s at first. But you’ll see it slow down, down, down, and then equalize at a number roughly equal to the 800MB/s your disks are capable of.

That’s what happens when your disks are shiny, clean, and pristine. What happens a few years down the road, if you’ve got free space fragmentation and those drives are having to seek all over? They’re not going to deliver 100MB/s each - you’ll be lucky to get 25MB/s.

One second of writes now causes 800MB to be “backed up” in your dirty data queue. In only three seconds, you’re now throttling; and you’re going to throttle harder and faster until you hit the 200MB/s your pool is capable of.
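If you want to plug in your own numbers, the second-by-second bookkeeping in both scenarios can be sketched like this. It's deliberately crude: it assumes a constant ingest rate, a constant drain rate, and the 2.4GB throttle point in round decimal MB, and the function name is just for illustration.

```python
def seconds_until_throttle(ingest_mb_s, drain_mb_s, throttle_start_mb=2400):
    """Whole seconds of sustained writes before the dirty data backlog
    reaches the throttle point. Returns None if the pool keeps up."""
    if drain_mb_s >= ingest_mb_s:
        return None                      # vdevs are faster than the network
    backlog_mb = 0
    seconds = 0
    while backlog_mb < throttle_start_mb:
        backlog_mb += ingest_mb_s - drain_mb_s   # what the disks couldn't drain
        seconds += 1
    return seconds

pristine = seconds_until_throttle(1000, 8 * 100)    # 8 data disks at 100MB/s
fragmented = seconds_until_throttle(1000, 8 * 25)   # 8 data disks at 25MB/s
print(f"pristine pool:   throttling after {pristine} s")
print(f"fragmented pool: throttling after {fragmented} s")
```

That gives the same twelve seconds and three seconds as the walkthrough above.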

So what does all this have to do with SLOG size?

A lot, really. If your workload pattern is very bursty and results in your SLOG “filling up” to a certain level, but never significantly throttling, and then giving ZFS enough time to flush all of that outstanding dirty data to disk, you can have an array that absorbs several GB of writes at line-speed, without having to buy sufficient vdev hardware to sustain that level of performance. If you know your data pattern, you can allow just enough space in that dirty data value to soak it all up quickly into SLOG, and then lazily flush it out to disk. It’s magical.
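As a back-of-the-envelope sketch of that bursty case: given a line speed and a pool drain rate, you can estimate how big a burst gets absorbed at full speed before the throttle starts, and how long a quiet period the pool then needs to flush the backlog. Same simplifying assumptions as the example numbers in this post (constant rates, 2.4GB throttle point in decimal MB), and the helper names are hypothetical.

```python
def max_line_speed_burst_mb(ingest_mb_s, drain_mb_s, throttle_start_mb=2400):
    """Largest burst (in MB) that arrives at full line speed before throttling."""
    surplus_mb_s = ingest_mb_s - drain_mb_s
    if surplus_mb_s <= 0:
        return float("inf")              # the pool keeps up; never throttles
    seconds_at_full_speed = throttle_start_mb / surplus_mb_s
    return ingest_mb_s * seconds_at_full_speed

def flush_time_s(backlog_mb, drain_mb_s):
    """Quiet time needed for the pool to drain a given dirty data backlog."""
    return backlog_mb / drain_mb_s

for label, drain in (("pristine", 800), ("fragmented", 200)):
    burst_gb = max_line_speed_burst_mb(1000, drain) / 1000
    rest_s = flush_time_s(2400, drain)
    print(f"{label}: ~{burst_gb:.0f}GB burst at line speed, "
          f"then ~{rest_s:.0f} s of quiet to flush")
```

If your bursts are smaller than that figure and the gaps between them are longer than the flush time, you stay on the fast side of the throttle.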

On the other hand, if your workload involves sustained writes with hardly a moment’s rest, you simply need faster vdevs. A larger dirty data/SLOG only kicks the can down the road; eventually it will fill up and begin throttling your network down to the speed of your vdevs. If your vdevs are faster than your network? Congratulations, you’ll never throttle. But now you aren’t using your vdevs to their full potential. You should upgrade your network. Which means you need faster vdevs. Repeat as desired/financially practical.

This was a longer post than I intended, but the takeaway is fairly simple: the default SLOG sizing and write throttle limit you to 4GB. Vdev speed is still the more important factor, but if you know your data and your system well enough, you can cheat a little bit.