This resource was originally created by user: @NickF1227 on the TrueNAS Community Forums Archive.

NOTE: This script is provided as-is and is not endorsed by TrueNAS or iXsystems. Use at your own risk.


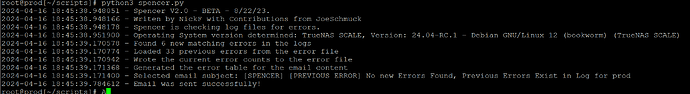

Spencer v2.0 BETA-1

Not Quite Psychic…

Code:

Version 2.0 08/22/23

Refactored a lot of code.

Dynamically determine whether run from MultiReport or if Spencer is called directly.

Overall improvements to robustness of the script, the accuracy of its findings, and an increase in scope.

New search patterns and customizability.

-----------------------------------------------

Version 1.3 08/13/23 - Contribution by JoeSchmuck merged

Added a new feature for tracking and reporting previous errors differently than new errors.

-----------------------------------------------

A special thanks to @joeschmuck for his recent and welcome contributions!!

TL;DR

This script was written to identify issues which may otherwise be silent, as they are not reported by the built-in TrueNAS Alert system or by some third-party community scripts like @joeschmuck’s Multi-Report.

multi_report.sh version for Core and Scale - Updates


This script works with both CORE and SCALE.

Due to a change in logging on SCALE Cobia, this script will not work on versions newer than 22.12.x!

I will update it when I am able.

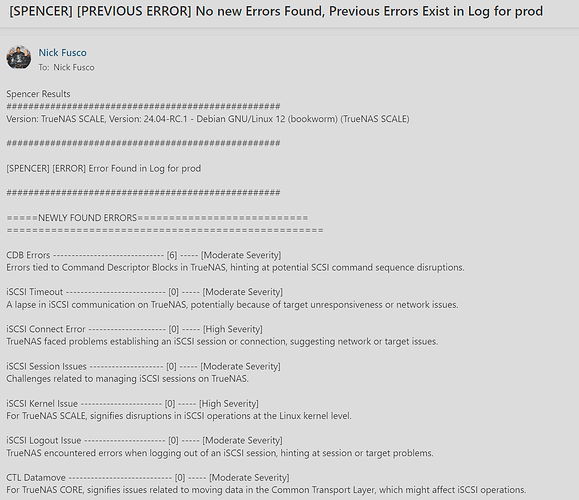

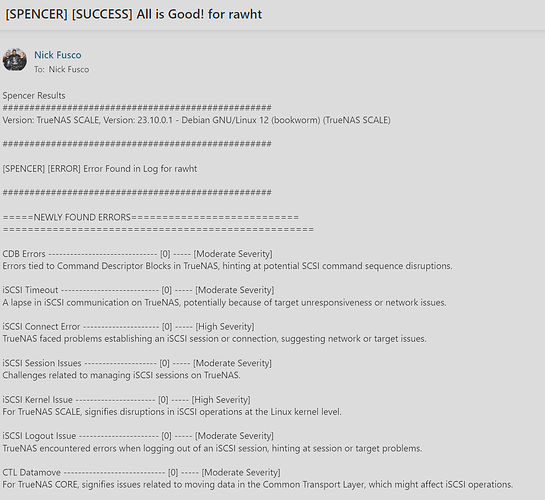

“Spencer Reports”

I Know, You Know

Okay, Gus, listen up! This isn’t your grandmother’s script, it’s the “Spencer” script! You know, named after me, the Shawn Spencer. It’s got that psychic detective edge.

You see, first, it’s got this fancy thing where it talks to the spirits – or a JSON file, but who’s keeping track – and figures out what errors it’s seen before. I like to think of it as the script’s own little psychic vision.

Then, it gets down to business, checking out the current scene (or, you know, the logs) and finding any new mysterious events that occurred. If there’s something fishy, like there are no new errors but old ones are lurking around, it’s going to shout out loud with our “PREVIOUS ERROR” alert. If everything’s hunky-dory, it celebrates with a joyful “SUCCESS” note. And if there are any new secrets unveiled, it’ll don the “ERROR” hat.

Now, what’s cooler is this script doesn’t just stop at identifying these errors. Oh no! It dresses them up real nice and presents them like I present clues. And of course, it won’t leave you hanging. It provides all the juicy details about each error, including a super intense description and how many times that sneaky error appeared.

Oh, and just in case you’re super curious, it will also tell you the exact time and place (or you know, log entry) where the error made its appearance.

And when all is said and done, it makes sure to remember all the new mysteries it encountered for next time. Kinda like my “remember that for later” moments.
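The “remember that for later” trick boils down to comparing this run’s matches against what was stored last time. Below is a minimal Python sketch of that idea, not Spencer’s actual code: `split_new_and_repeat` is a hypothetical helper, `previous` stands in for the contents of the JSON file (log entry mapped to timestamp), and “line A” / “line B” are placeholder log entries.

```python
def split_new_and_repeat(found, previous):
    """Hypothetical helper: sort this run's matches into new and repeat errors.

    found    -- list of (entry, timestamp) pairs matched in the current log scan
    previous -- dict loaded from the JSON file, mapping entry -> timestamp
    """
    new_errors, repeat_errors = [], []
    for entry, timestamp in found:
        if previous.get(entry) == timestamp:
            repeat_errors.append(entry)   # already seen on an earlier run
        else:
            new_errors.append(entry)
            previous[entry] = timestamp   # remember it for the next run
    return new_errors, repeat_errors


# Placeholder data: "line A" was stored last time, "line B" is new.
previous = {"line A": "Aug 22 12:30:45"}
found = [("line A", "Aug 22 12:30:45"), ("line B", "Aug 22 12:35:00")]
new, repeat = split_new_and_repeat(found, previous)
print(new, repeat)  # ['line B'] ['line A']
```

After the call, `previous` also holds “line B”, so a later run would treat it as a previous error rather than a new one.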

So, in a nutshell: this script is like me if I were a bunch of code, always on the lookout for errors and doing it with style. And pineapples. Definitely with pineapples.

“Spencer’s Psych-O Error Patterns List!”

Your Glimpse into the Supernatural World of Error Patterns

Hey TrueNAS users and pineapple enthusiasts, this is your guide to what’s going haywire in your system, or as Gus likes to call it - “That thing that beeps a lot.”

______________________________________________________________________________________

The Delicious Menu of Error Patterns


______________________________________________________________________________________

Linux (The TrueNAS SCALE Experience)

______________________________________________________________________________________

iSCSI Drama Club:

High Severity:

“Timeout Timeout”: It’s like when Gus takes too long ordering food.

“Session Drama”: iSCSI’s been rejected more times than I’ve been kicked out of the police station.

Moderate Moody Moments:

“Connection Feels”: Someone just can’t connect, like me with technology.

“Kernel Kerfuffle”: Even the kernel is having a dramatic day.

Low-Key Laments:

“Logout Blues”: Saying goodbye is hard. For everyone.

NFS Shenanigans:

High Drama:

“Server Ghosting”: I’m pretty sure it’s haunted.

“Stale Like Last Week’s Doughnuts”: And not the delicious kind.

“Time’s Up!”: The NFS clock ran out, probably too busy watching reruns of 80s sitcoms.

Middle-of-the-Road Malarkey:

“Server’s Feeling OK”: It had a good night’s sleep.

“Lost the Portmap”: Somewhere between here and the snack bar.

“Try, Try Again”: NFS’s mantra for the day.

“Mounting Mischief”: Not the horse kind.

“Access Denied”: Even NFS sometimes doesn’t get invited to the party.

SCSI’s Soap Opera:

High-Flying Histrionics:

“CDB’s Dramatic Monologues”: These blocks have stories, man!

______________________________________________________________________________________

FreeBSD (The TrueNAS CORE Chronicles)

______________________________________________________________________________________

NFS’s Plot Twists:

High Peaks of Peril:

“FreeBSD Timeout Trials”: The drama continues in another dimension.

iSCSI Intrigue:

High Stakes Storylines:

“CAM Status Conundrums”: It’s like a whodunit, but for iSCSI.

Moderate Mysteries:

“Datamove Dance”: A ballet of binary.

“iSCSI’s General Gossip”: All the juicy details.

More SCSI Sagas:

High Drama Days:

“CAM Status Cliffhangers”: Every good series needs them.

General Dramedies (Comedy + Drama, Gus!)

High Points of Perplexity:

“SCSI’s Soliloquy”: Pure poetry.

“Link Reset Riddles”: More challenging than that time we played trivia.

Moderate Musings:

“Failed Reading Romances”: I feel this on a spiritual level.

“Write Wrongs”: It’s like a typo, but more dramatic.

“ATA Bus Stops”: Where does it even go?

“Exceptional Exceptions”: Because even errors are special.

“ACPI’s Moods”: Sometimes it’s upset, sometimes it’s just warning you.

Low-Level Lightheartedness:

“ACPI Chuckles”: More of a snicker, really.

______________________________________________________________________________________

End of Spencer’s Spectacular List!

And remember, when things go sideways, always keep a pineapple handy. Oh, and maybe a technician. But mostly the pineapple.


______________________________________________________________________________________

You can run the script as a cron job!


WARNING: Totally AI Generated Conversation

Gus: “Shawn, have you seen these new reports? The details are incredible! My inner ZFS geek is genuinely excited.”

Shawn: “Reports? Probably got lost somewhere while our server was throwing a tantrum connecting to TrueNAS.”

Gus: “That’s just it! This might actually be our breakthrough for that issue. This isn’t just about the clarity; it has crucial information about the connectivity problem we’ve been tearing our hair out over.”

Shawn: “Come on, Gus. We’ve been at this for days. How is some flashy report going to change anything?”

Gus: “Hear me out. Look at this line: the iSCSI Kernel Issue on SCALE. It might be the root cause of our connection issues.”

Shawn: “I’ve seen a million error reports, Gus. Why is this one any different?”

Gus: “Because this time, it’s not just an error. It’s giving us specific data about what’s going wrong. ZFS and Spencer are practically screaming the answer at us!”

Shawn: “Alright, alright! Let me see… Damn… you might be onto something. Ugh, let’s get this fixed.”

TrueNAS CORE Example Report:

Code:

Spencer Results

##################################################

Version: CORE

##################################################

NO NEW ERRORS FOUND

##################################################

=====Previously Found Errors=================

iSCSI General Errors -------------------------------------- [1] ----- [Moderate Severity - Broad category for other iSCSI disruptions on TrueNAS.]

NFS Server Not Responding --------------------------------- [1] ----- [High Severity - The NFS service on TrueNAS isn’t responding, hinting at service downtimes or network issues.]

==================================================

TrueNAS SCALE Example Report:

Code:


Spencer Results

##################################################

Version: SCALE

##################################################

[SPENCER] [ERROR] Error Found in Log for Pineapple

##################################################

=====NEWLY FOUND ERRORS===========================

Hard Resetting Link --------------------------------------- [1] ----- [High Severity - Indicates that a storage communication link on the TrueNAS system was forcibly reset, which may affect data transfer or access.]

ACPI Error ----------------------------------------------- [1] ----- [Moderate Severity - For TrueNAS SCALE, indicates hardware or power management issues, which might impact system performance.]

iSCSI Kernel Issue --------------------------------------- [1] ----- [High Severity - For TrueNAS SCALE, signifies disruptions in iSCSI operations at the Linux kernel level.]

NFS Retrying ---------------------------------------------- [1] ----- [Low Severity - TrueNAS is retrying a previously failed NFS operation, suggesting transient errors.]

==================================================

=====Previously Found Errors=================

Exception Emask ------------------------------------------ [1] ----- [High Severity - For TrueNAS SCALE users, this points to exceptions in the system, potentially related to disk operations or kernel disruptions.]

ACPI Exception ------------------------------------------- [1] ----- [Moderate Severity - For TrueNAS SCALE, highlights unexpected hardware or power configurations that should be checked.]

==================================================

##################################################

Corresponding Log Entries with Timestamps:

##################################################

2023-08-22 12:30:45 - hard_resetting_link: Encountered an error.

2023-08-22 12:31:50 - acpi_error: Power management alert.

2023-08-22 12:35:00 - iscsi_kernel_issue: Kernel disruption detected.

2023-08-22 12:37:05 - nfs_retrying: Retrying NFS operation.

Version 1.3 and onward will be an “officially” supported extension in a future version of Multi-Report. Version 1.3 of Spencer can also run in stand-alone mode as is, with no additional changes.

However, the upcoming version of Multi-Report will automagically run Spencer and attach the relevant logs to its email, so a separate cron job will no longer be required if you choose. One single source-of-truth email instead of two different ones.

Code:

root@mini[~]# ./multi_report.sh

Multi-Report v2.4.3a dtd:2023-08-16 (TrueNAS Scale 22.12.2)

Checking for Updates

Current Version 2.4.3a – GitHub Version 2.4.3

No Update Required

2023-08-16 23:14:15.789564 - Spencer is checking log files for errors and pushing output to Multi-Report.


Spencer Script Customization Guide

SPENCER V2.0 - BETA - 8/22/23 - Guide to Mastering the Mysteries Within

Hi there, seeker of knowledge! It’s me, Gus, your friendly, quirky guide to the complex, yet incredibly powerful, world of the Spencer Script. If you’ve got a moment (or several), let’s dive deep into the abyss of this code. Oh, and always remember: “Read the manual, but with flair!”

1. Email Setup - Your First Port of Call

Customizing the Recipient Address:

Seek and you shall find the line: DEFAULT_RECIPIENT = “YourEmail Address.com”. It’s practically calling out for you!

Replace “YourEmail Address.com” with the target email address of your choosing. This will direct Spencer’s precious messages straight into their virtual arms.
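The script also defines a small `validate_email()` helper with a deliberately loose regex. A quick way to sanity-check your edit is to run that same check against your address; note that the shipped placeholder itself fails it. The `admin@example.com` address below is only an example value.

```python
import re

DEFAULT_RECIPIENT = "admin@example.com"  # example value; use your own address


def validate_email(email):
    # Same loose check as the script: text@text.text, not full RFC 5322 parsing
    return bool(re.match(r"[^@]+@[^@]+\.[^@]+", email))


print(validate_email(DEFAULT_RECIPIENT))        # True
print(validate_email("YourEmail Address.com"))  # False: there is no "@" at all
```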

2. Dive into The Configurations

Using Multi-Report:

The line use_multireport = True decides if you want the outputs in a multi-report (writing to a file) or sent as an email. Adjust as you see fit.

Command Line Extraordinaire:

If you invoke this script with command-line arguments, keep the USE_WITH_MULTI_REPORT line in mind: if you pass ‘multi_report’ as the first argument when you call the script, Spencer switches to multi-report mode. Neat, right?
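Under the hood the switch is a plain, case-sensitive string comparison on the first command-line argument, so anything other than exactly `multi_report` (including no argument at all) leaves Spencer in its normal mode. The sketch below isolates that check in a hypothetical `multi_report_mode()` function so it can be tried on its own; it mirrors the script’s `USE_WITH_MULTI_REPORT` line rather than replacing it.

```python
import sys


def multi_report_mode(argv):
    # Mirrors the script: take the first argument, or "False" when none is given
    first_arg = argv[1] if len(argv) > 1 else "False"
    # Exact, case-sensitive match: "Multi_Report" does not count
    return first_arg == "multi_report"


if __name__ == "__main__":
    print(multi_report_mode(sys.argv))
```

Saved as a standalone file and run with `multi_report` as its only argument, it prints `True`; run with no argument, it prints `False`.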

Subject Lines & The Emailing Game:

Various subject lines are predefined (ERROR_SUBJECT, SUCCESS_SUBJECT, and PREV_ERROR_SUBJECT). They include the hostname dynamically. You can modify these if you fancy a change in your notifications.
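Which subject goes out follows the rules from Shawn’s pitch above: new errors produce the ERROR subject, only previously seen errors produce PREVIOUS ERROR, and a clean log produces SUCCESS. Here is a minimal sketch of that selection; `pick_subject()` is a hypothetical helper, while the three subject strings are copied from the script.

```python
import socket

hostname = socket.gethostname()
ERROR_SUBJECT = f"[SPENCER] [ERROR] Error Found in Log for {hostname}"
SUCCESS_SUBJECT = f"[SPENCER] [SUCCESS] All is Good! for {hostname}"
PREV_ERROR_SUBJECT = f"[SPENCER] [PREVIOUS ERROR] No new Errors Found, Previous Errors Exist in Log for {hostname}"


def pick_subject(new_count, repeat_count):
    # Hypothetical helper: new errors take priority, then repeats, then success
    if new_count > 0:
        return ERROR_SUBJECT
    if repeat_count > 0:
        return PREV_ERROR_SUBJECT
    return SUCCESS_SUBJECT


print(pick_subject(0, 2))
```

If you edit a subject string, keeping `{hostname}` in it preserves the per-machine labelling when several systems mail the same inbox.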

File Locations for the Knowledge Seeker:

ERRORS_FILE & CONTENT_FILE: Spencer has designated spots to store previous errors and report content. Tread carefully and adjust if you have specific paths in mind.
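Previous errors are kept as a JSON object mapping each log entry to its timestamp, and a missing file simply means nothing has been seen yet. One thing worth knowing before you move it: `ERRORS_FILE` is the relative path `previous_errors.json`, so it is resolved against the directory Spencer is started from, which under cron is typically the user’s home directory rather than the script’s folder; an absolute path avoids that surprise. The sketch below reproduces the read/save round trip with the path passed in explicitly (a small change from the script, which uses the global) and writes to a throwaway temporary directory.

```python
import json
import os
import tempfile


def read_previous_errors(path):
    # A missing file means "no errors seen yet"
    if os.path.isfile(path):
        with open(path, "r") as fh:
            return json.load(fh)
    return {}


def save_errors(path, errors):
    # Overwrites the file with the full, updated mapping
    with open(path, "w") as fh:
        json.dump(errors, fh)


with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "previous_errors.json")
    print(read_previous_errors(demo_path))  # {}
    save_errors(demo_path, {"line A": "Aug 22 12:30:45"})
    print(read_previous_errors(demo_path))  # {'line A': 'Aug 22 12:30:45'}
```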

3. Patterns & Errors - The Heart and Soul

Behold! Predefined error patterns tailored for Linux (TrueNAS SCALE) & FreeBSD (TrueNAS CORE). Each pattern is categorized by severity (High, Moderate, Low). But hey, remember, it’s not the size of the error, but how you handle it!

Deciphering The Pattern:

For the brave-hearted, here’s a breakdown of the pattern anatomy:

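Main Term: the first element of a pattern tuple such as (“nfs:”, [“Stale file handle”], [], “nfs_stale”); here it is “nfs:”. A log line must contain it to be considered.

Positive Matches: the second element, a list of keywords or phrases Spencer seeks; when the list is not empty, at least one of them must also appear in the line.

Negative Matches: the third element, a list of keywords that, if present, will exclude the log line.

Pattern Key: the fourth element, a unique identifier for each pattern, used to look up its description and severity.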
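Put together, a pattern tuple works as a three-step filter on each log line. The function below is a sketch of that filter, not a copy of Spencer’s loop: it assumes case-insensitive comparison and treats an empty positive list as “the main term alone is enough”, which appears to be the intent behind patterns such as `ERROR_PATTERN_HARD_RESETTING_LINK`. `line_matches()` is a hypothetical name, and the log lines are made-up samples.

```python
def line_matches(line, pattern_tuple):
    """Return True if one log line satisfies a Spencer-style pattern tuple."""
    main_term, positives, negatives, _pattern_key = pattern_tuple
    text = line.lower()
    # 1. The main term must be present
    if main_term.lower() not in text:
        return False
    # 2. At least one positive match must be present (skipped when the list is empty)
    if positives and not any(p.lower() in text for p in positives):
        return False
    # 3. No negative match may be present
    return not any(n.lower() in text for n in negatives)


ERROR_PATTERN_CDB_ERRORS = ("cdb:", ["read", "write", "verify", "inquiry", "mode sense"], ["inquiry"], "cdb_errors")
ERROR_PATTERN_HARD_RESETTING_LINK = ("hard resetting link", [], [], "hard_resetting_link")

print(line_matches("sd 0:0:0:0: CDB: Read(10) 28 00", ERROR_PATTERN_CDB_ERRORS))     # True
print(line_matches("sd 0:0:0:0: CDB: Inquiry 12 00", ERROR_PATTERN_CDB_ERRORS))      # False
print(line_matches("ata3: hard resetting link", ERROR_PATTERN_HARD_RESETTING_LINK))  # True
```

The second call shows the negative list at work: “inquiry” is both a positive and a negative term in the CDB pattern, so the exclusion wins.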

Severity Levels & Descriptions:

Deep within, you’ll find SEVERITIES, which defines the three severity labels, and ERROR_SEVERITIES, which assigns each error key one of them based on its impact.

ERROR_DESCRIPTIONS gives a detailed breakdown of each error. So next time an error comes knocking, you’ll be ready with an answer.
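The script folds the severity label into each description at start-up, which is why a report line reads “Low Severity - …”. A compact sketch of that step follows, using one entry copied from the script; unlike the original loop it builds a new dictionary instead of rewriting `ERROR_DESCRIPTIONS` in place, and `with_severity()` is a hypothetical name.

```python
SEVERITIES = {"high": "High Severity", "moderate": "Moderate Severity", "low": "Low Severity"}
ERROR_DESCRIPTIONS = {
    "nfs_retrying": ("NFS Retrying", "TrueNAS is retrying a previously failed NFS operation, suggesting transient errors."),
}
ERROR_SEVERITIES = {"nfs_retrying": "low"}


def with_severity(descriptions, severities):
    # Unknown keys fall back to "high", as in the script
    combined = {}
    for key, (title, description) in descriptions.items():
        label = SEVERITIES[severities.get(key, "high")]
        combined[key] = (title, f"{label} - {description}")
    return combined


print(with_severity(ERROR_DESCRIPTIONS, ERROR_SEVERITIES)["nfs_retrying"][1])
# Low Severity - TrueNAS is retrying a previously failed NFS operation, suggesting transient errors.
```

Returning a new dictionary means running the step twice cannot stack two prefixes onto the same description.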

Pro Tips:

Avoid swimming in the deep end without your floaties! Changes to the error patterns and descriptions can have ripple effects. Test after modifications.

Keep backups! It’s not magic; it’s just common sense. Before making major changes, keep a copy of the original script.

Have fun! Coding is an art, and you’re the artist. Enjoy the process.
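One cheap test after editing is to check that the pattern list and the two lookup tables still agree, since a pattern key missing from `ERROR_DESCRIPTIONS`, or given a misspelled severity, only shows up when the script runs. The checker below is a hypothetical helper, not part of Spencer; the sample tables are trimmed and include a deliberate typo for illustration.

```python
def check_pattern_tables(patterns, descriptions, severities, severity_names):
    """Hypothetical self-check: return a list of problems, empty if all is well."""
    problems = []
    for _term, _positives, _negatives, key in patterns:
        if key not in descriptions:
            problems.append(f"{key}: missing from ERROR_DESCRIPTIONS")
        level = severities.get(key, "high")  # the script defaults to "high"
        if level not in severity_names:
            problems.append(f"{key}: unknown severity {level!r}")
    return problems


SEVERITIES = {"high": "High Severity", "moderate": "Moderate Severity", "low": "Low Severity"}
patterns = [
    ("ACPI Error", [], [], "acpi_error"),
    ("nfs:", ["access denied", "permission denied"], [], "nfs_access_denied"),
]
descriptions = {"acpi_error": ("ACPI Error", "...")}
severities = {"acpi_error": "moderat"}  # deliberate typo

for problem in check_pattern_tables(patterns, descriptions, severities, SEVERITIES):
    print(problem)
# acpi_error: unknown severity 'moderat'
# nfs_access_denied: missing from ERROR_DESCRIPTIONS
```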

Remember, every journey with code is a chance to learn, grow, and possibly break a few things. But isn’t that the fun of it all? So go on, adventurer, and make this script your own! And, as always, “Read the manual!”… but, ya know, with a pinch of panache.

Fare thee well!

- Gus

C’mon son, let’s run the script!

VERSION V2.0 BETA 1

Code:

# Spencer is a basic Python script which is designed to run in conjunction with "multi_report" by Joe Schmuck
# https://github.com/JoeSchmuck/Multi-Report
# Spencer checks for additional errors which may appear in your logs that you otherwise may be unaware of.
# The initial version of this script is versioned v1.1 and was written by ChatGPT and NickF
# -----------------------------------------------
# Version 2.0 08/22/23
# Refactored a lot of code.
# Dynamically determine whether run from MultiReport or if Spencer is called directly.
# Overall improvements to robustness of the script, the accuracy of its findings, and an increase in scope.
# New search patterns and customizability.
# -----------------------------------------------
# Version 1.3 08/13/23 - Contribution by JoeSchmuck merged
# Added a new feature for tracking and reporting previous errors differently than new errors.
# -----------------------------------------------
# This updated script will run normally and will run with Multi-Report.
# To use with Multi-Report, call the script with parameter 'multi_report'.
# When using the 'multi_report' switch, the email will not be sent and a few files will be created in /tmp/ space
# that Multi-Report will use and delete during cleanup.
# This script must be named "spencer.py" by default. Multi-Report would need to be updated if the script name changes.
# If Spencer finds no errors, Multi-Report will not issue a Spencer report. You can tell Spencer ran by observing the Standard Output.
# -----------------------------------------------
# Recommendations:
# 1-Record all the errors in a file for later comparison.
# 2-When displaying the error data, include both pre and post non-error lines of data. Sort of what you do now.
# 3-Do not include any line of data twice. So a list of error messages would start with the message before the error
#   and end with the non-error message after the string of errors.
# 4-When determining if a problem is new or old, conduct an exact match line by line to the file you saved in step 1.
#   Do not just count the number of errors because if 4 errors clear and 4 new errors are generated, the user will never know
#   about the new errors under the current setup. I considered creating a file to solve this but my Python skills are limited.
# 5-Remove all my "# (Joe)" comments, they are there to make it clear to you what lines I changed.
# 6-I want to leave this script in your hands because I do not have a SAS or iSCSI interface so I'm not the correct person to maintain this.
# -----------------------------------------------

# Importing necessary modules
from importlib.resources import contents
import json
from mailbox import linesep
import subprocess
import datetime
import socket
import sys
import platform
import logging
import re
import os

# Setup logging
logging.basicConfig(filename='spencer.log', level=logging.DEBUG, format='%(asctime)s - %(levelname)s - %(message)s')
logging.info('Spencer script started')

# Get the hostname of the current machine and store it in the variable 'hostname'
hostname = socket.gethostname()

# Configuration
use_multireport = True  # set this to True for multireport (writing to a file), otherwise False for email

# Check if a command line argument is provided; if yes, assign its value to 'USE_WITH_MULTI_REPORT',
# otherwise assign "False" to 'USE_WITH_MULTI_REPORT'
USE_WITH_MULTI_REPORT = sys.argv[1] if len(sys.argv) > 1 else "False"

# Set the default email recipient address (replace the "YourEmail Address.com" placeholder)
DEFAULT_RECIPIENT = "YourEmail Address.com"
to_address = DEFAULT_RECIPIENT

# Create strings for the error, success and previous-error email subjects, including the hostname
ERROR_SUBJECT = f"[SPENCER] [ERROR] Error Found in Log for {hostname}"
SUCCESS_SUBJECT = f"[SPENCER] [SUCCESS] All is Good! for {hostname}"
PREV_ERROR_SUBJECT = f"[SPENCER] [PREVIOUS ERROR] No new Errors Found, Previous Errors Exist in Log for {hostname}"

# Set the filename for the previous errors file to "previous_errors.json" (relative to the working directory)
ERRORS_FILE = "previous_errors.json"
# Set the filename for the content file to "/tmp/spencer_report.txt"
CONTENT_FILE = "/tmp/spencer_report.txt"

print(f"{datetime.datetime.now()} - Spencer V2.0 - BETA - 8/22/23.")
print(f"{datetime.datetime.now()} - Written by NickF with Contributions from JoeSchmuck")
print(f"{datetime.datetime.now()} - Spencer is checking log files for errors{' and pushing output to Multi-Report' if USE_WITH_MULTI_REPORT == 'multi_report' else ''}.")

# -----------------------------------------------
# Error Patterns List
# -----------------------------------------------
# Linux (TrueNAS SCALE)
# -----------------------------------------------

# iSCSI related patterns
# High Severity:
ERROR_PATTERN_ISCSI_TIMEOUT = ("iscsi", ["timeout", "timed out"], [], "iscsi_timeout")
ERROR_PATTERN_ISCSI_SESSION_ISSUES = ("iSCSI Initiator: session", ["login rejected", "recovery timed out"], [], "iscsi_session_issues")
# Moderate Severity:
ERROR_PATTERN_ISCSI_CONNECT_ERROR = ("iSCSI Initiator: connect to", ["failed"], [], "iscsi_connect_error")
ERROR_PATTERN_ISCSI_KERNEL_ISSUE = ("iSCSI Initiator: kernel reported", ["error"], [], "iscsi_kernel_issue")
# Low Severity:
ERROR_PATTERN_ISCSI_LOGOUT_ISSUE = ("iSCSI Initiator: received iferror", ["-"], [], "iscsi_logout_issue")

# NFS-related error patterns
# High Severity:
ERROR_PATTERN_NFS_SERVER_NOT_RESPONDING = ("nfs: server", ["not responding"], [], "nfs_server_not_responding")
ERROR_PATTERN_NFS_STALE = ("nfs:", ["Stale file handle"], [], "nfs_stale")
ERROR_PATTERN_NFS_TIMED_OUT = ("nfs:", ["timed out"], [], "nfs_timed_out")
# Moderate Severity:
ERROR_PATTERN_NFS_SERVER_OK = ("nfs: server", ["OK"], [], "nfs_server_ok")
ERROR_PATTERN_NFS_PORTMAP = ("portmap:", ["is not running"], [], "nfs_portmap_issue")
ERROR_PATTERN_NFS_RETRYING = ("nfs:", ["retrying"], [], "nfs_retrying")
ERROR_PATTERN_NFS_MOUNT_ISSUE = ("nfs: mount", ["failure", "failed", "unable", "error"], [], "nfs_mount_issue")
ERROR_PATTERN_NFS_ACCESS_DENIED = ("nfs:", ["access denied", "permission denied"], [], "nfs_access_denied")

# SCSI related patterns
# High Severity:
ERROR_PATTERN_CDB_ERRORS = ("cdb:", ["read", "write", "verify", "inquiry", "mode sense"], ["inquiry"], "cdb_errors")

# FreeBSD (TrueNAS CORE)
# -----------------------------------------------

# NFS-related patterns
# High Severity:
ERROR_PATTERN_FREEBSD_NFS_TIMED_OUT = ("NFS server", ["operation timed out"], [], "freebsd_nfs_timed_out")

# iSCSI-related patterns
# High Severity:
ERROR_PATTERN_CAM_STATUS_ISCSI_ISSUES = ("CAM status:", ["iSCSI Initiator Connection Reset", "iSCSI Target Suspended", "iSCSI Connection Lost"], [], "cam_status_iscsi_issues")
# Moderate Severity:
ERROR_PATTERN_CTL_DATAMOVE = ("ctl_datamove", ["aborted"], [], "ctl_datamove")
ERROR_PATTERN_ISCSI_GENERAL_ERRORS = ("iSCSI:", ["connection is dropped", "connection is now full feature phase", "task command timed out for connection", "connection is logged out", "received an illegal iSCSI PDU"], [], "iscsi_general_errors")

# Other SCSI patterns (not specific to iSCSI)
# High Severity:
ERROR_PATTERN_CAM_STATUS_ERROR = ("cam status:", ["scsi status error", "ata status error", "command timeout", "command aborted"], ["command retry", "command complete"], "cam_status_error")

# General error patterns (could be from both or either OS)
# -----------------------------------------------
# High Severity:
ERROR_PATTERN_SCSI_ERROR = ("scsi error", [], [], "scsi_error")
ERROR_PATTERN_HARD_RESETTING_LINK = ("hard resetting link", [], [], "hard_resetting_link")
ERROR_PATTERN_ATA_BUS_ERROR = ("ata bus error", [], [], "ata_bus_error")
# Moderate Severity:
ERROR_PATTERN_FAILED_COMMAND_READ = ("failed command: read FPDMA queued", [], [], "failed_command_read")
ERROR_PATTERN_FAILED_COMMAND_WRITE = ("failed command: write FPDMA queued", [], [], "failed_command_write")
ERROR_PATTERN_EXCEPTION_EMASK = ("exception Emask", [], [], "exception_emask")
# Low Severity:
ERROR_PATTERN_ACPI_ERROR = ("ACPI Error", [], [], "acpi_error")
ERROR_PATTERN_ACPI_EXCEPTION = ("ACPI Exception", [], [], "acpi_exception")
ERROR_PATTERN_ACPI_WARNING = ("ACPI Warning", [], [], "acpi_warning")

# -----------------------------------------------
# END Error Patterns List
# -----------------------------------------------

# Start Severities List
# -----------------------------------------------
SEVERITIES = {
    "high": "High Severity",
    "moderate": "Moderate Severity",
    "low": "Low Severity"
}

ERROR_DESCRIPTIONS = {
    'hard_resetting_link': ('Hard Resetting Link', 'Indicates that a storage communication link on the TrueNAS system was forcibly reset, which may affect data transfer or access.'),
    'failed_command_read': ('Failed Command Read', 'TrueNAS encountered a failure when trying to read data, suggesting potential disk or communication issues.'),
    'failed_command_write': ('Failed Command Write', 'TrueNAS encountered a failure during a write operation, which might signal disk problems or connection disruptions.'),
    'ata_bus_error': ('ATA Bus Error', 'Detected on the ATA interface of TrueNAS, suggesting possible issues with the attached storage devices.'),
    'exception_emask': ('Exception Emask', 'For TrueNAS SCALE users, this points to exceptions in the system, potentially related to disk operations or kernel disruptions.'),
    'acpi_error': ('ACPI Error', 'For TrueNAS SCALE, indicates hardware or power management issues, which might impact system performance.'),
    'acpi_exception': ('ACPI Exception', 'For TrueNAS SCALE, highlights unexpected hardware or power configurations that should be checked.'),
    'acpi_warning': ('ACPI Warning', 'For TrueNAS SCALE, points to minor power or hardware concerns that, although not critical, should be reviewed.'),
    'ctl_datamove': ('CTL Datamove', 'For TrueNAS CORE, signifies issues related to moving data in the CAM Target Layer (CTL), which might affect iSCSI operations.'),
    'cam_status_error': ('CAM Status Error', 'For TrueNAS CORE, this suggests disruptions in accessing storage devices at the CAM layer.'),
    'scsi_error': ('SCSI Error', 'General SCSI issues on TrueNAS, pointing to problems with connected SCSI devices or the communication bus.'),
    'cdb_errors': ('CDB Errors', 'Errors tied to Command Descriptor Blocks in TrueNAS, hinting at potential SCSI command sequence disruptions.'),
    'iscsi_timeout': ('iSCSI Timeout', 'A lapse in iSCSI communication on TrueNAS, potentially because of target unresponsiveness or network issues.'),
    'iscsi_connect_error': ('iSCSI Connect Error', 'TrueNAS faced problems establishing an iSCSI session or connection, suggesting network or target issues.'),
    'iscsi_session_issues': ('iSCSI Session Issues', 'Challenges related to managing iSCSI sessions on TrueNAS.'),
    'iscsi_kernel_issue': ('iSCSI Kernel Issue', 'For TrueNAS SCALE, signifies disruptions in iSCSI operations at the Linux kernel level.'),
    'iscsi_logout_issue': ('iSCSI Logout Issue', 'TrueNAS encountered errors when logging out of an iSCSI session, hinting at session or target problems.'),
    'cam_status_iscsi_issues': ('CAM Status iSCSI Issues', 'For TrueNAS CORE, this indicates iSCSI issues at the CAM layer, affecting iSCSI operations.'),
    'iscsi_general_errors': ('iSCSI General Errors', 'Broad category for other iSCSI disruptions on TrueNAS.'),
    'nfs_server_not_responding': ('NFS Server Not Responding', 'The NFS service on TrueNAS is not responding, hinting at service downtimes or network issues.'),
    'nfs_server_ok': ('NFS Server OK', 'The NFS service on TrueNAS, previously unresponsive, has resumed normal operations.'),
    'nfs_stale': ('NFS Stale', 'Points to outdated NFS file handles on TrueNAS, possibly affecting file or directory access.'),
    'nfs_portmap_issue': ('NFS Portmap Issue', 'For TrueNAS CORE, suggests issues with the NFS port mapper service, potentially affecting client connections.'),
    'nfs_timed_out': ('NFS Timed Out', 'NFS operations on TrueNAS exceeded the allowed time, hinting at server-side or client-side issues.'),
    'nfs_retrying': ('NFS Retrying', 'TrueNAS is retrying a previously failed NFS operation, suggesting transient errors.'),
    'nfs_mount_issue': ('NFS Mount Issue', 'Problems encountered when mounting NFS shares on TrueNAS, pointing to configuration or network issues.'),
    'freebsd_nfs_timed_out': ('FreeBSD NFS Timed Out', 'For TrueNAS CORE, an NFS operation took longer than expected, suggesting performance or connection concerns.'),
    'nfs_access_denied': ('NFS Access Denied', 'An NFS request on TrueNAS was refused with an access or permission denied error, pointing to share permissions or export configuration.')
}

ERROR_SEVERITIES = {
    'hard_resetting_link': 'high',
    'failed_command_read': 'high',
    'failed_command_write': 'high',
    'ata_bus_error': 'moderate',
    'exception_emask': 'high',
    'acpi_error': 'moderate',
    'acpi_exception': 'moderate',
    'acpi_warning': 'low',
    'ctl_datamove': 'moderate',
    'cam_status_error': 'high',
    'scsi_error': 'high',
    'cdb_errors': 'moderate',
    'iscsi_timeout': 'moderate',
    'iscsi_connect_error': 'high',
    'iscsi_session_issues': 'moderate',
    'iscsi_kernel_issue': 'high',
    'iscsi_logout_issue': 'moderate',
    'cam_status_iscsi_issues': 'high',
    'iscsi_general_errors': 'moderate',
    'nfs_server_not_responding': 'high',
    'nfs_server_ok': 'low',
    'nfs_stale': 'moderate',
    'nfs_portmap_issue': 'moderate',
    'nfs_timed_out': 'high',
    'nfs_retrying': 'low',
    'nfs_mount_issue': 'moderate',
    'freebsd_nfs_timed_out': 'high',
    'nfs_access_denied': 'moderate'
}

# Prefix each description with its severity label
for key, value in ERROR_DESCRIPTIONS.items():
    title, description = value
    severity_key = ERROR_SEVERITIES.get(key, 'high')  # defaulting to high if not specified
    new_description = f"{SEVERITIES[severity_key]} - {description}"
    ERROR_DESCRIPTIONS[key] = (title, new_description)

# -----------------------------------------------
# End Severities List
# -----------------------------------------------

def write_content_status(content):
    # Opening with 'w' overwrites report.txt on every call; change 'w' to 'a' to append instead
    with open('report.txt', 'w') as file:
        file.write(content + '\n\n')  # Writing content followed by two newlines


# Email Validation Function
def validate_email(email):
    # Regex for a simple email validation
    email_regex = r"[^@]+@[^@]+\.[^@]+"
    return bool(re.match(email_regex, email))

def safe_read_previous_errors():
    try:
        # Call the function read_previous_errors() to retrieve previous errors
        return read_previous_errors()
    except Exception as e:
        # If an exception occurs, log the error message and return an empty dictionary
        logging.error(f"Error reading previous errors: {e}")
        return {}


def read_previous_errors():
    if os.path.isfile(ERRORS_FILE):
        with open(ERRORS_FILE, "r") as file:
            return json.load(file)
    return {}

def get_os_version():
    try:
        # Read /etc/os-release directly instead of shelling out to "cat"
        with open("/etc/os-release", "r") as os_release:
            os_version_output = os_release.read().strip()

        # Extract the PRETTY_NAME
        pretty_name = None
        for line in os_version_output.split('\n'):
            if line.startswith("PRETTY_NAME="):
                pretty_name = line.split('=', 1)[1].replace('"', '').strip()

        # Initialize os_version as None
        os_version = None
        # Read the version number from /etc/version file
        try:
            with open("/etc/version", "r") as version_file:
                os_version = version_file.read().strip()
        except FileNotFoundError:
            logging.warning("Version file '/etc/version' not found.")

        # Add the appropriate suffix
        if pretty_name:
            if "Debian GNU/Linux" in pretty_name:
                pretty_name += " (TrueNAS SCALE)"
            elif "FreeBSD" in pretty_name:
                pretty_name += " (TrueNAS CORE)"
            # Do not hard-code "SCALE" here; the suffix above already names the edition
            output_str = f"TrueNAS, Version: {os_version} - {pretty_name}"
            return output_str
        else:
            logging.error("Failed to extract PRETTY_NAME from os-release file.")
            return "Unknown OS"
    except OSError as ose:
        # If the os-release file cannot be read, log the error message
        logging.error(f"Error reading OS release information: {ose}")
    except Exception as e:
        # If there is any other unexpected error, log the error message
        logging.error(f"Unexpected error when getting OS version: {e}")

    # If any error occurs, return "Unknown OS"
    return "Unknown OS"

def extract_pretty_name(os_version):
    # Extracting the PRETTY_NAME value from os_version
    for line in os_version.split('\n'):
        if "PRETTY_NAME" in line:
            pretty_name = line.split('=')[1].replace('"', '').strip()
            return pretty_name
    return None

def search_log_file(os_version):
    matches = []
    repeat_errors = []
    previous_errors = safe_read_previous_errors()  # Initializing previous_errors

    # One counter per pattern key; every key used in error_patterns needs an entry here
    match_counts = {k: 0 for k in [
        "cdb_errors",
        "iscsi_timeout",
        "iscsi_connect_error",
        "iscsi_session_issues",
        "iscsi_kernel_issue",
        "iscsi_logout_issue",
        "ctl_datamove",
        "cam_status_error",
        "cam_status_iscsi_issues",
        "iscsi_general_errors",
        "scsi_error",
        "hard_resetting_link",
        "failed_command_read",
        "failed_command_write",
        "ata_bus_error",
        "exception_emask",
        "acpi_error",
        "acpi_exception",
        "acpi_warning",
        "nfs_server_not_responding",
        "nfs_server_ok",
        "nfs_stale",
        "nfs_portmap_issue",
        "nfs_timed_out",
        "nfs_retrying",
        "nfs_mount_issue",
        "freebsd_nfs_timed_out",
        "nfs_access_denied"
    ]}

    error_patterns = [
        ERROR_PATTERN_CDB_ERRORS,
        ERROR_PATTERN_ISCSI_TIMEOUT,
        ERROR_PATTERN_ISCSI_CONNECT_ERROR,
        ERROR_PATTERN_ISCSI_SESSION_ISSUES,
        ERROR_PATTERN_ISCSI_KERNEL_ISSUE,
        ERROR_PATTERN_ISCSI_LOGOUT_ISSUE,
        ERROR_PATTERN_CTL_DATAMOVE,
        ERROR_PATTERN_CAM_STATUS_ERROR,
        ERROR_PATTERN_CAM_STATUS_ISCSI_ISSUES,
        ERROR_PATTERN_ISCSI_GENERAL_ERRORS,
        ERROR_PATTERN_SCSI_ERROR,
        ERROR_PATTERN_HARD_RESETTING_LINK,
        ERROR_PATTERN_FAILED_COMMAND_READ,
        ERROR_PATTERN_FAILED_COMMAND_WRITE,
        ERROR_PATTERN_ATA_BUS_ERROR,
        ERROR_PATTERN_EXCEPTION_EMASK,
        ERROR_PATTERN_ACPI_ERROR,
        ERROR_PATTERN_ACPI_EXCEPTION,
        ERROR_PATTERN_ACPI_WARNING,
        ERROR_PATTERN_NFS_SERVER_NOT_RESPONDING,
        ERROR_PATTERN_NFS_SERVER_OK,
        ERROR_PATTERN_NFS_STALE,
        ERROR_PATTERN_NFS_PORTMAP,
        ERROR_PATTERN_NFS_TIMED_OUT,
        ERROR_PATTERN_FREEBSD_NFS_TIMED_OUT,
        ERROR_PATTERN_NFS_RETRYING,
        ERROR_PATTERN_NFS_MOUNT_ISSUE,
        ERROR_PATTERN_NFS_ACCESS_DENIED
    ]

    # Read the whole log up front so context lines can be sliced without skipping any line during matching
    with open('/var/log/messages', 'r', errors='replace') as file:
        lines = file.readlines()

    for i, line in enumerate(lines):
        line_lower = line.lower()  # Convert the line to lowercase for case-insensitive matching
        for pattern, errors, ignored, count_key in error_patterns:
            # Lowercase the pattern terms too, otherwise mixed-case terms such as "ACPI Error" never match;
            # an empty 'errors' list means the main term alone is enough
            if (pattern.lower() in line_lower
                    and (not errors or any(error.lower() in line_lower for error in errors))
                    and not any(ignore.lower() in line_lower for ignore in ignored)):
                # Keep the matching line together with the two lines that follow it
                match = "".join(lines[i:i + 3]).strip()
                matches.append(match)
                # Increment the respective counter in the match_counts dictionary
                match_counts[count_key] += 1

    for match in matches:
        # Assuming the timestamp is at the beginning of each log entry
        timestamp = match.split()[0]
        if match in previous_errors and previous_errors[match] == timestamp:
            repeat_errors.append(match)
        else:
            previous_errors[match] = timestamp  # Update or add the new error with its timestamp

    save_errors(previous_errors)

    # Return the 'matches' list, the 'match_counts' dictionary and the 'repeat_errors' list as the result
    return matches, match_counts, repeat_errors

def save_errors(errors):

with open(ERRORS_FILE, 'w') as file:

json.dump(errors, file)

# Return the 'matches' list and the 'match_counts' dictionary as the result

def get_error_info(error_key):
    # Fetching the error title and combined description (which includes severity)
    error_title, combined_description = ERROR_DESCRIPTIONS.get(error_key, ("Unknown Error", "No description available"))
    # Separating severity from description. We assume the format is "Severity - Description"
    parts = combined_description.split(' - ', 1)
    if len(parts) == 2:
        severity, description = parts
    else:
        # Handle edge case where the split didn't work (maybe the description doesn't include severity)
        severity = "Unknown Severity"
        description = combined_description
    return error_title, description, severity

def generate_table(match_counts, os_version, log_entries):
    previous_errors = safe_read_previous_errors()
    separator = "#" * 50
    section_separator = "=" * 50
    table = f"Spencer Results\n{separator}\nVersion: {os_version}\n\n{separator}\n\n"

    # match_counts is pre-seeded with every tracked error type (most at 0), so
    # "not match_counts" is never true; keep only the types actually found.
    found_counts = {k: v for k, v in match_counts.items() if v > 0}

    # Distinguishing between new errors and previous errors based on the JSON file.
    prev_errors_from_logs = {k: v for k, v in found_counts.items() if k in previous_errors and previous_errors[k] >= v}
    missing_prev_errors = {k: v for k, v in previous_errors.items() if k not in match_counts}

    if not found_counts and (prev_errors_from_logs or missing_prev_errors):
        # No new errors, but there are previous errors in the log
        table += f"{PREV_ERROR_SUBJECT}\n\n{separator}\n\n"
    elif not found_counts:
        table += f"{SUCCESS_SUBJECT}\n\n{separator}\n\n"
        return table  # We can return early as no further information is needed for the success case.
    else:
        table += f"{ERROR_SUBJECT}\n\n{separator}\n\n"

    def generate_error_section(errors_data, section_title):
        section_output = f"{section_title}\n{section_separator}\n\n"
        for error_key in errors_data.keys():  # Loop over the keys of the errors_data dictionary
            error_count = errors_data.get(error_key, 0)  # Use the error count or default to 0
            error_name, error_description, severity = get_error_info(error_key)
            section_output += f"{error_name} {'-'*(40-len(error_name))} [{error_count}] ----- [{severity}]\n"
            section_output += f"{error_description}\n\n"
        section_output += f"\n{section_separator}\n\n"
        return section_output

    table += generate_error_section(found_counts, "=====NEWLY FOUND ERRORS===========================")
    table += generate_error_section({**prev_errors_from_logs, **missing_prev_errors}, "=====Previously Found Errors=================")
    table += f"\n{separator}\nCorresponding Log Entries with Timestamps:\n{separator}\n"
    for entry in log_entries:
        table += entry + "\n"
    save_errors(match_counts)
    return table

def safe_send_email(content, to_address, subject):
    # Check if the email address is valid
    if not validate_email(to_address):
        print(f"{datetime.datetime.now()} - Failed to send email: Invalid email address '{to_address}'")
        logging.error(f"Invalid email address: {to_address}")
        return
    try:
        # Attempt to send the email
        send_email(content, to_address, subject)
    except Exception as e:
        print(f"{datetime.datetime.now()} - Failed to send email due to an exception: {e}")
        logging.error(f"Error sending email: {e}")

def send_email(content, to_address, subject):
    email_message = f"From: {DEFAULT_RECIPIENT}\nTo: {to_address}\nSubject: {subject}\n\n{content}"
    sendmail_command = ["sendmail", "-t", "-oi"]
    with subprocess.Popen(sendmail_command, stdin=subprocess.PIPE) as process:
        process.communicate(email_message.encode())
    # communicate() waits for sendmail to exit, so the return code is set here;
    # raising lets safe_send_email() report the failure instead of claiming success
    if process.returncode != 0:
        raise RuntimeError(f"sendmail exited with status {process.returncode}")
    print(f"{datetime.datetime.now()} - Email was sent successfully!")

def write_to_file(file, content):
    # Writing the content to a file
    with open(file, "w") as f:
        f.write(content)

# Read previously found errors from a file
def read_previous_errors():
    # Loading previous errors from a JSON file if it exists, otherwise returning an empty dictionary
    if os.path.isfile(ERRORS_FILE):
        with open(ERRORS_FILE, 'r') as f:
            return json.load(f)
    else:
        return {}

os_version = get_os_version()
print(f"{datetime.datetime.now()} - Operating System version determined: {os_version}")
matches, match_counts, repeat_errors = search_log_file(os_version)
print(f"{datetime.datetime.now()} - Found {len(matches)} new matching errors in the logs")
previous_errors = read_previous_errors()
print(f"{datetime.datetime.now()} - Loaded {len(previous_errors)} previous errors from the error file")
write_to_file(ERRORS_FILE, json.dumps(match_counts))
print(f"{datetime.datetime.now()} - Wrote the current error counts to the error file")
table_content = generate_table(match_counts, os_version, matches)
print(f"{datetime.datetime.now()} - Generated the error table for the email content")

# We have removed the error_message portion since that has now been incorporated into generate_table.
content = table_content
new_errors_exist = matches and any(count > previous_errors.get(error, 0) for error, count in match_counts.items())

# Determine the subject of the email based on the presence of new errors or previous errors
if new_errors_exist:
    subject = ERROR_SUBJECT
elif any(previous_errors.values()):
    subject = PREV_ERROR_SUBJECT
else:
    subject = SUCCESS_SUBJECT
print(f"{datetime.datetime.now()} - Selected email subject: {subject}")

# Print informational messages
if not matches:
    print(f"{datetime.datetime.now()} - No matching errors found in the logs.")
if not any(previous_errors.values()):
    print(f"{datetime.datetime.now()} - No previous errors exist.")

# Handle email sending or file writing based on USE_WITH_MULTI_REPORT
if USE_WITH_MULTI_REPORT == "multi_report":
    print(f"{datetime.datetime.now()} - Email sending is skipped due to USE_WITH_MULTI_REPORT setting.")
    with open(CONTENT_FILE, 'w') as f:
        selected_message = 'New Error Messages\n' if new_errors_exist else 'Previous Errors\n' if any(previous_errors.values()) else 'No Errors\n'
        f.write(f"{selected_message}{content}")
else:
    safe_send_email(content, to_address, subject)
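In v2.0, `search_log_file()` is table-driven: each `ERROR_PATTERN_*` entry is unpacked as a `(pattern, errors, ignored, count_key)` tuple, and a line counts as a hit when it contains the pattern and at least one of the error strings, but none of the ignored strings. The sketch below pulls that rule out on its own so it can be tried against a few sample lines. The two pattern tuples and the helper name `scan_lines` are hypothetical. The real `ERROR_PATTERN_*` values are defined elsewhere in the script and may differ.

```python
from collections import Counter

# Hypothetical pattern tuples in the (pattern, errors, ignored, count_key) shape
# that search_log_file() unpacks; the real ERROR_PATTERN_* values may differ.
EXAMPLE_PATTERNS = [
    ("cdb:", ["read", "write"], ["inquiry"], "cdb_errors"),
    ("iscsi", ["timeout", "timed out"], [], "iscsi_timeout"),
]


def scan_lines(lines, patterns):
    """Return (matches, counts) for every line that satisfies a pattern tuple."""
    matches = []
    counts = Counter()
    for i, line in enumerate(lines):
        line_lower = line.lower()  # case-insensitive matching, as in Spencer
        for pattern, errors, ignored, count_key in patterns:
            if (pattern in line_lower
                    and any(error in line_lower for error in errors)
                    and not any(ignore in line_lower for ignore in ignored)):
                # Slice out the previous, current, and next line; slicing does
                # not consume input, so the following lines are still scanned
                matches.append("".join(lines[max(0, i - 1):i + 2]).strip())
                counts[count_key] += 1
    return matches, counts
```

A `collections.Counter` returns 0 for unseen keys, so a newly added pattern tuple cannot raise `KeyError` for a count key that was never pre-registered. The trade-off is that error types with no hits are missing from the result instead of appearing with a count of 0.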

HERE IS VERSION V1.3

Code:

# Spencer is a basic Python script which is designed to run in conjunction with "multi_report" by Joe Schmuck
# GitHub - JoeSchmuck/Multi-Report: FreeNAS/TrueNAS Script for emailed drive information.
# Spencer checks for additional errors which may appear in your logs that you otherwise may be unaware of.
# The initial version of this script is versioned v1.1 and was written by ChatGPT and NickF
#
# Version 1.3 08/13/23
# Added a new feature for tracking and reporting previous errors differently than new errors.
# This updated script will run normally and will run with Multi-Report.
# To use with Multi-Report, call the script with parameter 'multi_report'.
# When using the 'multi_report' switch, the email will not be sent and a few files will be created in /tmp/ space
# that Multi-Report will use and delete during cleanup.
# This script must be named "spencer.py" by default. Multi-Report would need to be updated if the script name changes.
# If Spencer finds no errors, Multi-Report will not issue a Spencer report. You can tell Spencer ran by observing the Standard Output.
#
# Recommendations:
# 1-Record all the errors in a file for later comparison.
# 2-When displaying the error data, include both pre and post non-error lines of data. Sort of what you do now.
# 3-Do not include any line of data twice. So a list of error messages would start with the message before the error
#   and end with the non-error message after the string of errors.
# 4-When determining if a problem is new or old, conduct an exact match line by line to the file you saved in step 1.
#   Do not just count the number of errors, because if 4 errors clear and 4 new errors are generated, the user will never know
#   about the new errors under the current setup. I considered creating a file to solve this but my Python skills are limited.
# 5-Remove all my "# (Joe)" comments; they are there to make it clear to you what lines I changed.
# 6-I want to leave this script in your hands because I do not have a SAS or iSCSI interface, so I'm not the correct person to maintain this.

# Importing necessary modules
# (The unused "from importlib.resources import contents" and "from sre_constants import SUCCESS"
# imports were dropped; sre_constants is deprecated in newer Python versions.)
import os
import json
import subprocess
import datetime
import socket
import sys  # (Joe)
import platform  # (Joe)

# The hostname of the system where the script is running is retrieved using socket.gethostname()
hostname = socket.gethostname()

# Email related constants: the default recipient and the subject of the email for error and success cases
USE_WITH_MULTI_REPORT = "False"  # (Joe)
if len(sys.argv) > 1: USE_WITH_MULTI_REPORT = sys.argv[1]  # (Joe)
DEFAULT_RECIPIENT = "YourEmail Address.com"  # Used only when NOT using Multi-Report to run Spencer.
ERROR_SUBJECT = f"[SPENCER] [ERROR] Error Found in Log for {hostname}"
SUCCESS_SUBJECT = f"[SPENCER] [SUCCESS] All is Good! for {hostname}"
PREV_ERROR_SUBJECT = f"[SPENCER] [PREVIOUS ERROR] No new Errors Found, Previous Errors Exist in Log for {hostname}"

# The file name of the file where previously found errors will be stored
ERRORS_FILE = "previous_errors.json"
CONTENT_FILE = "/tmp/spencer_report.txt"  # (Joe) This file is attached to the Multi-Report email.

if USE_WITH_MULTI_REPORT != "multi_report": print(f"{datetime.datetime.now()} - Spencer is checking log files for errors.")
if USE_WITH_MULTI_REPORT == "multi_report": print(f"{datetime.datetime.now()} - Spencer is checking log files for errors and pushing output to Multi-Report.")
print(platform.system())  # (Joe) This will tell you if it's Linux or FreeBSD.

# This function retrieves the version of the operating system by executing the command "cat /etc/version" in a subprocess.
def get_os_version():
    version_command = ["cat", "/etc/version"]
    version = subprocess.run(version_command, capture_output=True, text=True).stdout.strip()
    return version

# This function searches the log file for specified errors.
# It checks for both the SCALE and CORE error patterns in a single pass; os_version is no longer used to choose between them.
def search_log_file(os_version):
    matches = []  # List to store the matched lines
    match_counts = {  # Dictionary to store the match counts
        "ctl_datamove": 0,
        "cdb_errors": 0,
        "cam_status_error": 0,
        "iscsi_timeout": 0
    }
    # Define the possible error messages for each type of error
    cdb_errors = ["read", "write", "verify", "inquiry", "mode sense"]
    cam_errors = ["scsi status error", "ata status error", "command timeout", "command aborted"]
    cam_ignored = ["command retry", "command complete"]
    cdb_ignored = ["inquiry"]
    # Open the log file
    with open("/var/log/messages", "r") as file:
        lines = file.readlines()
    # Iterate over each line in the log file
    for i, line in enumerate(lines):
        line_lower = line.lower()  # Convert line to lowercase for case-insensitive matching
        # The version checks were not needed and were causing a problem with CORE recognition, so I removed them. # (Joe)
        if "cdb:" in line_lower and any(error in line_lower for error in cdb_errors) and not any(ignore in line_lower for ignore in cdb_ignored):
            match = lines[max(0, i - 1) : i + 2]  # Capture previous, current, and next line
            matches.append("".join(match).strip())  # Append the matching lines to the matches list
            match_counts["cdb_errors"] += 1  # Increase the count for cdb_errors
        elif "iscsi" in line_lower and ("timeout" in line_lower or "timed out" in line_lower):
            match = lines[max(0, i - 1) : i + 2]  # Capture previous, current, and next line
            matches.append("".join(match).strip())  # Append the matching lines to the matches list
            match_counts["iscsi_timeout"] += 1  # Increase the count for iscsi_timeout
        # CORE-specific patterns: ctl_datamove and cam_status_error
        elif "ctl_datamove" in line_lower and "aborted" in line_lower:
            match = lines[max(0, i - 1) : i + 2]  # Capture previous, current, and next line
            matches.append("".join(match).strip())  # Append the matching lines to the matches list
            match_counts["ctl_datamove"] += 1  # Increase the count for ctl_datamove
        elif "cam status:" in line_lower and any(error in line_lower for error in cam_errors) and not any(ignore in line_lower for ignore in cam_ignored):
            match = lines[max(0, i - 1) : i + 2]  # Capture previous, current, and next line
            matches.append("".join(match).strip())  # Append the matching lines to the matches list
            match_counts["cam_status_error"] += 1  # Increase the count for cam_status_error
    # Return all matches and their counts
    return matches, match_counts

# This function generates a plain text table with the counts of matches, only if there are any errors for the tracked message. # (Joe)
def generate_table(match_counts, os_version, previous_errors):
    table = "Spencer Results\n"
    if match_counts['cam_status_error'] > 0 or match_counts['ctl_datamove'] > 0 or match_counts['cdb_errors'] > 0 or match_counts['iscsi_timeout'] > 0:
        if platform.system() == "Linux":
            table += "TrueNAS SCALE\n"
        else:
            table += "TrueNAS CORE\n"
        table += "\n"
        table += "Error Type\tNew Errors\tExisting Errors\n"
        if match_counts['cam_status_error'] > 0:  # (Joe)
            table += f"cam_status_error\t{match_counts['cam_status_error'] - previous_errors.get('cam_status_error', 0)}\t{previous_errors.get('cam_status_error', 0)}\n"  # (Joe)
        if match_counts['ctl_datamove'] > 0:  # (Joe)
            table += f"ctl_datamove\t\t{match_counts['ctl_datamove'] - previous_errors.get('ctl_datamove', 0)}\t{previous_errors.get('ctl_datamove', 0)}\n"  # (Joe) Added tab to line things up
        if match_counts['cdb_errors'] > 0:  # (Joe)
            table += f"cdb_errors\t\t{match_counts['cdb_errors'] - previous_errors.get('cdb_errors', 0)}\t{previous_errors.get('cdb_errors', 0)}\n"  # (Joe)
        if match_counts['iscsi_timeout'] > 0:  # (Joe)
            table += f"iscsi_timeout\t\t{match_counts['iscsi_timeout'] - previous_errors.get('iscsi_timeout', 0)}\t{previous_errors.get('iscsi_timeout', 0)}\n"  # (Joe)
    return table

# This function sends an email using the sendmail command.
# The email contains the table of matches and the list of matching lines from the log file.
def send_email(content, to_address, subject):
    email_message = f"From: {DEFAULT_RECIPIENT}\nTo: {to_address}\nSubject: {subject}\n\n{content}"
    sendmail_command = ["sendmail", "-t", "-oi"]
    with subprocess.Popen(sendmail_command, stdin=subprocess.PIPE) as process:
        process.communicate(email_message.encode())
    print(f"{datetime.datetime.now()} - Spencer sent an email successfully!")

# Read previously found errors from a file
def read_previous_errors():
    if os.path.isfile(ERRORS_FILE):
        with open(ERRORS_FILE, "r") as file:
            return json.load(file)
    return {}

# Write the current errors to a file
def write_current_errors(errors):
    with open(ERRORS_FILE, "w") as file:
        json.dump(errors, file)

def write_content_status(content):  # (Joe)
    with open(CONTENT_FILE, "w") as file:  # (Joe)
        file.write(content)  # (Joe)

# The recipient email address is set to the default recipient.
to_address = DEFAULT_RECIPIENT
# The OS version is retrieved.
os_version = get_os_version()
# The log file is searched for matches and match counts.
matches, match_counts = search_log_file(os_version)
# Read the previous errors from a file
previous_errors = read_previous_errors()
# Write the current errors to a file
write_current_errors(match_counts)
# A plain text table with match counts is generated.
table_content = generate_table(match_counts, os_version, previous_errors)

# If matches are found, they are appended to the table content.
# If not, a message indicating that no matches were found is appended.
if matches:
    content = "{}\n\n{}".format(table_content, '\n\n'.join(matches))
elif previous_errors and any(previous_errors.values()):
    content = f"{table_content}\n\nNo new occurrences have been seen, but previous errors still exist in the logs."
else:
    content = f"{table_content}\n\nNo matching lines found in the log file."

# An email is sent with the appropriate subject depending on whether matches were found.
# A file is written for multi_report use.
if matches:
    if any(count > previous_errors.get(error, 0) for error, count in match_counts.items()):
        if USE_WITH_MULTI_REPORT != "multi_report": send_email(content, to_address, ERROR_SUBJECT)
        write_content_status("New Error Messages\n" + content)  # (Joe)
    else:
        if USE_WITH_MULTI_REPORT != "multi_report": send_email(content, to_address, PREV_ERROR_SUBJECT if previous_errors else SUCCESS_SUBJECT)
        write_content_status("Previous Errors\n" + content if previous_errors else "Good")  # (Joe)
else:
    if previous_errors and any(previous_errors.values()):
        if USE_WITH_MULTI_REPORT != "multi_report": send_email(content, to_address, PREV_ERROR_SUBJECT)
        write_content_status("Previous Errors\n" + content)  # (Joe)
    else:
        if USE_WITH_MULTI_REPORT != "multi_report": send_email(content, to_address, SUCCESS_SUBJECT)
        write_content_status("No Errors\n" + content)  # (Joe)
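Recommendation 4 in the v1.3 header suggests comparing the exact error lines against the saved file instead of comparing counts. A count comparison misses the case where four errors clear and four new ones appear, because the count stays the same. Below is a minimal sketch of that idea. It assumes a hypothetical JSON file that holds a plain list of the matched log lines. The function names and the file format are illustrative only and are not part of either Spencer version above.

```python
import json
import os


def load_seen_lines(path):
    """Return the set of error lines recorded on the previous run (empty if none)."""
    if not os.path.isfile(path):
        return set()
    with open(path, "r") as f:
        return set(json.load(f))


def split_new_and_old(current_lines, seen_lines):
    """Partition this run's error lines into (new, previously_seen), keeping order."""
    new = [line for line in current_lines if line not in seen_lines]
    old = [line for line in current_lines if line in seen_lines]
    return new, old


def save_seen_lines(path, current_lines):
    """Record the exact error lines from this run for the next comparison."""
    with open(path, "w") as f:
        json.dump(sorted(set(current_lines)), f)
```

Suppose the saved file holds four lines that have since cleared, and the current run finds four different lines. The counts match (4 and 4), but `split_new_and_old()` still returns all four current lines as new. That is the case a count comparison misses.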

HERE IS VERSION V1.2

Code:

# Spencer is a basic Python script which is designed to run in conjunction with "multi_report" by Joe Schmuck
# GitHub - JoeSchmuck/Multi-Report: FreeNAS/TrueNAS Script for emailed drive information.
# Spencer checks for additional errors which may appear in your logs that you otherwise may be unaware of.
# The initial version of this script is versioned v1.1 and was written by ChatGPT and NickF
#
# Version 1.2 07/29/23
# Added a new feature for tracking and reporting previous errors differently than new errors.

# Importing necessary modules
import os
import json
import subprocess
import datetime
import socket