No, in that case, don’t touch it.

For those of you concerned about the zvol VM disk sync issue, I just go a notification that they actually fixed this a week ago and the fix will be in the release. NAS-134891 / 25.04.0 / linux: zvols: correctly detect flush requests (#17131) by ixhamza · Pull Request #281 · truenas/zfs · GitHub

You mean they removed the built-in ability to do this?

That’s so awesome. ![]()

Maybe you’re supposed to enabled and initiate the “Apps” subsystem, and then install an app that allows those SMART features again?

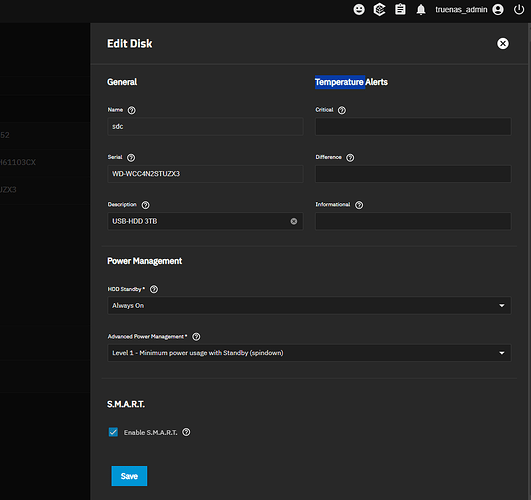

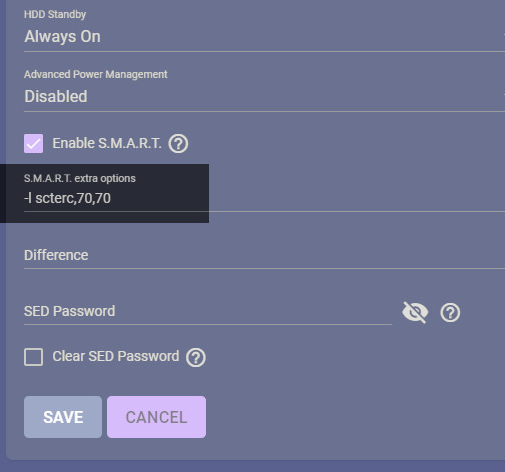

Fangtooth and later users: How do you set your drive’s scterc timeout?

Its probably best to start a new thread in General. What specifically are you trying to do and why? What types of drives?

In general, SMART is getting a review. We are finding too many false positives (drive failures) and poor understanding of flash drives.

from the last podcast: Yeah it seems you’re meant to install Scrutiny if you want to fiddle with SMART parameters.

So I have to activate an “Apps” pool and install an app in order to configure and monitor my drives… with something that you would expect to be a built-in feature of a NAS? That comes off as tacky.

I don’t use “Apps” or ever plan to use them.

Why offload SMART to a separate app? Why not just integrate Scrutiny’s features into the TrueNAS GUI?

If you want to keep using the Rsync Service… need to install an app.

If you want to configure and monitor your drives… need to install an app.

Will Reporting be outsourced to an app too?

I’ve installed Scrutiny and … it doesn’t appear to do more than offer a UI. I don’t see a way to handle extra-special SMART features.

So, maybe that’s not the way to go. Maybe best to open a separate thread.

When you install Scrutiny, does it integrate the Disk / SMART elements into the TrueNAS GUI, or do you actually need to navigate to your “Apps” to access such options?

Please don’t tell me that you need to visit Scrutiny’s IP:port in your web browser, completely separate from the TrueNAS GUI…

in that case i’m definitely not saying it… ![]()

Just keep using CORE. ![]()

No, reporting is not being outsourced. And no, SMART isn’t going away. Its all still fully there, you can setup CRON jobs / scripts if you want to do esoteric custom smartd setups. The background service will be setup to automatically monitor SMART for the critical errors that are worth your notice though. The only major thing moved away from SMART is the temperature monitoring in Goldeye, which moved to a Kernel module that is far far more efficient at keeping tabs of drive temps.

Is anyone else seeing really high utilization on the zd## devices when a VM discards data? I’m seeing a lot of spikes.

Aaaaand my VM with NIC passthru crashed again, this is so weird.

Can you create a separate thread with all the hardware details as well as info on VMs and PCIe device. There are tens of potential causes of crashes and it can often require a few eyes.

I’m also tracking this in NAS-134960 FYI.

There is something terribly wrong with zvols, my system is locked up because I tried to copy from one disk to another inside a VM.

Maybe because the mirrored vdev is backed by SMR drives

This may be a significant contributing factor to your issues. @gedavids

I thought so too, so I moved it on to my 3 vdev CMR pool (like a week ago). The issue remains.

Unfortunately, my main system also froze today. Three Incus VMs were running (idle). It was perfectly stable on 24.10 before and upgraded to 25.04-RC.1.

During the freeze, the web UI and VMs were unreachable. I could ping the TrueNAS IP though. Weirdly, pings to VMs only responded 4 minutes(!) later, and only some.

Force rebooted the machine after it made no recovery about 10 minutes later.

This was the only relevant thing after the syslog in the reboot, about two minutes after the freeze started (it might have not saved some other messages):

Apr 02 07:50:24 Prime kernel: INFO: task txg_sync:1264 blocked for more than 120 seconds.

Apr 02 07:50:24 Prime kernel: Tainted: P OE 6.12.15-production+truenas #1

Apr 02 07:50:27 Prime kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Apr 02 07:50:31 Prime kernel: task:txg_sync state:D stack:0 pid:1264 tgid:1264 ppid:2 flags:0x00004000

Apr 02 07:50:31 Prime kernel: Call Trace:

Apr 02 07:50:31 Prime kernel: <TASK>

Apr 02 07:50:31 Prime kernel: __schedule+0x461/0xa10

Apr 02 07:50:31 Prime kernel: schedule+0x27/0xd0

Apr 02 07:50:31 Prime kernel: schedule_timeout+0x9e/0x170

Apr 02 07:50:31 Prime kernel: ? __pfx_process_timeout+0x10/0x10

Apr 02 07:50:31 Prime kernel: io_schedule_timeout+0x51/0x70

Apr 02 07:50:31 Prime kernel: __cv_timedwait_common+0x129/0x160 [spl]

Apr 02 07:50:31 Prime kernel: ? __pfx_autoremove_wake_function+0x10/0x10

Apr 02 07:50:31 Prime kernel: __cv_timedwait_io+0x19/0x20 [spl]

Apr 02 07:50:31 Prime kernel: zio_wait+0x11a/0x240 [zfs]

Apr 02 07:50:31 Prime kernel: dsl_pool_sync+0xb9/0x410 [zfs]

Apr 02 07:50:31 Prime kernel: spa_sync_iterate_to_convergence+0xd8/0x200 [zfs]

Apr 02 07:50:31 Prime kernel: spa_sync+0x30a/0x600 [zfs]

Apr 02 07:50:31 Prime kernel: txg_sync_thread+0x1ec/0x270 [zfs]

Apr 02 07:50:31 Prime kernel: ? __pfx_txg_sync_thread+0x10/0x10 [zfs]

Apr 02 07:50:31 Prime kernel: ? __pfx_thread_generic_wrapper+0x10/0x10 [spl]

Apr 02 07:50:31 Prime kernel: thread_generic_wrapper+0x5a/0x70 [spl]

Apr 02 07:50:31 Prime kernel: kthread+0xcf/0x100

Apr 02 07:50:31 Prime kernel: ? __pfx_kthread+0x10/0x10

Apr 02 07:50:31 Prime kernel: ret_from_fork+0x31/0x50

Apr 02 07:50:31 Prime kernel: ? __pfx_kthread+0x10/0x10

Apr 02 07:50:31 Prime kernel: ret_from_fork_asm+0x1a/0x30

Apr 02 07:50:31 Prime kernel: </TASK>

I’ve had issues with my other test system freezing on RC.1 as well, but I kinda hoped it was due to the unsupported install config, but this system has a dedicated NVMe SSD for booting TrueNAS and one for VMs and Docker. It’s also set as the “System Pool”.

Not sure if it’s the same issue as I’ve had on my HPE server: TrueNAS 25.04-RC.1 is Now Available! - #85 by TheJulianJES

This system is running an i7-7700k, 500 GB WD Black NVMe SSD for booting TrueNAS and a 1 TB Samsung 970 Evo for VMs + Docker + “System Pool”. Both SSDs have the latest firmware, are mostly empty, and “work fine”.

(Auto TRIM was off, but they’re trimed every couple of weeks. No TRIM happened before or during the freeze.)

After the system was force restarted, it’s working just fine again.

I guess I should create a ticket about these freezes, now that’s it happened on two separate systems… which both have been fine on all previous versions. Guessing this will be hard to debug.

Saved a debug file directly after I restarted the system.

Just curious, has anyone else experience seemingly random freezes of the entire TrueNAS machine (maybe especially when running Incus VMs)? Kinda have a feeling that could be related.