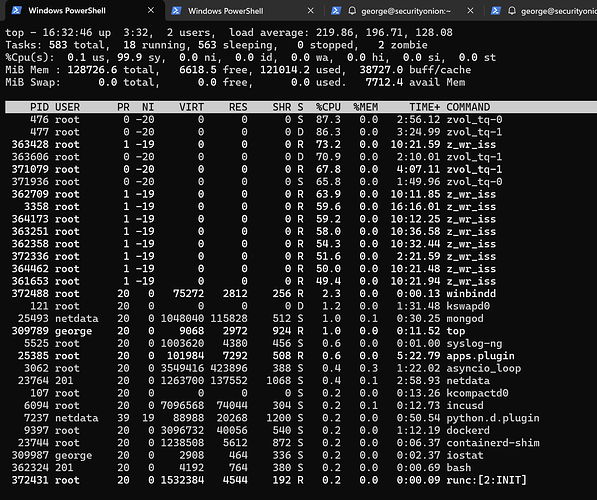

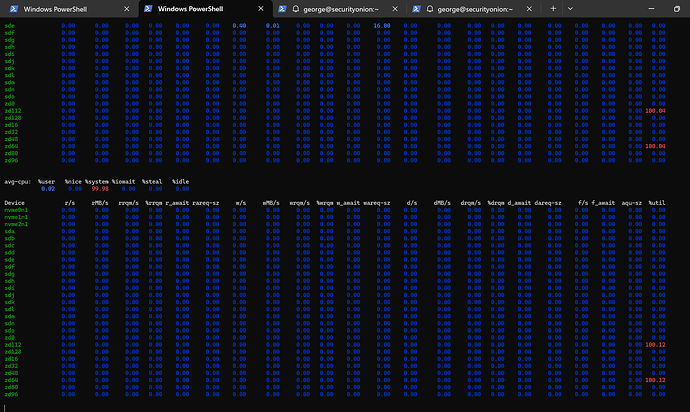

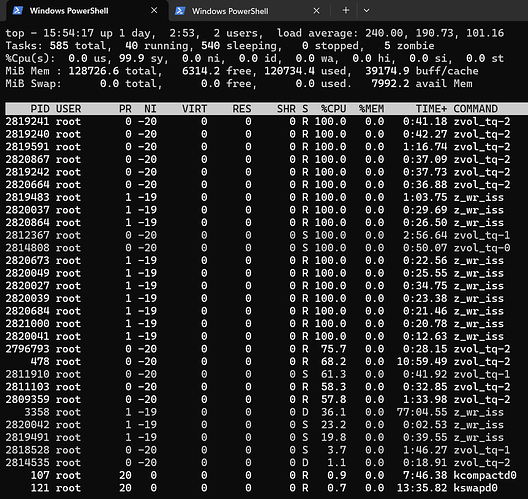

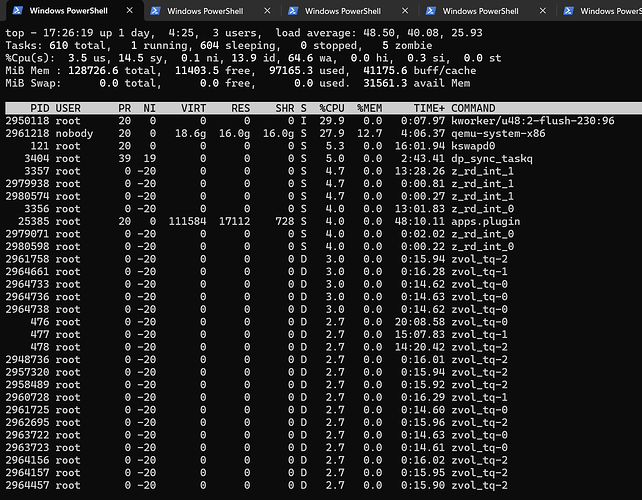

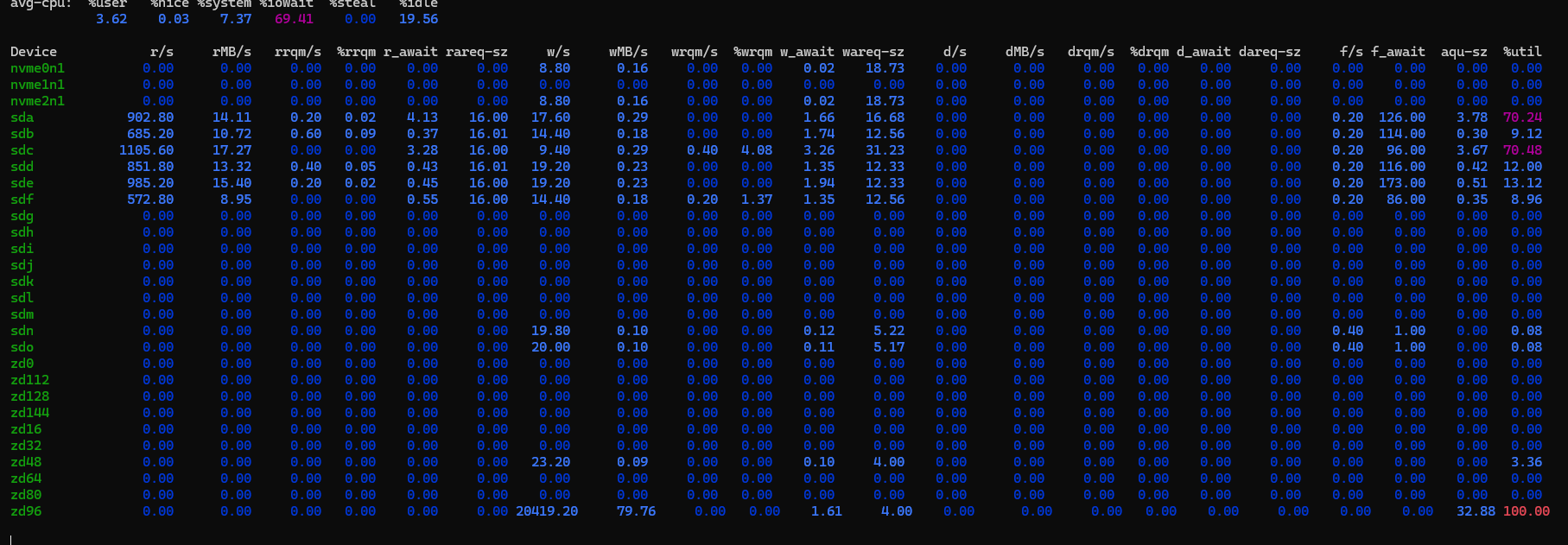

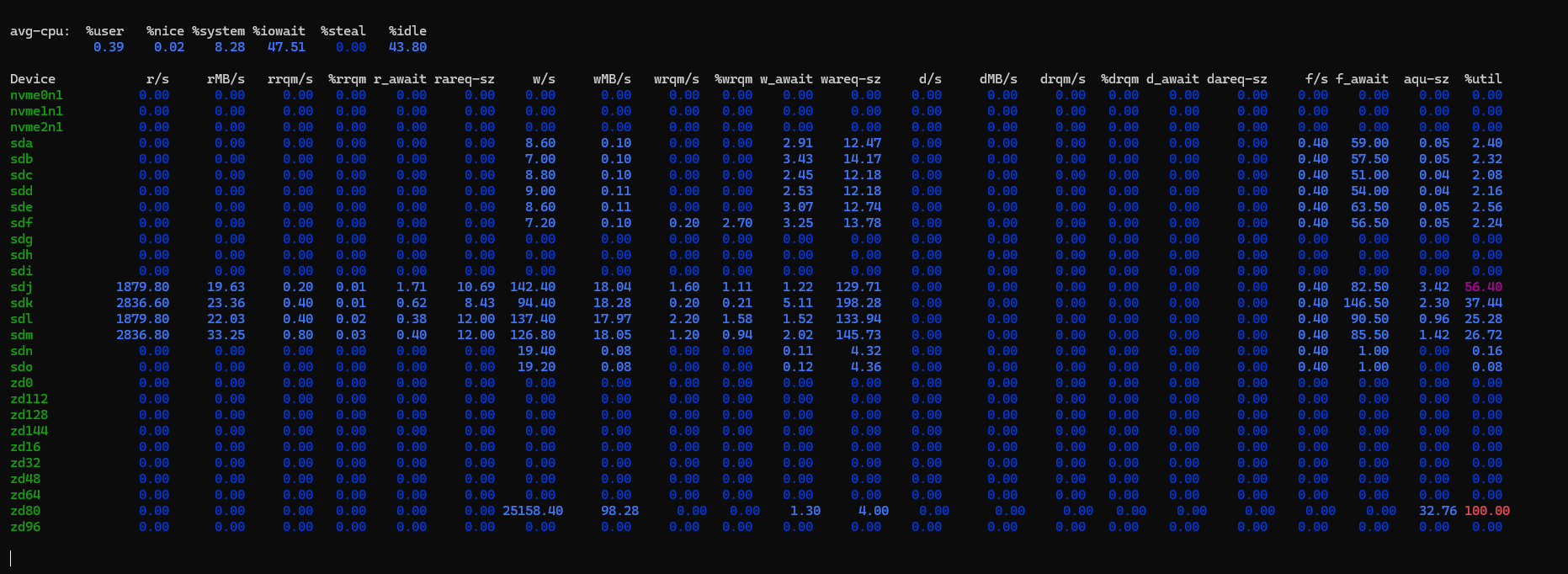

I have a problem with an Incus VM crashing. This VM started life on 24.10 as a sparse zvol and was migrated over to the 25.04 beta1 and now rc1. It is a network monitoring security appliance known as Security Onion. I pass thru a physical NIC to this VM that monitors mirrored traffic from my router uplink. I’ve had this VM rash twice now with an out of memory error and the VM is large (32GB), my system has 128GB of RAM. I don’t think the PCIe passthru is necessarily the cause. I’m seeing spikes of IOwait on the zd### devices related to the VM. I’m not sure. I’m going to post the syslog below around when the system crashed, let me know if there’s something else anyone would like to see.

Mar 31 16:00:03 truenas42 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...

Mar 31 16:00:03 truenas42 systemd[1]: sysstat-collect.service: Deactivated successfully.

Mar 31 16:00:03 truenas42 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.

Mar 31 16:02:25 truenas42 chronyd[5558]: Selected source 192.168.42.1

Mar 31 16:10:03 truenas42 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...

Mar 31 16:10:03 truenas42 systemd[1]: sysstat-collect.service: Deactivated successfully.

Mar 31 16:10:03 truenas42 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.

Mar 31 16:14:51 truenas42 containerd[5568]: time="2025-03-31T16:14:48.313101758-04:00" level=error msg="post event" error="context deadline exceeded"

Mar 31 16:14:51 truenas42 containerd[5568]: time="2025-03-31T16:14:50.468212760-04:00" level=error msg="ttrpc: received message on inactive stream" stream=266387

Mar 31 16:15:23 truenas42 dockerd[9439]: time="2025-03-31T16:15:23.527600819-04:00" level=warning msg="Failed to retrieve containerd version" error="Canceled: context canceled"

Mar 31 16:16:53 truenas42 kernel: runc:[2:INIT] invoked oom-killer: gfp_mask=0x42cc0(GFP_KERNEL|__GFP_NOWARN|__GFP_COMP), order=2, oom_score_adj=0

Mar 31 16:16:53 truenas42 kernel: CPU: 7 UID: 999 PID: 829830 Comm: runc:[2:INIT] Tainted: P OE 6.12.15-production+truenas #1

Mar 31 16:16:53 truenas42 kernel: Tainted: [P]=PROPRIETARY_MODULE, [O]=OOT_MODULE, [E]=UNSIGNED_MODULE

Mar 31 16:16:53 truenas42 kernel: Hardware name: ASUS System Product Name/Pro WS X570-ACE, BIOS 4902 08/29/2024

Mar 31 16:16:53 truenas42 kernel: Call Trace:

Mar 31 16:16:53 truenas42 kernel: <TASK>

Mar 31 16:16:53 truenas42 kernel: dump_stack_lvl+0x64/0x80

Mar 31 16:16:53 truenas42 kernel: dump_header+0x43/0x160

Mar 31 16:16:53 truenas42 kernel: oom_kill_process+0xfa/0x200

Mar 31 16:16:53 truenas42 kernel: out_of_memory+0x257/0x520

Mar 31 16:16:53 truenas42 kernel: __alloc_pages_slowpath.constprop.0+0x696/0xdf0

Mar 31 16:16:53 truenas42 kernel: __alloc_pages_noprof+0x30e/0x330

Mar 31 16:16:53 truenas42 kernel: ___kmalloc_large_node+0x80/0x110

Mar 31 16:16:53 truenas42 kernel: __kmalloc_large_node_noprof+0x1d/0xa0

Mar 31 16:16:53 truenas42 kernel: ? __pfx_seccomp_check_filter+0x10/0x10

Mar 31 16:16:53 truenas42 kernel: __kmalloc_node_track_caller_noprof+0x331/0x3f0

Mar 31 16:16:53 truenas42 kernel: ? __kmalloc_large_node_noprof+0x1d/0xa0

Mar 31 16:16:53 truenas42 kernel: ? bpf_prepare_filter+0x35c/0x510

Mar 31 16:16:53 truenas42 kernel: ? __pfx_seccomp_check_filter+0x10/0x10

Mar 31 16:16:53 truenas42 kernel: ? kmemdup_noprof+0x20/0x50

Mar 31 16:16:53 truenas42 kernel: kmemdup_noprof+0x20/0x50

Mar 31 16:16:53 truenas42 kernel: bpf_prepare_filter+0x35c/0x510

Mar 31 16:16:53 truenas42 kernel: ? __pfx_seccomp_check_filter+0x10/0x10

Mar 31 16:16:53 truenas42 kernel: bpf_prog_create_from_user+0xc1/0x120

Mar 31 16:16:53 truenas42 kernel: do_seccomp+0x12f/0xa90

Mar 31 16:16:53 truenas42 kernel: do_syscall_64+0x82/0x190

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? __lruvec_stat_mod_folio+0x83/0xd0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? folio_add_file_rmap_ptes+0x38/0xb0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? next_uptodate_folio+0x85/0x2a0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? filemap_map_pages+0x521/0x650

Mar 31 16:16:53 truenas42 kernel: ? __entry_text_end+0x101e86/0x101e89

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? do_fault+0x281/0x4d0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? __handle_mm_fault+0x7b8/0xfd0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? __count_memcg_events+0x53/0xf0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? __rseq_handle_notify_resume+0xa4/0x4f0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? handle_mm_fault+0xae/0x2d0

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: ? srso_alias_return_thunk+0x5/0xfbef5

Mar 31 16:16:53 truenas42 kernel: entry_SYSCALL_64_after_hwframe+0x76/0x7e

Mar 31 16:16:53 truenas42 kernel: RIP: 0033:0x561e9e79c92e

Mar 31 16:16:53 truenas42 kernel: Code: 48 83 ec 38 e8 13 00 00 00 48 83 c4 38 5d c3 cc cc cc cc cc cc cc cc cc cc cc cc cc 49 89 f2 48 89 fa 48 89 ce 48 89 df 0f 05 <48> 3d 01 f0 ff ff 76 15 48 f7 d8 48 89 c1 48 c7 c0 ff ff ff ff 48

Mar 31 16:16:53 truenas42 kernel: RSP: 002b:000000c00013f738 EFLAGS: 00000202 ORIG_RAX: 000000000000013d

Mar 31 16:16:53 truenas42 kernel: RAX: ffffffffffffffda RBX: 0000000000000001 RCX: 0000561e9e79c92e

Mar 31 16:16:53 truenas42 kernel: RDX: 000000c00013f840 RSI: 0000000000000004 RDI: 0000000000000001

Mar 31 16:16:53 truenas42 kernel: RBP: 000000c00013f778 R08: 0000000000000000 R09: 0000000000000000

Mar 31 16:16:53 truenas42 kernel: R10: 0000000000000000 R11: 0000000000000202 R12: 000000c00013f880

Mar 31 16:16:53 truenas42 kernel: R13: 0000000000000000 R14: 000000c0000061c0 R15: 000000000000019a

Mar 31 16:16:53 truenas42 kernel: </TASK>

Mar 31 16:16:53 truenas42 kernel: Mem-Info:

Mar 31 16:16:53 truenas42 kernel: active_anon:9343870 inactive_anon:1012761 isolated_anon:32

active_file:22619 inactive_file:9058 isolated_file:0

unevictable:0 dirty:86 writeback:31008

slab_reclaimable:718210 slab_unreclaimable:571489

mapped:9433488 shmem:9434075 pagetables:23795

sec_pagetables:35434 bounce:0

kernel_misc_reclaimable:0

free:1401467 free_pcp:2049 free_cma:0

Mar 31 16:16:53 truenas42 kernel: Node 0 active_anon:37375480kB inactive_anon:4051044kB active_file:80508kB inactive_file:44648kB unevictable:0kB isolated(anon):128kB isolated(file):0kB mapped:37733616kB dirty:344kB writeback:124032kB shmem:37736300kB shmem_thp:0kB shmem_pmdmapped:0kB anon_thp:1785856kB writeback_tmp:0kB kernel_stack:20224kB pagetables:95180kB sec_pagetables:141736kB all_unreclaimable? no

Mar 31 16:16:53 truenas42 kernel: Node 0 DMA free:1020kB boost:0kB min:4kB low:16kB high:28kB reserved_highatomic:0KB active_anon:504kB inactive_anon:5640kB active_file:0kB inactive_file:0kB unevictable:0kB writepending:0kB present:15984kB managed:15360kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Mar 31 16:16:53 truenas42 kernel: lowmem_reserve[]: 0 3165 128711 0 0

Mar 31 16:16:53 truenas42 kernel: Node 0 DMA32 free:500056kB boost:0kB min:1620kB low:4784kB high:7948kB reserved_highatomic:0KB active_anon:35476kB inactive_anon:325560kB active_file:816kB inactive_file:0kB unevictable:0kB writepending:16kB present:3307036kB managed:3241500kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Mar 31 16:16:53 truenas42 kernel: lowmem_reserve[]: 0 0 125546 0 0

Mar 31 16:16:53 truenas42 kernel: Node 0 Normal free:5106392kB boost:0kB min:65952kB low:194504kB high:323056kB reserved_highatomic:0KB active_anon:37339500kB inactive_anon:3719844kB active_file:124556kB inactive_file:400kB unevictable:0kB writepending:124440kB present:130797056kB managed:128559144kB mlocked:0kB bounce:0kB free_pcp:6400kB local_pcp:0kB free_cma:0kB

Mar 31 16:16:53 truenas42 kernel: lowmem_reserve[]: 0 0 0 0 0

Mar 31 16:16:53 truenas42 kernel: Node 0 DMA: 1*4kB (U) 1*8kB (U) 1*16kB (U) 1*32kB (U) 1*64kB (U) 1*128kB (U) 1*256kB (U) 1*512kB (U) 0*1024kB 0*2048kB 0*4096kB 0*8192kB 0*16384kB 0*32768kB 0*65536kB = 1020kB

Mar 31 16:16:53 truenas42 kernel: Node 0 DMA32: 10948*4kB (UME) 17089*8kB (UME) 14751*16kB (UME) 237*32kB (UME) 369*64kB (UM) 399*128kB (UM) 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB 0*8192kB 0*16384kB 0*32768kB 0*65536kB = 498792kB

Mar 31 16:16:54 truenas42 kernel: Node 0 Normal: 139025*4kB (UMEC) 568959*8kB (UMEC) 4*16kB (C) 13*32kB (C) 3*64kB (C) 1*128kB (C) 1*256kB (C) 0*512kB 0*1024kB 0*2048kB 0*4096kB 0*8192kB 0*16384kB 0*32768kB 0*65536kB = 5108828kB

Mar 31 16:16:54 truenas42 kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB

Mar 31 16:16:54 truenas42 kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

Mar 31 16:16:54 truenas42 kernel: 9466130 total pagecache pages

Mar 31 16:16:54 truenas42 kernel: 0 pages in swap cache

Mar 31 16:16:54 truenas42 kernel: Free swap = 0kB

Mar 31 16:16:54 truenas42 kernel: Total swap = 0kB

Mar 31 16:16:54 truenas42 kernel: 33530019 pages RAM

Mar 31 16:16:54 truenas42 kernel: 0 pages HighMem/MovableOnly

Mar 31 16:16:54 truenas42 kernel: 576018 pages reserved

Mar 31 16:16:54 truenas42 kernel: 65536 pages cma reserved

Mar 31 16:16:54 truenas42 kernel: 0 pages hwpoisoned

Mar 31 16:16:54 truenas42 kernel: Tasks state (memory values in pages):

Mar 31 16:16:54 truenas42 kernel: [ pid ] uid tgid total_vm rss rss_anon rss_file rss_shmem pgtables_bytes swapents oom_score_adj name

Mar 31 16:16:54 truenas42 kernel: [ 874] 104 874 2097 537 347 190 0 57344 0 -900 dbus-daemon

Mar 31 16:16:54 truenas42 kernel: [ 886] 0 886 15158 633 224 409 0 94208 0 -250 systemd-journal

Mar 31 16:16:54 truenas42 kernel: [ 903] 0 903 6788 625 464 161 0 81920 0 -1000 systemd-udevd

Mar 31 16:16:54 truenas42 kernel: [ 3066] 0 3066 972955 219077 218736 341 0 2461696 0 -1000 asyncio_loop

Mar 31 16:16:54 truenas42 kernel: [ 3084] 0 3084 4194 1862 1728 134 0 73728 0 -1000 python3

Mar 31 16:16:54 truenas42 kernel: [ 3119] 0 3119 565746 34864 34791 73 0 671744 0 -1000 middlewared (ze

Mar 31 16:16:54 truenas42 kernel: [ 3133] 0 3133 9218 4388 4214 174 0 110592 0 -1000 python3

Mar 31 16:16:54 truenas42 kernel: [ 5398] 106 5398 1970 113 96 17 0 57344 0 0 rpcbind

Mar 31 16:16:54 truenas42 kernel: [ 5399] 0 5399 21733 179 66 113 0 53248 0 -1000 auditd

Mar 31 16:16:54 truenas42 kernel: [ 5453] 0 5453 27525 4259 4080 179 0 118784 0 0 truenas_audit_h

Mar 31 16:16:54 truenas42 kernel: [ 5475] 0 5475 5605 410 288 122 0 77824 0 0 certmonger

Mar 31 16:16:54 truenas42 kernel: [ 5490] 0 5490 11738 173 96 77 0 69632 0 0 gssproxy

Mar 31 16:16:54 truenas42 kernel: [ 5498] 0 5498 38187 171 32 139 0 69632 0 -1000 lxcfs

Mar 31 16:16:54 truenas42 kernel: [ 5510] 0 5510 1223 234 32 202 0 45056 0 0 upsmon

Mar 31 16:16:54 truenas42 kernel: [ 5520] 122 5520 2074 153 66 87 0 53248 0 0 upsmon

Mar 31 16:16:54 truenas42 kernel: [ 5522] 0 5522 3502 860 699 161 0 65536 0 0 smartd

Mar 31 16:16:54 truenas42 kernel: [ 5538] 0 5538 256741 1760 1443 317 0 315392 0 0 syslog-ng

Mar 31 16:16:54 truenas42 kernel: [ 5548] 0 5548 12401 399 256 143 0 86016 0 0 systemd-logind

Mar 31 16:16:54 truenas42 kernel: [ 5554] 0 5554 60211 635 448 187 0 86016 0 0 zed

Mar 31 16:16:54 truenas42 kernel: [ 5558] 131 5558 2666 213 138 75 0 61440 0 0 chronyd

Mar 31 16:16:54 truenas42 kernel: [ 5568] 0 5568 635277 5713 5552 161 0 425984 0 -999 containerd

Mar 31 16:16:54 truenas42 kernel: [ 5575] 131 5575 2633 205 118 87 0 61440 0 0 chronyd

Mar 31 16:16:54 truenas42 kernel: [ 5614] 0 5614 3910 491 288 203 0 77824 0 -1000 sshd

Mar 31 16:16:54 truenas42 kernel: [ 5643] 0 5643 1005 172 32 140 0 53248 0 0 cron

Mar 31 16:16:54 truenas42 kernel: [ 5972] 0 5972 220907 13663 13483 180 0 278528 0 0 cli

Mar 31 16:16:54 truenas42 kernel: [ 5978] 0 5978 7078 487 372 115 0 57344 0 0 nginx

Mar 31 16:16:54 truenas42 kernel: [ 5979] 33 5979 7442 703 596 107 0 69632 0 0 nginx

Mar 31 16:16:54 truenas42 kernel: [ 6019] 0 6019 18960 856 640 120 96 135168 0 0 winbindd

Mar 31 16:16:54 truenas42 kernel: [ 6021] 0 6021 18979 738 639 61 38 135168 0 0 wb[TRUENAS]

Mar 31 16:16:54 truenas42 kernel: [ 6023] 0 6023 20138 1460 608 133 719 139264 0 0 smbd

Mar 31 16:16:54 truenas42 kernel: [ 6035] 0 6035 19605 692 620 72 0 122880 0 0 smbd-notifyd

Mar 31 16:16:54 truenas42 kernel: [ 6036] 0 6036 19609 719 620 99 0 131072 0 0 smbd-cleanupd

Mar 31 16:16:54 truenas42 kernel: [ 6115] 0 6115 1859687 15386 15320 66 0 593920 0 0 incusd

Mar 31 16:16:54 truenas42 kernel: [ 6805] 999 6805 129784 42447 42299 116 32 573440 0 -900 netdata

Mar 31 16:16:54 truenas42 kernel: [ 6916] 999 6916 11686 379 192 187 0 73728 0 -900 netdata

Mar 31 16:16:54 truenas42 kernel: [ 7293] 999 7293 22614 5311 5224 87 0 143360 0 -900 python.d.plugin

Mar 31 16:16:54 truenas42 kernel: [ 8875] 105 8875 1821 155 96 59 0 61440 0 0 avahi-daemon

Mar 31 16:16:54 truenas42 kernel: [ 8904] 105 8904 1722 137 73 64 0 61440 0 0 avahi-daemon

Mar 31 16:16:54 truenas42 kernel: [ 9012] 1 9012 8070 3995 3840 155 0 106496 0 0 wsdd.py

Mar 31 16:16:54 truenas42 kernel: [ 9439] 0 9439 792808 9629 9583 46 0 585728 0 -500 dockerd

Mar 31 16:16:54 truenas42 kernel: [ 10019] 0 10019 309627 1340 1258 82 0 139264 0 -998 containerd-shim

Mar 31 16:16:54 truenas42 kernel: [ 10039] 999 10039 1040 105 96 9 0 57344 0 0 bash

Mar 31 16:16:54 truenas42 kernel: [ 10255] 999 10255 2308153 235327 235223 104 0 2940928 0 0 java

Mar 31 16:16:54 truenas42 kernel: [ 10256] 999 10256 606 180 0 180 0 49152 0 0 tail

Mar 31 16:16:54 truenas42 kernel: [ 11182] 999 11182 336782 89563 89500 63 0 1032192 0 0 mongod

Mar 31 16:16:54 truenas42 kernel: [ 16922] 0 16922 436267 750 625 125 0 159744 0 -500 docker-proxy

Mar 31 16:16:54 truenas42 kernel: [ 16932] 0 16932 436203 726 625 101 0 151552 0 -500 docker-proxy

Mar 31 16:16:54 truenas42 kernel: [ 17000] 0 17000 309563 1195 1195 0 0 139264 0 -998 containerd-shim

Mar 31 16:16:54 truenas42 kernel: [ 17022] 201 17022 336687 47413 47244 137 32 1212416 0 0 netdata

Mar 31 16:16:54 truenas42 kernel: [ 17175] 201 17175 23945 3013 3013 0 0 151552 0 0 spawn-plugins

Mar 31 16:16:54 truenas42 kernel: [ 17855] 201 17855 32030 289 224 65 0 90112 0 0 NETWORK-VIEWER

Mar 31 16:16:54 truenas42 kernel: [ 17861] 201 17861 67690 44019 43872 147 0 421888 0 0 apps.plugin

Mar 31 16:16:54 truenas42 kernel: [ 17874] 201 17874 353057 15954 15881 73 0 442368 0 0 go.d.plugin

Mar 31 16:16:54 truenas42 kernel: [ 17905] 201 17905 3352 211 185 26 0 61440 0 0 spawn-setns

Mar 31 16:16:54 truenas42 kernel: [ 76145] 0 76145 19475 854 634 148 72 135168 0 0 wb-idmap

Mar 31 16:16:54 truenas42 kernel: [ 358269] 0 358269 4200 377 224 153 0 73728 0 0 systemd-machine

Mar 31 16:16:54 truenas42 kernel: [1812340] 0 1812340 22580 1474 861 167 446 159744 0 0 smbd[192.168.42

Mar 31 16:16:54 truenas42 kernel: [2578657] 65534 2578657 9852341 8412932 24404 176 8388352 68923392 0 0 qemu-system-x86

Mar 31 16:16:54 truenas42 kernel: [ 327535] 201 327535 21773 291 224 67 0 69632 0 0 debugfs.plugin

Mar 31 16:16:54 truenas42 kernel: [ 694458] 999 694458 1113 187 160 27 0 53248 0 -900 bash

Mar 31 16:16:54 truenas42 kernel: [ 741509] 65534 741509 2351246 1056363 13410 265 1042688 9646080 0 0 qemu-system-x86

Mar 31 16:16:54 truenas42 kernel: [ 767192] 201 767192 1048 154 64 90 0 45056 0 0 bash

Mar 31 16:16:54 truenas42 kernel: [ 785474] 0 785474 209597 15499 15356 143 0 335872 0 -1000 middlewared (wo

Mar 31 16:16:54 truenas42 kernel: [ 785487] 0 785487 209343 15197 15153 44 0 335872 0 -1000 middlewared (wo

Mar 31 16:16:54 truenas42 kernel: [ 787271] 0 787271 209327 15177 15081 96 0 344064 0 -1000 middlewared (wo

Mar 31 16:16:54 truenas42 kernel: [ 798049] 0 798049 45062 189 64 125 0 86016 0 0 nscd

Mar 31 16:16:54 truenas42 kernel: [ 801427] 0 801427 209151 15036 14922 114 0 339968 0 -1000 middlewared (wo

Mar 31 16:16:54 truenas42 kernel: [ 812012] 0 812012 206757 12373 12302 71 0 303104 0 -1000 middlewared (wo

Mar 31 16:16:54 truenas42 kernel: [ 829447] 999 829447 974 109 64 45 0 45056 0 0 bash

Mar 31 16:16:54 truenas42 kernel: [ 829448] 999 829448 974 72 38 34 0 45056 0 0 bash

Mar 31 16:16:54 truenas42 kernel: [ 829449] 999 829449 974 95 38 57 0 45056 0 0 bash

Mar 31 16:16:54 truenas42 kernel: [ 829450] 999 829450 974 96 38 58 0 45056 0 0 bash

Mar 31 16:16:54 truenas42 kernel: [ 829819] 0 829819 456692 706 672 34 0 192512 0 -998 runc

Mar 31 16:16:54 truenas42 kernel: [ 829830] 999 829830 401657 933 930 3 0 167936 0 0 runc:[2:INIT]

Mar 31 16:16:54 truenas42 kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=e9545de3c82f5116126767d46bf84d775c688f35e0323991d4a96bdc98d3437d,mems_allowed=0,global_oom,task_memcg=/system.slice/incus.service,task=qemu-system-x86,pid=2578657,uid=65534

Mar 31 16:16:54 truenas42 kernel: Out of memory: Killed process 2578657 (qemu-system-x86) total-vm:39409364kB, anon-rss:97616kB, file-rss:704kB, shmem-rss:33553408kB, UID:65534 pgtables:67308kB oom_score_adj:0

Mar 31 16:16:54 truenas42 systemd[1]: incus.service: A process of this unit has been killed by the OOM killer.

Mar 31 16:16:56 truenas42 kernel: oom_reaper: reaped process 2578657 (qemu-system-x86), now anon-rss:12kB, file-rss:320kB, shmem-rss:33553408kB

Mar 31 16:17:01 truenas42 CRON[830113]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: State 'stop-sigterm' timed out. Killing.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Killing process 6115 (incusd) with signal SIGKILL.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Main process exited, code=killed, status=9/KILL

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Failed with result 'oom-kill'.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Unit process 741509 (qemu-system-x86) remains running after unit stopped.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Unit process 2578657 (qemu-system-x86) remains running after unit stopped.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Consumed 4d 3h 13min 57.955s CPU time.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Scheduled restart job, restart counter is at 1.

Mar 31 16:17:24 truenas42 systemd[1]: Stopped incus.service - Incus - Main daemon.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Consumed 4d 3h 13min 57.957s CPU time.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Found left-over process 741509 (qemu-system-x86) in control group while starting unit. Ignoring.

Mar 31 16:17:24 truenas42 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Found left-over process 2578657 (qemu-system-x86) in control group while starting unit. Ignoring.

Mar 31 16:17:24 truenas42 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Mar 31 16:17:24 truenas42 systemd[1]: Starting incus.service - Incus - Main daemon...

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Found left-over process 741509 (qemu-system-x86) in control group while starting unit. Ignoring.

Mar 31 16:17:24 truenas42 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Mar 31 16:17:24 truenas42 systemd[1]: incus.service: Found left-over process 2578657 (qemu-system-x86) in control group while starting unit. Ignoring.

Mar 31 16:17:24 truenas42 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Mar 31 16:17:29 truenas42 systemd[1]: Started incus.service - Incus - Main daemon.

Mar 31 16:17:32 truenas42 kernel: br0: port 3(tapc6912c96) entered disabled state

Mar 31 16:20:04 truenas42 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...

Mar 31 16:20:04 truenas42 systemd[1]: sysstat-collect.service: Deactivated successfully.

Mar 31 16:20:04 truenas42 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.

Mar 31 16:28:14 truenas42 smartd[5522]: Device: /dev/sda [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 120 to 119

Mar 31 16:28:14 truenas42 smartd[5522]: Device: /dev/sda [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 120 to 119

Mar 31 16:28:14 truenas42 smartd[5522]: Device: /dev/sdo [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 63 to 71

Mar 31 16:28:14 truenas42 smartd[5522]: Device: /dev/sdo [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 63 to 71

Mar 31 16:28:14 truenas42 smartd[5522]: Device: /dev/sdn [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 71 to 73

Mar 31 16:28:14 truenas42 smartd[5522]: Device: /dev/sdn [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 71 to 73

Mar 31 16:28:15 truenas42 smartd[5522]: Device: /dev/sdj [SAT], SMART Prefailure Attribute: 8 Seek_Time_Performance changed from 121 to 119

Mar 31 16:28:16 truenas42 smartd[5522]: Device: /dev/sdj [SAT], SMART Prefailure Attribute: 8 Seek_Time_Performance changed from 121 to 119

Mar 31 16:28:17 truenas42 smartd[5522]: Device: /dev/sdh [SAT], SMART Prefailure Attribute: 8 Seek_Time_Performance changed from 121 to 119

Mar 31 16:28:17 truenas42 smartd[5522]: Device: /dev/sdh [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 142 to 146

Mar 31 16:28:18 truenas42 smartd[5522]: Device: /dev/sdh [SAT], SMART Prefailure Attribute: 8 Seek_Time_Performance changed from 121 to 119

Mar 31 16:28:18 truenas42 smartd[5522]: Device: /dev/sdh [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 142 to 146

Mar 31 16:30:00 truenas42 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...

Mar 31 16:30:00 truenas42 systemd[1]: sysstat-collect.service: Deactivated successfully.

Mar 31 16:30:00 truenas42 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.

Mar 31 16:40:00 truenas42 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...

Mar 31 16:40:00 truenas42 systemd[1]: sysstat-collect.service: Deactivated successfully.

Mar 31 16:40:00 truenas42 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.

Mar 31 16:50:01 truenas42 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...

Mar 31 16:50:01 truenas42 systemd[1]: sysstat-collect.service: Deactivated successfully.

Mar 31 16:50:01 truenas42 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.