Hello!

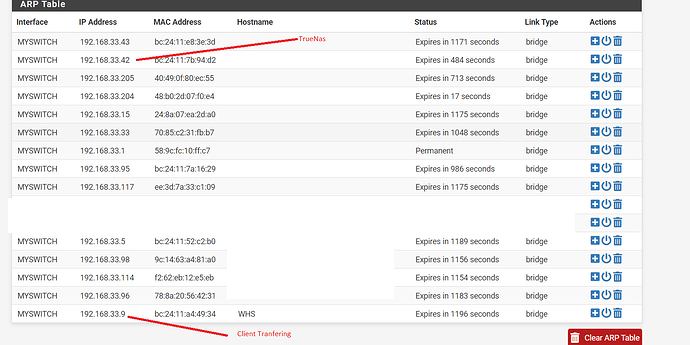

I’ve got a new TrueNAS server (CORE 13) and I am moving files from one pool to another. I am doing this from a Windows 10 client over SMB shares. Currently I’m doing a rather large transfer of ~3TB. The files are mostly ISOs, so the individual files are generally pretty large, 5GB-100GB each.

I’m seeing speeds anywhere from 250MB/s down to 0 bytes/s, with the speed most commonly sitting around 100MB/s.

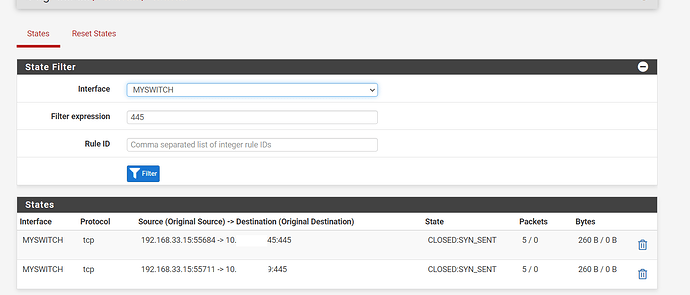

Every 5-20 min the speed drops from “normal” to 0 bytes/s. After around 1 min it slowly climbs back up to around 250MB/s before settling back at around 100MB/s, and the cycle repeats.

Screen Recording of this: https://youtu.be/a6WAygnYzLs

This doesn’t seem to have anything to do with file size, as I’ve seen the cycle play out on a single large file as well as across many smaller files.

I’m pretty new to TrueNAS and ZFS in general but this doesn’t seem like it could be normal.

Hardware:

CPU: E5-2650L v3

MB: Asrock X99 Taichi

RAM: 128GB Samsung 2Rx4 PC4-2133P-RA0-10-D0 ECC REG

HBA: LSI 9300 16i

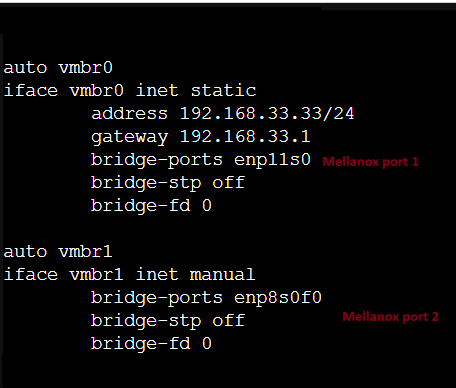

NICs: Intel i340-T4, Mellanox ConnectX-3 Dual Port 10GbE

Chassis: Rosewill RSV-L4412U

Software/Setup:

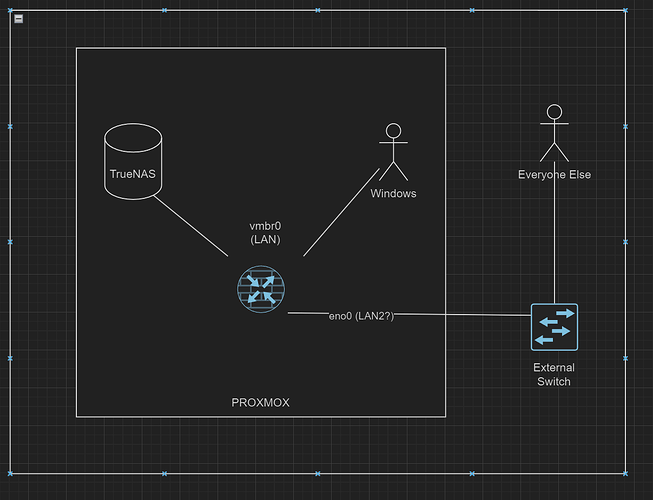

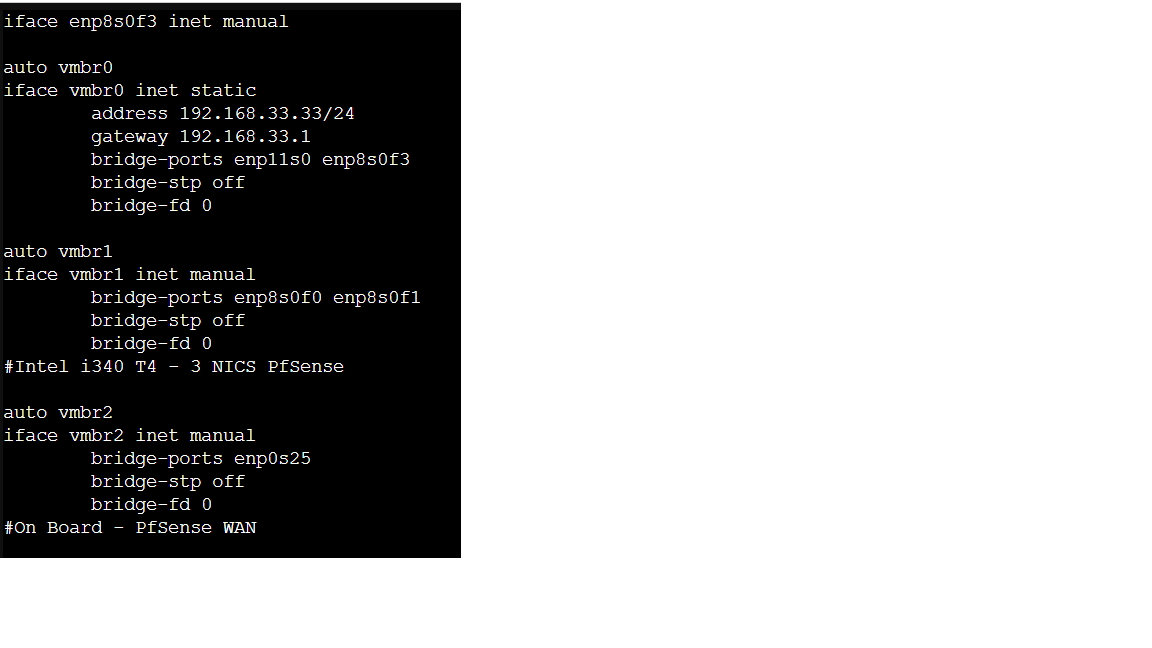

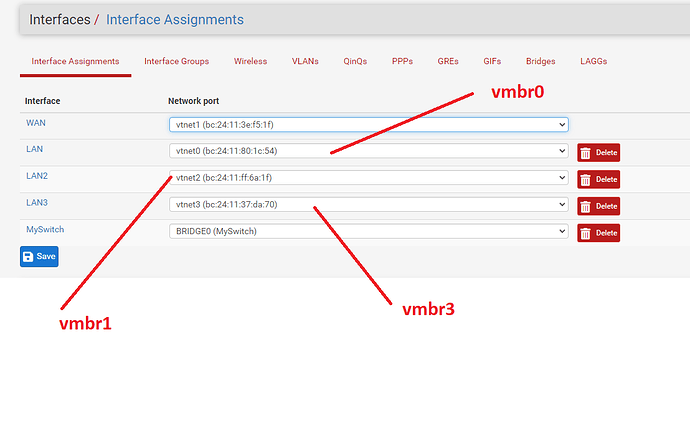

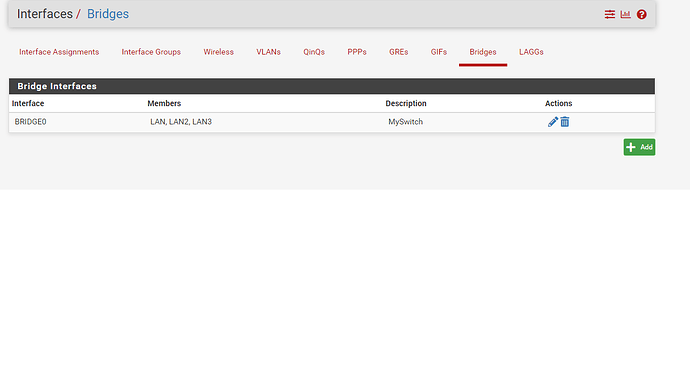

- Proxmox host

- TrueNAS CORE 13 runs as a VM (guest):

  - 8 cores

  - 64GB RAM

  - 64GB boot drive

  - LSI 9300 16i passed through

All TrueNAS drives are on the HBA except for the boot drive.

TrueNAS Drives:

RAIDZ2: 6 x 8TB WD80EMAZ

Mirror: 2 x 14TB WD Ultrastar DC HC530

Stripe: 1 x 14TB WD Ultrastar DC HC530

The current transfer I mentioned above is from the single 14TB to the Mirrored 14TB drives, but I’ve witnessed similar behavior on the RAIDZ2 array.

One thing I tried was changing “zfs_dirty_data_max” (default: 4294967296 bytes, i.e. 4 GiB), but that still yielded similar results.
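For a sense of scale, here is a rough back-of-the-envelope sketch of how long incoming writes would take to reach that limit at the speeds I'm seeing. It assumes decimal megabytes and that nothing gets flushed to disk while the buffer fills, which is not how ZFS really behaves, so treat the numbers as a lower bound only. The function names are just made up for this example.

```python
# Value quoted above for zfs_dirty_data_max, in bytes.
DIRTY_DATA_MAX_BYTES = 4294967296


def to_gib(n_bytes: int) -> float:
    """Convert a byte count to GiB (2**30 bytes)."""
    return n_bytes / 2**30


def seconds_to_fill(limit_bytes: int, inflow_mb_per_s: float) -> float:
    """Seconds for writes arriving at inflow_mb_per_s (decimal MB/s) to
    reach limit_bytes, ASSUMING nothing is flushed in the meantime."""
    return limit_bytes / (inflow_mb_per_s * 1_000_000)


print(f"limit = {to_gib(DIRTY_DATA_MAX_BYTES):.0f} GiB")
for rate in (100, 250):  # typical and peak speeds from the transfer
    secs = seconds_to_fill(DIRTY_DATA_MAX_BYTES, rate)
    print(f"{rate} MB/s reaches the limit in ~{secs:.0f} s")
```

Under those assumptions the limit works out to 4 GiB, reached in roughly 43 s at 100MB/s and roughly 17 s at 250MB/s.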

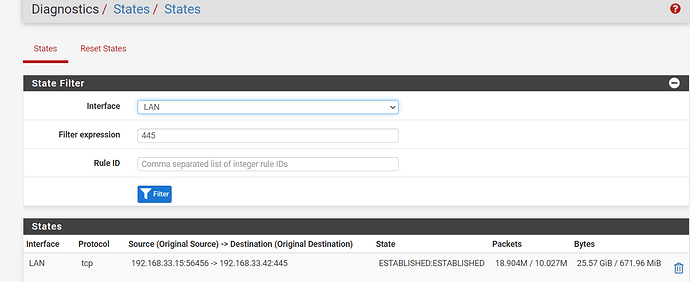

Edit: Forgot to mention that the Windows 10 client doing the transfer is a VM on the same machine. It has a 10Gb VirtIO NIC assigned, and running iperf to the TrueNAS server shows 2-3Gbps, so I believe the network speed is fine.
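To compare the iperf numbers with the copy speeds, here is a quick unit conversion. It is only a sketch: it uses decimal units (1 Gbps = 1000 Mbit/s) and ignores SMB/TCP overhead, and the helper name is made up for this example.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert gigabits per second to megabytes per second (decimal units,
    no protocol overhead accounted for)."""
    return gbps * 1000 / 8


for gbps in (2, 3):  # the range iperf reported
    print(f"{gbps} Gbps is about {gbps_to_mb_per_s(gbps):.0f} MB/s")
```

So 2-3Gbps corresponds to roughly 250-375MB/s, which is at or above the 250MB/s peaks and well above the ~100MB/s the copy usually sits at.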

Any ideas?

Thank you!