I finally stumbled across the new forum too, hi guys.

(Nice to see the design is the same as Lawrence Systems, kudos!)

I have a virtualization server that serves as my main home lab and NAS. In the last few days I started backing up data to my TrueNAS Core VM via SFTP and to my Nextcloud WebDAV via an NFS share, and I noticed that both transfers were failing.

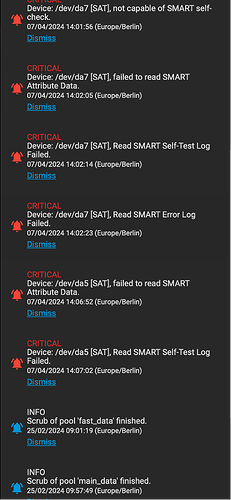

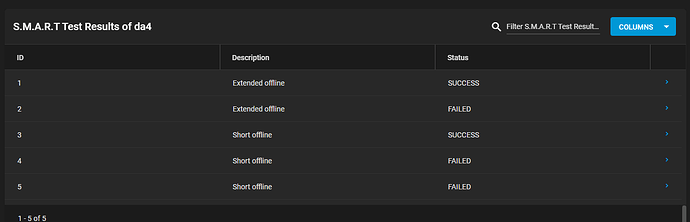

Upon investigation, the TrueNAS VM showed me two failed SMART attempts for the da5 and da7 drives.

What surprised me was that the system complained it could not read or run the SMART self-test on two of the 10 IronWolfs.

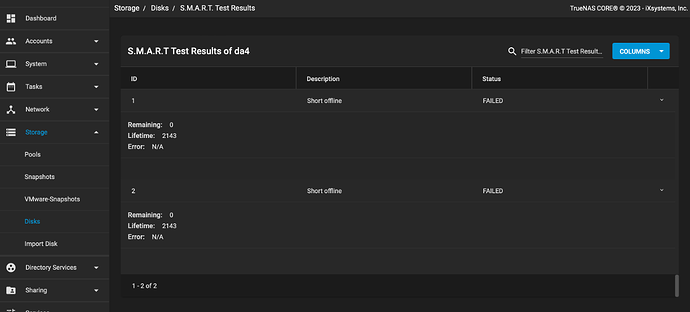

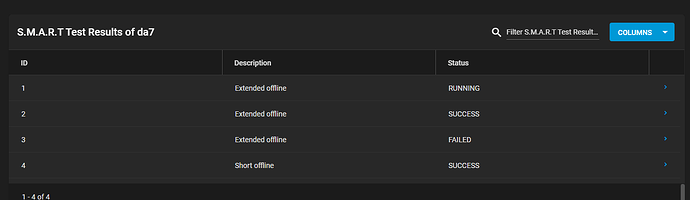

To troubleshoot, I tried to start first a short and then a long SMART test on a few drives, and that is when the UI started acting up. I tried to run a SMART check on da4, and it loaded for minutes before I finally saw the message that the task had started. The button for da5 did not respond at all. After that I tried selecting several disks at once and also tried scheduling the tests, without success.

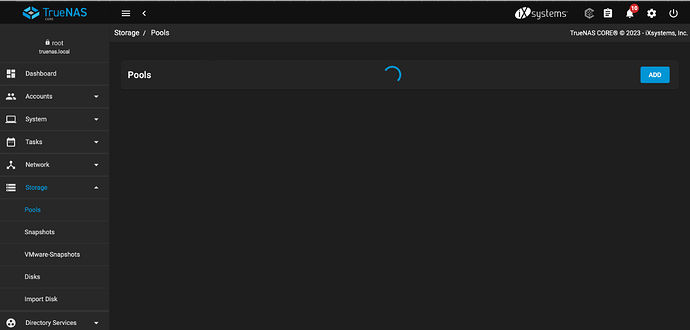

After that the GUI partially froze and I could not return to the dashboard, although a few pages such as Disks still worked normally. A day later I logged in again and was greeted by a still-buggy UI stuck on a pool loading screen.

EDIT: The Dashboard does seem to work again. I deliberately didn’t restart TrueNAS, to see how it would behave if I didn’t immediately notice the fault. The Pools page still doesn’t load, though!

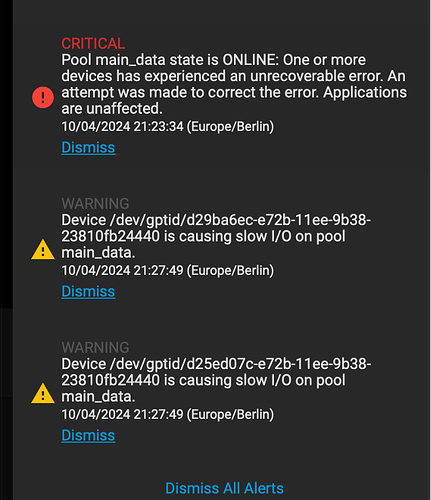

The following errors were reported since my last login:

Failed to check for alert Quota: concurrent.futures.process._RemoteTraceback: """ Traceback (most recent call last): File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 246, in _process_worker r = call_item.fn(*call_item.args, **call_item.kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 111, in main_worker res = MIDDLEWARE._run(*call_args) File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 45, in _run return self._call(name, serviceobj, methodobj, args, job=job) File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call return methodobj(*params) File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call return methodobj(*params) File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 483, in query_for_quota_alert config = self.middleware.call_sync("systemdataset.config") File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 78, in call_sync return self.client.call(method, *params, timeout=timeout, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/client/client.py", line 456, in call raise c.py_exception RuntimeError: can't start new thread """ The above exception was the direct cause of the following exception: Traceback (most recent call last): File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/alert.py", line 740, in __run_source alerts = (await alert_source.check()) or [] File "/usr/local/lib/python3.9/site-packages/middlewared/alert/base.py", line 212, in check return await self.middleware.run_in_thread(self.check_sync) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1159, in run_in_thread return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1156, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, 
*args, **kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/utils/io_thread_pool_executor.py", line 43, in worker fut.set_result(fn(*args, **kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/alert/source/quota.py", line 38, in check_sync datasets = self.middleware.call_sync("zfs.dataset.query_for_quota_alert") File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1303, in call_sync return self.run_coroutine(self._call_worker(name, *prepared_call.args)) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1339, in run_coroutine return fut.result() File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 439, in result return self.__get_result() File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 391, in __get_result raise self._exception File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1254, in _call_worker return await self.run_in_proc(main_worker, name, args, job) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1173, in run_in_proc return await self.run_in_executor(self.__procpool, method, *args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1156, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) RuntimeError: can't start new thread

Failed to check for alert UnencryptedDatasets: Traceback (most recent call last): File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/alert.py", line 740, in __run_source alerts = (await alert_source.check()) or [] File "/usr/local/lib/python3.9/site-packages/middlewared/alert/source/datasets.py", line 18, in check for dataset in await self.middleware.call('pool.dataset.query', [['encrypted', '=', True]]): File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1283, in call return await self._call( File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1251, in _call return await self.run_in_executor(prepared_call.executor, methodobj, *prepared_call.args) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1156, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/utils/io_thread_pool_executor.py", line 43, in worker fut.set_result(fn(*args, **kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 985, in nf return f(*args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool.py", line 2820, in query sys_config = self.middleware.call_sync('systemdataset.config') File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1299, in call_sync return self.run_coroutine(methodobj(*prepared_call.args)) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1339, in run_coroutine return fut.result() File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 439, in result return self.__get_result() File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 391, in __get_result raise self._exception File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 981, in nf return await f(*args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/service.py", line 385, in config 
return await self._get_or_insert(self._config.datastore, options) File "/usr/local/lib/python3.9/site-packages/middlewared/service.py", line 397, in _get_or_insert return await self.middleware.call('datastore.config', datastore, options) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1283, in call return await self._call( File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1240, in _call return await methodobj(*prepared_call.args) File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 981, in nf return await f(*args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/datastore/read.py", line 186, in config return await self.query(name, [], options) File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 981, in nf return await f(*args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/datastore/read.py", line 164, in query result = await self._queryset_serialize( File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/datastore/read.py", line 214, in _queryset_serialize result.append(await self._serialize( File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/datastore/read.py", line 232, in _serialize data = await self.middleware.call(extend, data) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1283, in call return await self._call( File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1240, in _call return await methodobj(*prepared_call.args) File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/sysdataset.py", line 55, in config_extend licensed = await self.middleware.call('failover.licensed') File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1283, in call return await self._call( File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1251, in _call return await self.run_in_executor(prepared_call.executor, methodobj, 
*prepared_call.args) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1156, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/utils/io_thread_pool_executor.py", line 43, in worker fut.set_result(fn(*args, **kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 985, in nf return f(*args, **kwargs) File "/usr/local/lib/middlewared_truenas/plugins/failover.py", line 192, in licensed info = self.middleware.call_sync('system.info') File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1299, in call_sync return self.run_coroutine(methodobj(*prepared_call.args)) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1339, in run_coroutine return fut.result() File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 439, in result return self.__get_result() File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 391, in __get_result raise self._exception File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 981, in nf return await f(*args, **kwargs) File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/system.py", line 655, in info dmidecode = await self.middleware.call('system.dmidecode_info') File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1283, in call return await self._call( File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1251, in _call return await self.run_in_executor(prepared_call.executor, methodobj, *prepared_call.args) File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1156, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) File "/usr/local/lib/python3.9/asyncio/base_events.py", line 819, in run_in_executor executor.submit(func, *args), loop=self) File 
"/usr/local/lib/python3.9/site-packages/middlewared/utils/io_thread_pool_executor.py", line 35, in submit start_daemon_thread(name=f"ExtraIoThread_{next(counter)}", target=worker, args=(fut, fn, args, kwargs)) File "/usr/local/lib/python3.9/site-packages/middlewared/utils/io_thread_pool_executor.py", line 18, in start_daemon_thread t.start() File "/usr/local/lib/python3.9/threading.py", line 899, in start _start_new_thread(self._bootstrap, ()) RuntimeError: can't start new thread

And while I could still access the disks tab, I saw that the SMART test for a drive that previously worked also failed.
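
Both tracebacks above end in the same `RuntimeError: can't start new thread`, which is easy to miss because the alert text is pasted as one long line. A minimal Python sketch like the one below could pull the final exception and the innermost frame out of such a flattened traceback. The names `final_exception` and `last_frame` are made up for this illustration and are not part of middlewared.

```python
import re

# Matches a frame such as: File "/path/to/file.py", line 123, in func_name
FRAME_RE = re.compile(r'File "([^"]+)", line (\d+), in (\S+)')

# Matches the start of an exception summary such as "RuntimeError: "
EXC_RE = re.compile(r'\b\w+(?:Error|Exception): ')


def final_exception(flat_traceback):
    """Return the last 'SomeError: message' fragment, or None if there is none."""
    matches = list(EXC_RE.finditer(flat_traceback))
    if not matches:
        return None
    return flat_traceback[matches[-1].start():].strip()


def last_frame(flat_traceback):
    """Return (path, line_number, function) of the innermost frame, or None."""
    frames = FRAME_RE.findall(flat_traceback)
    if not frames:
        return None
    path, line, func = frames[-1]
    return path, int(line), func
```

Fed the UnencryptedDatasets alert text, this should point at `threading.py` and the `start` function, meaning the middleware failed at the moment it tried to create another thread rather than somewhere in the disk or pool code itself.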

Does anyone have any idea what’s going on here? Why is the GUI so badly affected by this error and why can’t I even start a SMART test to begin with?

My system:

Hypervisor: Unraid

VM: TrueNAS Core (TrueNAS-13.0-U6.1)

DISKS: 10x 4 TB IronWolf (2x 5-disk RAIDZ2) & 6x 2 TB Samsung SSD (3x mirrors), distributed across two controllers

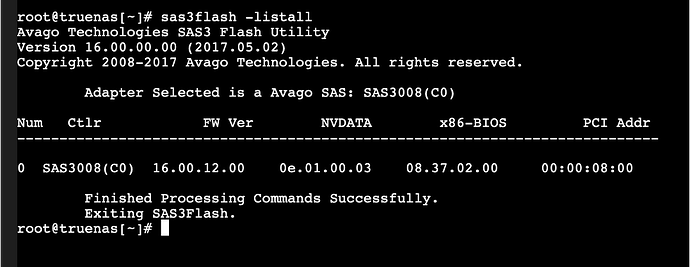

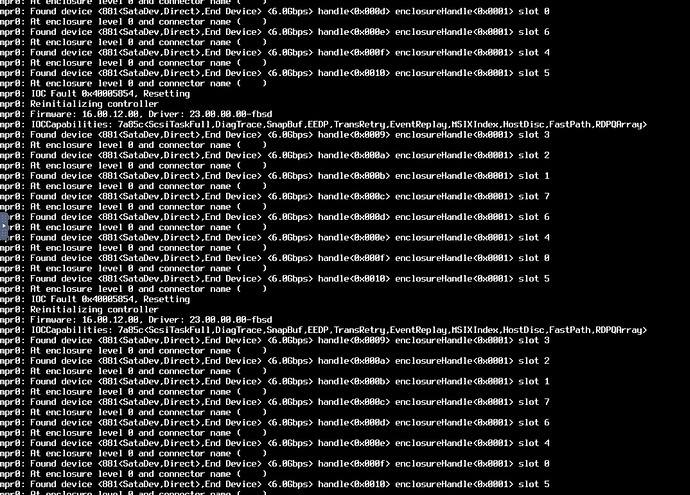

Controller #1: LSI 9300-16i HBA passed through to the VM (only half is passed through; the controller is actually two 8i controllers) with the recommended TrueNAS firmware 16.00.12.00 (8x 4 TB HDD)

Controller #2: Mainboard SATA of a Pro WS W680-ACE with the 6 SSDs and two more HDDs (also passed through)

Before that, TrueNAS was running smoothly and I even had transfer rates of over 2Gb/s from local Windows VMs. Now I’m not sure whether the software, the virtualization, or the drives are the problem. I remember having problems with the da7 drive in the past, but as far as I know the device names can change, and unfortunately I didn’t record the drive ID. That aside, the UI should work normally even with a failed drive.
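
Since names like da7 can move between boots, one way to keep track of a suspect drive is to record the serial number behind each device name. The following is only a rough sketch: it assumes `smartctl` is on the PATH and that `smartctl -i` prints a `Serial Number:` line for the drive. The helper names (`parse_serial`, `serial_for`, `serial_map`) are hypothetical.

```python
import re
import subprocess

# smartctl -i normally prints a line like "Serial Number:    XXXXXXXX"
SERIAL_RE = re.compile(r"^Serial Number:\s*(\S+)", re.MULTILINE)


def parse_serial(smartctl_info):
    """Extract the serial number from `smartctl -i` output, or None if absent."""
    match = SERIAL_RE.search(smartctl_info)
    return match.group(1) if match else None


def serial_for(device, timeout=30.0):
    """Run `smartctl -i /dev/<device>`; return None if it fails or hangs."""
    try:
        result = subprocess.run(
            ["smartctl", "-i", f"/dev/{device}"],
            capture_output=True,
            text=True,
            timeout=timeout,  # a drive that does not answer should not block forever
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return parse_serial(result.stdout)


def serial_map(devices):
    """Map device names (e.g. 'da5') to serial numbers (None if unreadable)."""
    return {dev: serial_for(dev) for dev in devices}
```

Calling `serial_map(["da5", "da7"])` from a shell on the TrueNAS VM and saving the result would give a record to compare against after a reboot or a bay swap. A `None` entry would itself be a hint that the drive is not responding.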

Does anyone have any ideas on how to troubleshoot/fix this?

I would start by swapping drive bays and potentially replacing drives, but without even being able to run a SMART check this seems to be a dead end.

Thanks for any reply.