Hi Team,

While getting some help on Reddit, iX Chris suggested I set up an account here and see if it might be easier with the knowledge of the forum.

I’ll explain everything here again just so I can be clear:

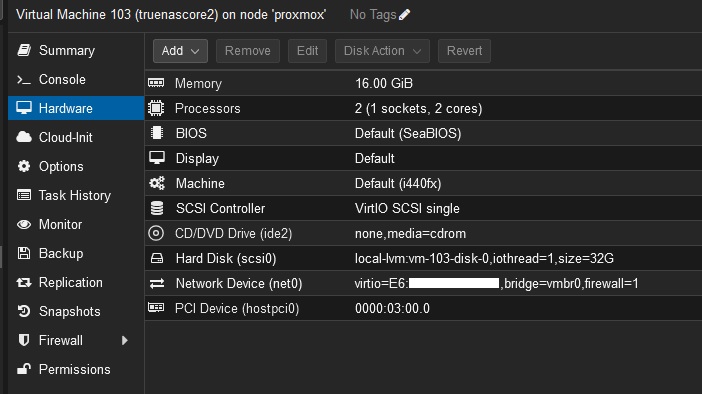

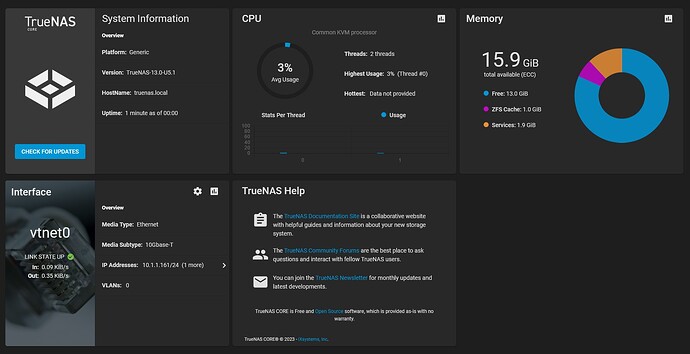

I have a TrueNAS CORE VM instance running on a Proxmox host in my small homelab. I am passing a PCI device (basically an HBA) directly to the VM so the VM has physical access to the disks.

I was running a test on the host and added an additional PCI card, and doing so changed the PCI device order without my knowledge. Along with this new PCI card, I added a second set of disks that I had planned to use.

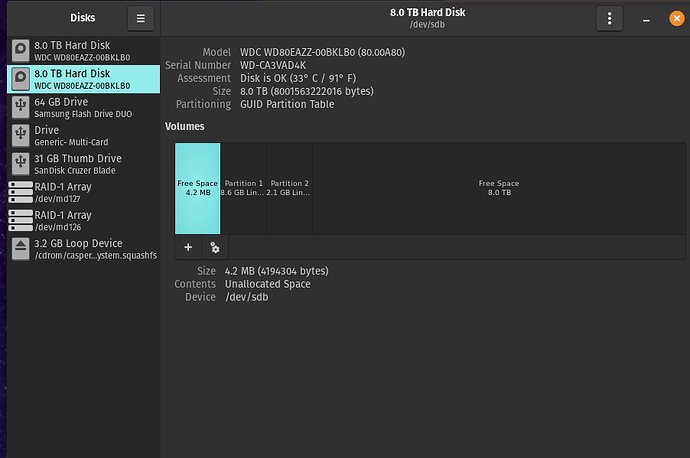

Full honesty, I was testing something called Xpenology, which is a bootloader for Synology’s NAS software. I gave it the new PCI device in Proxmox and did the installation. The new set is only 3x 3TB drives, but I ignored the installer saying it was going to use 5 disks. I thought it was a glitch, maybe counting the bootable disk or something. I clicked next a bunch of times, added the disks, and it was asking how to set up this new pool. Something clicked and I shut it down, but it was already too late. It had created some new partitions over my existing TrueNAS disks.

I attached the disks back to my TrueNAS instance, and obviously nothing worked. The disks were showing in the portal, but the shares were gone and the pool was gone. I did some reading and took the pool offline, which made no difference, except that the pool is now not showing in the portal at all. That might have been another bad step in hindsight.

More reading suggested that it might be possible to recover data off the disks.

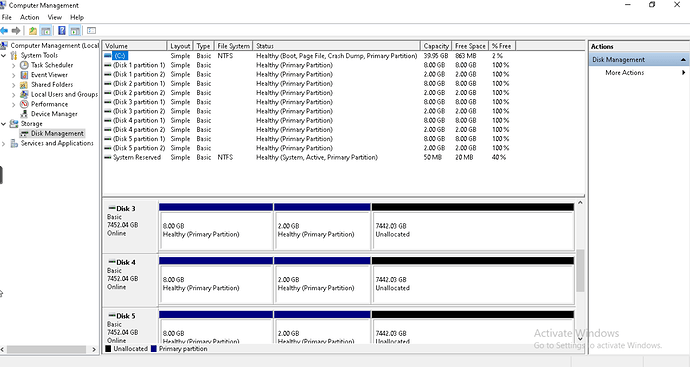

I attached the disks to a Windows 10 VM.

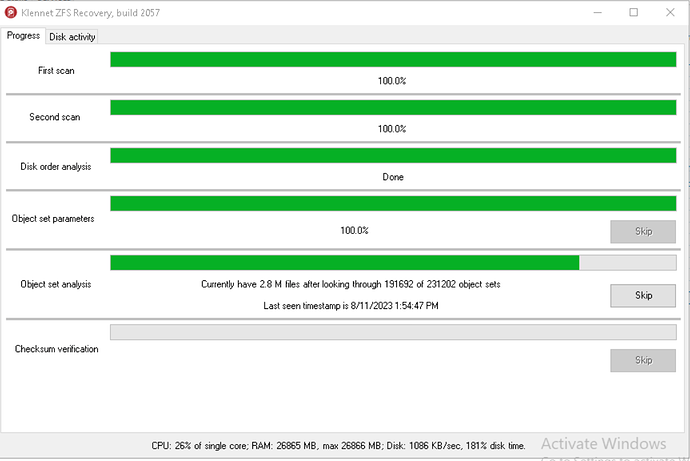

I tried an application called Klennet ZFS Recovery. That ran for close to two days and eventually crashed.

It looked like it was working, at least…

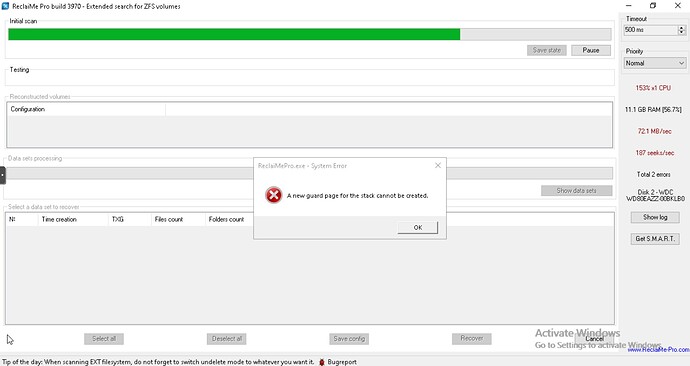

I tried running another application called ReclaiMePro, which took a few more days to scan but eventually crashed/failed as well.

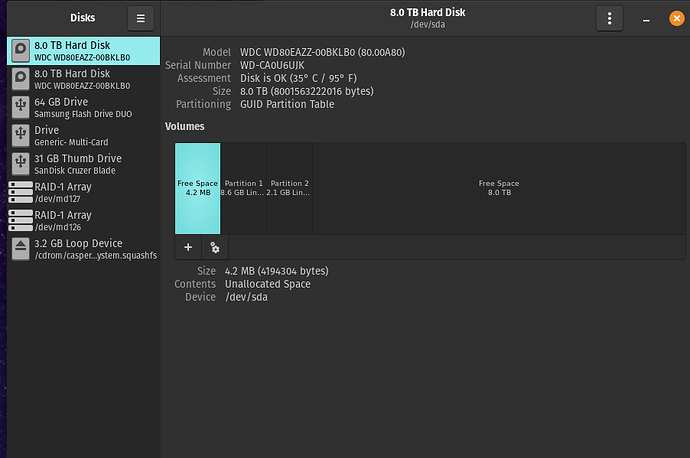

At some point, I was clicking around in the settings and discovered an MBR disk check window. There I could see something that gave me hope: it was still showing some TRUENAS info on the disk!

This made me change gears. Maybe instead of trying to recover data, I could fix my partitions and perhaps get some (and with luck most or all) of the stuff back.
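For anyone wanting to do a similar check by hand, here is a minimal read-only sketch, not something I have run against these disks. It looks for the standard GPT header signature (`EFI PART`) at LBA 1 and at the last sector of a disk or image. The function name `find_gpt_headers`, the default 512-byte sector size, and the example path are my own assumptions for illustration. It assumes a Linux host or an image file, where seeking to the end reports the size. It never writes anything.

```python
import os

GPT_SIGNATURE = b"EFI PART"  # 8-byte signature at the start of a GPT header


def find_gpt_headers(path, sector_size=512):
    """Report whether the primary and backup GPT header signatures are present.

    Opens the disk or image strictly read-only. sector_size is an assumption;
    4Kn drives would need 4096 instead.
    """
    result = {}
    with open(path, "rb") as f:
        # Seeking to the end gives the size for image files and Linux block devices.
        f.seek(0, os.SEEK_END)
        size = f.tell()
        locations = (
            ("primary", sector_size),        # LBA 1
            ("backup", size - sector_size),  # last LBA
        )
        for name, offset in locations:
            if offset < 0 or offset + len(GPT_SIGNATURE) > size:
                result[name] = False
                continue
            f.seek(offset)
            result[name] = f.read(len(GPT_SIGNATURE)) == GPT_SIGNATURE
    return result


if __name__ == "__main__":
    import sys

    # Example (hypothetical path): python check_gpt.py /path/to/disk.img
    if len(sys.argv) > 1:
        print(find_gpt_headers(sys.argv[1]))
```

A surviving signature only says that a GPT header exists at that spot, not whose it is. The other installer may have written its own table, so this is a first look rather than proof the original TrueNAS layout is intact.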

As mentioned before, Chris was nice enough to reach out and ask some questions.

To answer those here: I don’t have an additional disk to use for testing at the moment, but I can possibly grab one (or more). The pool was a RAIDZ1 using 5x 8TB disks, or at least I’m 90% sure it was. There is maybe a 10% chance it was RAIDZ2.

To answer anyone else asking, my most valuable data has indeed been backed up. There is, however, less valuable data, consisting of backups of phones and computers and configs, that wasn’t super important but that I’d kinda like back if possible.

As far as I know, I haven’t written anything else to the disks since this event.