So I’ve reformatted your dedup output for readability here:

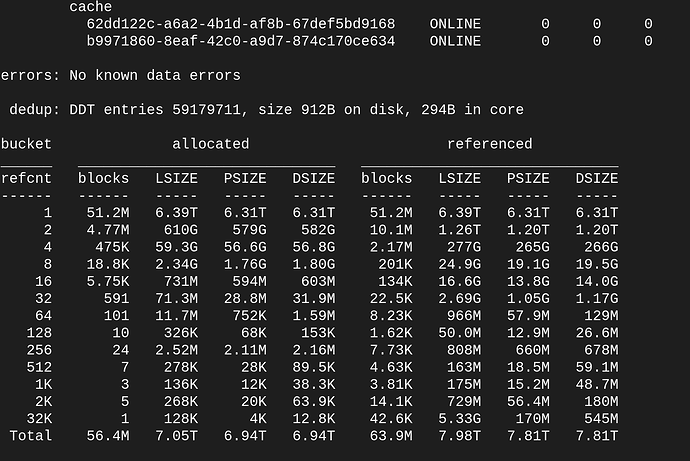

dedup: DDT entries 59179711, size 912B on disk, 294B in core

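| refcnt | allocated blocks | allocated LSIZE | allocated PSIZE | allocated DSIZE | referenced blocks | referenced LSIZE | referenced PSIZE | referenced DSIZE |
|---|---|---|---|---|---|---|---|---|
| 1 | 51.2M | 6.39T | 6.31T | 6.31T | 51.2M | 6.39T | 6.31T | 6.31T |
| 2 | 4.77M | 610G | 579G | 582G | 10.1M | 1.26T | 1.20T | 1.20T |
| 4 | 475K | 59.3G | 56.6G | 56.8G | 2.17M | 277G | 265G | 266G |
| 8 | 18.8K | 2.34G | 1.76G | 1.80G | 201K | 24.9G | 19.1G | 19.5G |
| 16 | 5.75K | 731M | 594M | 603M | 134K | 16.6G | 13.8G | 14.0G |
| 32 | 591 | 71.3M | 28.8M | 31.9M | 22.5K | 2.69G | 1.05G | 1.17G |
| 64 | 101 | 11.7M | 752K | 1.59M | 8.23K | 966M | 57.9M | 129M |
| 128 | 10 | 326K | 68K | 153K | 1.62K | 50.0M | 12.9M | 26.6M |
| 256 | 24 | 2.52M | 2.11M | 2.16M | 7.73K | 808M | 660M | 678M |
| 512 | 7 | 278K | 28K | 89.5K | 4.63K | 163M | 18.5M | 59.1M |
| 1K | 3 | 136K | 12K | 38.3K | 3.81K | 175M | 15.2M | 48.7M |
| 2K | 5 | 268K | 20K | 63.9K | 14.1K | 729M | 56.4M | 180M |
| 32K | 1 | 128K | 4K | 12.8K | 42.6K | 5.33G | 170M | 545M |
| Total | 56.4M | 7.05T | 6.94T | 6.94T | 63.9M | 7.98T | 7.81T | 7.81T |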

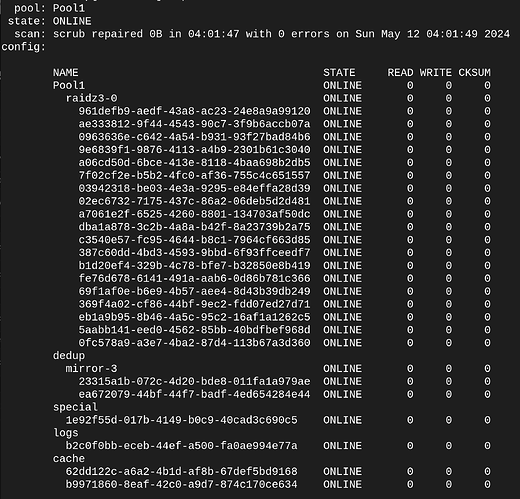

Comparing the referenced DSIZE (7.81T) with the allocated DSIZE (6.94T) in the Total row, you’re only saving a little under 1T (about 0.87T) by enabling dedup, roughly a 1.13:1 reduction ratio.
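The savings figure falls straight out of the Total row: referenced DSIZE is roughly what the data would occupy without dedup, and allocated DSIZE is what it actually occupies. A small Python sketch of that arithmetic, assuming the size suffixes are binary (1T = 2^40 bytes); `parse_size` and `dedup_savings` are illustrative helpers, not part of any ZFS tool, and since the printed totals are already rounded the result is approximate.

```python
# Binary size suffixes, as assumed for the histogram above (K = 1024 bytes, etc.).
SUFFIXES = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text: str) -> float:
    """Convert a string such as '6.94T' or '912B' into bytes."""
    text = text.strip().upper().removesuffix("B")
    unit = text[-1] if text and text[-1] in SUFFIXES else ""
    number = text[: len(text) - len(unit)]
    return float(number) * SUFFIXES[unit]


def dedup_savings(allocated_dsize: str, referenced_dsize: str) -> tuple[float, float]:
    """Return (space saved in TiB, referenced/allocated ratio)."""
    alloc = parse_size(allocated_dsize)
    ref = parse_size(referenced_dsize)
    saved_tib = (ref - alloc) / SUFFIXES["T"]
    return saved_tib, ref / alloc


saved, ratio = dedup_savings("6.94T", "7.81T")
print(f"saved ~{saved:.2f}T, ratio {ratio:.2f}:1")  # saved ~0.87T, ratio 1.13:1
```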

What that’s costing you is 59,179,711 dedup table (DDT) entries at 912B each on disk and 294B each in RAM, for a total of about 50.3G on disk and 16.2G in RAM. That’s small enough for dedup lookups to be served from RAM, but the problem comes when it’s time to go through and update or delete the associated hashes in the dedup table on your spinning disks. DDT entries are tiny and scattered across the table, so every change turns into small random I/O on the underlying storage, and spinning disks are quite simply not fit for purpose here.
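The footprint is simply entry count × per-entry size. A sketch, assuming G means GiB (2^30 bytes); `ddt_footprint_gib` is a hypothetical helper name:

```python
GIB = 1024**3  # assumption: sizes quoted in GiB


def ddt_footprint_gib(entries: int, bytes_on_disk: int, bytes_in_core: int) -> tuple[float, float]:
    """Return (on-disk GiB, in-RAM GiB) for a dedup table with `entries` entries."""
    return entries * bytes_on_disk / GIB, entries * bytes_in_core / GIB


on_disk, in_core = ddt_footprint_gib(59_179_711, 912, 294)
print(f"DDT: {on_disk:.1f} GiB on disk, {in_core:.1f} GiB in RAM")  # DDT: 50.3 GiB on disk, 16.2 GiB in RAM
```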

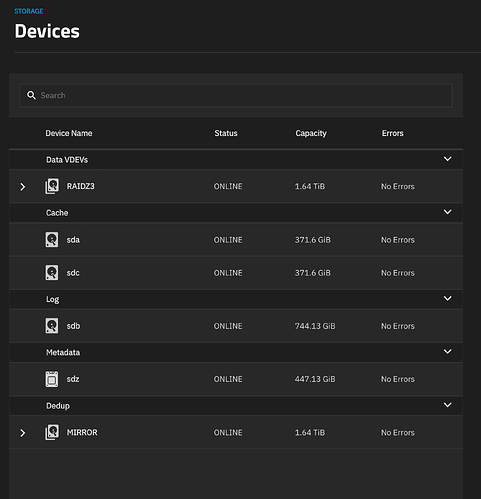

I’m disappointed here as well. Your pool is reliant on your single metadata SSD remaining alive: if that one SSD fails, the whole pool goes with it, which negates both the mirroring of your dedup HDDs and the RAIDZ3 of your main pool. Furthermore, because the pool contains a RAIDZ3 vdev, you can widen the special and dedup vdevs by attaching more disks to them, but you can’t remove them from the pool entirely.
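To see why one SSD undoes the RAIDZ3: a pool is lost as soon as any top-level vdev is lost, so the least redundant vdev sets the fault tolerance for everything. A toy Python model of the layout described above (data, special and dedup vdevs only; log and cache devices are left out to keep the model small). The `Vdev` class and helpers are hypothetical, and `top_level_removal_possible` only models the RAIDZ restriction on `zpool remove`, not its other conditions.

```python
from dataclasses import dataclass


@dataclass
class Vdev:
    role: str    # "data", "special", "dedup", ...
    layout: str  # "raidz1", "raidz2", "raidz3", "mirror", or "single"
    width: int   # number of disks in the vdev

    def failures_tolerated(self) -> int:
        if self.layout.startswith("raidz"):
            return int(self.layout[-1])  # parity level
        if self.layout == "mirror":
            return self.width - 1
        return 0  # a single disk has no redundancy


def pool_fault_tolerance(vdevs: list[Vdev]) -> tuple[int, list[str]]:
    """Losing any top-level vdev loses the pool, so the weakest vdev sets the limit."""
    worst = min(v.failures_tolerated() for v in vdevs)
    weakest = [v.role for v in vdevs if v.failures_tolerated() == worst]
    return worst, weakest


def top_level_removal_possible(vdevs: list[Vdev]) -> bool:
    """Simplified: top-level vdev removal is refused if any top-level vdev is raidz."""
    return not any(v.layout.startswith("raidz") for v in vdevs)


pool = [
    Vdev("data", "raidz3", 19),
    Vdev("special", "single", 1),
    Vdev("dedup", "mirror", 2),
]
tolerance, weakest = pool_fault_tolerance(pool)
print(tolerance, weakest, top_level_removal_possible(pool))  # 0 ['special'] False
```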

Unfortunately, your current cache SSDs are too small to mirror the existing 480G “metadata” SSD; you’ll have to find drives at least as large as the current unit. The log drive could be used instead, assuming the cache drives are capable of delivering sufficient sync-write performance to take over as the log.
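ZFS won’t attach a smaller device as a mirror of a larger one, and drives sold at the same nominal size can differ by a few megabytes between vendors, so it’s worth comparing exact byte counts (for example from `lsblk -b`) rather than labels. A minimal sketch; the sizes below are placeholder nominal values, and the log SSD’s size in particular is an assumption since it isn’t stated here:

```python
def can_mirror(existing_bytes: int, candidate_bytes: int) -> bool:
    """A device attached as a mirror must be at least as large as the existing device."""
    return candidate_bytes >= existing_bytes


# Hypothetical nominal sizes; substitute the exact byte counts of your own drives.
METADATA_SSD = 480 * 10**9
candidates = {
    "cache SSD (400G)": 400 * 10**9,
    "log SSD (size assumed, 480G)": 480 * 10**9,
}

for name, size in candidates.items():
    verdict = "OK" if can_mirror(METADATA_SSD, size) else "too small"
    print(f"{name}: {verdict}")
```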

But your dedup tables are ultimately the bottleneck here. Even if you fully disable dedup (and it appears to be enabled on more than just that one dataset, based on your DDT stats above), there’s no way to “un-dedup” the existing data, so updates and deletes of that data will still be bound by the awful random I/O performance of your dedup HDDs.
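A toy model of why switching dedup off doesn’t help with data already written: blocks written while dedup was on are marked as deduplicated, and freeing them still has to go through the table. This is a simplified illustration, not how ZFS is implemented; `ToyDDT` and its counter are hypothetical, with each counted update standing in for a small random write to the DDT.

```python
class ToyDDT:
    """Toy model (not ZFS code): a dedup table keyed by checksum, holding refcounts."""

    def __init__(self) -> None:
        self.refcounts: dict[str, int] = {}
        self.table_updates = 0  # each change stands in for a small random DDT write

    def write(self, checksum: str, dedup_enabled: bool) -> None:
        if not dedup_enabled:
            return  # new blocks bypass the table entirely
        self.refcounts[checksum] = self.refcounts.get(checksum, 0) + 1
        self.table_updates += 1

    def free(self, checksum: str, written_with_dedup: bool) -> None:
        if not written_with_dedup:
            return  # ordinary blocks are freed without touching the table
        # Blocks written while dedup was on still go through the table,
        # even after dedup has been switched off.
        self.refcounts[checksum] -= 1
        self.table_updates += 1
        if self.refcounts[checksum] == 0:
            del self.refcounts[checksum]


ddt = ToyDDT()
for c in ["a", "b", "a"]:
    ddt.write(c, dedup_enabled=True)
ddt.write("c", dedup_enabled=False)      # dedup now off: new writes skip the table...
ddt.free("a", written_with_dedup=True)   # ...but freeing old blocks still updates it
ddt.free("b", written_with_dedup=True)
print(ddt.refcounts, ddt.table_updates)  # {'a': 1} 5
```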

Can you provide the make and model of your existing SSDs? If your 400G SAS drives make viable log devices, we can do a “shuffle”:

1. Remove your cache SSDs.
2. Re-use the log SSD as a mirror for your metadata SSD.
3. Replace the dedup HDDs with a pair of SSDs at least as large as the 1.8T HDDs (1.92T is the most likely size; target a “mixed use” performance drive).
4. Finally, put a 400G drive back into service as a log.
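The shuffle can be written out as an ordered list of `zpool` commands. This sketch only builds and prints the commands, it runs nothing; the pool and device names are hypothetical placeholders, and it assumes the dedup HDDs form a single two-way mirror. The `zpool replace` steps should be run one at a time, letting each resilver finish (check `zpool status`) before starting the next.

```python
def shuffle_plan(pool: str, cache: list[str], log: str, metadata: str,
                 dedup_hdds: list[str], new_ssds: list[str]) -> list[str]:
    """Return the shuffle steps as zpool command strings, in order (hypothetical names)."""
    plan = [f"zpool remove {pool} {' '.join(cache)}"]    # 1. drop the cache SSDs
    plan.append(f"zpool remove {pool} {log}")             # 2a. free up the log SSD
    plan.append(f"zpool attach {pool} {metadata} {log}")  # 2b. mirror the metadata SSD with it
    for old, new in zip(dedup_hdds, new_ssds):            # 3. swap dedup HDDs for SSDs,
        plan.append(f"zpool replace {pool} {old} {new}")  #    one at a time, resilvering in between
    plan.append(f"zpool add {pool} log {cache[0]}")       # 4. a 400G drive returns as the log
    return plan


plan = shuffle_plan(
    "tank",
    cache=["cache-ssd-1", "cache-ssd-2"],
    log="log-ssd",
    metadata="meta-ssd",
    dedup_hdds=["dedup-hdd-1", "dedup-hdd-2"],
    new_ssds=["new-ssd-1", "new-ssd-2"],
)
for cmd in plan:
    print(cmd)
```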

But realistically, the single 19-wide RAIDZ3 isn’t doing you any favors on performance either. I’d prefer to see that as a 2x9wZ2 (two 9-wide RAIDZ2 vdevs) at most, backed by a mirrored log device, a three-way mirror for metadata, and optionally the single cache drive. And no dedup at all. However, that requires a full backup, pool destroy, and recreation.
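A rough comparison of the two layouts, using the common rule of thumb that a RAIDZ vdev delivers about one member disk’s worth of small random IOPS, so random I/O scales with the number of vdevs rather than the number of disks. The `RaidzLayout` class is a hypothetical helper, and capacity here ignores RAIDZ padding and allocation overhead:

```python
from dataclasses import dataclass


@dataclass
class RaidzLayout:
    vdevs: int   # number of top-level raidz vdevs
    width: int   # disks per vdev
    parity: int  # parity disks per vdev (1-3)

    @property
    def disks(self) -> int:
        return self.vdevs * self.width

    @property
    def data_disks(self) -> int:
        # Ignores raidz padding/allocation overhead, so this is an upper bound.
        return self.vdevs * (self.width - self.parity)


current = RaidzLayout(vdevs=1, width=19, parity=3)
proposed = RaidzLayout(vdevs=2, width=9, parity=2)

for name, layout in [("1x19wZ3", current), ("2x9wZ2", proposed)]:
    # Rule of thumb: random IOPS scale roughly with layout.vdevs.
    print(f"{name}: {layout.disks} disks, ~{layout.data_disks} disks of capacity, "
          f"{layout.vdevs} vdev(s) sharing random I/O")
```

The proposed layout gives up about two disks of capacity in exchange for roughly doubling random I/O capability, while each vdev can still survive two disk failures.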
