This was reproducible from two different VMs and Docker containers:

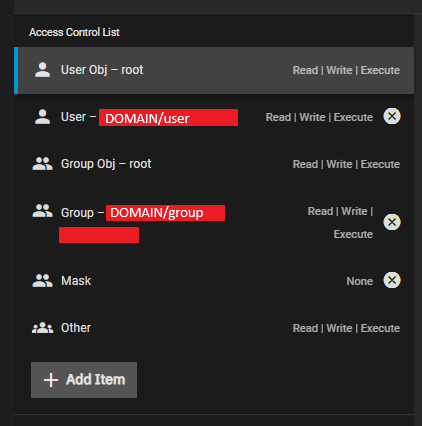

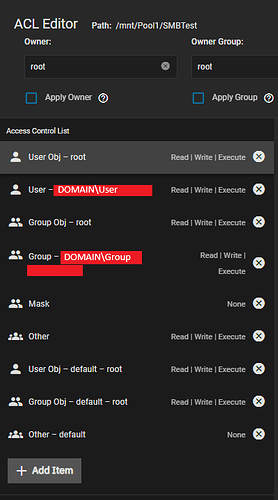

I am running Cobia 23.10.2. I have an Ubuntu 22.04.4 host that mounts the SMB share using cifs-utils. The share is mounted with AD credentials and a uid/gid mask for local control of the shared folder.

The two containers using this share were unable to view the disk size, and attempting to move files onto the drive caused a kernel panic. They appeared able to create and read files and could fully traverse the directory tree, but could not move or copy files into the share.
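For anyone trying to reproduce the disk-size symptom from inside a container, here is a minimal sketch. It is not something from my actual setup: the function name `probe_share` and the example mount path are hypothetical. It is deliberately read-only (it lists the directory and asks for disk usage) so that it does not trigger the write path that caused the panic.

```python
import os
import shutil


def probe_share(path):
    """Run read-only checks against a mount point and report what they see."""
    result = {
        "path": path,
        "listable": False,
        "total_bytes": None,
        "free_bytes": None,
        "error": None,
    }
    try:
        # Directory traversal worked in my case, so this should succeed.
        os.listdir(path)
        result["listable"] = True
        # Querying the filesystem size is the step that failed for me.
        usage = shutil.disk_usage(path)
        result["total_bytes"] = usage.total
        result["free_bytes"] = usage.free
    except OSError as exc:
        # Record the error instead of raising so the caller can log it.
        result["error"] = f"{type(exc).__name__}: {exc}"
    return result


# Example (hypothetical mount path): print(probe_share("/mnt/share"))
```

If `listable` is true but the size fields stay empty and `error` is set, that would match what the containers were showing.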

The samba4 logs showed multiple instances of this error (directory/filename.ext is a placeholder):

[2024/04/09 16:05:08.774429, 1] ../../source3/smbd/close.c:872(close_normal_file) Failed to disconnect durable handle for file directory/filename.ext: NT_STATUS_NOT_SUPPORTED - proceeding with normal close
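To check how often this shows up, a rough sketch like the following could count `NT_STATUS_*` codes per smbd function. It assumes each entry is on a single line in the form pasted above; real Samba logs may split the header and the message across two lines, in which case they would need to be joined first. The names `LOG_RE`, `STATUS_RE`, and `summarize` are made up for illustration.

```python
import re
from collections import Counter

# Matches the single-line form shown above:
# "[timestamp, level] source_file:line(function) message"
LOG_RE = re.compile(
    r"\[(?P<ts>[\d/]+ [\d:.]+),\s*(?P<level>\d+)\]\s+"
    r"(?P<src>\S+)\((?P<func>\w+)\)\s+(?P<msg>.*)"
)
STATUS_RE = re.compile(r"NT_STATUS_\w+")


def summarize(lines):
    """Count (function, NT_STATUS code) pairs across log lines."""
    counts = Counter()
    for line in lines:
        match = LOG_RE.match(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        for status in STATUS_RE.findall(match.group("msg")):
            counts[(match.group("func"), status)] += 1
    return counts


sample = (
    "[2024/04/09 16:05:08.774429, 1] "
    "../../source3/smbd/close.c:872(close_normal_file) "
    "Failed to disconnect durable handle for file directory/filename.ext: "
    "NT_STATUS_NOT_SUPPORTED - proceeding with normal close"
)

for (func, status), n in summarize([sample]).items():
    print(f"{func}: {status} x{n}")
```

Feeding it the lines of the smbd log file instead of `sample` would give a per-function tally of the status codes.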

I rolled back the containers and the TrueNAS version, and tried recreating the share, modifying the ACL, etc. No setting seemed to affect the behavior of the shares. As far as I can tell, this began after the upgrade from 23.10.1.3 to 23.10.2.

I have since switched the share from SMB to NFS, and everything is functioning correctly. This post is mostly informational, and I am willing to reproduce the issue as necessary.

My biggest concern is that the failure appears to be invisible: I can’t tell what is actually going wrong. I have been using the same setup through multiple Ubuntu, Docker, and TrueNAS version upgrades.

Those two errors were the only useful things I could find in any logs while I was troubleshooting to get everything back up and running.