I am using an 8-disk RAIDZ2 setup with 128GB of RAM. When I first installed TrueNAS Scale 24.04, using the default settings without any changes, I could achieve 10Gbps read and write speeds. However, after reinstalling with the same configuration, the write speed still reaches 10Gbps, but the read speed only reaches 5Gbps. I’m not sure what the issue is.

Were your drives empty when you first benched? Are they empty now?

You have provided less than the minimum amount of information here.

What drives (make/model) do you have?

What hardware do you have? NIC?

Are you running 24.04 or 24.04.1.1 ?

What type/size of files are you transferring? Lots of small random files?

What is the capacity of your pool? Are you at 95% full?

These are things we need to know. Provide some better details and I’m sure you will get an answer.

Edit: How are you benchmarking? Be specific as it matters. And is the dataset compression OFF? That matters too.

I’m not sure whether it’s related to disk space. After my first installation, the RAIDZ2 pool had 87TB of space, and even after writing several terabytes of data, it maintained 10Gbps speeds. After the second installation, the write speed is still 10Gbps, but the read speed is only 5Gbps. I am a bit unsure about the differences between the two installations. I think I didn’t enable swap the first time. Also, the network card settings in the system seem to be different between the two installations, but I am not sure.

What drives (make/model) do you have?

A: 8 × Seagate X18 16TB

What hardware do you have? NIC?

A: AQC107

Are you running 24.04 or 24.04.1.1 ?

A: 24.04.1.1

What type/size of files are you transferring? Lots of small random files?

A: Some video files, each approximately 20GB.

What is the capacity of your pool? Are you at 95% full?

A: Yes, the disk usage is approximately 97%. Most of the capacity is allocated to the Synology virtual machine. The setup was the same for both installations.

My test method is measuring the speed of copying and pasting large files.

Compression is OFF.

If your pool is 80%+ full, ZFS performance will suffer significantly because the file system has little free space left to write to. At 95%, you should be seeing critical warnings in the GUI dashboard. That doesn’t explain the read issue, however, unless it’s the destination disk that’s that full.

Another poster here traced his read issues to drivers on the receiving end, i.e., the Windows machine had performance issues while the same hardware worked great under Linux.

I’d test with iperf or a similar tool to determine whether the network or your NAS is causing the issue.
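A minimal iperf3 sketch, assuming iperf3 is installed on both ends and the NAS is already running the server side with `iperf3 -s`. The point that matters for this thread is direction: by default the client sends, which matches a write to the NAS, while `-R` makes the server send, which matches a read from it. The function name, the 4 parallel streams and the 30-second duration are illustrative choices, not anything prescribed; on a Windows client you would simply run the two `iperf3 -c` lines by hand.

```sh
# Hypothetical helper: nas_iperf_test NAS_IP [STREAMS]
# Assumes the NAS is already running the server side: iperf3 -s
nas_iperf_test() {
    nas_ip=$1
    streams=${2:-4}
    iperf_bin=${IPERF:-iperf3}   # override with IPERF=/path/to/iperf3 if needed

    # Client sends to the NAS: same direction as a write to the NAS.
    "$iperf_bin" -c "$nas_ip" -P "$streams" -t 30

    # -R: the NAS (server) sends to the client, same direction as a read.
    "$iperf_bin" -c "$nas_ip" -P "$streams" -t 30 -R
}
```

If the `-R` run also tops out around 5Gbps while the normal run reaches 10Gbps, the problem is in the network path or NIC driver rather than the pool.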

Read speed issues could be due to extensive file fragmentation (lots of small pieces rather than fewer large pieces).

Good write speeds are likely due to the large amount of RAM you have: you can send data to the machine, and it will cache it until it can write it to the pool.
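To see how much write data ZFS will actually hold in RAM, you can read the OpenZFS `zfs_dirty_data_max` module parameter (a value in bytes). This is a sketch assuming TrueNAS SCALE, where OpenZFS exposes module parameters under `/sys/module/zfs/parameters/`; the function name is made up. Once that much dirty (not yet written) data is buffered, new writes have to wait for the pool, so a copy much larger than this value is ultimately limited by pool speed.

```sh
# Hypothetical helper: show_dirty_data_max [PARAM_FILE]
# Default path is the OpenZFS module parameter on Linux (TrueNAS SCALE);
# pass another file (e.g. a saved copy) as $1 to read that instead.
show_dirty_data_max() {
    awk '{ printf "zfs_dirty_data_max = %.1f GiB\n", $1 / 1073741824 }' \
        "${1:-/sys/module/zfs/parameters/zfs_dirty_data_max}"
}
```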

As I understand it, once your pool gets too full (I’ve recently heard both 90% and 95%, but I don’t know for certain), ZFS just starts placing data wherever it can find a location rather than contiguously on a drive (or at least in large contiguous portions of the file; it doesn’t need to be the entire file). I’m just offering that up as a reason the files could be fragmented.
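To check the fill level discussed above, `zpool list` can report each pool's capacity and fragmentation. The sketch below (function name hypothetical) flags pools against the 80% and 95% rules of thumb from this thread; those thresholds are not hard ZFS limits. Note that zpool's fragmentation property measures fragmentation of the free space, not of individual files, so it is only an indirect hint about the file fragmentation described above.

```sh
# Hypothetical helper: reads the tab-separated, header-less output of
#   zpool list -H -o name,capacity,fragmentation
# and labels each pool ok / WARNING (>= 80%) / CRITICAL (>= 95%).
check_pool_fill() {
    awk -F '\t' '{
        cap = $2; sub(/%/, "", cap)   # "97%" -> "97"
        status = "ok"
        if (cap + 0 >= 95) status = "CRITICAL"
        else if (cap + 0 >= 80) status = "WARNING"
        printf "%s: %s%% full, free-space fragmentation %s -> %s\n", $1, cap, $3, status
    }'
}
```

Usage on the NAS: `zpool list -H -o name,capacity,fragmentation | check_pool_fill`.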

Let’s go down the software path:

1. The “second installation”: why did you install a second time? Did something happen, and if so, what?

2. Did you install the exact same version of TrueNAS? If not, you could very well have a different driver for your NIC. If that turns out to be the cause, submit a bug report.

3. Did you restore the configuration file from the first installation to the second installation?

4. Regarding this statement:

[quote=“iamjimmycheng, post:5, topic:7658”]

Most of the capacity is allocated to the Synology virtual machine.

[/quote]

I am unclear what this statement means, and I don’t want to assume that I know. I’m just trying to get the overall picture of what might be happening.

4a) Do you have a virtual machine that is running Synology software?

4b) Do you have a quota set for this virtual machine?

4c) Is this virtual machine running on TrueNAS or some other hypervisor?

I’d use iperf to test network throughput, because timing a copy of a large file is not a very accurate measurement.

You can also do some internal testing to verify the pool isn’t the issue. Run some dd tests. This is actually where I would start. If you have slow results here, your network is likely not the issue.

- Create a dataset which has compression turned off. This is important because compression will give you a false reading.

- Open up a shell window.

- Type `dd if=/dev/random of=/mnt/pool/dataset/test.dat bs=2048k count=100000`

- Note the results.

- Type `dd of=/dev/null if=/mnt/pool/dataset/test.dat bs=2048k count=100000`

- Note the results.

- Lastly, clean up by running `rm /mnt/pool/dataset/test.dat` to delete the file you just created.
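The write/read/cleanup steps above can be wrapped in a single function. This is a sketch assuming GNU dd (as on TrueNAS SCALE); the function name is hypothetical, DIR is your own dataset path with compression off, and COUNT defaults to the 100000 × 2MiB blocks used above. It reads from `/dev/urandom`, which does not block on Linux; with compression off, `/dev/zero` would also be a valid source if random-number generation turns out to be the bottleneck.

```sh
# Hypothetical helper: pool_dd_test DIR [COUNT]
# DIR   = a dataset mount point with compression OFF (e.g. /mnt/pool/dataset)
# COUNT = number of 2 MiB blocks (default 100000, about 210 GB)
pool_dd_test() {
    test_dir=$1
    block_count=${2:-100000}
    test_file="$test_dir/test.dat"

    echo "== write =="
    dd if=/dev/urandom of="$test_file" bs=2048k count="$block_count" \
        || { rm -f "$test_file"; return 1; }

    echo "== read =="
    dd if="$test_file" of=/dev/null bs=2048k \
        || { rm -f "$test_file"; return 1; }

    # Clean up the test file.
    rm -f "$test_file"
}
```

Usage: `pool_dd_test /mnt/pool/dataset`. dd prints its timing lines to stderr, so they appear in the terminal between the two headings.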

This will take a while to run, so be patient. I mean it will take a lot of time (my pool took 16.3 minutes to create this file), but I have a much older system with only a 4-drive RAIDZ2.

Writing:

```
root@freenas:~ # dd if=/dev/random of=/mnt/farm2/test2/test.dat bs=2048k count=100000
100000+0 records in
100000+0 records out
209715200000 bytes transferred in 977.737918 secs (214490198 bytes/sec)
```

Summary: a ~210GB file (100,000 blocks × 2MiB) in 16.3 minutes with a transfer rate of ~214MB/sec. Not bad for only 16GB of RAM.

Then look at the GUI → Reporting → Memory → Physical Memory Utilization. The goal here is to have zero free memory: “Wired” should be maxed out. Please note that Wired will take time to return to normal; that is just the way it works, and it will not impact operations or performance.
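On SCALE you can also read the ZFS cache (ARC) statistics directly from `/proc/spl/kstat/zfs/arcstats`. The sketch below (function name hypothetical) pulls out the current ARC size, its maximum (`c_max`) and the overall hit ratio; OpenZFS also ships a much fuller `arc_summary` report. A high hit ratio during a read test suggests the data came from RAM rather than from the disks.

```sh
# Hypothetical helper: arc_quick_look [ARCSTATS_FILE]
# Parses the "name type data" rows of the Linux OpenZFS arcstats file.
arc_quick_look() {
    awk '
        $1 == "size"   { size = $3 }
        $1 == "c_max"  { cmax = $3 }
        $1 == "hits"   { hits = $3 }
        $1 == "misses" { misses = $3 }
        END {
            gib = 1073741824
            total = hits + misses
            ratio = total > 0 ? 100 * hits / total : 0
            printf "ARC size %.1f GiB (max %.1f GiB), hit ratio %.1f%%\n", size / gib, cmax / gib, ratio
        }' "${1:-/proc/spl/kstat/zfs/arcstats}"
}
```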

Reading:

```
root@freenas:~ # dd of=/dev/null if=/mnt/farm2/test2/test.dat bs=2048k count=100000
100000+0 records in
100000+0 records out
209715200000 bytes transferred in 942.650516 secs (222473967 bytes/sec)
```

And for reading, you can see the same ~210GB file took 15.7 minutes, with a transfer rate of ~222MB/sec.
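dd reports raw bytes/sec, while the original question is framed in Gbps, so a quick conversion makes the numbers comparable. A sketch using decimal units (1 MB = 10^6 bytes, 1 Gbit = 10^9 bits); the function name is made up. For reference, 10Gbps is 1250MB/s and 5Gbps is 625MB/s.

```sh
# Hypothetical helper: dd_rate BYTES_PER_SEC
# Prints the rate in MB/s and Gbit/s (decimal units).
dd_rate() {
    awk -v b="$1" 'BEGIN { printf "%.1f MB/s, %.2f Gbit/s\n", b / 1e6, b * 8 / 1e9 }'
}
```

For example, `dd_rate 222473967` (the read result above) prints `222.5 MB/s, 1.78 Gbit/s`.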

If you want to try something a little different, you can check how fast your own test file (whatever large file you are currently reading to measure read speed) reads, using this command:

```
dd of=/dev/null if=/mnt/pool/dataset/filename.mp4
```

And here are my results for a ~6.8GB file (not very large at all, and it fits in RAM):

```
root@freenas:/mnt/farm2/movies/Movies # dd of=/dev/null if=/mnt/farm2/movies/Movies/The_Avengers.mkv
13232460+1 records in
13232460+1 records out
6775019998 bytes transferred in 20.488518 secs (330673987 bytes/sec)
```
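The single-file command above uses dd's default 512-byte block size, which is why the output shows 13232460 records for a ~6.8GB file; that many tiny reads adds overhead. Below is a sketch of the same read with 1MiB blocks, assuming GNU dd (the function name is hypothetical). A file smaller than RAM may still be served from the ARC on a repeat run, so the most meaningful figure comes from a file larger than RAM.

```sh
# Hypothetical helper: read_file_speed FILE
read_file_speed() {
    if [ ! -f "$1" ]; then
        echo "read_file_speed: no such file: $1" >&2
        return 1
    fi
    # 1 MiB blocks instead of dd's default 512 bytes; dd prints its stats to stderr.
    dd if="$1" of=/dev/null bs=1M
}
```

Usage: `read_file_speed /mnt/pool/dataset/filename.mp4`.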

So here are a few things you can do to try and isolate the issue(s).

Thank you for such a detailed response, but I have some points of confusion.

1. I think this 5Gbps read speed might be the actual speed of the RAID array without the benefit of memory caching. Is this normal?

2. If that is correct, why did I get 10Gbps read speed during the first installation? All my hardware, software, and versions are the same.

3. Regarding the array’s capacity being over 95%: my first installation was exactly the same, and I also tested when the capacity reached 97%. Besides the copy-and-paste test on Windows, I also ran a Docker speedtest inside the virtual machine, and the results matched the Windows tests.

4. The reason for the reinstallation was that after the first installation, I was not familiar with the system and made some incorrect settings, which caused the virtual machine to crash. That’s why I had to reinstall.

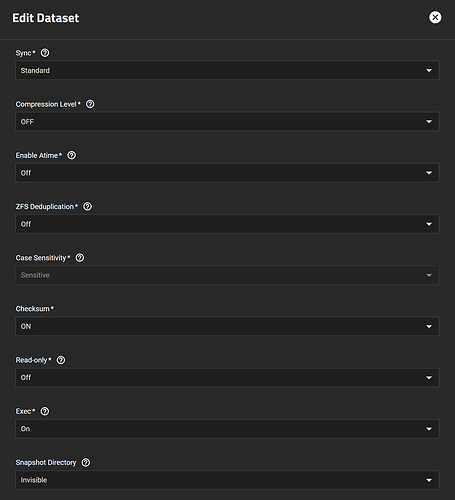

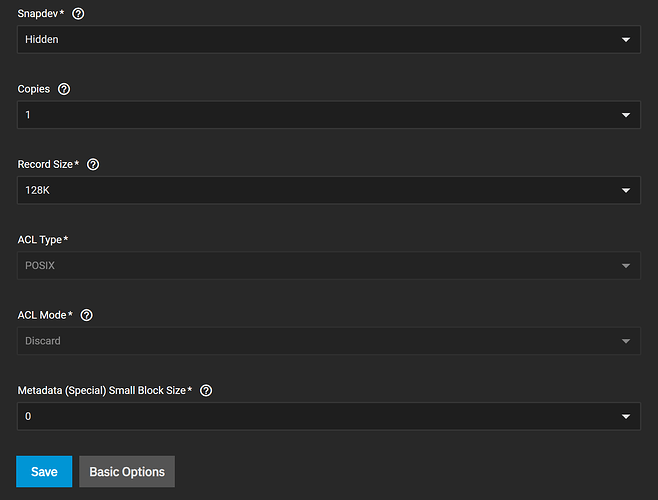

5. Here are the parameters of my datasets. Could you please check whether they are correct?

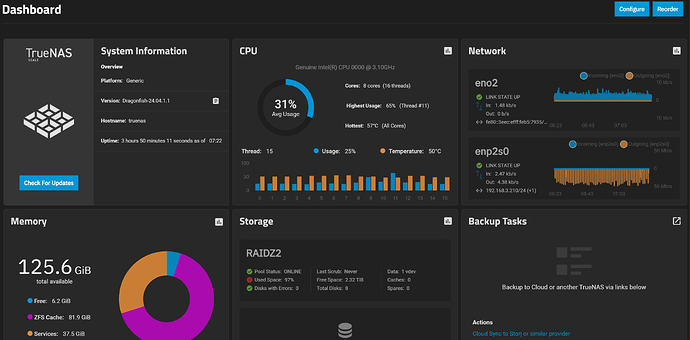

This is my dashboard. I am not sure if there are any issues. I think the IP addresses in the network section are different from what was shown during my first installation, but I can’t remember what the original ones were.

My crystal ball is still broken, sorry. But it could have been because whatever you were reading was already in RAM (in the ZFS ARC). You need to test with files that will not fit in RAM to ensure RAM isn’t caching the data.
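To make sure a dd test file cannot fit in RAM, you can size the `count` from the installed memory. This sketch (hypothetical function name) targets a file about twice the size of RAM, which is my own safety margin rather than a fixed rule, counted in the same 2MiB blocks as `bs=2048k` in the dd tests; it reads MemTotal from `/proc/meminfo` on Linux, or takes the value in kB as an argument.

```sh
# Hypothetical helper: dd_count_for_ram [MEM_KB]
# Prints a dd count (in 2 MiB blocks) for a file about 2x the size of RAM.
dd_count_for_ram() {
    mem_kb=${1:-$(awk '$1 == "MemTotal:" { print $2 }' /proc/meminfo)}
    # 2 x RAM in kB, divided by 2048 kB per block.
    echo $(( mem_kb * 2 / 2048 ))
}
```

For 128GB of RAM (134217728 kB), `dd_count_for_ram 134217728` prints 131072, i.e. a 256GiB test file.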

My best guess, given the data at hand, is that the pool is the reason for the slowdown; however, the iperf tests that @Constantin recommended will validate the network speed, and the tests I suggested will validate the pool speed.

You have some testing to do, anything else is guessing.

Good luck.

Thank you all for your suggestions. I will perform the tests later.